
She's not saying anything I haven't said 100 times already in this thread, though I think "wrong" needs to be qualified and is not so black and white in the case of Newton's model.

originally posted by: Hyperboles

a reply to: Arbitrageur

How come Prof. Ghez from UCLA is talking about Newton being wrong recently?

First, my favorite quote from George Box: "All models are wrong. Some are useful."

I really believe that's true, though again with the caveat that "wrong" is a fuzzy term. If it's useful, how wrong can it really be?

Second, some thoughts from Isaac Asimov on the fuzziness of the concept of "wrong":

chem.tufts.edu...

Maybe a better alternative to what Ghez says about Newton's model being wrong would be to call it "incomplete". Einstein was well aware of centuries of observations consistent with Newton's model, so he took care to explain that his then-new theory of relativity simplified to Newton's model in the limited case (the limitation being velocities much lower than the speed of light and relatively weak gravitational fields).

The basic trouble, you see, is that people think that "right" and "wrong" are absolute; that everything that isn't perfectly and completely right is totally and equally wrong.

However, I don't think that's so. It seems to me that right and wrong are fuzzy concepts, and I will devote this essay to an explanation of why I think so...

Since the refinements in theory grow smaller and smaller, even quite ancient theories must have been sufficiently right to allow advances to be made; advances that were not wiped out by subsequent refinements...

Naturally, the theories we now have might be considered wrong..., but in a much truer and subtler sense, they need only be considered incomplete.

Because relativity does simplify to approximately Newton's model in such limited cases, we do indeed still use Newton's model: it's useful, giving us answers that are close enough for practical purposes wherever the limited case applies.

Take the example of adding two velocities of 2 miles per hour.

Newton says 2 mph + 2 mph = 4 mph

Einstein says 2 mph + 2 mph = 3.999999999999999964 mph

Can we even measure the difference? So was Newton really "wrong" about that, or close enough, in that case? These are subtle questions, and maybe not so black-and-white, as Asimov suggests.
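To put numbers on that comparison, here is a minimal Python sketch of the two addition rules. It assumes the standard special-relativity formula for velocities along the same line, w = (u + v) / (1 + uv/c²). The function names and the 40-digit precision setting are just illustrative choices; ordinary floating point can't resolve a difference this small and would round the Einstein result straight back to 4.

```python
from decimal import Decimal, getcontext

# 40 significant digits; a 64-bit float cannot resolve a difference this small.
getcontext().prec = 40

# Speed of light in mph: 299,792,458 m/s * 3600 s/h / 1609.344 m per mile.
C_MPH = Decimal(299792458) * 3600 / Decimal("1609.344")


def add_newton(u, v):
    """Galilean (Newtonian) addition of two collinear velocities."""
    return u + v


def add_einstein(u, v, c=C_MPH):
    """Special-relativistic addition of two collinear velocities."""
    return (u + v) / (1 + u * v / (c * c))


u = v = Decimal(2)  # both velocities in mph
newton = add_newton(u, v)
einstein = add_einstein(u, v)

print("Newton:    ", newton)
print("Einstein:  ", einstein)
print("Difference:", newton - einstein)
```

The difference comes out at a few parts in 10^17 of a mile per hour, in line with the figure quoted above.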

When we get past the "limited case", where speeds are no longer much lower than the speed of light and so on, it's less fuzzy: it's clear that Newton's model doesn't work well enough for, say, GPS. Situations like that are where we need to go past the limited case covered by Newton's model and use general relativity.

Ghez says the same thing that I do about relativity having issues too; she, like many physicists, is not satisfied with the description relativity gives us of a black hole. The fact that it results in a singularity is not particularly useful. So some might say all models are wrong, some are useful... Newtonian mechanics is useful in non-relativistic conditions, and relativity is useful in a much wider range of conditions, but perhaps not so useful for describing the center of a black hole, where "singularity" really means we don't understand what happens there.

So if you're not overly concerned about the difference between 4 mph and 3.999999999999999964 mph, Newton's model is still useful in those velocity ranges if it's only off by a perhaps immeasurably small amount.

Here's an interesting article on Physicsforums about the "classical physics is wrong" fallacy:

One of the common questions or comments we get on PF is the claim that classical physics or classical mechanics (i.e. Newton’s laws, etc.) is wrong because it has been superseded by Special Relativity (SR) and General Relativity (GR), and/or Quantum Mechanics (QM). Such claims are typically made by either a student who barely learned anything about physics, or by someone who have not had a formal education in physics. There is somehow a notion that SR, GR, and QM have shown that classical physics is wrong, and so, it shouldn’t be used.

There is a need to debunk that idea, and it needs to be done in the clearest possible manner. This is because the misunderstanding that results in such an erroneous conclusion is not just simply due to lack of knowledge of physics, but rather due to something more inherent in the difference between science and our everyday world/practices. It is rooted in how people accept certain things and not being able to see how certain idea can merge into something else under different circumstances.

Before we deal with specific examples, let’s get one FACT straighten out:

Classical physics is used in an overwhelming majority of situations in our lives. Your houses, buildings, bridges, airplanes, and physical structures were built using the classical laws. The heat engines, motors, etc. were designed based on classical thermodynamics laws. And your radio reception, antennae, TV transmitters, wi-fi signals, etc. are all based on classical electromagnetic description.

These are all FACTS, not a matter of opinion. You are welcome to check for yourself and see how many of these were done using SR, GR, or QM. Most, if not all, of these would endanger your life and the lives of your loved ones if they were not designed or described accurately. So how can one claim that classical physics is wrong, or incorrect, if they work, and work so well in such situations?

It's a great article which got me re-thinking the way I communicate ideas about classical models, and whether it's a fallacy to call them wrong or not. It depends on the application one wishes to model, I suppose. You can't use Newton's model to design GPS, but you can use it and other classical models successfully to design many other things in our daily lives, as the above article suggests.

edit on 2019729 by Arbitrageur because: clarification

I got another one.

Perfect photon beam aimed at the exact corner point between two super reflectors, at a 90 degree angle.

Where do the photons go? How? Why?

Is this like one of those repeating Gosper Glider Gun things or something? I feel like this would be a practical method to build one, in an empty universe where only these things existed.


a reply to: Archivalist


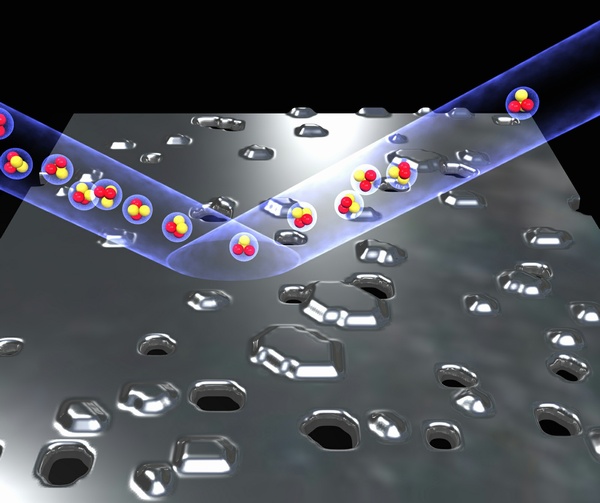


That sort of reminds me of the "what happens when an unstoppable force encounters an immovable structure?" question.

It's not a real world question or problem because we can't define anything which has either of those properties, so the question is unanswerable.

Likewise I don't know exactly what you mean by "Perfect photon beam" but I suspect that's as unlikely to exist in the real world as an unstoppable force or an immovable structure. As far as I know, photons can't be localized to an unlimited extent which is how I'm interpreting your "Perfect photon beam".

In the real world, the photon beams are "not perfect" meaning not localized to an unlimited extent, so if I understand the geometry you propose, they wouldn't hit the exact corner and so would tend to reflect back toward the source.

Also consider the microscopic structure of the mirrors composed of atoms, and the nature of the junction of the reflectors at the 90 degree "sharp corner" which probably wouldn't be as sharp as you think if you looked at a real world magnification of a real corner. This is supposed to illustrate what is claimed to be the smoothest mirror ever and it has holes and bumps though greatly exaggerated and not shown actual size of course:

Quantum Stabilized Atom Mirror - The Smoothest Surface Ever Created

Quantum stabilised atom mirror which, despite small holes and “islands”, mostly has a smooth surface...

Imagine how the 90 degree corner will look at that scale. Is it two different surfaces placed closely together, which might leave a tiny gap or discontinuity at the corner? Or, if a mirror coating is added after making the corner, can you really deposit the mirror coating without making a small radius at the corner? I've never tried that so I'm not really sure about the technology limits of that. But the photon position not being localized to an unlimited extent may be a fundamental uncertainty property, and not just a technology limitation.

edit on 2019729 by Arbitrageur because: clarification

"fundamental uncertainty property, and not just a technology limitation. "

I don't believe that any uncertainty supersedes technological limitation, infinitely.

My religion is one of universal advancement.

Infinity is a concept that does not actually exist in this reality.

There is no uncertainty that supersedes all reference frames indefinitely.

So, I assume with my question, one day, we have the ability to manufacture perfect super reflectors, place them together, with gaps of prediction, smaller than the typical photon "uncertainty" jiggle.

We will probably develop a means of photon transmission, such that it can not be distinguished from a true perfect beam.

I am making my conjecture based on technological advancements, as observed, relative to mass technical understanding, of the sentient populace. I believe my question is a reasonable question, as I have not placed any frame of constraint involving time on it.

One is free to imagine methods by which to create my idea, two dimensionally, if necessary.

Hence, my reference to the Gosper Glider. (Look into Optimum Theory for more on that.)

If nothing else, one could, in theory, construct my idea with modern equipment, then selectively ignore instances of "uncertain" photons outside of the constraints of the test, as well as ignore instances where flaws in the reflectors were the points of interaction.

Thus, you could run this experiment for years, for mere seconds of data, if that is necessary.

If no one is willing to tackle these questions, or determine experimental methods to test "questions of uncertainty" how do we know those are really barriers of physics?

I'm mostly certain the Earth is not flat. I have not personally attempted to sail over the edge or go to space.

However, SOMEONE HAD TO. This thread is just for discussion, not necessarily action. (Who expects action from a thread on the internet?)

I believe my question has value, in existing here. Beyond "This is an exercise of displaying uncertainty and how it is a boundary we will never cross." I believe that exact statement would be equine excrement, with all due respect, sir.


edit on 29-7-2019 by Archivalist because: optimum theory reference added, seems relevant. They demonstrate an approximation of unified field theory in 2 dimensional simulations.

a reply to: Archivalist


You're entitled to your opinion, of course, as am I.

As I've said before, the models we use are only the best models we've found today, and they could be tweaked as we learn more, so I never claim our current models are any kind of ultimate truth. However, there's a lot of experimental foundation for the models, which seems to suggest there may be some fundamental issues with what you suggest. If you can figure out how to make a "perfect photon beam" then maybe the models are wrong, which is possible, but please let us know as I'd like to see it.

Here's an article that discusses some of the known problems with trying to make a photon beam more and more narrow, and maybe you're clever enough to figure out ways around this, but I admit that I don't know how to do it so these limitations seem real to me.

How do you make a one-photon-thick beam of light?

There is no such thing as a one-photon-thick beam of light. Photons are not solid little balls that can be lined up in a perfectly straight beam that is one photon wide. Instead, photons are quantum objects. As such, photons act somewhat like waves and somewhat like particles at the same time. When traveling through free space, photons act mostly like waves. Waves can take on a variety of beam widths. But they cannot be infinitely narrow since waves are, by definition, extended objects. The more you try to narrow down the beam width of a wave, the more it will tend to spread out as it travels due to diffraction. This is true of water waves, sound waves, and light waves. The degree to which a light beam diffracts and diverges depends on the wavelength of the light. Light beams with larger wavelengths diverge more strongly than light beams with smaller wavelengths, all else being equal. As a result, smaller-wavelength beams can be made much narrower than larger-wavelength beams. The narrowness of a light beam therefore is ultimately limited by wave diffraction, which depends on wavelength, and not by a physical width of photon particles. The way to get the narrowest beam of light possible is by using the smallest wavelength available to you and focusing the beam, and not by lining up photons (which doesn't really make sense in the first place).

Furthermore, photons are bosons, meaning that many photons can overlap in the exact same quantum state. Millions of photons can all exist at the same location in space, going the same direction, with the same polarization, the same frequency, etc. In this additional way, the notion of a "one-photon-thick" beam of light does not really make any sense. Coherent beams such as laser beams and radar beams are composed of many photons all in the same state. The number of photons in a light beam is more an indication of the beam's brightness than of the beam's width. It does make sense to talk about a beam with a brightness of 1 photon per second. This statement means that a sensor receives one photon of energy from the light beam every second (which is a very faint beam of light, but is encountered in astronomy). Furthermore, we could construct a light source that only emits one photon of light every second. But we discover that as soon as the photon travels out into free space, the single photon spreads out into a wave that has a non-zero width and acts just like a coherent beam containing trillions of photons. Therefore, even if a beam has a brightness of only one photon per second, it still travels and spreads out through space like any other light beam.

So the wave-like properties of photons are one of the limitations in narrowing the beam to an unlimited extent, but I think it's also those wave-like properties that will create the result I suggested, with the light being reflected back toward the source. That assumes you're using visible light photons and that I understand your proposed geometry, which I'm not sure I do since you didn't provide any diagram.

As the article says, limitations in beam width depend on frequency, where higher frequencies can be made more narrow but once you go beyond visible light, the photons can interact differently with materials than visible light does. We call the photons with the highest frequencies gamma rays, but gamma rays are not reflected by mirrors. If you use gamma rays, it doesn't really matter if there's a gap at the 90 degree corner or not, because the gamma rays "see" gaps everywhere in the molecular structure of the mirror, which is why they aren't reflected. So, the narrowest beam of photons you could hypothetically make with gamma rays won't reflect from a mirror.

edit on 2019730 by Arbitrageur because: clarification

My previous link explained some limitations to making photon beams narrow, but it didn't mention uncertainty. There certainly have been experiments which seem to indicate that a certain amount of uncertainty is fundamental.

originally posted by: Archivalist

If no one is willing to tackle these questions, or determine experimental methods to test "questions of uncertainty" how do we know those are really barriers of physics?

Here's another explanation from the Univ. of Illinois department of physics that also explains why light beams spread out, which does mention the uncertainty. It also points out that perhaps somewhat counter-intuitively, a larger diameter beam will spread out less than a smaller diameter beam, though this isn't much help in trying to make the "perfect beam" suggested in your question.

Spatial and spectral spread of photons.

Light which is confined to a small aperture does indeed spread out in accordance with the uncertainty principle. This applies to laser beams as well. If you measure the laser beam at varying distances, you will find that its size continually increases at a hyperbolic rate. In fact, take your laser out to a field at night and look at it on a screen as far away as you can, and the beam will be huge!

If you tried to hit the moon with a laser, you'd want to use a BIG beam- 8 meters (24 feet) in radius. By the time it reached the moon, it would "only" be 16 meters across. That's the smallest spot you could make on the moon. In contrast, if you shone your handheld laser at the moon, it would expand to over 60,000 meters (~40 miles) wide!

An interesting fact to notice, however, is that the uncertainty principle only fixes the minimum amount a beam can spread out. Most beams spread out much faster than Heisenberg requires. A gaussian-shaped laser beam is one of the only types of light beams that actually spreads out by this minimum amount. That is why you don't see it spreading out unless you measure carefully, or just measure its size far away from the laser. (In addition, keep in mind that a typical laser beam is over 1000 times wider than the wavelength of light. This is reasonably large, so the beam doesn't diffract very rapidly. If your laser beam were much smaller, it would diverge faster.)

That's how nature seems to behave as far as we can tell from experiments already done. The narrower you try to make the beam, the more it spreads out.
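As a rough illustration of that trade-off, here is a small Python sketch using the textbook formula for an ideal Gaussian beam, w(z) = w0 * sqrt(1 + (z/z_R)^2) with z_R = pi * w0^2 / wavelength. The 532 nm wavelength, the 1 mm starting radius for the handheld laser and the Earth-Moon distance are my own assumed inputs, not values from the article, so expect ballpark agreement with its numbers rather than an exact match.

```python
import math


def beam_radius(w0, wavelength, z):
    """Radius (m) of an ideal Gaussian beam at distance z from its waist."""
    rayleigh_range = math.pi * w0**2 / wavelength
    return w0 * math.sqrt(1 + (z / rayleigh_range) ** 2)


MOON_DISTANCE = 3.84e8  # metres, approximate Earth-Moon distance (assumed)
WAVELENGTH = 532e-9     # metres, green laser (assumed, not from the article)

wide = beam_radius(8.0, WAVELENGTH, MOON_DISTANCE)     # 8 m starting radius
narrow = beam_radius(1e-3, WAVELENGTH, MOON_DISTANCE)  # 1 mm starting radius (assumed)

print(f"8 m beam at the Moon:  radius of roughly {wide:.0f} m")
print(f"1 mm beam at the Moon: radius of roughly {narrow / 1000:.0f} km")
```

The beam that starts out wide is still only metres across at the Moon, while the one that starts out narrow has grown to tens of kilometres.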

edit on 2019730 by Arbitrageur because: clarification

Could it be that the universe was born from the collision of 2 or more dimensions?

For example, if I bring 2 circles closer and closer they eventually overlap like a Venn diagram.

The space between is seemingly popped into existence from its perspective, like a singularity.


a reply to: MahatmaGanja


The physics we know works a certain time after the big bang to the present, but before that, the physics we know sort of breaks down, so modeling the earliest time after the big bang is speculative. Trying to figure out what happened before that to cause the big bang is even more speculative. There is no shortage of ideas about that, but I don't know how we will ever test any of them.

If we can ever figure out how to model the earliest parts of the big bang, it's possible that might give us some insights into how it may have started, or not, but until we can do that I think we're getting ahead of ourselves speculating on what caused the big bang when we still can't even model conditions immediately after the big bang.

I suppose it's fun to talk about because it's so far beyond our understanding that you can come up with virtually any guess, and how is anybody going to prove it's wrong? One possibility might be if a hypothesis proposed a trigger for the big bang that would leave an imprint of some sort on the Cosmic Microwave Background (CMB), in which case we could actually do some science to look for such an imprint. But unless there's something verifiable like that, isn't every guess just another "bedtime story" as Paul Sutter puts it?

What Triggered the Big Bang?

Earlier than 10^-36 seconds, we simply don't understand the nature of the universe. The Big Bang theory is fantastic at describing everything after that, but before it, we're a bit lost. Get this: At small enough scales, we don't even know if the word "before" even makes sense! At incredibly tiny scales (and I'm talking tinier than the tiniest thing you could possible imagine), the quantum nature of reality rears its ugly head at full strength, rendering our neat, orderly, friendly spacetime into a broken jungle gym of loops and tangles and rusty spikes. Notions of intervals in time or space don't really apply at those scales. Who knows what's going on?

There are, of course, some ideas out there — models that attempt to describe what "ignited" or "seeded" the Big Bang, but at this stage, they're pure speculation. If these ideas can provide observational clues — for example, a special imprint on the CMB, then hooray — we can do science!

If not, they're just bedtime stories.

a reply to: Arbitrageur

Quick question.

Is the corkscrew motion of the solar system true?

And if so what is the best description of 'orbit'?

If no planet ever goes in front of the sun's forward trajectory, aka in front of ol' Sol as it flies forward (or falls into another's gravity well) throughout the solar system, then what is a more correct definition of 'orbit'?


edit on 14-8-2019 by Skyfox81 because: (no reason given)

The model you are referring to from DjSadhu is quite inaccurate for a few reasons, mostly in what the author is trying to tell the viewer and in the slight inaccuracies it has.

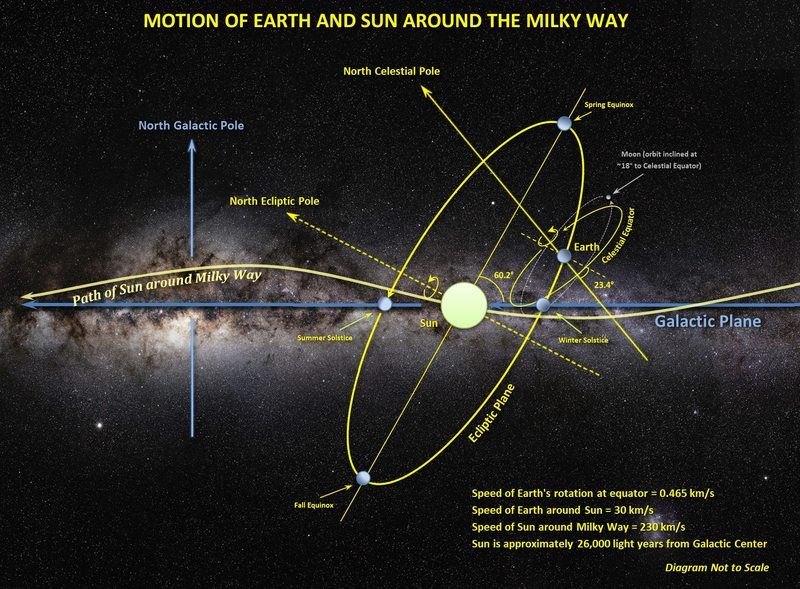

The planets are locked in an orbit with the Sun. In a frame of reference in which we track the sun, the planets orbit around the sun in a plane set at about 60 degrees to the plane of the Milky Way. If you take your reference as that of the galaxy, then the motion of the sun would make the planets trace a spiral-like path.

This is where the BUT comes in. The video and statements made by DjSadhu appear to claim what you say in your last statement, which is incorrect. The orbital inclination of the planets relative to the galactic plane is 60 degrees, and there is nothing wrong or disallowed about this; it is what we observe. That means that yes, the planets do lead and trail the sun depending on the time of year. DjSadhu gets his models horrendously wrong, especially in his second video, where he claims the solar system is moving edge on in line with the galactic disc and the only way it can be is if the planets are moving exactly perpendicular.

Also in his second video he claims the sun itself moves in a spiral path. This once more is absolutely incorrect. The sun's path around the galactic disk is quite circular as far as we can tell. We rise and dip in and out of the disk, and there might be some harmonics that make it appear not quite circular, but it certainly isn't this exaggerated corkscrew.

The other issue is language. He never really refers to it as a corkscrew, but as a vortex. A vortex is something that forms through self-interaction, which would mean that the interactions between the planets are equal to, or a high proportion of, the total interactions occurring within the solar system, i.e. that planet-to-planet interactions are similar in strength to those between the sun and the planets. This is not true either.

The best description of an orbit all depends upon the frame of reference. It is not problematic to lock the frame of reference to the sun. Humanity has sent probes on orbits relying mostly on Newtonian physics, and there was no need to invoke some kind of helical or corkscrew description to make it work.
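The lead-and-trail point can be checked with a toy calculation. The Python sketch below is only a simplified geometric picture under stated assumptions: a circular 1 AU orbit, an orbital plane tilted 60 degrees to the galactic plane, and the sun travelling in a straight line within the galactic plane (taken as the +y direction). The `node_angle` parameter is a hypothetical knob for how the tilted orbit is turned relative to the sun's direction of travel, since that orientation isn't given above.

```python
import math

INCLINATION = math.radians(60)  # tilt of the orbital plane to the galactic plane
ORBIT_RADIUS_AU = 1.0           # circular orbit of radius 1 AU (simplified)


def along_track_offset(phase, node_angle=0.0):
    """Planet's position (AU) relative to the sun, measured along the sun's
    direction of travel (taken as +y, lying in the galactic plane).

    phase      -- angle around the orbit, in radians
    node_angle -- assumed orientation of the orbit's line of nodes relative
                  to the x-axis (hypothetical parameter; 0 by default)
    """
    # Position within the orbital plane.
    px = ORBIT_RADIUS_AU * math.cos(phase)
    py = ORBIT_RADIUS_AU * math.sin(phase)
    # Tilt the orbital plane about the x-axis by the inclination.
    gx = px
    gy = py * math.cos(INCLINATION)
    # Rotate about the galactic pole (z-axis) by the node angle and
    # keep only the component along the sun's direction of travel.
    return gx * math.sin(node_angle) + gy * math.cos(node_angle)


# Sample one full orbit in 1-degree steps.
offsets = [along_track_offset(2 * math.pi * k / 360) for k in range(360)]
print(f"furthest ahead of the sun: {max(offsets):+.2f} AU")
print(f"furthest behind the sun:   {min(offsets):+.2f} AU")
```

Whatever value is picked for `node_angle`, the offset changes sign over one orbit, so in this picture the planet spends part of each orbit ahead of the sun and part behind it.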

The planets are locked in an orbit with the Sun. In a frame of reference that tracks the sun, the planets orbit the sun in a plane set at about 60 degrees to the plane of the Milky Way. If you take the galaxy as your reference instead, then the motion of the sun would make the planets trace a spiral-like path.

This is where the BUT comes in. The video and statements made by DjSadhu appear to claim your last statement, which is incorrect. The orbital inclination of the planets relative to the galactic plane is about 60 degrees, and there is nothing wrong or disallowed about this; it is what we observe. That means that yes, the planets do lead and trail the sun depending on the time of year. DjSadhu gets his models horrendously wrong, especially in his second video, where he claims the solar system is moving edge-on, in line with the galactic disc, and that the only way it can be is if the planets are moving exactly perpendicular.

Also in his second video he claims the sun itself moves in a spiral path. This once more is absolutely incorrect. The sun's path around the galactic disk is quite circular as far as we can tell. We rise and dip in and out of the disk, and there might be some harmonics that make it appear not quite circular, but it certainly isn't this exaggerated corkscrew.

The other issue is language. He never really refers to it as a corkscrew, but as a vortex. A vortex is something that forms through self-interaction, which would mean that the interactions between the planets are equal to, or a high proportion of, the total interactions occurring within the solar system, i.e. that planet-to-planet interactions are similar in strength to those between the sun and the planets. This is not true either.

The best description of an orbit depends on the frame of reference. It is not problematic to lock the frame of reference to the sun. Humanity has sent probes on orbits relying mostly on Newtonian physics, and there was no need to invoke some kind of helical or corkscrew description to make it work.
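To make the frame-of-reference point concrete, here is a minimal Python sketch, not anyone's actual model. It assumes a circular orbit, a sun moving in a straight line along +x within the galactic plane (taken as the x-y plane), and an orbital plane tilted about the y axis. The function names, the choice of tilt axis and the sun speed of 48.5 AU per year (roughly 230 km/s) are all illustrative assumptions. With a 60 degree tilt the planet's offset along the sun's direction of travel swings between leading and trailing, while a 90 degree tilt keeps the planet exactly level with the sun.

```python
import math


def heliocentric_position(theta, tilt_deg, radius=1.0):
    """Planet position in the sun's frame for a circular orbit.

    Assumption: the galactic plane is the x-y plane, the sun travels along +x,
    and the orbital plane is the galactic plane tilted by tilt_deg about y.
    """
    tilt = math.radians(tilt_deg)
    x = radius * math.cos(theta) * math.cos(tilt)  # offset along the sun's motion
    y = radius * math.sin(theta)
    z = radius * math.cos(theta) * math.sin(tilt)  # offset out of the galactic plane
    return (x, y, z)


def galactic_position(t_years, tilt_deg, radius_au=1.0, period_years=1.0,
                      sun_speed_au_per_year=48.5):
    """Same planet seen from a frame in which the sun moves along +x.

    The sun speed is an assumed round value, and the sun's path is treated
    as a straight line over the short time span considered.
    """
    theta = 2.0 * math.pi * t_years / period_years
    x, y, z = heliocentric_position(theta, tilt_deg, radius_au)
    return (x + sun_speed_au_per_year * t_years, y, z)


if __name__ == "__main__":
    for tilt in (60.0, 90.0):
        offsets = [heliocentric_position(2.0 * math.pi * k / 8, tilt)[0]
                   for k in range(8)]
        print(f"tilt {tilt:.0f} deg: lead/trail range "
              f"{min(offsets):+.2f} to {max(offsets):+.2f} AU")
```

In the sun's frame the orbit is the same closed circle for either tilt; only the galactic-frame path changes, which is the sense in which the description depends on the frame chosen.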

originally posted by: ErosA433

The model you are referring to from DjSadhu is quite inaccurate for a few reasons...

the planets orbit around the sun at a plane set at about 60degrees to the plane of the milky way.

I don't think I've ever seen his model but I've seen some corkscrew youtube animations by others that never get it quite right because they use 90 degree angles instead of 60 degrees, and they don't show this:

The suns path around the galactic disk is quite circular as far as we can tell, we rise and dip in and out of the disk.

That rise and dip is never shown in any corkscrew animation I've seen.

a reply to: Skyfox81

There's an old joke in physics:

"Assume a perfectly spherical hippopotamus"

The joke is supposed to highlight that especially when teaching physics but even in making simplified models, we sometimes make assumptions we know are not true, and thus the model is not exactly true. So, in school you might learn some simplified orbital models which only have two bodies, say the sun and the Earth for example, but as with the spherical hippo, this too is an oversimplification which we know is not exactly true, because there are other bodies in the solar system which also have gravitational effects.

However, most of the mass in the solar system is in the sun, so depending on how accurate you need to be, the gravitational effects of other planets are relatively small when considering the Earth's orbit around the sun.

Regarding the sun's orbit around the Milky Way, I've never seen a corkscrew animation which is correct, because all the animations I've seen assume a 90 degree angle of the solar system to the galaxy, but the angle is only about 60 degrees, not 90. A poster on Physicsforums tried to make a diagram showing the alignment and asked physicists for some feedback, and I suspect this correctly shows the 60 degree angle though I'm not sure it's 100% accurate otherwise. It's obviously not to scale, it says so.

www.physicsforums.com...

That diagram also shows another motion in the sun's orbit I've never seen in any corkscrew animation: what Eros called the "rise and dip in and out of the disk", where the sun moves above and below the plane of the galaxy. Again, you've probably never seen anything like that in a simplified orbit equation, but in disks composed of many objects, this is what happens; all the objects in the Milky Way are gravitationally interacting with all the other objects in the Milky Way, and our sun has that up and down motion as a result.

If no planets ever goes in front of the suns forward trajectory, aka in front of ol Sol as it flies forward (or falls into anothers gravity well) throughout the solar system, then what is a more correct definition of 'orbit'?

I'm not understanding why you think that makes the accepted definition of "orbit" inaccurate. For example here's a definition I found for orbit:

"a path described by one body in its revolution about another"

From the reference frame of the sun, there is no corkscrew motion of the Earth's orbital motion, so the Earth's orbit is more or less an ellipse (with some small tugs from other planets).

From the reference frame of the Milky Way, the definition doesn't quite fit because I'm not sure I'd call the Milky Way a "body", it's a collection of bodies, and that's why it can produce some things like the roller-coaster type motion of the sun which we don't normally expect to be part of an "orbit" definition, and I'm not sure it should be.

The real universe is more complicated than simplified concepts and definitions. I'm not sure we need to invent a new word for a galaxy orbit just because the galaxy orbit is more complicated because it has more bodies. I'm ok with using the word "orbit" for that too, and remembering that things are often more complicated than a simplified model or description.

I actually have more of a problem with what the dictionary says about an electron orbit, here's the full definition of orbit in Merriam-Webster:

"a path described by one body in its revolution about another (as by the earth about the sun or by an electron about an atomic nucleus)"

We now know that the electron is likely not following any "path" around an atomic nucleus. That was a concept in an early model called the "Bohr model", which we now know to be wrong, yet you still sometimes see the Bohr model, and the concept is in that dictionary definition of orbit even though we know it's wrong.

So we actually do use another, more accurate word for the replacement to the Bohr model, one which doesn't involve a planet-like orbit for electrons. We call them orbitals; from Britannica:

"Orbital, in chemistry and physics, a mathematical expression, called a wave function, that describes properties characteristic of no more than two electrons in the vicinity of an atomic nucleus or of a system of nuclei as in a molecule."

So, that word orbital has a better definition than "orbit", as far as electrons are concerned, though it doesn't have anything to do with planet orbits.

originally posted by: Arbitrageur

I don't think I've ever seen his model but I've seen some corkscrew youtube animations by others that never get it quite right because they use 90 degree angles instead of 60 degrees, and they don't show this:

originally posted by: ErosA433

The model you are referring to from DjSadhu is quite inaccurate for a few reasons...

the planets orbit around the sun at a plane set at about 60degrees to the plane of the milky way.

Yeah, it's the same one; he is the author of the clip you have seen that seems to show the sun dragging the planets behind it in a spiral path. While the diagram is exaggerated, it is linked with so much woo that it starts to get troublesome, because the inaccuracies in the model or representation can be used as a follow-on statement to push his ideas.

Kind of like... this looks true, right... so if that's true, this should be true.

DjSadhu's model is conceptually ok, but not an accurate depiction of what is happening.

a reply to: ErosA433

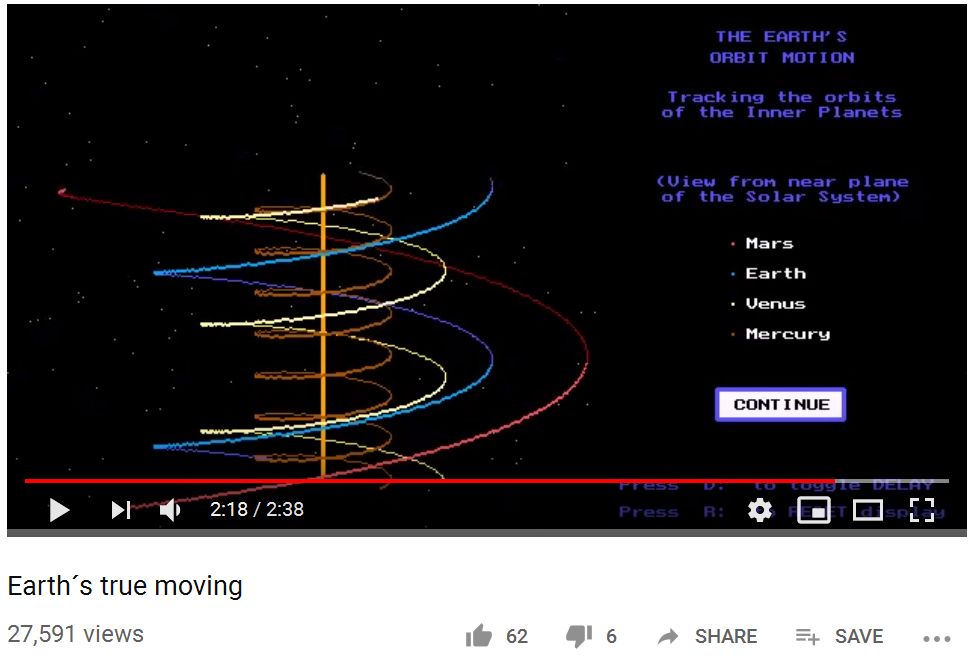

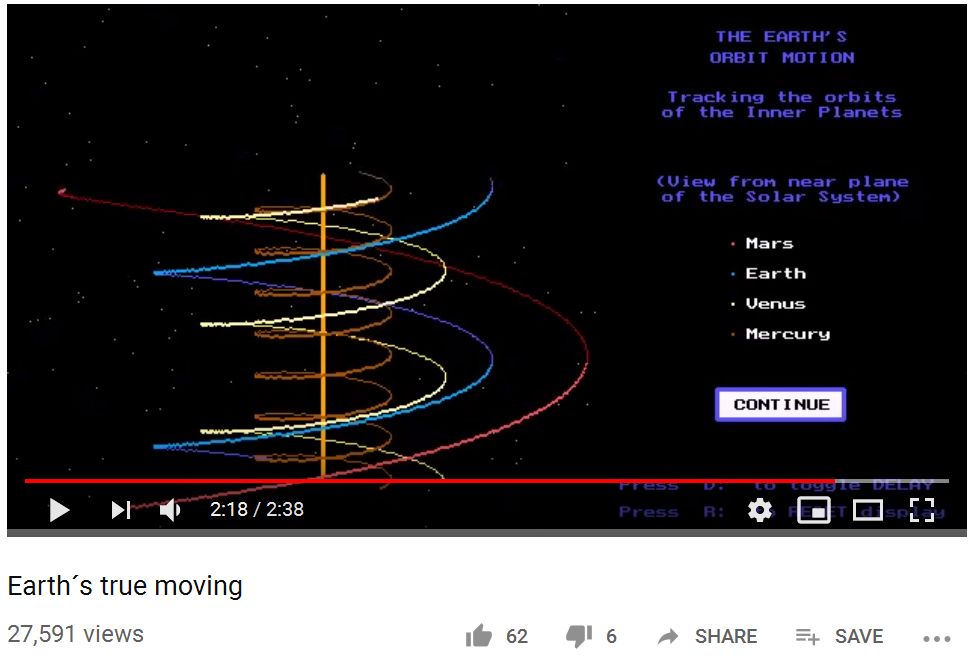

OK, I looked up some videos. DjSadhu's video went viral so it's probably the one people ask about, but the date on his video Aug 24, 2012 is later than the date on this video Published on Apr 22, 2012:

www.youtube.com...

That's probably not the original video I saw, but it flashes the source "Resonanceproject.org" on the screen at times, which I think was the source for the first time I saw such an animation. I'm not sure if someone in the Resonanceproject made it or if they copied it from someone else, but I think it pre-dates DjSadhu's video in any case, and it's the same general idea with the same mistake of 90 degrees instead of 60 degrees. I'm wondering if maybe DjSadhu saw that or one like it in, say, early 2012 and then made a better one later in 2012; just a guess.

Nassim Haramein (Resonanceproject.org is his site) seemed to like that helical motion concept for some reason. He seems pretty far beyond fringe with the mass of his Schwarzschild proton of 885 million metric tonnes, compared to the actual mass of a proton, which is less than 2 trillionths of a trillionth of a gram (about 1.67 × 10^-24 g). He doesn't seem to get the idea that if your prediction disagrees with observation, the model used to make the prediction is wrong, since he still tried to defend his model.

At the end of that video, there's a link back to an earlier video from 2007, which I didn't see in 2007:

www.youtube.com...

The animation is a video screenshot from Voyage through The Solar System version 1.20 (1989) and shows the earth's true motion in spirals.

So I think the origins of this incorrect 90 degree angle go as far back as 1989, since you can see it in the screen of that 1989 computer model.

Anyway to put the concept in perspective, people should also consider that the Earth is racing around the sun while the ISS is racing around the Earth, so this phenomenon of looking at things from larger reference frames showing a different appearance shouldn't seem unusual. In other words if your reference frame is larger than the earth, like the solar system, then the ISS orbit doesn't seem so simple anymore either. You can also go up to even larger scales than the galaxy, and add even more complicated motions, where the galaxies in our local group may be orbiting a point near the center of mass for the local group of galaxies, so from an expanded reference frame even more motions need to be added to any simple helix. Lots of things are moving and motions get complicated, but they all seem to follow the laws of physics as we know them so none of this debunks simpler, flat models we may have been taught in school, viewed from a particular reference frame.

Apparently some people think because they weren't taught these models viewed from other reference frames in school, that they can now conclude science was wrong because that's not what they taught, but there have been reference frames in science going at least as far back as Newton.

edit on 2019814 by Arbitrageur because: clarification

a reply to: Arbitrageur

Thanks for your response.

So in layman's terms, gravity-well-wise:

* Why does Gaia not 'fall' into Lunar when it's between us and Sol?

* Why does Lunar not 'fall' into Gaia when it's between us and Sol?

How do gravity wells work in space, on a solar scale?

edit on 15-8-2019 by Skyfox81 because: (no reason given)

a reply to: Skyfox81

Gravity works rather closely to the way Newton described over 300 years ago. His model explains most motions in the solar system; the notable exception is a small part of the precession of Mercury's orbit, and we need to tweak his model a bit using Einstein's math to explain that.

Newton's thought experiment called "Newton's cannonball" is the idea that if you keep using more and more powder to fire a cannonball, the cannonball will keep going farther and farther. In the thought experiment, if you keep increasing the speed of the cannonball, eventually, it will have so much speed that it won't fall back down to the Earth, it will keep going around the earth, constantly falling toward the Earth but never hitting it.

That's basically how we get the international space station to orbit the Earth, except we use rockets instead of a cannon, and give it enough speed so it will keep falling toward the Earth but never actually hit it. Both the ISS and Newton's cannon thought experiment suffer from a slight flaw in the idea called atmospheric drag, which tends to take speed away from objects attempting to pass through an atmosphere. So, even though we give the ISS enough speed to not fall back to Earth if there was no atmosphere, the atmosphere makes it gradually slow down and it gradually falls back to Earth. We have to use thrusters on the ISS to "boost" the orbit every so often to keep it from falling too far.
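As a rough illustration of the cannonball idea, the speed needed for a circular orbit at distance r from the Earth's center is v = sqrt(GM / r). The sketch below is a back-of-envelope calculation, not mission data: it ignores atmospheric drag, treats the orbit as a perfect circle, and assumes an ISS altitude of about 400 km. The function names are made up for the example.

```python
import math

GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
EARTH_RADIUS_M = 6.371e6    # mean Earth radius, m


def circular_orbit_speed(altitude_m):
    """Speed (m/s) for a circular orbit at the given altitude, ignoring drag."""
    r = EARTH_RADIUS_M + altitude_m
    return math.sqrt(GM_EARTH / r)


def orbital_period_minutes(altitude_m):
    """Time (minutes) to complete one circular orbit at the given altitude."""
    r = EARTH_RADIUS_M + altitude_m
    return 2.0 * math.pi * math.sqrt(r ** 3 / GM_EARTH) / 60.0


if __name__ == "__main__":
    iss_altitude_m = 400e3  # assumed typical ISS altitude
    print(f"cannonball at ground level: {circular_orbit_speed(0.0):.0f} m/s")
    print(f"ISS at 400 km: {circular_orbit_speed(iss_altitude_m):.0f} m/s, "
          f"period {orbital_period_minutes(iss_altitude_m):.1f} min")
```

Under these assumptions the required speed comes out at roughly 7.7 to 7.9 km/s, and a lap takes a bit over an hour and a half. Anything that bleeds off speed, like drag, lowers the orbit, which is why the periodic boosts are needed.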

But for the orbits of the Earth around the sun and the moon around the Earth, there's no atmospheric drag and they have enough speed to keep going in their orbits. It is that speed which answers these questions:

* Why does Gaia not 'fall' into Lunar when it's between us and Sol?

* Why does Lunar not 'fall' into Gaia when it's between us and Sol?

Those questions seem to be posed as if the situation were static, with nothing moving, when those alignments occur. But the moon moves around the Earth at 2,288 miles per hour (3,683 kilometers per hour), and that is fast enough that it keeps falling toward the Earth but never hits it. The Earth is also falling toward the moon. If the Earth and the moon were of equal mass, they would orbit a common center of gravity (called a barycenter) halfway between them, which would look something like this:

Because the Earth is so much more massive, the barycenter the moon and earth both orbit is actually inside the Earth, similar to where the red crosshairs are in this animation:

That Earth-moon barycenter moves around the sun at 30,000 meters per second or about 70,000 miles per hour, and that speed allows the Earth and moon to keep falling toward the sun, but never crash into it.
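The two numbers in that explanation can be checked with a few lines of Python. This is only a sketch using rounded textbook values for the masses and distances, and it treats the Earth's path around the sun as a circle of radius 1 AU; the constant and function names are just for the example.

```python
import math

EARTH_MASS_KG = 5.972e24
MOON_MASS_KG = 7.342e22
EARTH_MOON_DISTANCE_KM = 384_400   # mean center-to-center distance
EARTH_RADIUS_KM = 6_371            # mean radius
AU_M = 1.496e11                    # mean Earth-sun distance, m
YEAR_S = 365.25 * 86_400           # one year in seconds


def barycenter_distance_from_earth_center_km():
    """Distance of the Earth-moon center of mass from the Earth's center."""
    return EARTH_MOON_DISTANCE_KM * MOON_MASS_KG / (EARTH_MASS_KG + MOON_MASS_KG)


def mean_speed_around_sun_m_per_s():
    """Average speed along a circular 1 AU orbit completed in one year."""
    return 2.0 * math.pi * AU_M / YEAR_S


if __name__ == "__main__":
    d = barycenter_distance_from_earth_center_km()
    v = mean_speed_around_sun_m_per_s()
    print(f"barycenter: {d:.0f} km from Earth's center "
          f"(Earth radius {EARTH_RADIUS_KM} km)")
    print(f"speed around the sun: {v:.0f} m/s, about {v * 2.23694:.0f} mph")
```

With these inputs the barycenter sits roughly 4,700 km from the Earth's center, well inside the planet, and the speed around the sun comes out just under 30,000 m/s.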

edit on 2019815 by Arbitrageur because: clarification

originally posted by: Skyfox81

a reply to: Arbitrageur

How do gravity wells work in space, on a solar scale?

They add up.

So Earth's orbital path does not follow an exact ellipse, but wobbles around a bit, as explained by Arbitrageur. To be exact, you would have to consider all masses in the system, but the effect of most of them is so small that it practically doesn't matter.
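To put a rough size on "so small", the sketch below compares the gravitational acceleration GM / d^2 that a few bodies produce at the Earth. It is a size comparison only, not an orbit calculation: the distances are assumed round values for a close approach (Jupiter at about 4.2 AU, Venus at about 0.28 AU), and the names are made up for the example.

```python
AU_M = 1.496e11  # astronomical unit in metres

# name: (GM in m^3/s^2, assumed distance from Earth in metres)
BODIES = {
    "Sun": (1.32712e20, 1.0 * AU_M),
    "Jupiter": (1.26687e17, 4.2 * AU_M),   # assumed close-approach distance
    "Venus": (3.2486e14, 0.28 * AU_M),     # assumed close-approach distance
}


def acceleration(gm, distance_m):
    """Newtonian gravitational acceleration (m/s^2) at the given distance."""
    return gm / distance_m ** 2


def pulls_relative_to_sun():
    """Each body's pull on the Earth as a fraction of the sun's pull."""
    sun_pull = acceleration(*BODIES["Sun"])
    return {name: acceleration(gm, d) / sun_pull
            for name, (gm, d) in BODIES.items()}


if __name__ == "__main__":
    for name, ratio in pulls_relative_to_sun().items():
        print(f"{name:8s} {ratio:.2e} of the sun's pull")
```

Even at close approach, the planetary pulls in this comparison stay below one ten-thousandth of the sun's, which is why a sun-only model gets so close before the small wobbles need to be added in.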

a reply to: moebius

Question:

Do you believe that dark matter existed before the big bang?

Though I have said on here that it did, and that it probably caused the big bang due to an incredible time rate passing some instability threshold.

Now researchers at Johns Hopkins are saying that dark matter existed before the big bang.

originally posted by: MaxNocerino8

What is Dark Flow?

The Hubble Constant is a correlation between the distance of galaxy clusters and their recession velocities. Dark flow is a claimed deviation from that where the recession velocity of a cluster of galaxies does not align with the Hubble constant.
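As a small illustration of the idea, the Hubble relation predicts a recession velocity v = H0 × d, and a "flow" claim is about what is left over after subtracting that prediction. The sketch below assumes H0 = 70 km/s per megaparsec, which is a round value picked for the example, and the cluster numbers in it are hypothetical, not measurements.

```python
H0_KM_S_PER_MPC = 70.0  # assumed round value for the Hubble constant


def hubble_velocity(distance_mpc, h0=H0_KM_S_PER_MPC):
    """Recession velocity (km/s) predicted by v = H0 * d."""
    return h0 * distance_mpc


def residual_velocity(observed_km_s, distance_mpc, h0=H0_KM_S_PER_MPC):
    """Observed line-of-sight velocity minus the Hubble prediction (km/s)."""
    return observed_km_s - hubble_velocity(distance_mpc, h0)


if __name__ == "__main__":
    # Hypothetical cluster: 100 Mpc away, observed receding at 7,300 km/s.
    distance_mpc = 100.0
    observed_km_s = 7300.0
    print(f"Hubble prediction: {hubble_velocity(distance_mpc):.0f} km/s")
    print(f"left over: {residual_velocity(observed_km_s, distance_mpc):+.0f} km/s")
```

A real analysis has to separate such leftovers from distance errors and noise across many clusters, which is where the disputes over the claimed detection come in.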

However, there is some debate about whether the reported dark flow is accurate, and I'm not current on the latest state of the debate. This was a criticism of the dark flow claims by Ned Wright in 2008, saying the dark flow calculations can't be trusted because they have errors:

Dark Flow Detected - Not!

24 Sep 2008 - Kashlinsky et al. (2008) have claimed a detection of a bulk flow in the motion of many distant X-ray emitting clusters of galaxies. Unfortunately this paper and the companion paper have several errors so their conclusions cannot be trusted.

In 2013 research was published placing constraints on dark flow or bulk flow saying the Planck data didn't show evidence for it:

Planck intermediate results. XIII. Constraints on peculiar velocities

Planck data also set strong constraints on the local bulk flow in volumes centred on the Local Group. There is no detection of bulk flow as measured in any comoving sphere extending to the maximum redshift covered by the cluster sample. A blind search for bulk flows in this sample has an upper limit of 254 km s^−1 (95% confidence level) dominated by CMB confusion and instrumental noise, indicating that the Universe is largely homogeneous on Gpc scales.

So I don't know whether dark flow actually exists or not. The last I looked into it some years ago, it seemed the jury was still out, but there was definitely skepticism. I don't know if that's still the case or not. Maybe someone has a more up to date perspective.

If it does exist it could be an indication of a non-homogeneity in mass distribution beyond our observable universe, or some claimed the flow could be an indication of gravitational attraction to something beyond our universe, like another universe, but if it turns out to be the result of analytical errors, there's not much point in worrying about the source of a flow which doesn't really exist.
