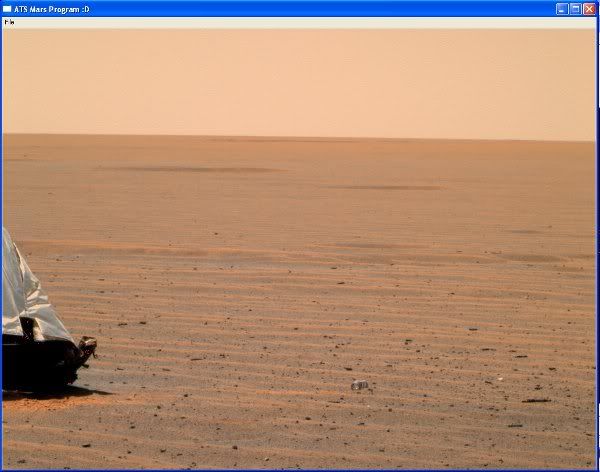

From Opportunity, dataset Sol 864 found HERE.

Later in the evening, as the sun just drops below the horizon, the Rayleigh scattering effect again shows the sky changing color, following the sunlight as it dips behind the horizon. Looks a lot like an evening out in the western desert.

Full size HERE.

Cheers!!!!

[edit on 18-12-2008 by RFBurns]

Originally posted by rocksarerocks

Sooo many pages and not 1 person can say why NASA would want to hide blue skies KNOWING we are going up there one day.

Please. No one is that stupid.

Why are you trolling the thread?

No one is claiming NASA is trying to "hide" anything! Are you ignorant, or just plain stupid?

Read the entire thread, you will find your answer!

This is definitely one of those threads that should be read from page 1, lots of info and lots of pictures.

More to come very soon!

Cheers!!!!

I haven't read all postings on this thread, but from the ones I read, it looks like the different types of camera/image calibration are not taken into consideration.

If you take the raw image and adjust the colors to make the color chart look like the one on Earth under a calibrated light source, you only get a relative calibration to that very calibrated light source. That would be the same as putting that light source on Mars next to the rover at night, to eliminate the influence of Martian ambient light. You do not get the absolute calibration needed to reproduce the view an astronaut would have on the Martian surface.

To get that absolute calibration (absolute not in the sense of perfect but in the sense of relative to a common scale or standard), you need a picture of the calibration chart taken by the rover camera on Earth in sunlight. Pictures of that kind, or at least their raw data with the accompanying aperture and shutter speed data, are kept well hidden and are not public. By chance I found raw Viking image data of that type, but without the auxiliary camera data. If you are really interested in a scientific investigation into that, please contact me personally: [email protected]

My primary interest is not to damage the public image of NASA or JPL, as their engineers and scientists did an amazing job overall, so any result of the investigation would first be sent to JPL/NASA for discussion, giving them the chance to fix the problem internally first. Maybe it was just a dumb error in the past, who knows?

Now some publicly available Viking images showing the different sky colors: blue skies and sharp shadows during normal clear Martian weather, and red skies and diffuse light during the occasional dust storm season. Taken from a German article I wrote some years ago and tried to translate into English:

mars-news.de...

Picture 12E018, showing red sky during a dust storm:

03.Jul 1977, 15:20

Picture 12B166, showing the typical blue skies during normal weather conditions:

6.Oct 1976, 7:48

reply to post by Holger Isenberg

Hi Holger!!

What I do is use the sundial calibration data, which is contained in 99 percent of the datasets, for each image I process. I take those calibrations and apply them to the picture data. According to NASA, before they take a picture of the landscape, a cluster of rocks, or the sky, they always take pictures of the sundial calibration unit first. That means that for whatever they photograph next, the camera is calibrated for the illumination levels where the rover happens to be.

If they waited 5 hours between imaging the sundial to calibrate the camera and photographing the surrounding area or rocks, the natural sunlight would have changed so much since the sundial calibration that any picture they took, no matter what filter was used, would be severely incorrect! I don't think that's how they were operating the system. It doesn't make sense to calibrate, wait until the light levels change, not re-calibrate, and then take a picture with mis-calibrated data. That would affect the results from the geology filters as well as the visual RGB filters.

Those cameras were set up here on Earth, for a base reference to the RGB standards and the filters used. So the calibration would only be incorrect if the units had severely degraded from their initial setup.

But when I take all of the sundial data images and layer them, the sundial comes out correct with respect to the white balance of the ring, the blue, red, green and orange color tabs, the silver outer ring, and the tiny blue dot and red dot on the larger white ring. The sundial appears natural and correct. Using those settings on the other pictures in the dataset, the images come out as can be seen in the ones I have posted.
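The general idea of carrying settings from the sundial over to a scene image can be sketched roughly like this. It is only a minimal illustration, not the actual Gimp workflow or anything NASA or Cornell uses: it assumes a plain per-channel gain derived from the sundial's white ring, and the pixel values and the function names `channel_gains` and `apply_gains` are made up for the example.

```python
def channel_gains(white_patch_rgb):
    """Gains that make the measured white patch come out neutral (R = G = B)."""
    r, g, b = white_patch_rgb
    target = (r + g + b) / 3.0  # neutral level the patch is mapped to
    return (target / r, target / g, target / b)


def apply_gains(pixels, gains, max_value=255):
    """Scale every pixel by the per-channel gains, clipping at max_value."""
    balanced = []
    for px in pixels:
        balanced.append(tuple(min(max_value, round(c * k)) for c, k in zip(px, gains)))
    return balanced


# Hypothetical average RGB of the sundial's white ring in the raw composite.
sundial_white = (200, 160, 120)
gains = channel_gains(sundial_white)

# Hypothetical scene pixels from the same dataset.
scene = [(100, 80, 60), (50, 60, 70)]
balanced = apply_gains(scene, gains)
print(balanced)
```

A single gain per channel only holds if the sundial and the scene were shot under the same light and with comparable exposure settings, which is exactly the point under discussion in this thread.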

Surely a camera with 3 visual RGB filters is going to see whatever those filters pick up, no matter their bandwidth limits. There have been comments saying that the red-saturated images from NASA appear unreal, uninteresting and dull. Take images with color, even NASA's, and those look more alive and real, filled with more than just saturated red. Even the rocks look more like rocks, and the dark shades actually look dark, not reddish dark.

Anyway good to see you here and thanks for the info! We are not here to dispute the established science protocols or findings. We are only disputing why so many red saturated images come out of NASA and Cornell when clearly we use the very same raw data they used and ours comes out very differently when calibrating and using those settings from the sundial to the scenery images.

Cheers!!!!

This isn't much different from what Cornell Univ. has except for brightness. Linear and cubic interpolations don't seem to make much of a difference.

This is strangely absent from Cornell's website.

For comparison, here's Cornell's website:

marswatch.astro.cornell.edu...

Originally posted by fleabit

Why would they try to alter the color of the sky? What does making us think the sky is red versus blue matter?

I have my ideas about that but what does that change about the fact that they are doing it? How does not being fully aware of the motives of others invalidate the possibility that they have motives you are not aware of?

I never understood that. The color of the sky isn't a conspiracy. A blue sky doesn't mean it's a breathable atmosphere.

But a blue sky would make it seem much like Earth, as the blue-sky photos of Mars we do get to see have proven.

Heck, even in our own solar system, Neptune and Uranus have blue skies. If the atmosphere of any planet has gases whose molecules are a lot smaller than the wavelength of light, the sky is generally assumed to have a blue color. Blue doesn't equate to oxygen, or even a less harsh atmosphere; it could be more harsh.
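The wavelength dependence behind that statement can be put into rough numbers. This is only a sketch assuming pure Rayleigh scattering, where scattered intensity goes as 1/wavelength^4, with dust left out entirely; the 450 nm and 650 nm values are illustrative picks for blue and red, and `rayleigh_ratio` is a made-up helper name.

```python
def rayleigh_ratio(short_nm, long_nm):
    """How much more strongly the shorter wavelength is Rayleigh-scattered."""
    return (long_nm / short_nm) ** 4


# Illustrative wavelengths: 450 nm for blue, 650 nm for red.
ratio = rayleigh_ratio(450.0, 650.0)
print(f"blue is scattered about {ratio:.1f} times more strongly than red")
```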

And maybe they are, as always, trying to manage our perceptions, which generally does not mean that their deceptions are aimed at supposedly and otherwise well-informed people such as yourself? I mean, you're clearly far too smart to be fooled by anyone?

If I were NASA, and thought I could persuade the government and people that the atmosphere WAS less harsh, for the purpose of gaining support through government and private funding for later missions there, I'd do THAT.. not make it seem more harsh. This is sort of counter-productive to your mission.

But since NASA is primarily a military operation that pretty much does as it pleases, independently of public perceptions of its use, why would it have to bother with making itself seem 'worthwhile'? Where is the evidence that NASA loses funding when it gives us red sky images of Mars? In fact, why do the first things they cut from missions seem to be the equipment and mission specialists best able to investigate issues that could capture the public imagination? Where are the mission biologists?

So while I've heard the stories of them recoloring, I still don't think they are.... and if they are, I have no idea why.

So since you can't be fooled you aren't being fooled. This is why the normally arrogant educated people drive everyone around them absolutely mad.

Blue doesn't mean a thing. I don't get the point at all. Seems like a lot of work for no purpose.

Perception is everything if you are attempting to manage those who are currently mostly doing the things you want them to do. Perception of our surroundings is VERY important, and if people believed that Mars had standing water, blue skies and active biology they would demand more information. It's a slippery slope and they are just doing the best they can to avoid the slide down it.

But perhaps you imagined that NASA was in the business of both finding the truth and telling the public about it?

Stellar

reply to post by RFBurns

Hi RFBurns!

There is one big problem with using the sundial images to calibrate other images, even ones from the same day. The MER Pancam is set to automatic exposure mode for almost all images. That means it selects the exposure time for each color frame from the amount of light at the very moment the image is taken. The result is that each color frame has a different exposure time, meaning a different calibration!

From the engineer's point of view, that approach is good, as it leads to optimized usage of the full dynamic range of the CCD and AD converter.

From the photographer's or scientist's point of view that approach is the worst, as in combination with nonlinear effects of the quantization, it is almost impossible to create calibrated color images from the 3 color frames.

As far as I know, the Pancam is capable of being set to manual exposure mode so I will try to search for images made in this mode in the PDS database.
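To make the auto-exposure problem concrete, here is a minimal sketch of why frames with different exposure times cannot simply be layered. It assumes an idealized linear sensor with no bias, dark current or quantization effects; the pixel values, the exposure times and the helper `normalize_by_exposure` are hypothetical and not taken from any Pancam dataset.

```python
def normalize_by_exposure(frame, exposure_s):
    """Convert raw pixel values to counts per second of exposure."""
    return [dn / exposure_s for dn in frame]


# Two color frames with identical raw values but different (hypothetical)
# auto-selected exposure times.
raw_red = [120, 200]
raw_green = [120, 200]
red = normalize_by_exposure(raw_red, 0.50)      # longer exposure
green = normalize_by_exposure(raw_green, 0.25)  # shorter exposure

# The raw frames look equal, but per unit time green is twice as bright.
print(red, green)
```

Layering the raw frames directly would treat red and green as equal here, even though the green channel collected the same signal in half the time.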

reply to post by Holger Isenberg

Hi Holger!!

I also pay close attention to the filename numbers, which identify the location, time of day on Mars, and other information that helps me keep the calibration close. I don't use just one sundial setting across several datasets. Most datasets have their own sundial calibration data, so I always use each dataset's own sundial calibration and never apply it to any other dataset.

It won't ever be exact, even if only 10 seconds elapse from the time they snap a shot of the sundial and calibrate, then tilt the Pancam up and take a picture of the landscape. But certainly it isn't going to shift so much that every single image taken has the very same level of red saturation across the entire scenery on a clear day.

I think that a lot of "cooking" and "fibbing" is going on from NASA and Cornell about these figures and all this calibration stuff because really, red is red, green is green and blue is blue. Why even bother putting green or blue filters on the camera if there wasn't anything in those wavelengths to take a picture of on Mars? Why not just stick with the geology filters and IR filters and b/w?

Why is it that everything in these images looks more normal, more real with color than all those red saturated images do? Even the rocks look more natural. The hills, horizon, everything.

Let's look at Viking's color images. Viking used an entirely different camera system that is analog in nature, meaning it has a linear characteristic which covers far more of the visible spectrum than these limited narrow-bandwidth filters on CCD imagers.

When those images are color corrected and white balanced so that even the CO2 snow and the white parts on the probes themselves look white, we end up with a bluish sky, brown/red ground, an American flag that actually looks normal, and NASA's own emblem with its normal deep rich blue next to the red part and the letters NASA in white. And in the color chart squares, the white square is actually white, the red is red, the green is green, and most important of all, the blue square is blue, not dull bluish/violet.

The Viking images come from a whole different camera system. There were no CCD imagers in '76. The best electronic pickup devices of the time were the Saticon and Plumbicon tubes used in industrial and broadcast cameras. Both Viking landers used a facsimile camera system that scanned the scene and sent images one line at a time. Analog in nature, the cameras had far more bandwidth across the visual spectrum than the cams on Spirit and Opportunity.

It is the Viking images that show the most plausible evidence of the colors of Mars. There really is no reason why the cameras on Spirit and Opportunity would be so incapable of catching any of the blue or green that Vikings 1 and 2 apparently did capture. Even with their narrow RGB filters, Spirit and Opportunity can see enough to give us more than just red.

Thanks for posting and the info!!

Cheers!!!!

[edit on 21-12-2008 by RFBurns]

reply to post by Deaf Alien

Hi DA!!

Ok the result from your program..that used Cornell's formulas..correct?

Have you tried the program with formulas from another source yet?

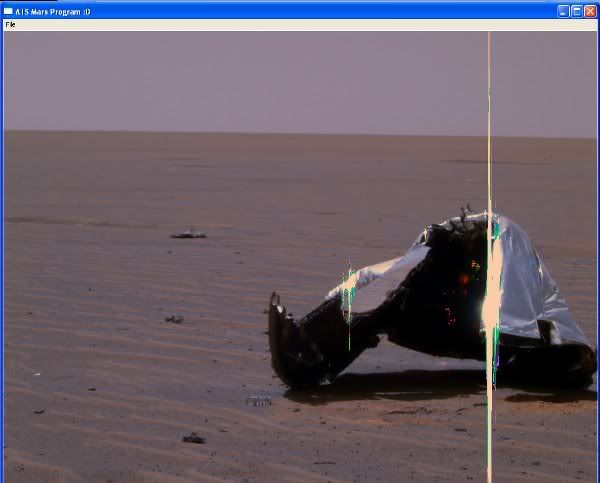

There is nothing strange about the image you got there being missing from Cornell's website. I think you found the smoking gun for what I think is going on.

This is my result for that particular image using Gimp, nothing special, just layering the 3 data images.

Something tells me that those formulas at Cornell are exactly what NASA uses, and both are on the bandwagon of giving us only red images.

I think their numbers are cooked so that the interpolation and all that ends up with the red. Numbers can be flexed and bent to achieve a specific result. I think another set of formulas should be tried and then compared against Cornell's formulas so we can get an idea of where things stand.

Great video of the program too! Just point and click and open and see!! Excellent!!

Cheers!!!!!

[edit on 21-12-2008 by RFBurns]

[edit on 21-12-2008 by RFBurns]

Yes, let's take a look at the Viking Lander data. For every color image, those cameras had fixed exposure times across all 3 RGB channels and only a handful of exposure and AD settings at all. The Viking color filters had some problems with good true color reproduction, but they are sufficient for our needs. However, you will find the very same mysterious calibration factor for each RGB channel as you find for the MER rovers, Pathfinder and, I guess, Phoenix too. As far as I know, that calibration factor wasn't introduced just for fun and saved externally to the image data...

reply to post by RFBurns

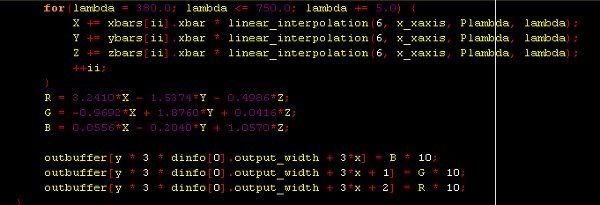

Yes that's the result using Cornell's formulas. I multiply the RGB values by 10 to brighten it up a little.

Here's the code snippet

I used CIE 2 degree standard. I haven't tried the 10 degree yet.

[edit on 21-12-2008 by Deaf Alien]

Originally posted by Holger Isenberg

Yes, let's take a look at the Viking Lander data. For every color image, those cameras had fixed exposure times across all 3 RGB channels and only a handful of exposure and AD settings at all. The Viking color filters had some problems with good true color reproduction, but they are sufficient for our needs. However, you will find the very same mysterious calibration factor for each RGB channel as you find for the MER rovers, Pathfinder and, I guess, Phoenix too. As far as I know, that calibration factor wasn't introduced just for fun and saved externally to the image data...

Isn't that interesting! Using the same calibrations on two totally different imaging systems, one analog, the other digital. I find that in itself a bit odd. Even a persistent audio enthusiast will tell you that the vinyl record sounds better and warmer than the CD. And a videographer will tell you that a Plumbicon camera system provides a more natural look than a CCD imager camera. I tend to agree with both. Even a musician will prefer a tube amplifier to a solid-state final amplifier any day.

Well this will get interesting for sure!

Cheers!!!!

Originally posted by Deaf Alien

reply to post by RFBurns

Yes that's the result using Cornell's formulas. I multiply the RGB values by 10 to brighten it up a little.

Here's the code snippet

I used CIE 2 degree standard. I haven't tried the 10 degree yet.

[edit on 21-12-2008 by Deaf Alien]

Very cool DA! Can't wait to see the other test results! I'm all..eyes!! O_O

Cheers!!!!

reply to post by RFBurns

The 10 degree standard observer results are pretty much the same.

Which XYZ to RGB transformation matrix would you think is the best? I'm no expert. I know there are different standards for monitors and TVs.
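One commonly used choice for computer monitors is the sRGB matrix with a D65 white point. The sketch below is not the program discussed in this thread and not Cornell's formulas; it just shows that one matrix in use, with the coefficients rounded to four decimals and the function name `xyz_to_linear_srgb` made up for the example. Other standards for TVs and monitors use different primaries and so a different matrix.

```python
# XYZ (D65 white point) to linear sRGB, coefficients rounded to 4 decimals.
XYZ_TO_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def xyz_to_linear_srgb(xyz):
    """Multiply an (X, Y, Z) triple by the matrix; output is linear, not gamma-encoded."""
    return tuple(sum(m * c for m, c in zip(row, xyz)) for row in XYZ_TO_SRGB)


# Sanity check: the D65 white point should map to roughly (1, 1, 1).
d65_white = (0.9505, 1.0000, 1.0890)
white_rgb = xyz_to_linear_srgb(d65_white)
print(white_rgb)
```

Values outside the 0 to 1 range can come out of this step for colors the monitor cannot show, so they need clipping before display.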

Originally posted by Deaf Alien

reply to post by RFBurns

The 10 degree standard observer results are pretty much the same.

Which XYZ to RGB transformation matrix would you think is the best? I'm no expert. I know there are different standards for monitors and TVs.

Here is a LINK to the CIE 1931 color spec off of wiki. Maybe these might help? They look the same but I will look for some others off of the Sony databases I still have access to and snapshot them and post.

Here is something that might be of interest. LINK.

Another. LINK

And another. LINK

And one more for the road! LINK

Cheers!!!!

[edit on 21-12-2008 by RFBurns]

reply to post by RFBurns

Grrr, you had me calculate the inverse matrix. The result is dark. I had to multiply it by 20 without ruining the image. I tried the gamma correction in gimp but I don't know if I am doing it right.
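One possible reason for a dark result is that the values coming out of an XYZ to RGB matrix are linear light, while a monitor expects gamma-encoded values. A minimal sketch of the standard sRGB encoding curve follows, assuming the linear values are already scaled to the 0 to 1 range; whether this matches what Gimp's gamma tool does is not something checked here, and `srgb_encode` is a made-up helper name.

```python
def srgb_encode(linear):
    """Encode one linear-light channel value (0..1) with the sRGB transfer curve."""
    c = min(1.0, max(0.0, linear))  # clip out-of-range values first
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


# A linear value of 0.2 looks dark if shown as-is; encoded it lands near mid-grey.
encoded = srgb_encode(0.2)
print(round(encoded, 3))
```

Multiplying by a fixed factor such as 20 brightens the shadows as well, but it also pushes the highlights into clipping, which the encoding curve avoids.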

Originally posted by Deaf Alien

reply to post by RFBurns

Grrr, you had me calculate the inverse matrix. The result is dark. I had to multiply it by 20 without ruining the image. I tried the gamma correction in gimp but I don't know if I am doing it right.

OOPS!!

Sorry, was just trying to gather up as much info as possible to try. There is that last link that might be of some good use. It has tons of formulas you can select from and might be what we are looking for.

Cheers!!!!
