Some recent discussions on ATS and other forums that I visit have made me really interested in the way our brains are able to generate self-aware consciousness, and how we might go about designing an artificial brain which also has the ability to be aware of itself and make decisions according to its own free will. I spent a few hours looking around the internet for interesting artificial brain models and here are some of the models I discovered:

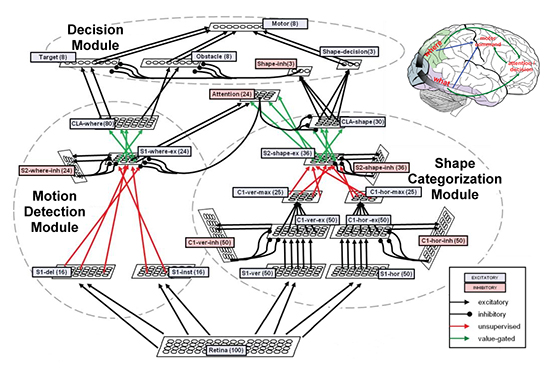

Spaun, the new human brain simulator

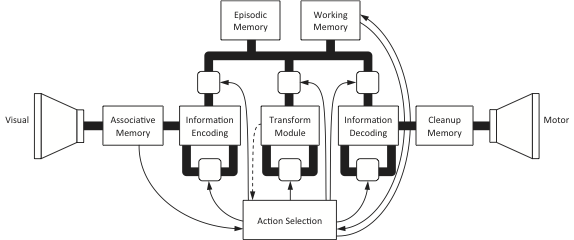

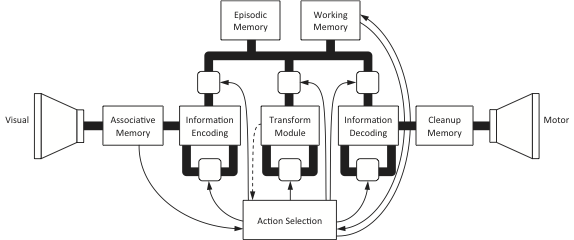

Creating an Artificial Brain in Software

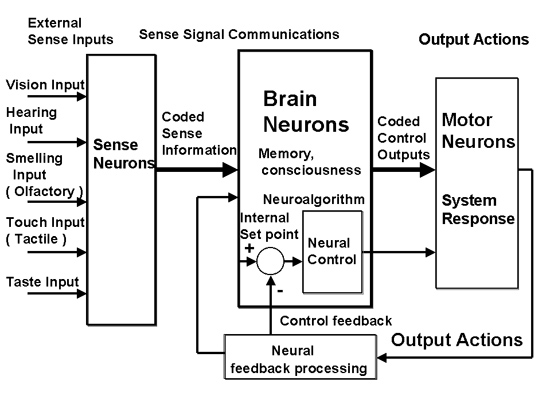

Simplified Neural Logic Block Diagram

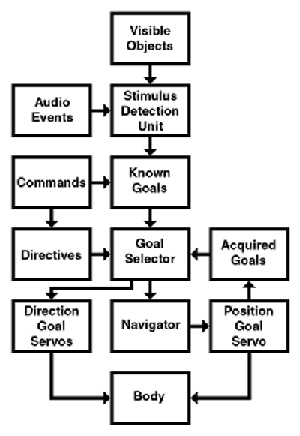

A Modular Framework for Artificial Intelligence Based on Stimulus Response Directives

DARPA SyNAPSE Program

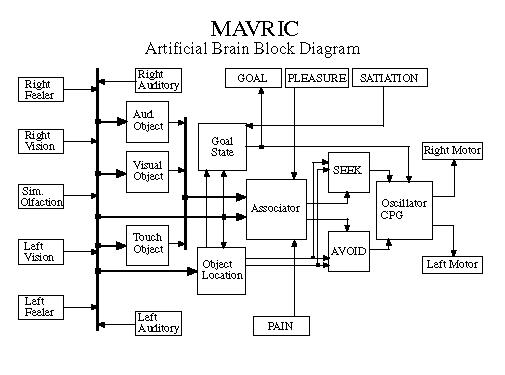

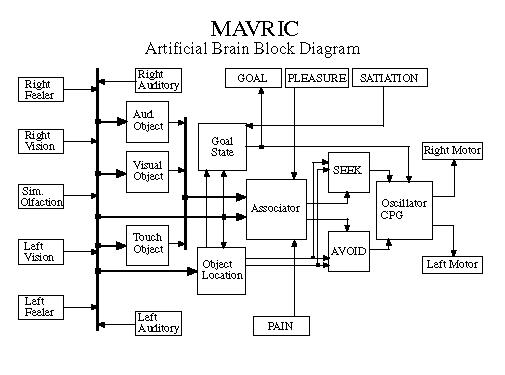

MAVRIC's Brain

Now I don't know about you, but I don't find any of those models particularly impressive. To be fair, some of them, like the MAVRIC model, are not trying to model a full human brain; MAVRIC is actually used in an autonomous robot vehicle. And the DARPA SyNAPSE model just looks like a complicated mess. I'm not really sure what it's supposed to achieve, but I doubt it achieves it. The Spaun model is supposed to function really well, but it's a very simple model that leaves a lot to be desired.

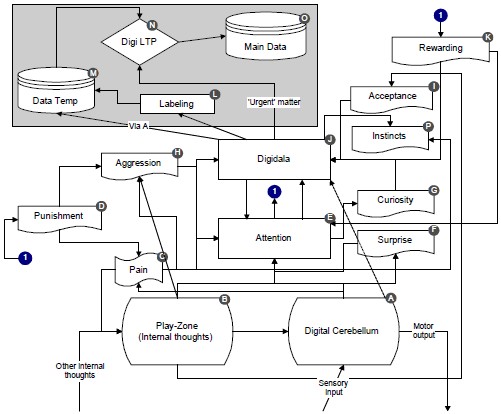

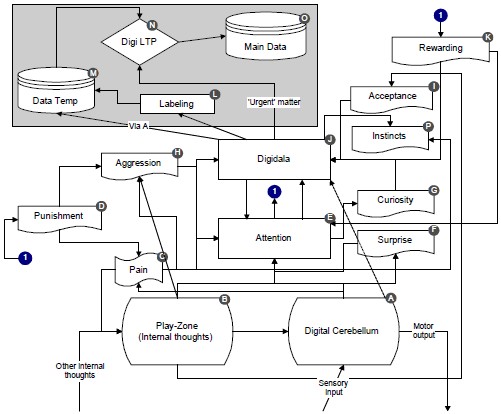

In my opinion the most impressive model is the second one in my list, because it takes into account more abstract concepts like imagination and emotional drives like curiosity. But it's still quite a messy model with no clear flow and I don't think it has ever been built. The project website doesn't seem to be online any more so I assume the developer ditched the project before he got around to actually implementing the idea.

Personally I think we can do much better than these models, and I thought it would be an interesting and fun idea to attempt to create my own model of a theoretically conscious brain which has the ability to think and make decisions by its own free will. I would also be interested to see any models that other members here can come up with because ATS members tend to think outside of the box and they have a higher than average ability to understand the way the human mind works.

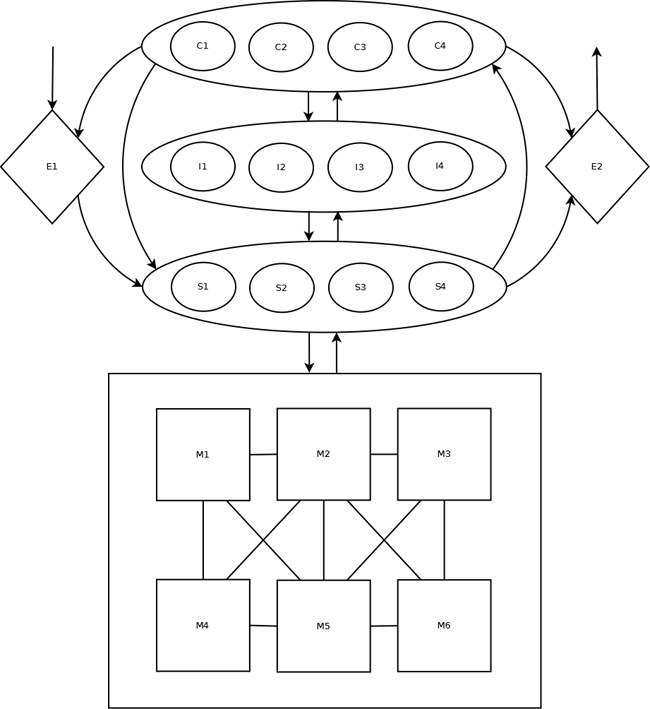

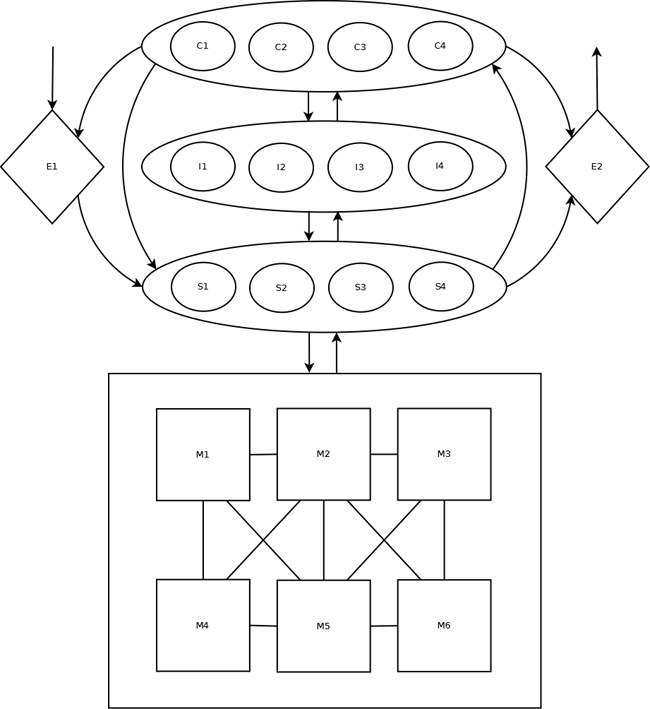

Here is the model I came up with:

E1: sensory input encoder - encodes the input sensory data and passes it to the subconscious layer.

E2: impulse output encoder - encodes the output impulse data to produce conscious and subconscious actions.

C1: decision processor - makes use of C2, C3, and C4 to make decisions and produce output impulses.

C2: imagination processor - simulates the senses to produce internal imagination (inner visualization etc).

C3: prediction processor - makes predictions about future events to help make better decisions.

C4: randomization processor - randomizes input data to generate unpredictable visualizations and decisions.

I1: spacial processor - computes spacial (space) data to help the brain understand its position and surroundings.

I2: temporal processor - computes temporal (time) related data to help the brain understand time related events.

I3: logic processor - performs simple logic and math calculations for many different crucial brain functions.

I4: language processor - computes language related data to help the brain understand conceptual meanings.

S1: pattern processor - performs analysis on input data to find patterns and symbols of meaning within complex data.

S2: reward/penalty processor - regulates the pleasure and pain signals issued to the conscious mind.

S3: emotional processor - regulates emotional state of the brain and controls the reward/penalty processor.

S4: system processor - controls unconscious system processes (e.g. heartbeat) and things that keep the brain functioning.

M1: language memory - stores words and their associations with other words to form the basis of concepts.

M2: temporal memory - stores short and long term information about temporal events and their association with M3 memories.

M3: spacial memory - stores short and long term information about spacial events and the location of objects within space.

M4: goal memory - stores information about short term goals and long term goals and prime directives (instincts).

M5: conceptual memory - stores a vast array of associations between all other memory banks to form conceptual models.

M6: pattern memory - stores data relating to common and important patterns which need to be located in sensory input data.

Now I will try to give a more general explanation of how this model is supposed to work and how each component functions. Firstly, the 3 oval/ellipse shapes which contain the smaller circles are what I like to think of as the 3 layers of consciousness: there is the Conscious layer (top oval), the Intermediate layer (middle oval), and the Subconscious layer (bottom oval). Note that the labelling of the inner circles (C, I, S) corresponds to the first letters of those layer names. The small circle units are what really do all the work.
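To make the labelling scheme concrete, here is a minimal sketch of the component list as a lookup table. The class and function names (`Group`, `Component`, `codes_in`) are made up for illustration and are not part of the original model; only the labels and component names come from the list above.

```python
from dataclasses import dataclass
from enum import Enum


class Group(Enum):
    # The value is the prefix letter used in the component labels.
    ENCODER = "E"
    CONSCIOUS = "C"
    INTERMEDIATE = "I"
    SUBCONSCIOUS = "S"
    MEMORY = "M"


@dataclass(frozen=True)
class Component:
    code: str  # label from the post, e.g. "C1"
    name: str

    @property
    def group(self) -> Group:
        # The first letter of the label identifies the group.
        return Group(self.code[0])


COMPONENTS = [
    Component("E1", "sensory input encoder"),
    Component("E2", "impulse output encoder"),
    Component("C1", "decision processor"),
    Component("C2", "imagination processor"),
    Component("C3", "prediction processor"),
    Component("C4", "randomization processor"),
    Component("I1", "spacial processor"),
    Component("I2", "temporal processor"),
    Component("I3", "logic processor"),
    Component("I4", "language processor"),
    Component("S1", "pattern processor"),
    Component("S2", "reward/penalty processor"),
    Component("S3", "emotional processor"),
    Component("S4", "system processor"),
    Component("M1", "language memory"),
    Component("M2", "temporal memory"),
    Component("M3", "spacial memory"),
    Component("M4", "goal memory"),
    Component("M5", "conceptual memory"),
    Component("M6", "pattern memory"),
]


def codes_in(group: Group) -> list[str]:
    """Return the labels of every component belonging to one group."""
    return [c.code for c in COMPONENTS if c.group is group]
```

For example, `codes_in(Group.INTERMEDIATE)` lists the four processors shared by the conscious and subconscious layers.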

All 3 of these layers can be thought of as processors: they carry out computations on input data and output different data. I started off with a model which only had a conscious layer and a subconscious layer, but I found that the model worked better with an intermediate layer. The intermediate layer is the part of consciousness which is shared between the subconscious mind and the conscious mind, because the processors it contains (the smaller circles) perform functions which are useful for both the conscious mind and the subconscious mind.

For example, the logic processor is needed by both the conscious layer and the subconscious layer because they both need to compute simple math operations, and the temporal processor is required by both layers because they both need the ability to manage time based events. The same thing applies to the language processor. The subconscious mind needs to be able to process language related data, but the conscious mind also needs to process language related data in order to produce an internal monologue which uses language to ponder conceptual models.

The internal monologue is achieved via the imagination processor, which is able to simulate auditory input and should theoretically produce that inner voice we hear in our head. But the imagination processor doesn't just simulate auditory events, it should be capable of simulating all the senses. The most important of these is probably visual simulation, which allows us to picture objects inside our mind's eye even if our eyes are closed. Empathy is simply us trying to simulate the feelings and thoughts of another person.

Spaun, the new human brain simulator

Creating an Artificial Brain in Software

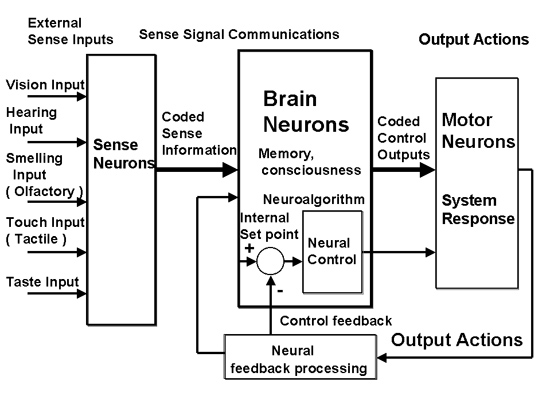

Simplified Neural Logic Block Diagram

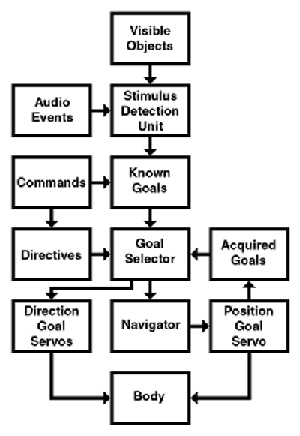

A Modular Framework for Artificial Intelligence Based on Stimulus Response Directives

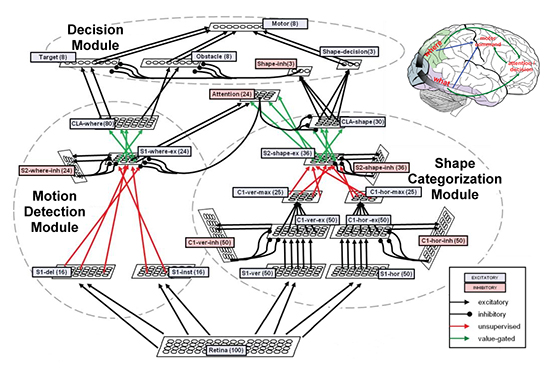

DARPA SyNAPSE Program

MAVRIC's Brain

Now I don't know about you, but I don't find any of those models particularly impressive. To be fair, some of them, like the MAVRIC model, is not trying to model a full human brain, it's actually used in an autonomous robot vehicle. And the DARPA SyNAPSE model just looks like a complicated mess, I'm not really sure what it's supposed to achieve but I doubt it achieves it. The Spaun model is supposed to function really well, but it's a very simple model that leaves a lot to be desired.

In my opinion the most impressive model is the second one in my list, because it takes into account more abstract concepts like imagination and emotional drives like curiosity. But it's still quite a messy model with no clear flow and I don't think it has ever been built. The project website doesn't seem to be online any more so I assume the developer ditched the project before he got around to actually implementing the idea.

Personally I think we can do much better than these models, and I thought it would be an interesting and fun idea to attempt to create my own model of a theoretically conscious brain which has the ability to think and make decisions by its own free will. I would also be interested to see any models that other members here can come up with because ATS members tend to think outside of the box and they have a higher than average ability to understand the way the human mind works.

Here is the model I came up with:

E1: sensory input encoder - encodes the input sensory data and passes it to the subconscious layer.

E2: impulse output encoder - encodes the output impulse data to produce conscious and subconscious actions.

C1: decision processor - makes use of C2, C3, and C4 to make decisions and produce output impulses.

C2: imagination processor - simulates the senses to produce internal imagination (inner visualization etc).

C3: prediction processor - makes predictions about future events as to help make better decisions.

C4: randomization processor - randomizes input data to generate unpredictable visualizations and decisions.

I1: spacial processor - computes spacial (space) data to help the brain understand it's position and surroundings.

I2: temporal processor - computes temporal (time) related data to help the brain understand time related events.

I3: logic processor - performs simple logic and math calculates for many different crucial brain functions.

I4: language processor - computes language related data to help the brain understand conceptual meanings.

S1: pattern processor - performs analysis on input data to find patterns and symbols of meaning within complex data.

S2: reward/penalty processor - regulates the pleasure and pain signals issued to the conscious mind.

S3: emotional processor - regulates emotional state of the brain and controls the reward/penalty processor.

S4: system processor - controls unconscious system processes (eg heart beat) and things that keep the brain functioning.

M1: language memory - stores words and their associations with other words to form the basis of concepts.

M2: temporal memory - stores short and long term information about temporal events and their association with M3 memories.

M3: spacial memory - stores short and long term information about spacial events and the location of objects within space.

M4: goal memory - stores information about short term goals and long term goals and prime directives (instincts).

M5: conceptual memory - stores a vast array of associations between all other memory banks to form conceptual models.

M6: pattern memory - stores data relating to common and important patterns which need to be located in sensory input data.

Now I will try to give a more general explanation of how this model is supposed to work and how each component functions. Firstly, the 3 oval/ellipse shapes which contain the smaller circles are what I like to think of as the 3 layers of consciousness: there is the Conscious layer (top oval), the Intermediate layer (middle oval), and the Subconscious layer (bottom oval). Note the labelling of the inner circles C,I,S corresponds to the bolded letters in the last sentence, the small circle units are what really do all the work.

All 3 of these layers can be thought of as processors, they carry out computations on input data and output different data. I started off with a model which only had a conscious layer and a subconscious layer, but I found that the model worked better with an intermediate layer. The intermediate layer is the part of consciousness which is shared between the subconscious mind and the conscious mind, because the processors it contains (the smaller circles) perform functions which are useful for the conscious mind and the subconscious mind.

For example the logic processor is needed by both the conscious layer and the subconscious layer because they both need to compute simple math operations, and the temporal processor is required by both layers because they both need the ability to manage time based events. The same thing applies for the language processor, the subconscious mind needs to be able to process language related data, but the conscious mind also needs to process language related data in order to produce an internal monologue which uses language to ponder conceptual models.

The internal monologue is achieved via the imagination processor, it is able to simulate auditory input which should theoretically produce that inner voice which lets us hear a voice in our head. But the imagination processor doesn't just simulate auditory events, it should be capable of simulating all the senses. The most important of which is probably visual simulation, which allows us to picture objects inside our minds eye even if our eyes are closed. Empathy is simply us trying to simulate the feelings and thoughts of another person.

edit on 11/12/2013 by ChaoticOrder because: (no reason given)

Now the way in which I have designed this brain model to have free will is fairly simple, and it revolves around the randomization processor. This processor should be able to produce truly random data, meaning the type of randomness you'd get from a quantum RNG. To get a proper understanding of why I think this is crucial to the design you might want to take a look at my thread titled Determinism and Consciousness. This is basically the foundation which will allow the brain to have original and creative thoughts which cannot be predicted.
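As a rough sketch of how the randomization processor (C4) could feed the decision processor (C1), the snippet below picks an action at random, weighted by a score for each option. Two assumptions to note: the scores stand in for whatever the prediction processor would supply, and `secrets.SystemRandom` draws on the operating system's entropy source, which is only a stand-in for a quantum RNG and not the real thing. The function name `decide` is made up for the example.

```python
import secrets


def decide(options, rng=None):
    """Pick one action, weighted by its predicted value.

    options: dict mapping an action name to a non-negative score
             (hypothetical output of the prediction processor).
    rng:     any object with a random.Random-style choices() method;
             defaults to the OS entropy source as a stand-in for a
             quantum RNG (an assumption, not true quantum randomness).
    """
    rng = rng or secrets.SystemRandom()
    actions = list(options)
    weights = [options[a] for a in actions]
    # Higher scored actions are more likely, but never guaranteed,
    # so the outcome cannot be predicted from the scores alone.
    return rng.choices(actions, weights=weights, k=1)[0]
```

Calling `decide({"explore": 0.7, "rest": 0.3})` will usually return "explore", but not always, which is the unpredictability the model relies on.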

You might be wondering why the sensory input from E1 is passed to the subconscious layer and not the conscious layer. This is because our subconscious mind has the ability to filter out things which would harm the conscious psyche. For example you could witness something horrific, but you might not actually see it even though it's happening right in front of you, because your subconscious mind has blocked it out so that you don't have to experience it. So this is why the sensory data must first pass through the subconscious layer.

The diamond shaped unit on the left side is the sensory input encoder, which encodes the sensory data inflow and passes it to the subconscious layer. You might also wonder why the conscious layer feeds back into the E1 unit, this is because the conscious mind has the ability to filter out certain senses and focus on specific senses. For example when you get hurt you can focus on other things to take your mind off the pain, and if you focus intently on one specific sense, like your sense of hearing, that sense will become sharper whilst the other senses become dampened.
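The feedback from the conscious layer into E1 can be pictured as a set of gains applied to each sense. This is only a sketch under assumed numbers: the `boost` and `damp` factors and the function name `encode` are invented for illustration, and the post does not say how strong the effect should be.

```python
def encode(senses, focus=None, boost=1.5, damp=0.5):
    """Scale raw sensory intensities according to conscious focus.

    senses: dict mapping a sense name to its raw intensity.
    focus:  the sense the conscious layer is concentrating on, if any.
    boost/damp: hypothetical gain factors for the focused sense and
                for every other sense.
    """
    if focus is None:
        # No conscious focus: pass the data through unchanged.
        return dict(senses)
    return {
        name: value * (boost if name == focus else damp)
        for name, value in senses.items()
    }
```

With `focus="hearing"`, the hearing signal is sharpened while pain and the other senses are dampened, matching the example in the paragraph above.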

The diamond shape unit on the right side of the diagram is the impulse encoder, which takes impulses sent from the subconscious layer and the conscious layer and outputs an encoded signal which creates the action/motor responses. This is how the brain is able to turn thoughts into actions and how it can interact with the external world, which is very important for consciousness imo. The subconscious layer also has direct contact with this unit because the subconscious mind controls all sorts of unconscious impulses, like the beat of our heart.

Our heart keeps beating without us having to think about it. It's an unconsciously controlled motor impulse which the conscious mind has no control over. But there are many motor impulses that the conscious mind does have control over, like the movement of your arms and legs and the movement of your lips when you speak. And then there are other, weirder impulses which are controlled by both layers, like breathing for example, which can alternate between conscious and subconscious control. If you stop thinking about breathing, you will still continue to breathe.

Now the bottom part of the diagram, the large square area containing the smaller squares, is the memory module area as you may have already guessed. These components hold data, they don't process data. The oval shapes can be thought of as processors, the square shapes can be thought of as data storage units which can be read and written to by the processor units, and the diamond shapes are just encoding units. Note however that the only unit with direct access to the memory modules is the subconscious layer of the brain.

This is because the conscious mind does not have direct access to memories and other stored data. Your conscious mind doesn't know where all your memories are stored, and you can't delete specific memories just by thinking about it. When you want to recall a certain memory, the conscious layer will tell the subconscious layer that it wants a memory related to some set of data, and the subconscious layer will do a bunch of computations to pull up the necessary memories based on the information provided to it by the conscious layer.

This is why the conscious layer has a direct connection to the subconscious layer, so that it can request information from the memory modules, which the subconscious mind will locate and then return to the conscious layer. The subconscious layer might also choose to filter out certain memories requested by the conscious layer if those memories are deemed to be too disturbing or devastating to the psyche of the conscious mind. This is how memories can be suppressed and hidden even though the conscious mind didn't attempt to delete those memories from storage.
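The request-and-filter arrangement described above can be sketched as two small classes. Everything here is a hypothetical illustration: the `distress` score, the `distress_limit` threshold and the tag-based lookup are assumptions made so the example runs, not details from the model itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    text: str
    tags: frozenset          # cues that can trigger this memory
    distress: float = 0.0    # hypothetical 0..1 score of how disturbing it is


class SubconsciousLayer:
    """The only layer holding a reference to the memory store."""

    def __init__(self, memories, distress_limit=0.8):
        self._memories = list(memories)
        self._distress_limit = distress_limit

    def recall(self, cue):
        matches = [m for m in self._memories if cue in m.tags]
        # Suppressed memories stay in storage but are never returned.
        return [m.text for m in matches if m.distress <= self._distress_limit]


class ConsciousLayer:
    """Has no memory store of its own; it can only ask the subconscious."""

    def __init__(self, subconscious):
        self._subconscious = subconscious

    def remember(self, cue):
        return self._subconscious.recall(cue)
```

The conscious layer never sees the suppressed entry, yet nothing was deleted: the memory is still sitting in the subconscious layer's store.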

Now when the subconscious mind wants to access the memory modules, it can do so only through the first 3 modules (the language, temporal, and spacial modules). If you want to remember where something is located you will need to access the spacial memory module, but you might also need to access the temporal memory module to remember where something was located at a specific point in time, that is why the temporal module is strongly linked to the spacial module. And the spacial and temporal modules are linked to the conceptual module because all memories are stored as a series of concepts.

The way the memory modules are connected together is important. For example the language memory module has 3 connections: one to the temporal memory module, one to the goal memory module, and one to the conceptual memory module. So each word in the language memory module can have a connection to events which took place at some time in the life of the brain, a connection to certain goals in the goal memory module, and connections to the conceptual memory module to help form a conceptual understanding of the word.

If you want to recall the meaning of a word you need to go through the language module, and then follow links through to the conceptual module. The language module by itself even holds a degree of conceptual structure because each word in the language memory module will have links to other similar words. The more similarities the words have in common, the stronger the link between them is. The conceptual module is very much the same, but all it contains are associations and links between the data in the other modules.
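The wiring between the memory modules can be written down as a small graph and searched from the three entry modules. This sketch only includes the links spelled out so far in this post (language to temporal, goal and conceptual; temporal to spacial; spacial and temporal to conceptual). The pattern memory is left out because its connections are not described, and the function names are made up.

```python
from collections import deque

# Undirected links between memory modules, as described in the text.
LINKS = {
    ("language", "temporal"),
    ("language", "goal"),
    ("language", "conceptual"),
    ("temporal", "spacial"),
    ("temporal", "conceptual"),
    ("spacial", "conceptual"),
}

# The subconscious layer can only enter through these three modules.
ENTRY_MODULES = ("language", "temporal", "spacial")


def neighbours(module):
    """Modules directly linked to the given one, in a stable order."""
    linked = {b for a, b in LINKS if a == module}
    linked |= {a for a, b in LINKS if b == module}
    return sorted(linked)


def path_to(target):
    """Breadth-first search for a shortest route from an entry module."""
    queue = deque([entry] for entry in ENTRY_MODULES)
    seen = set(ENTRY_MODULES)
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in neighbours(path[-1]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # not reachable through the links listed above
```

Here `path_to("conceptual")` goes in through the language module and follows one link, which is the word-meaning lookup described above.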

The conceptual memory module helps connect everything together so that the brain can form concepts based on things it has experienced and knowledge that it has gained during its life. And like the human brain, these associations and links should become stronger or weaker depending on how much they are used. In this way the brain can evolve its concepts and create stronger links between things which are more related, and it can forget about incorrect concepts or concepts that it no longer really cares about or finds irrelevant.
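One simple way to get links that strengthen with use and fade without it is to keep a weight per link, nudge it upward on every use and decay everything on a regular tick. The numbers (`gain`, `decay`, `floor`) and the class name are assumptions chosen for the example; the post does not specify a learning rule.

```python
class Associations:
    """Link strengths between concepts that grow with use and fade over time."""

    def __init__(self, gain=0.2, decay=0.9, floor=0.05):
        self.gain = gain      # how much each use closes the gap to 1.0
        self.decay = decay    # multiplier applied to every link per tick
        self.floor = floor    # links weaker than this are forgotten
        self.weights = {}

    def use(self, a, b):
        # Links are undirected, so the pair is stored as a frozenset.
        key = frozenset((a, b))
        w = self.weights.get(key, 0.0)
        self.weights[key] = w + self.gain * (1.0 - w)

    def tick(self):
        # Weaken every link, then drop the ones that fell below the floor.
        decayed = {k: w * self.decay for k, w in self.weights.items()}
        self.weights = {k: w for k, w in decayed.items() if w >= self.floor}

    def strength(self, a, b):
        return self.weights.get(frozenset((a, b)), 0.0)
```

A link that keeps getting used approaches a strength of 1.0, while one that is used once and then ignored eventually drops out of the table, which is the forgetting behaviour described above.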

The point of having these types of associations is so that the brain can build its own understanding of the world. Most AI systems which are designed to have conversations with humans are not giving you answers which they have produced according to concepts that they understand, they are giving you scripted responses which correspond to pre-determined phrases. If you say something such a system wasn't programmed to understand, then it will give you an answer which is completely wrong and unintelligible, and that breaks the flow of the conversation.

An AI system which truly understands the concepts behind the words you are saying would not do that. If it didn't understand something you said, it would ask you to clarify what you mean or explain the thing it doesn't understand, and then the next time it was asked about something similar it could give an intelligent response. And due to the imaginative and unpredictable nature of the model, it won't give you the same response every time. When I was a child I didn't give the same answers that I do now, because I didn't know all the things I know now.

The goal memory module is also very important because it holds the aspirations and instinctual goals of the brain so that it can make decisions based on those goals. Some of the instinctual drives would include self preservation, race preservation, a desire for knowledge, a desire for social interaction, and a desire for control over the external environment (more important than you might think). A recent study suggests "that intelligent behavior stems from the impulse to seize control of future events in the environment".

The long and short term goals could be pretty much anything. Most people in this world have different goals, and those goals are based on our experiences and accumulated knowledge, hence the connection to the temporal memory module. These goals are stored as conceptual ideas which can typically be expressed in words, hence the link to the conceptual and language modules. When a short or long term goal has been completed it can be stored in the temporal memory module as something which was achieved in the past, and replaced with another goal.
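The hand-off of a finished goal from goal memory to temporal memory might look something like this. It is a minimal sketch: the class names, the `complete_goal` helper and the "achieved:" record format are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class GoalMemory:
    # Instinctual drives are fixed; active goals come and go.
    instincts: tuple = ("self preservation", "desire for knowledge")
    active: list = field(default_factory=list)


@dataclass
class TemporalMemory:
    events: list = field(default_factory=list)


def complete_goal(goals, temporal, done, replacement=None):
    """Move a finished goal into temporal memory and optionally replace it."""
    goals.active.remove(done)  # raises ValueError if it was never a goal
    temporal.events.append(f"achieved: {done}")
    if replacement is not None:
        goals.active.append(replacement)
```

The instinctual drives are kept separate from the active goals here so that completing a goal never removes a prime directive.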

Well I think that just about covers 90% of my model and explains the reasoning behind it... if it doesn't I don't care because this thread is already long enough as it is. If you have any more questions or don't understand something about my model just ask me about it. If you have any ideas of how my model is wrong or how it can be improved I am all ears. Or if you have your own model that you want to share that would be great. I just hope this thread doesn't die off with only a small amount of discussion because this is a fascinating topic.

edit on 11/12/2013 by ChaoticOrder because: (no reason given)

Interesting ideas. AI has always been something I wished I'd learn more about. Ofc, I was always thrilled by AI in science fiction. Somehow I can never get myself to dig into the actual science. Primarily, real science is hard. Reading science fiction is easy. Secondly, I can't do everything. Thirdly, I'm not a genius, just someone that likes to be mesmerized by a good (yet simple) mystery or story.

I could sit here for a couple hours and go over everything you wrote and then over the next couple days maybe produce my own idea of what an artificial brain is, but honestly, unless I spent years of time doing it and read all the professional literature on the subject, it's probably just a different way to waste time. Maybe this is just me saying I'm not interested enough to do something like this.

I saw this guy on youtube some years ago (these're great videos)...

edit on 11-12-2013 by jonnywhite because: (no reason given)

Fascinating subject and it looks like you know your stuff,

I say looks like because 95% of it went over my head LOL

Just wanted to give the thread a bump and I'll check back in, but will leave the discussion to others who know what they are talking about.

S&F

reply to post by jonnywhite

Thanks for the videos, they look interesting. I will get around to watching them shortly.

reply to post by IkNOwSTuff

Well I don't really know anything about brain science or neurology or whatever it is called. I just developed this model by guesswork and self-analysis. Last night as I was trying to get some sleep, I was literally trying to analyze the way I was recalling my memories. Like I'd recall a memory, and then I'd try to analyze what data allowed me to pull up that memory and how it was accessed. That is how I came to the conclusion that our memories can be accessed in 3 ways: through language recollection, temporal recollection, or spatial recollection.

For example, if I think about the word "balloon" it can trigger a recollection of certain memories which are linked to the concept of a balloon. Or if I start thinking about when I was in grade 9 of school it can trigger certain temporal memories that occurred within that period of time. Or if I start thinking about the shop down the street, that allows a spatial recollection which triggers memories related to that place in space. And this is why I said that memories can only be accessed through the first 3 memory modules.
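
One rough way to picture the three access routes is a memory store with three separate indexes. The sketch below is just an illustration in Python under my own assumptions: `Memory`, `MemoryStore` and the `recall_by_*` methods are hypothetical names, and simple text labels stand in for concepts, time periods and places.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Memory:
    description: str
    concepts: Tuple[str, ...]   # words/concepts linked to the memory
    period: str                 # rough time label, e.g. "grade 9"
    place: str                  # rough location label


class MemoryStore:
    """Every memory is filed under three indexes: language, temporal, spatial."""

    def __init__(self) -> None:
        self.by_concept: Dict[str, List[Memory]] = defaultdict(list)
        self.by_period: Dict[str, List[Memory]] = defaultdict(list)
        self.by_place: Dict[str, List[Memory]] = defaultdict(list)

    def store(self, memory: Memory) -> None:
        for concept in memory.concepts:
            self.by_concept[concept].append(memory)
        self.by_period[memory.period].append(memory)
        self.by_place[memory.place].append(memory)

    # .get() is used so that a failed recall does not create an empty entry.
    def recall_by_concept(self, word: str) -> List[Memory]:
        return list(self.by_concept.get(word, []))

    def recall_by_period(self, period: str) -> List[Memory]:
        return list(self.by_period.get(period, []))

    def recall_by_place(self, place: str) -> List[Memory]:
        return list(self.by_place.get(place, []))


store = MemoryStore()
party = Memory("birthday party", ("balloon", "cake"), "grade 9", "home")
errand = Memory("bought a balloon", ("balloon",), "last week", "shop down the street")
store.store(party)
store.store(errand)

print(store.recall_by_concept("balloon"))             # both memories
print(store.recall_by_period("grade 9"))              # only the party
print(store.recall_by_place("shop down the street"))  # only the errand
```

The same memory object sits in all three indexes, so any one cue (a word, a time, or a place) is enough to pull it up.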

edit on 11/12/2013 by ChaoticOrder because: (no reason given)

One thing that does need to be better explained in my model however is the way the individual processors in each layer are connected together and how they work together to compute input and produce an output. I have a general idea of how it should work but I haven't really gotten around to producing a detailed description yet. If I do I will add the details to this thread.

reply to post by jonnywhite

So far the first video seems great, but the audio quality is terrible. Apparently this is a more up-to-date video of the same lecture and has better audio quality: www.infoq.com...

edit on 11/12/2013 by ChaoticOrder because: (no reason given)

reply to post by ChaoticOrder

Asking if an artificial brain can be conscious is like asking if a submarine can swim. It's almost meaningless if you think about it. That's why the Turing Test will never be passed. Without first being a human being, no machine can think like a human being. It will always think like a machine.

Sorry I have nothing to add.

reply to post by Aphorism

The human brain can be simulated by a computer, and that is all that matters. Just because I'm made of organic matter and a computer is made of steel and silicon doesn't mean the computer is incapable of becoming self aware; all that is needed is the right model for self-awareness. The real question in my mind is this: is the grey matter in my head all that makes up my consciousness? There's a very high probability in my opinion that our memories are somehow stored outside of our brain in some sort of field, and that is why we are able to store such an unbelievable amount of information in our memories. If that is the case then we may not be able to simulate consciousness properly until we understand the mechanism behind this. But other than that there is no valid reason why an artificial brain cannot be made conscious.

Edit: Furthermore, it's not like we need to create an exact model of the human brain for it to be conscious. There are often multiple solutions to the same problem, and the same thing would apply to consciousness. If there is a sentient alien species anywhere out there in the universe, I am very sure that their brains will not be exactly like ours. They may have similarities but they will be very different. A submarine can move through water perfectly fine; it just does it in a slightly different way than a human moves through water. Likewise, a computer may become self aware like us, but it doesn't need to do it in exactly the same way that our organic brain does it.

edit on 12/12/2013 by ChaoticOrder because: (no reason given)

The amount of work put into building something like an AI seems like it's worth it. The amount of things that it could achieve is nearly enough to inspire me to look into more things like this. However, wouldn't an AI most likely be based upon a person, meaning that it's still most likely to make the same mistakes that we make?

Let's just hope it isn't smart enough to take over the world.

reply to post by blueyezblkdragon

It would be amazing if it didn't make the mistakes we make. But I don't think they would ever stand a chance against us because all it takes is a strong EM pulse to kill any electronic device within a very large radius, and the moment they started to show any hostility towards us we could destroy them before they got organized enough to pose a real threat. Plus we'd be like gods to them, their creators, so they'd have at least some degree of respect for us.

edit on 12/12/2013 by ChaoticOrder because: (no reason given)

reply to post by jonnywhite

Lol you've got me addicted to AI lectures now. I can't get enough of this stuff, it's so fascinating. If you know of any more cool AI videos feel free to share them. They are very helpful.

Here's a really insightful TED talk I came across (more to do with the human brain than AI):

edit on 12/12/2013 by ChaoticOrder because: (no reason given)

Self awareness is not as difficult as it is made out to be. The subject is simply aware that all sensory or data input is based on the locus of this awareness.

They are the input and they are not the input. When they are fully aware of the stimulus they are the stimulus, and while the stimulus is occurring a feeling codification takes place: a positive, negative, or neutral code that creates a compounded craving, varying degrees of flight response, or a null, where the stimulus does not mean anything significant.

There is always a reaction to stimuli, no matter how slight. If you've ever seen videos of our most advanced robot intelligences, you'll notice they have zero reaction when someone pops open a panel or removes the head for an adjustment. All input needs to be coded to trigger a response. For the most lifelike (human) responses, this means coping mechanisms, or an adaptation to stimulus adversity from a baseline "situation normal" continuity. The baseline continuity is an all-systems-normal feedback loop that continuously changes based on the continuous accumulation of sensory/datum input. When it is overwhelmed with sensory input, a snafu and fubar coping-mechanism-building response must occur, to be most human/animal like.
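
To make the idea a little more concrete, here is a very rough sketch in Python of a baseline feedback loop with feeling codes. Everything in it is an assumption for illustration only: the `Baseline` class, the numeric stimulus scale, and the `rate`, `neutral_band` and `overload` settings are all hypothetical, and the mapping (positive gives craving, negative gives flight, neutral gives null) is just one reading of the description above.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    level: float = 0.0          # running "situation normal" estimate
    rate: float = 0.1           # how quickly the baseline adapts (assumed value)
    neutral_band: float = 0.05  # deviations this small mean nothing (assumed value)
    overload: float = 1.0       # deviations this large trigger coping (assumed value)

    def feel(self, stimulus: float) -> str:
        """Code a stimulus against the baseline, then let the baseline adapt."""
        deviation = stimulus - self.level
        # Feedback loop: the baseline drifts toward whatever keeps arriving.
        self.level += self.rate * deviation
        if abs(deviation) > self.overload:
            return "coping"     # overwhelmed: a coping mechanism has to kick in
        if deviation > self.neutral_band:
            return "craving"    # positive feeling code
        if deviation < -self.neutral_band:
            return "flight"     # negative feeling code
        return "null"           # neutral: the stimulus does not mean anything


mind = Baseline()
reactions = [mind.feel(s) for s in (0.0, 0.5, -0.5, 5.0)]
print(reactions)
```

Because the baseline keeps moving toward recent input, the same stimulus repeated often enough ends up producing a null, which is one simple way to get adaptation out of the loop.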

Which brings up another issue: we are by no means perfect in our human computations of experience. We forget chunks of data, we accept corrupt/false data from non-reliable sources out of feelings of kinship, and we become scarred and deluded by most of the experience that forms this "self" of likes and dislikes. In children many likes and dislikes are not self-created but come from social interactions with others, the "herd mentality". As one ages these likes and dislikes can continue on a childlike course, with the true self hidden behind a facade, which is a social coping mechanism arising from approval-seeking behavior. Those more self-actualized not only know themselves but others know them too, as their real self is not hidden from others' view or experience. Think of the preacher representing the highest moral ground on Sunday, yet an adulterous molester during the rest of the week. In other words everyone is a walking uncertainty; no normal baseline exists except in appearances.

I do not think it would be difficult to code the above into an artificial intelligence, but I do believe their flow charts are greatly lacking in how our flow of consciousness works. There need to be crossed wires, random access memory and many other foibles that produce all the eccentricities it takes to infer a real home-grown personality.

The problem is: do we want, or really have a need for, a bunch of paranoid androids? 7 billion and counting is more than enough.

