
In a very unexpected announcement, Google has revealed that it moved the world's largest network onto OpenFlow.

This news was missed by many because of the tragedy in Boston.

My thoughts go out to my American friends and the families of the victims.

In an announcement that went largely unnoticed by the general population, Google has revealed that it converted its WAN to run on OpenFlow, a new Software Defined Networking (SDN) standard.

The interesting thing is that this was done without any shutdown or downtime, and the new system uses a new paradigm in network architecture to carry communications between the data centres that house the servers and switches underpinning the Google server network.

First, I will supply the video of the announcement.

Brief explanation: the Google team has managed to roll out a full SDN OpenFlow implementation without taking down any data or server networks.

They did this without anyone noticing!

In this next video, Vint Cerf explains why SDN will be the next networking standard and how big the development of Software Defined Networking is for the internet at large.

Skip to 15:00 for Vint.

Because the designers wanted an open platform for standards-based transport, innovators from many areas can now build on top of this new platform.

The designers decided to change the way networking was implemented and realised that permission-free network transports could be designed that would make it possible to quickly reconfigure network flows and paths, enabling traffic shaping across networks.

And because it is open, anyone who wants to is free to innovate over the transport and create new tools for this new networking protocol.

This transport can affect the operation of many layers of the internet and can also be used for efficiency gains in:

optical transports,

broadband transports,

wireless transports,

satellite transports,

software transports.

The beauty of the transport is that it was designed with interoperability in mind, so current switching and routing gear can be used with it without replacing the entire backbone infrastructure that supports the operation of the internet.

The other thing the designers did was to enable a much more secure platform for network functions and programmable interfaces. The idea of standardising the transport was to enable fast-paced innovation and very fast uptake by industry.

So what are the benefits of the new networking platform?

Vint described this as a renaissance of the internet.

If anyone wants a high-level overview of how this works or what it means, please feel free to ask anything.

This will be the platform for networking for the foreseeable future. It will enable the internet to become highly fault tolerant, and some implementations have built-in abilities to mitigate DDoS attacks or balance load.

This will enable things like:

high-speed game streaming to your screen,

high-speed browsing of the internet,

fast data transfers,

instant networks across the internet with little or no configuration.

It will also enable the network to keep up with "on demand" virtual server spin-up and load increases/decreases over very short time frames.

There are so many things I could add to this post, but I think I will just answer questions rather than type out all the possibilities.

So please, any questions, ask away.

xploder

edit on 21/4/13 by XPLodER because: (no reason given)

edit on 21/4/13 by XPLodER because: spelling

I have no idea what that means, lol. I was never any good at grasping the networking side of things.

If I plug a blue cable in and I get a green light, I'm happy.

So... what does it really mean? Is it just a software-based routing system? My limited knowledge goes back to '99, using software-based routers set up in Linux.

I'm guessing it's nothing like that. But that's all I can fathom: a more efficient way to manage traffic in a dynamic manner.

I dunno, if it means that I can play online games where the server is in a different country and not have 400 ms pings, then I'm all for it. But as far as I know, that's a limitation of the sea link from Australia to the rest of the world.

I used to tracert to, say, google.com, and every hop would be under 30 ms until we hit the Telstra link out, then over 350 ms. So I dunno how this will affect us, if I'm even close to understanding the little bits I think I might know.

edit on 21-4-2013 by winofiend because: (no reason given)

Originally posted by winofiend

I have no idea what that means, lol. I was never any good at grasping the networking side of things.

If I plug a blue cable in and I get a green light, I'm happy.

It was designed so that you, as a user, still use the internet in exactly the same way, so it's very user friendly.

So... what does it really mean? Is it just a software-based routing system? My limited knowledge goes back to '99, using software-based routers set up in Linux.

Faster internet, lower costs for hosting companies, easier networking across the internet.

(Basic explanation) You split the control plane from the forwarding plane and manage the control plane centrally.

Using a flow controller, you route and switch packets "on the fly" and squash the network services down into the transport layer. It provides an "end to end" network that can perform networking functions over a simplified transport, for simple and fast network connection, routing and link management.
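To make the control/forwarding split a bit more concrete, here is a toy Python model (not OpenFlow itself): the switch only matches packets against a flow table of match-action rules, and on a table miss it asks a central controller, which decides and installs a rule so later packets never leave the switch. The class names, field names and action strings are all hypothetical; real OpenFlow switches talk to a controller over the OpenFlow protocol using messages such as packet-in and flow-mod.

```python
from dataclasses import dataclass, field

@dataclass
class FlowRule:
    """Hypothetical match-action entry: every field in `match` must equal the packet's value."""
    match: dict
    action: str        # illustrative strings such as "output:2" or "drop"
    priority: int = 0

    def matches(self, packet: dict) -> bool:
        return all(packet.get(k) == v for k, v in self.match.items())

class Controller:
    """Control plane: central policy deciding where traffic goes."""

    def __init__(self, routes: dict):
        self.routes = routes  # destination IP -> output port (made-up policy)
        self.misses = 0       # how often a switch had to ask

    def decide(self, packet: dict) -> FlowRule:
        self.misses += 1
        port = self.routes.get(packet["dst_ip"])
        if port is None:
            return FlowRule({"dst_ip": packet["dst_ip"]}, "drop", priority=1)
        # A single rule can match fields from several layers (IP and TCP here).
        return FlowRule({"dst_ip": packet["dst_ip"], "tcp_dst": packet.get("tcp_dst")},
                        f"output:{port}", priority=10)

@dataclass
class Switch:
    """Forwarding plane: only looks packets up in its flow table."""
    controller: Controller
    table: list = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        self.table.append(rule)
        self.table.sort(key=lambda r: r.priority, reverse=True)  # highest priority first

    def forward(self, packet: dict) -> str:
        for rule in self.table:
            if rule.matches(packet):
                return rule.action
        # Table miss: ask the controller (loosely like an OpenFlow packet-in),
        # then install its answer (loosely like a flow-mod) for later packets.
        rule = self.controller.decide(packet)
        self.install(rule)
        return rule.action

ctrl = Controller({"10.0.0.2": 2})
sw = Switch(ctrl)
pkt = {"dst_ip": "10.0.0.2", "tcp_dst": 80}
print(sw.forward(pkt))  # output:2  (first packet: controller consulted)
print(sw.forward(pkt))  # output:2  (later packets: served from the flow table)
print(ctrl.misses)      # 1
```

The point of the design is that the decision logic lives in one place (the controller), while the switches stay simple and fast.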

I'm guessing it's nothing like that. But that's all I can fathom: a more efficient way to manage traffic in a dynamic manner.

There are multiple differences from a virtual switch, in that layers 3-7 can be abstracted (compressed) into the layer 2 transport, and network functions can be sent "on" the same packet as the data required for programs, etc.

I dunno, if it means that I can play online games where the server is in a different country and not have 400 ms pings, then I'm all for it. But as far as I know, that's a limitation of the sea link from Australia to the rest of the world.

The transport would significantly lower latency, to the point where web-based, high-frame-rate games would be playable.

And because you can "packet shape" and "traffic shape" the flows, playing games won't be a problem.

There are protocols, like loss avoidance algorithms, that can speed up delivery of packet data over this transport to make gaming a lag-free experience.

I used to tracert to, say, google.com, and every hop would be under 30 ms until we hit the Telstra link out, then over 350 ms. So I dunno how this will affect us, if I'm even close to understanding the little bits I think I might know.

That's the cool thing about this type of network transport: the network topology is "end to end" and the system is "smart", in that you can manage links and hops so that latency is minimal.
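As a rough illustration of "managing links and hops so that latency is minimal": because the controller holds a view of the whole topology, it can compute the lowest-latency path with an ordinary shortest-path algorithm (Dijkstra's, in this sketch) and then push that path to the switches. The topology, node names and latency figures are made up for the example.

```python
import heapq

def lowest_latency_path(links, src, dst):
    """Dijkstra over a controller's topology view.

    links: dict mapping node -> {neighbour: latency_ms}. Returns (total_ms, path).
    """
    best = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    visited = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for nbr, ms in links.get(node, {}).items():
            new_cost = cost + ms
            if new_cost < best.get(nbr, float("inf")):
                best[nbr] = new_cost
                prev[nbr] = node
                heapq.heappush(heap, (new_cost, nbr))
    if dst not in best:
        return float("inf"), []  # no path known
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return best[dst], path[::-1]

# Hypothetical topology: per-link latency in milliseconds.
topology = {
    "A": {"B": 10, "C": 3},
    "C": {"B": 4, "D": 12},
    "B": {"D": 2},
}
print(lowest_latency_path(topology, "A", "D"))  # (9.0, ['A', 'C', 'B', 'D'])
```

Note that the shortest path here has more hops than the direct-looking A-B-D route; with a global view, the controller optimises for total latency rather than hop count.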

In a normal IP network, all the different services required to "network" with a server are on different levels,

like the link layer, IP layer, transport layer, etc.

Each layer requires its own outbound connections, and one lost link-layer packet could hold up a transport packet.

By abstracting the different network services into a single packet and using loss avoidance, you can increase throughput dramatically. This also helps with latency and removes lag from lost packets.

In theory, there is no reason why the telcos themselves couldn't retrofit their old, slow networks with this design.

xploder

edit on 21/4/13 by XPLodER because: (no reason given)

I did not notice, but there have been some internet traffic problems over the past couple of weeks. So as a website administrator, what does this mean to me? Are there any specific languages or software development approaches that I need to be aware of to make the most of this new development? How am I supposed to structure database development and administration to work in a multiple-server environment? Are there any particular standards that I need to be aware of as a developer, or is this purely the web host's responsibility? Do I just make my single MySQL database and all the virtualization is done under the hood? Are there any specific software versions I need to be careful of?

Once my sinuses decide to play ball and stop going boom boom, I'll have a proper look at what they're doing.

For the average person there will be no change, as the data presented to the application will be the same, but I'd imagine the benefit comes from taking more control away from static routing and older networking protocols, being able to adjust more on the fly, and taking advantage of better ways of doing things that are not implemented in classical routers/switches.

reply to post by XPLodER

This was not done because someone simply made an innovative decision. The reason they did it was to avoid paying Vringo usage rights after being sued by them for patent violations.

I see. Someone did a software upgrade and there was no loss of service. No one noticed? Could that be like migrating 750,000 people who are talking on a telephone from one telephone switch to another while they were on a call, and nothing happened? No dropped calls. Piece of cake. Yeah, technology.

It's like that.

Originally posted by kwakakev

I did not notice, but there have been some internet traffic problems over the past couple of weeks. So as a website administrator, what does this mean to me?

Your hosting provider will, in its next upgrade cycle, be made aware of SDN, or software defined networking.

As an administrator, you may be interested in the extra functionality of being able to use the load-balancing nature of SDN to provide better service and mitigate DDoS attacks. From a bandwidth point of view, you can save by having dynamic allocation of bandwidth. The biggest change for an administrator is that you don't have to manually configure your switches, routers, etc. In theory, you should be able to serve more users over the same network/server infrastructure.
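One common way a controller can spread load is to hash each flow's 5-tuple (source/destination address, protocol, source/destination port) onto a pool of servers, so every packet of one connection sticks to the same server while different connections spread out. This is a generic sketch, not a description of any particular SDN product; the server names, the addresses and the choice of SHA-256 are assumptions for illustration.

```python
import hashlib
from collections import Counter

def pick_server(flow, servers):
    """Map a flow's 5-tuple to one server deterministically (per-flow load balancing)."""
    src_ip, dst_ip, proto, src_port, dst_port = flow
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]

servers = ["web-1", "web-2", "web-3"]  # hypothetical backend pool
flows = [("203.0.113.7", "198.51.100.1", "tcp", 40000 + i, 443) for i in range(3000)]
load = Counter(pick_server(f, servers) for f in flows)
print(load)  # roughly 1000 flows per server; exact counts depend on the hash
```

Keeping a connection pinned to one server matters because splitting a single TCP flow across servers would break it; a real controller would also weigh servers by measured load rather than spreading flows blindly.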

Bear in mind that this is still very new, but it is being used in very large data environments.

Are there any specific languages or software development approaches that I need to be aware of to make the most of this new development?

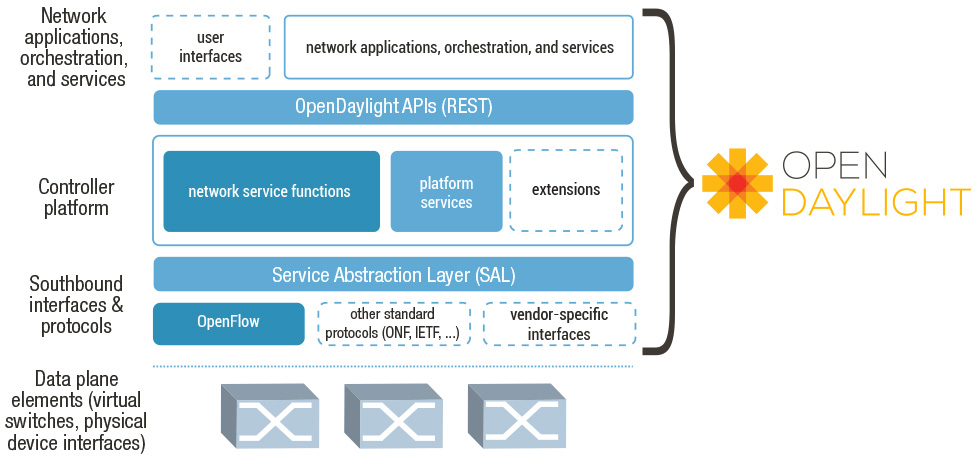

If you are into large deployments, you should look at OpenFlow and OpenStack:

OpenFlow for network fabric management, and OpenStack for server hardware management and deployment of compute resources, spinning up virtual servers, etc.

These are both new technology developments and should be explored before committing to deployment.

The idea here is that things are changing, but you can stay on the right side of the curve by investigating what others have done and recognising that SDN can be customised for almost any application.

How am I supposed to structure database development and administration to work in a multiple-server environment?

Well, in the case of two or more servers, one DB and one DC, you would set up one server as the control plane "controller", running OpenFlow controller software,

and it would use OpenFlow to "manage" the communications over the network and to your users.

Are there any particular standards that I need to be aware of as a developer, or is this purely the web host's responsibility?

Primarily, it is the web hosting, data, compute and storage providers of reasonable scale that will be interested,

but be aware that this will make spinning up virtual servers very easy, very fast and very cost-effective.

Telcos will also be using this before very long, and I suspect it will filter down to smaller operators in time, when the prices of the switches come down.

Do I just make my single MySQL database and all the virtualization is done under the hood? Are there any specific software versions I need to be careful of?

Think about it like this: it's virtualisation of the network fabric. It is complementary to server virtualisation, and it is the last mile in fully unlocking the potential of virtual environments.

www.openflow.org...

There are specific server virtualisation vendors that already ship OpenFlow-enabled vSwitches,

so it would depend on what you use.

xploder

Originally posted by Maxatoria

Once my sinuses decide to play ball and stop going boom boom, I'll have a proper look at what they're doing.

For the average person there will be no change, as the data presented to the application will be the same, but I'd imagine the benefit comes from taking more control away from static routing and older networking protocols, being able to adjust more on the fly, and taking advantage of better ways of doing things that are not implemented in classical routers/switches.

Correct,

but there are changes to the underlying transport as well.

The network services are abstracted into the transport layer, and the network design is end to end.

The packets are "coded" with routing information and link information;

in this way, a new "type" of network is created.

Hence the new network operates differently from a traditional network connection over the internet.

xploder

Originally posted by CarbonBase

I see. Someone did a software upgrade and there was no loss of service. No one noticed? Could that be like migrating 750,000 people who are talking on a telephone from one telephone switch to another while they were on a call, and nothing happened? No dropped calls. Piece of cake. Yeah, technology. It's like that.

Yes, that is a good analogy.

Google changed all the internet connection protocols between its large server farms

while they were operating, and service was not interrupted, and nobody knew until a few days ago.

The Google WAN (wide area network) is the internet backbone connection between its data centers.

They hot-swapped the networking protocols for the entire backbone in 6-9 months,

without anyone noticing or realising, until Google announced it a few days ago.

xploder

edit on 21/4/13 by XPLodER because: (no reason given)

reply to post by XPLodER

Why should anyone notice what happens between Google's data centres? It's their internal traffic, and if they had even half a brain cell, they'd still have the older gear up and running, plus alternative routes between DCs, so should any of the upgrades fail, everything would be business as usual. At worst, all people might notice is an extra 0.5 seconds before their videos start to play. But I would have thought that each link would be brought up and running, routing only special internal load-test data for a few weeks before the day of the switchover, to ensure nothing goes wrong.

reply to post by XPLodER

As an emerging website developer, I see some trends to be careful of. I am aware of some Google services that do provide the same scalability as Google in general, but they come with privacy implications. In general, I like the philosophy of 'if you cannot be honest about it, you should not do it', but I am also aware that our reality is a bit more complicated than that.

The DDoS threat has made me quite wary and tired as a developer. Having one abstracted point of access does alleviate a lot of strain with database integration. I very much like the PHP platform, as server-side scripting is more socially secure than local, JavaScript-based approaches. Going through the database channel of stored procedures is the most secure, as only variables and not code are transmitted. There is also the potential for optimizations when only predefined and known procedures are allowed. It does appear that some corporate developers are having major issues with feature creep in this realm.
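SQLite (used here because it ships with Python) has no stored procedures, so this sketch swaps in parameterized queries, which rest on the same idea as the stored-procedure approach above: the SQL text is fixed ahead of time, and user input travels only as values, never as code. The table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

def find_user(conn, name):
    # The SQL text is fixed; `name` is bound as a value, never spliced into the query.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()

print(find_user(conn, "alice"))                      # [(1, 'alice')]
print(find_user(conn, "x' OR '1'='1"))               # [] : the injection attempt is just a string
print(find_user(conn, "'; DROP TABLE users; --"))    # [] : nothing is executed
print(conn.execute("SELECT COUNT(*) FROM users").fetchone()[0])  # 2
```

Pairing this with a database account that is only allowed to run those fixed queries gives the permission restriction described in the thread.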

Where I am currently stuck as a developer is 'how to secure a session'. Personally, I would like to build a strong undercurrent before attaching an SSL layer. Even if SSL is cracked, there is a whole new layer of coding for the attackers to contend with. At the moment, PHP and SQL are what I trust as an individual, group and whole.
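On 'how to secure a session', one widely used pattern, sketched here in Python rather than PHP, is an unguessable random session ID plus a server-side HMAC signature, checked with a constant-time comparison. It does not replace TLS/SSL; it only stops a forged or tampered cookie from being accepted, and the session data itself would still live server-side, keyed by the ID. The key handling and the "id.signature" token format are assumptions for illustration.

```python
import hashlib
import hmac
import secrets

# Assumption: one server-side secret key; in practice load it from secure configuration.
SERVER_KEY = secrets.token_bytes(32)

def _sign(session_id: str) -> str:
    return hmac.new(SERVER_KEY, session_id.encode(), hashlib.sha256).hexdigest()

def new_session_token() -> str:
    """Unguessable random ID plus an HMAC signature, e.g. for a cookie value."""
    session_id = secrets.token_urlsafe(32)  # 32 random bytes, URL-safe text (no '.')
    return f"{session_id}.{_sign(session_id)}"

def verify_session_token(token: str):
    """Return the session ID if the signature checks out, otherwise None."""
    session_id, sep, sig = token.rpartition(".")
    if not sep:
        return None
    # compare_digest avoids leaking how many characters matched via timing.
    ok = hmac.compare_digest(sig.encode(), _sign(session_id).encode())
    return session_id if ok else None

token = new_session_token()
print(verify_session_token(token) is not None)  # True
forged = token[:-1] + ("0" if token[-1] != "0" else "1")
print(verify_session_token(forged))             # None
```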

Originally posted by Maxatoria

reply to post by XPLodER

Why should anyone notice what happens between Google's data centres? It's their internal traffic, and if they had even half a brain cell, they'd still have the older gear up and running, plus alternative routes between DCs, so should any of the upgrades fail, everything would be business as usual. At worst, all people might notice is an extra 0.5 seconds before their videos start to play. But I would have thought that each link would be brought up and running, routing only special internal load-test data for a few weeks before the day of the switchover, to ensure nothing goes wrong.

The first point is that OpenFlow is still young.

This use case is a large-provider, bleeding-edge case,

and OpenFlow worked in a stable manner.

The internal staff of Google were mostly unaffected by the change,

and neither internal nor external people noticed.

That's pretty good.

I'm sure they left the old network gear on site just in case,

but it is very reliable and stable enough for large-scale production environments.

xploder

Originally posted by kwakakev

reply to post by XPLodER

As an emerging website developer, I see some trends to be careful of. I am aware of some Google services that do provide the same scalability as Google in general, but they come with privacy implications. In general, I like the philosophy of 'if you cannot be honest about it, you should not do it', but I am also aware that our reality is a bit more complicated than that.

OpenFlow is not a Google product; it is open source and built on open standards.

This is not just a trend; it will be used widely and globally. While there are aspects I can't talk about, you can ask me direct questions if you want direct answers.

The DDoS threat has made me quite wary and tired as a developer.

With an SDN telco network (ISP), the packets can be "dropped" at the network edge,

at the telco level, or at the individual network level.
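A very simplified sketch of dropping at the edge: an edge controller counts packets per source in a time window and, once a source exceeds a threshold, installs a drop rule so further packets from it are discarded at the edge instead of crossing the network. The threshold, window, class name and addresses are hypothetical, and real DDoS mitigation is considerably more involved (spoofed source addresses, for one, defeat per-source counting).

```python
from collections import defaultdict

class EdgeDropper:
    """Per-source packet counting with drop rules installed at the network edge."""

    def __init__(self, threshold: int, window_s: float):
        self.threshold = threshold   # max packets per source per window (assumed)
        self.window_s = window_s
        self.counts = defaultdict(int)
        self.window_start = 0.0
        self.drop_rules = set()      # sources whose traffic is dropped at the edge

    def handle(self, src_ip: str, now: float) -> str:
        if now - self.window_start >= self.window_s:
            # New window: reset counters (installed drop rules stay in place).
            self.counts.clear()
            self.window_start = now
        if src_ip in self.drop_rules:
            return "drop"
        self.counts[src_ip] += 1
        if self.counts[src_ip] > self.threshold:
            self.drop_rules.add(src_ip)
            return "drop"
        return "forward"

edge = EdgeDropper(threshold=100, window_s=1.0)
results = [edge.handle("192.0.2.66", now=0.001 * i) for i in range(150)]
print(results.count("forward"), results.count("drop"))  # 100 50
print(edge.handle("198.51.100.5", now=0.2))             # forward
```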

Having one abstracted point of access as a developer does alleviate a lot of strain with database integration.

It can be proactively programmed for failover or load balancing as well.
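"Proactively programmed for failover" maps onto something OpenFlow (version 1.1 and later) provides: a fast-failover group, where a rule points at an ordered list of buckets, each watching a port, and the switch uses the first bucket whose port is live, without waiting for the controller. The toy model below only mimics that selection logic; the port numbers and action strings are made up.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Bucket:
    watch_port: int   # port whose liveness decides whether this bucket is usable
    action: str       # illustrative action string

def select_bucket(buckets: list, live_ports: set) -> Optional[str]:
    """Fast-failover selection: first bucket whose watched port is up, else None (drop)."""
    for b in buckets:
        if b.watch_port in live_ports:
            return b.action
    return None

# Primary path first, then backups, all installed ahead of time by the controller.
group = [Bucket(1, "output:1"), Bucket(2, "output:2"), Bucket(3, "output:3")]
print(select_bucket(group, {1, 2, 3}))  # output:1  (primary path)
print(select_bucket(group, {2, 3}))     # output:2  (port 1 down: switch fails over locally)
print(select_bucket(group, set()))      # None      (no live path)
```

Because the backups are installed in advance, failover happens at switch speed rather than after a round trip to the controller.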

I very much like the PHP platform, as server-side scripting is more socially secure than local, JavaScript-based approaches.

I write algorithms, not code, but I understand what you mean.

Going through the database channel of stored procedures is the most secure, as only variables and not code are transmitted. There is also the potential for optimizations when only predefined and known procedures are allowed. It does appear that some corporate developers are having major issues with feature creep in this realm.

That is interesting: using stored procedures to provide secure commands?

Where I am currently stuck as a developer is 'how to secure a session'. Personally, I would like to build a strong undercurrent before attaching an SSL layer. Even if SSL is cracked, there is a whole new layer of coding for the attackers to contend with. At the moment, PHP and SQL are what I trust as an individual, group and whole.

Use SDN and build a custom API with encryption/decryption.

Integrate it into OpenFlow: force routes you trust and drop packets that don't come from known clients.
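"Drop packets that don't come from known clients" could look something like this on the controller side: a default-deny policy with allow entries for trusted address ranges, checked with Python's ipaddress module. The prefixes are documentation ranges chosen for the example and the function name is hypothetical; in a real deployment the resulting decisions would be pushed to the switches as flow rules.

```python
import ipaddress

# Hypothetical trusted client ranges, taken from the documentation prefixes
# reserved by RFC 5737 (IPv4) and RFC 3849 (IPv6).
ALLOWED = [ipaddress.ip_network(n) for n in ("192.0.2.0/24", "198.51.100.0/25", "2001:db8::/32")]

def decide(src: str) -> str:
    """Default deny: forward only traffic whose source is in a known client network."""
    addr = ipaddress.ip_address(src)
    # An IPv4 address is never "in" an IPv6 network (and vice versa); the test is just False.
    if any(addr in net for net in ALLOWED):
        return "forward"
    return "drop"

for src in ("192.0.2.10", "198.51.100.200", "2001:db8::1", "203.0.113.9"):
    print(src, decide(src))
```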

SDN gives you a topological view of the network that you can also use for security.

Granular control at the switch level = great security and internal/external isolation.

xploder

edit on 21/4/13 by XPLodER because: (no reason given)

Originally posted by Opportunia

reply to post by XPLodER

This was not done because someone simply made an innovative decision. The reason they did it was to avoid paying Vringo usage rights after being sued by them for patent violations.

Could you please elaborate?

I'm not sure I understand what they were infringing on.

Thanks,

xploder

reply to post by XPLodER

So how is IP addressing handled? I would expect it to be IPv4 and IPv6 compliant. Are white, grey and black lists supported for certain IP domains, or is this all in the box? Are there no developer concerns or awareness needed around IP traffic?

Sounds like there are some programmer overheads/abstractions that need to be performed. Any specifics?

This is one of the hard edges at the divide. The ability to restrict permissions is a huge plus. The structured function also provides a clear separation of powers. OK, a hacker may be able to do whatever can be done through the website, but they cannot do a table delete or a basic reformat if database permissions were exposed.

My Flash video player is broken at the moment; bandwidth issues prevent me from implementing a fix. I have heard of REST before. Can you describe it?

Originally posted by XPLodER

There are protocols, like loss avoidance algorithms, that can speed up delivery of packet data over this transport to make gaming a lag-free experience.

Nah, not 100% correct. It does not speed up delivery; it just makes sure that all the bits that frame the packet are delivered or can be reconstructed, so you don't need to resend the whole chunk. If you think about it, chopping data into packets already does this job, because corrupted packets can be resent rather than the whole file or whatever you are trying to send. In truth, loss avoidance algorithms make the data you have to send bulkier, so you get longer transfer times per chunk. Since short transfer times are critical to high-FPS games, you may get a lag-free (as in smooth) playing experience, but it will add an offset to everything.
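The trade-off described here shows up in the simplest forward error correction scheme: send one XOR parity packet per group of data packets. Any single lost packet in the group can be rebuilt without a resend, but every group now carries an extra packet of overhead. This is a generic illustration, not the specific scheme used by any ISP or by Google's network; the payloads are made up.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def add_parity(packets):
    """Append one parity packet: the XOR of all (equal-length) data packets."""
    return packets + [reduce(xor_bytes, packets)]

def recover(received):
    """Rebuild a single missing packet (marked None) by XOR-ing all the others."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can only repair one loss per group")
    if missing:
        received = received.copy()
        received[missing[0]] = reduce(xor_bytes, [p for p in received if p is not None])
    return received[:-1]  # drop the parity packet, return the data

data = [b"GAME", b"STAT", b"E_01", b"UPD8"]  # 4 equal-length payloads
sent = add_parity(data)                       # 5 packets on the wire: 25% more to send
sent[2] = None                                # simulate one lost packet
print(recover(sent))  # [b'GAME', b'STAT', b'E_01', b'UPD8']
```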

I opted out of Telekom's loss avoidance system. Years ago, when I was a customer of Telekom (Germany), it cost me a few euros a month to have this technology switched off on my line. It gave me a stable 15 ms latency to most servers in Germany, whereas I had 45 ms or more before. This was/is called Fastpath.

Funny: you have to pay to make sure you don't get a service.

You only gain an end-to-end speed increase if the time needed to compute, add, send, receive and decode the validation bits is shorter than the time needed to send the whole packet again. Of course, there is always some kind of correction system working in the background.
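That break-even condition can be put into rough numbers. With FEC, every packet pays a small fixed cost (encode/decode time plus the time to send the extra parity bytes); without it, a lost packet costs roughly one extra round trip to resend. A back-of-the-envelope sketch, where the model is simplified (one round trip per loss, no timeouts) and all figures are assumed for illustration:

```python
def retransmit_cost_ms(loss_rate: float, rtt_ms: float) -> float:
    """Average extra delay per packet if each loss costs one extra round trip (simplified)."""
    return loss_rate * rtt_ms

def fec_cost_ms(packet_bytes: int, overhead: float, link_mbps: float, coding_ms: float) -> float:
    """Extra delay paid by EVERY packet: sending `overhead` x more bytes plus encode/decode time."""
    extra_bits = packet_bytes * overhead * 8
    return extra_bits / (link_mbps * 1000) + coding_ms  # 1 Mbps = 1000 bits per ms

# Assumed figures: 1200-byte packets, 25% parity overhead, 10 Mbps line, 0.05 ms coding time.
fec = fec_cost_ms(1200, 0.25, 10, 0.05)
for loss, rtt in [(0.001, 30), (0.02, 30), (0.02, 350)]:
    retx = retransmit_cost_ms(loss, rtt)
    winner = "FEC" if fec < retx else "retransmission"
    print(f"loss {loss:.1%}, RTT {rtt} ms: retransmit ~{retx:.3f} ms vs FEC ~{fec:.3f} ms -> {winner}")
```

With these assumptions, a clean 30 ms line is better off without FEC, while a lossy or long (350 ms) path favours it. Averages also hide the other half of the argument: retransmission concentrates its delay in occasional spikes, which is what a player feels as lag, while FEC spreads a small offset over everything.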

I know a nice example from the GSM protocols that would clearly show how this affects bandwidth (or packet window size) while increasing quality, but my post is already lengthy and a little bit OT.

reply to post by verschickter

The KISS (Keep It Simple, Stupid) principle is the all-time classic when dealing with anything. Unfortunately, the internet is not a secure place, and generally anyone on it has their butt exposed like krill in the ocean. You do make a lot of valid points about how new technology is often not the right technology; unfortunately, with the realities of this world, a lot of mistakes must be made before finding the right path.

Only under the test of time will the good ideas be sorted from the bad; at the moment, we are all in it together.

Balance is where it's at. Different applications have different needs, and this must all be accounted for at the programming end. Due to the natural progression of technology, those at the bleeding edge will bleed as new ideas and methods are tried.

You may get a lag-free (as in smooth) playing experience, but it will add an offset to everything.
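The "offset" in that remark is how a playout (jitter) buffer works: packets are held and played at their send time plus a fixed delay, so variation in arrival time is hidden, but everything is shifted by that delay. A minimal sketch, assuming a simple fixed-offset buffer; the packet timings are invented for illustration.

```python
def playout(send_ms, arrival_ms, offset_ms):
    """Play each packet at send time + offset; count packets that arrive too late."""
    on_time = late = 0
    for sent, arrived in zip(send_ms, arrival_ms):
        if arrived <= sent + offset_ms:
            on_time += 1  # buffered in time, plays smoothly
        else:
            late += 1  # missed its slot: a glitch the player must hide or skip
    return on_time, late


if __name__ == "__main__":
    send = [0, 20, 40, 60, 80]       # one packet every 20 ms
    arrival = [15, 65, 60, 90, 140]  # network delays of 15, 45, 20, 30, 60 ms
    for offset in (30, 60):
        print(offset, playout(send, arrival, offset))
```

A 60 ms offset plays all five packets smoothly, but every one of them is heard 60 ms after it was sent; a 30 ms offset is more responsive but misses two of the five.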

reply to post by kwakakev

Don't get me wrong, I'm not against new technology at all, as long as there is a reason for it. I work in my company's research lab, constantly trying new things and developing methods.

If you know the .NET architecture: it makes a programmer's life (if they only work on Windows) so easy, yet it complicates delivery, because the end user needs the whole .NET package installed on their machine, even for a Hello World.

You nailed it: balance is the word I was missing in my post.

Originally posted by XPLodER

Originally posted by kwakakev

I did not notice, but there have been some internet traffic problems over the past couple of weeks. So, as a website administrator, what does this mean for me?

Your hosting provider will be made aware of SDN (software-defined networking) in its next upgrade cycle.

As an administrator, you may be interested in the extra functionality SDN offers: you can use its load-balancing nature to provide better service and to mitigate DDoS attacks, and from a bandwidth point of view, you can save by allocating bandwidth dynamically. The biggest change for an administrator is that you don't have to configure your switches, routers, etc. manually. In theory, you should be able to serve more users over the same network/server infrastructure.
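One way a controller could carry out the "dynamic allocation of bandwidth" mentioned above is max-min fair sharing of a link among flows: every flow gets an equal share, and whatever a small flow does not need is redistributed to the others. This is a generic water-filling sketch, not an OpenFlow or controller API; the flow names, demands and link capacity are hypothetical.

```python
def max_min_fair(capacity, demands):
    """Water-filling max-min fair share of one link's capacity among flows.

    demands maps a flow name to the bandwidth it asks for (same unit as capacity).
    """
    alloc = {flow: 0.0 for flow in demands}
    unsatisfied = dict(demands)
    remaining = float(capacity)
    while unsatisfied and remaining > 0:
        share = remaining / len(unsatisfied)
        # Flows asking for no more than the equal share get exactly what they asked for.
        fits = [flow for flow, demand in unsatisfied.items() if demand <= share]
        if not fits:
            # Everyone left wants more than the share: split what remains equally.
            for flow in unsatisfied:
                alloc[flow] = share
            break
        for flow in fits:
            alloc[flow] = float(unsatisfied.pop(flow))
            remaining -= alloc[flow]
    return alloc


if __name__ == "__main__":
    # Hypothetical 100 Mbit/s link shared by three flows.
    print(max_min_fair(100, {"a": 10, "b": 40, "c": 80}))
```

Flow c, the only one asking for more than its fair share, is capped at whatever the other two leave unused; a controller could rerun this whenever demands change and push the new limits to the switches.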

The Googles of the world (i.e. Google, universities, and a tiny set of other companies) will use this technology to improve service and lower prices.

Everybody else (the Comcasts, Time Warners, ad networks, Verizons, etc.) will use this technology to enable fine-grained anticompetitive behavior (slowing down Netflix because it competes with their own more expensive cable service; this is the "dynamic allocation of bandwidth" at work), intrusive advertising (adding or replacing ads on websites) and espionage.

The Chinese will take the software without paying and build it into Huawei routers (and use it for international corporate and government espionage, and to improve internal censorship capabilities).

It democratizes the previously expensive technology necessary to make the internet anti-democratic.

edit on 21-4-2013 by mbkennel because: (no reason given)
