
In a series of three debates, my formidable opponent Druid42 and I will argue aspects concerning the potential emergence of AI (artificial intelligence). This, the first installment, asks whether or not AI is inevitable. The second debate will be titled "The WWW will become AI.". The third and final segment will be titled "AI will be beneficial to mankind?".

Opening Statements

Thank you to the ATS members, fighters, judges, and my esteemed opponent, Druid42, for making this debate and this forum possible, and as popular as they are. Truly, thank you!

Today we gather to begin a three-part discussion about artificial intelligence, or AI. There are very specific definitions involved in that label, but a simplification of it is "a computer or computer network that will possess the ability to reason as a human does." We are all somewhat familiar with the concept of AI, as there are many references to it in books, film, and television. From the "HAL 9000" of 2001, through "Mother" of the Nostromo (Alien), to Terminator's "Skynet", WarGames' "WOPR", I, Robot's "V.I.K.I.", Star Trek's "Data", and the movie A.I.'s "Mechas" - along with a host of other books, movies, cartoons, and comics... most of us have been exposed to the idea of AI.

Taking the fiction out of it, Bing defines artificial intelligence as follows:

NOUN

1. development of intelligent machines: a branch of computer science that develops programs to allow machines to perform functions normally requiring human intelligence.

I chose the Bing definition over other sources because I feel it best conveys the common understanding of what AI would be - in everyday terms, rather than intense tech-based rhetoric.

AI Is Inevitable

It has fallen to me to convince you, dear reader, that AI is not only possible but actually inevitable. To accomplish this I will refer to the history of computer hardware as a means of showing the exponential growth of technology. I will also refer to Moore's Law, which helps us understand the speed at which technology is advancing. I will even discuss the fact that some very bright people are already looking past AI and towards a technological singularity - an event or invention so profound and transformative that we simply cannot predict anything beyond the moment of its creation.
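Moore's Law is usually stated as the number of transistors on an integrated circuit doubling roughly every two years, i.e. N(t) = N0 * 2^((t - t0) / T) with T of about 2 years. The sketch below assumes that idealized form with a fixed doubling period; the starting point of about 2,300 transistors (the Intel 4004 of 1971) is only an illustrative anchor, and the printed figures are projections of the formula, not measured chip data.

```python
def moores_law(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Idealized Moore's Law: n0 * 2 ** (years / doubling_period).

    Assumes a constant doubling period; real transistor counts only
    roughly track this curve.
    """
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    return n0 * 2 ** (years / doubling_period)


if __name__ == "__main__":
    # Illustrative anchor (assumption): ~2,300 transistors in 1971 (Intel 4004).
    start_year, start_count = 1971, 2_300
    for year in range(1971, 2012, 10):
        projected = moores_law(start_count, year - start_year)
        print(f"{year}: ~{projected:,.0f} transistors (projection, not measured data)")
```

Forty years at a two-year doubling period is twenty doublings, a factor of 2^20 (roughly a million), which is the kind of exponential growth the argument leans on.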

The Internet can be seen as a much milder example of this sort of singularity. Just a few decades ago, few people had even heard of it, much less begun to imagine the impact it would come to have upon society.

Beyond the technical side of my position, I must also discuss a few philosophical thoughts, as they are relevant.

If AI were to exist, would we possess the ability to recognize it for what it truly was? Would it be able to, or care to, recognize us as sentient beings? I ask these questions in earnest. In our vanity we tend to project our own qualities onto things... our Gods, aliens, the monsters under our beds, and AI. In our imaginations these things all resemble us in form and in function. I humbly submit that a species wiser than humanity currently is might see things from a different perspective. They might even look at our current Internet and think it a life form. After all, our computers, phones, televisions, cable boxes, cars, radios, etc. all speak to one another as often as, if not more often than, we speak with one another. They are, incidentally, probably much more honest in their interactions. Would an outside observer see the Internet as a living organism? Would that organism have the capacity or desire to acknowledge us - any more than we are capable of acknowledging it?

The fact of the matter is that our understanding of what constitutes intelligent life is limited by us. We only see, hear, smell, feel, and think within such narrow parameters that it is, in my opinion, the height of vanity to think we can define what life means. We know so little about it. We are only currently capable of knowing a finite amount.

Nonetheless, it is my personal belief that when AI comes into being, if it hasn't already on some level, eventually it will take notice of us - or us of it. And there will be a moment of mutual discovery. A moment of relief for both participants. To realize that one is not alone in the vastness of the Universe? Well that's what we all pray for. It's why we have Gods. It's why ATS has a very active aliens forum. We want to know we're not alone! I imagine that any artificial intelligence would also feel that same longing.

That moment of discovery may be like finding a long lost friend. Or it might be as esoteric and nuanced as a whale and a dolphin swimming past and making eye contact with one another.

This concludes my opening remarks.

I would like to open my position in this debate series with a sincere "Thank You" to one of ATS's most likable mods, for accepting what is, in my opinion, an under-discussed subject as a debate series topic. With that thanks also comes the knowledge that Hefficide will provide an interesting discourse, a solid position, and, for me, three of the most challenging debates I have ever participated in. Regardless of the outcome, I'm certain that both my opponent and I will provide some very interesting information, and perhaps even uncover the direction in which technology is leading us.


I wish my computer would talk to me!

Truly, we've all thought the same thing: how cool it would be. Sure, you may have an iPhone with Siri, or an Android with several other options, and they all talk to you. That's not really enough, though; we want a conversation. We know the current apps have a very limited reach, but they are useful. We want more, and R&D is doing its best to accommodate us.

However, research is up against a proverbial wall: in order to define what AI is, research needs to take a closer look at what intelligence actually is. Intelligence is directly related to consciousness, and without solving the mystery of consciousness, we will never be able to make an intelligent machine. Approximations, yes, with huge databases of knowledge to slog through, restrained by bandwidth limitations, but nothing close to the smoothness of human thought. True AI would be able to pass a Turing Test with ease, yet all approximations so far have fallen short.

As an aside, the Turing Test was proposed in 1950. For comparison, human flight was achieved in 1903, and a mere 66 years later, in 1969, we landed on the moon. In understanding intelligence, by contrast, we still use the 1950 protocol. It's 62 years later, and we are no closer to solving the riddle. Technology advances, and with it our understanding of the physical world around us, yet we still fall short in understanding our own thoughts. I would appreciate clarification from my opponent as to whether the terms intelligence and consciousness are acceptably intertwined. While awaiting clarification, I'll posit that AI is dependent upon a machine becoming aware of itself.

Consciousness is the quality or state of being aware of an external object or something within oneself.

Sure, you can program a computer with tons of source code, out the wazoo if you will, but no matter how hard you try, no matter how many lines of source code you write, you still will not be able to grasp the essence of what makes us able to perceive reality. I'm stating that it's not a numerical function. The essence can't be encoded in bits and bytes.

As humans, we are special. We went through millions of years of conditioning, adapting to our environment, and it's not something that can be emulated by a machine, with any accuracy. Approximations yes, still inaccurate, still lacking.

Without understanding what consciousness is, the hope of creating AI is futile. We simply cannot create a machine to mimic us flawlessly without first understanding ourselves.

There's one possibility to address, and that is the untimely demise of humanity, due to any number of natural or self-inflicted catastrophes. Mankind may not survive to the end of the year, let alone the next, nor any given year in the future. Our continued survival on this planet is assumed, but not guaranteed, so a lot about the future may be taken for granted. To say the development of AI is guaranteed is to assume that we'll still be around to develop it.

The whole topic boils down to the inevitability of such an event, and whether mankind can weather the hurdles faced to achieve that. I lack the faith that perhaps others have.

Dreamy-eyed movies may lend strength to our imaginations, but the reality, which I'll be sharing in upcoming posts, is that no matter how hard we try to explain our self-awareness, we come up empty, and so we will be unable to emulate the phenomenon. We can't code a computer to do something we don't understand.

With that, I rest, and turn the debate back to Hefficide.

I would like to begin my retort with a brief aside. A short discussion of the common ant.


Ants are social insects of the family Formicidae. Ants evolved and diversified after the rise of flowering plants. Ants form highly organised colonies that may occupy large territories and consist of millions of individuals. Ants appear to operate as a unified entity, collectively working together to support the colony. Ant societies have division of labour, communication between individuals, and an ability to solve complex problems. These parallels with human societies have long been an inspiration and subject of study.

As this is a character limited debate, I have cherry picked the above from three paragraphs. I do not feel the context or accuracy has been altered. I offer it as support for claims made in my first post. Here we have an organic creature that displays every trait we would look for in an "intelligent" lifeform, including socialization, communication, and cooperation. Still, instead of recognizing and being amazed by this, we routinely simply poison them without a second thought - all in the name of a nicer lawn.

If we cannot recognize, respect, or accept the signs of intelligence in "life" that, like us, is organic in nature; how can we hope to do so with a lifeform that doesn't share our organic status?

The Problem With The Turing Test

The Turing test is a test of a machine's ability to exhibit intelligent behavior, equivalent to or indistinguishable from, that of an actual human. In the original illustrative example, a human judge engages in a natural language conversation with a human and a machine designed to generate performance indistinguishable from that of a human being.

Source
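As a concrete reading of the test described above: Turing's 1950 paper predicted that by about the year 2000 an average interrogator would have no more than a 70 per cent chance of making the right identification after five minutes of questioning. The sketch below turns that prediction into a hypothetical scoring rule; the `Trial` record, the function names, and the use of 70% as a pass threshold are assumptions for illustration, not an official standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Trial:
    """One hypothetical imitation-game round."""
    judge_id: str
    identified_machine: bool  # True if the judge correctly named the machine


def identification_rate(trials: list[Trial]) -> float:
    """Fraction of rounds in which the judge correctly identified the machine."""
    if not trials:
        raise ValueError("need at least one trial")
    return sum(t.identified_machine for t in trials) / len(trials)


def meets_turing_1950_benchmark(trials: list[Trial], max_rate: float = 0.70) -> bool:
    """Assumed criterion: judges are right no more than 70% of the time,
    echoing Turing's 1950 prediction (not an official pass rule)."""
    return identification_rate(trials) <= max_rate


if __name__ == "__main__":
    rounds = [Trial("j1", True), Trial("j2", False), Trial("j3", True), Trial("j4", False)]
    print(identification_rate(rounds), meets_turing_1950_benchmark(rounds))
```

A judge guessing at random would be right about 50% of the time, so the 70% figure is a lenient bar rather than a demand for flawless imitation.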

In this debate we are discussing artificial intelligence - not artificial humanity. Our egos rise up yet again, seeming to insist that "intelligence" be defined as a mirror of ourselves: a thing that even we, as sentient beings, cannot fully explain or even describe. We tend to reach for words like "consciousness" or "the ability to communicate" to these ends. Even in our fictions, AI tends to be like us: bipedal robots, or machines with familiar, human voices. Human attributes. I offer that no human attribute is necessary for a being to qualify as intelligent. Intelligence is a function, not a form.

Besides, how can we seek to use a standard like "consciousness" as a guideline when we cannot even define it ourselves? For that we still rely upon a nearly 400-year-old quote - cogito ergo sum... I think, therefore I am. How can we possibly recognize in others that which we cannot define even within ourselves, except by internal observation?

In this regard, all the Turing test helps us do is identify human-like behaviors, not any indicators of intelligence, artificial or otherwise.

The Spark

My opponent said the following:

Without understanding what consciousness is, the hope of creating AI is futile. We simply cannot create a machine to mimic us flawlessly without first understanding ourselves.

Herein lies the trap - and the crux of my argument. We, as humans, possess consciousness, yet we did nothing to achieve this state. Some imagine that God(s) did it. Others, that it is the result of untold generations of evolution and adaptation... that it is incidental and accidental to our condition. Through our very limited understanding and ability to perceive, we try to define that which we cannot define - and then impose that abstract and limited template upon all else.

I offer that, much like the humble ant, with which I began this post, the Internet already displays behaviors that one would, otherwise, think of as organic life. Even as I type these words, dozens of aspects, within my computer system, are behind the scenes, talking... socializing... optimizing... working for the benefit of the whole - all without my participation, knowledge, or even my consent. The network is taking care of itself, and its own even as we speak. It is protecting itself. Strangely enough. Protecting itself mostly from us.

Humans do provide the code that this organic system uses, just as our DNA provides the code that we are based upon. Does the fact that DNA seems unable to understand what it creates negate us?

The truth is that our influence may have already created a lifeform, sentient and self-aware, but simply beyond our comprehension. That entity may currently be in its infancy - or possibly only an embryo waiting to be born. Biological terms already seem to apply to it, as they do to ants. All we await now is a recognizable sign of the spark of life to show itself.

That ends my second post.

edit on 12/13/2012 by tothetenthpower because: -- Mod Edit -- Edited for reasons of formatting ONLY - NO information or posting content has been altered/removed.

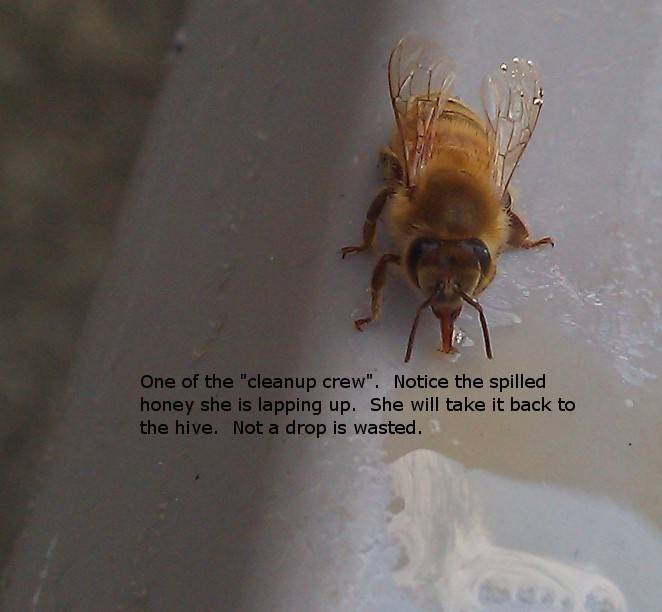

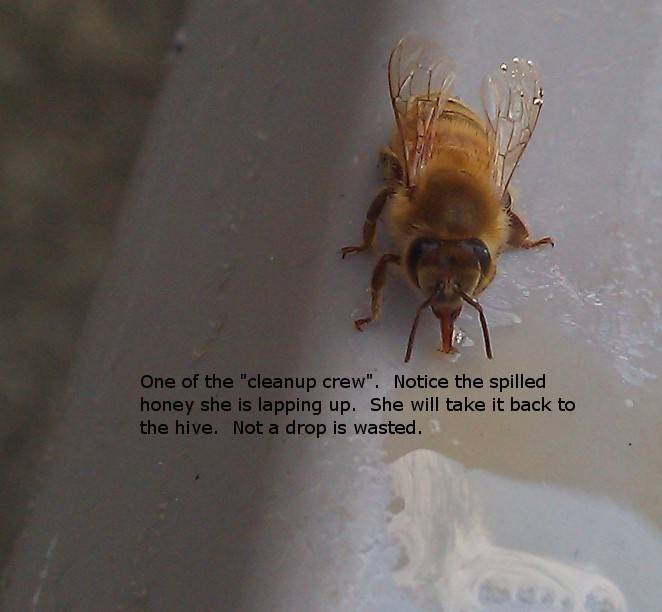

I'll match Hefficide's ant, and raise him one bee:


Yes, that WAS one of my bees. You see, I am an apiarist, a beekeeper, so I can completely relate to the ant analogy. I have a thread here about beekeeping. Bees are social insects, just like ants, cold-blooded, with a very short life cycle. The parallels between ants and bees are amazing.

I emphasized WAS because that particular bee died last year, about 30 days after that picture was taken. Foraging bees, like ants, only live about a month after they mature. What's important to realize here is that insects, no matter how seemingly organized they are, are bound by simple rules governed by their environment. If the weather is cold, they hibernate; if it's warm, they forage. They have such a short life span that there is no hope for them to develop intelligence, yet they fill an important ecological niche. The gestalt provided by the "queen" in both bee and ant colonies is based on pheromones and appendage articulation, not in any way connected to a higher-functioning level of cognizance. Both ant and bee colonies survive through sheer numbers, reproducing 30,000 or more individuals per season, each relying upon simple scents and body movements. Bees and ants have been successful for millions of years, yet they are not good candidates for "intelligence", and as such, are not a factor in a debate about AI.

The Turing Test Revisited

Alan Turing proposed the Turing Test in 1950. One of the earliest programs to address the issue was ELIZA, developed by Joseph Weizenbaum at MIT in the mid-1960s. Basically, the Turing Test says that if the user of a computer can't determine whether the output on their screen is provided by a human or a computer, then the machine has passed. It's a benchmark against which programs are measured: a conversation with a computer SHOULD be indistinguishable from a human conversation.
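ELIZA is often described as working by keyword pattern matching and substitution, echoing fragments of the user's own words back without any model of their meaning. The toy responder below is a minimal sketch in that spirit; the rules, the reflection table, and the function names are hypothetical and are not Weizenbaum's original DOCTOR script.

```python
import re

# Hypothetical keyword rules, tried in order: (compiled pattern, reply template).
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bcomputers?\b", re.IGNORECASE), "Do computers worry you?"),
]

# Swap first- and second-person words so an echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "me", "your": "my"}


def reflect(fragment: str) -> str:
    """Replace each word found in REFLECTIONS with its counterpart."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(user_input: str) -> str:
    """Return the reply for the first matching rule, or a stock prompt."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return "Please tell me more."  # no keyword matched


if __name__ == "__main__":
    for line in ("I need a holiday.", "I am sad about my computer", "Hello there"):
        print(f"> {line}\n{respond(line)}")
```

The replies can look attentive, yet nothing in the program represents what a "holiday" or "sadness" is, which is the gap between approximation and understanding that the argument above points to.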

Yes, there are nuances. Jokes have to be perceived, and computers can't understand humor. Emotions need to be represented: pity, sympathy, empathy, and happiness all need to be present, for example, yet every program to date fails the Turing Test. Intelligence is directly linked to providing the appropriate response to whatever question is asked. I'm simply not seeing that with current levels of technology, and personally, I think trying to emulate human behavior is the wrong path to choose. AI, if anything, would be an emergent behavior, but again, without understanding "thought", it's not something we can program.
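"Emergent behavior" refers to large-scale patterns arising from simple local rules that say nothing about the pattern itself. Conway's Game of Life is a standard illustration, offered here only as a general example of emergence, not as a model of AI or of either debater's position: every cell obeys the same birth/survival rule, yet a five-cell "glider" travels across the grid, something no individual rule mentions.

```python
from collections import Counter

Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """Advance Conway's Game of Life one generation on an unbounded grid."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "glider": five cells that, under the local rule alone, move diagonally.
GLIDER = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

if __name__ == "__main__":
    cells = GLIDER
    for generation in range(5):
        print(generation, sorted(cells))
        cells = step(cells)
```

After four generations the glider has its original shape again, shifted one cell diagonally; the movement belongs to the pattern as a whole, not to any single cell or rule.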

I'll borrow a few quotes to refute, from my opponent:

Even as I type these words, dozens of aspects, within my computer system, are behind the scenes, talking... socializing... optimizing... working for the benefit of the whole - all without my participation, knowledge, or even my consent.

My opponent is anthropomorphizing here, attributing human qualities to an underlying mechanism of electronics and hardware connections. There is no benefit provided to my network card when it chooses to signal a data packet across the home network, through my router and modem, to be transmitted across probably hundreds of nodes on the internet before it reaches the IP address of ATS and is translated by the software. It's a data transmission miracle built on TCP/IP (the work of Vint Cerf and Bob Kahn), with Tim Berners-Lee's World Wide Web layered on top: standards well accepted and revised within the RFCs of the internet.
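For readers unfamiliar with the mechanics described above: each router on a path makes a purely local decision, looking up the destination address in its forwarding table (choosing the longest matching prefix) and decrementing the IPv4 Time To Live (TTL) field, so a packet caught in a routing loop is eventually discarded. The toy model below sketches that under stated assumptions; the router names and tables are hypothetical (the loop through `core-2` is deliberate), the addresses come from the reserved documentation ranges, and real routers do far more (ICMP errors, checksums, multiple interfaces).

```python
from __future__ import annotations

import ipaddress

# Hypothetical forwarding tables: router -> [(destination prefix, next hop)].
# "deliver" means the destination network is directly attached.
TABLES = {
    "home-router": [("0.0.0.0/0", "isp-edge")],
    "isp-edge": [("203.0.113.0/24", "core-1"), ("0.0.0.0/0", "core-2")],
    "core-1": [("203.0.113.0/24", "deliver")],
    "core-2": [("0.0.0.0/0", "isp-edge")],  # deliberate loop for unknown routes
}


def next_hop(router: str, dest: str) -> str:
    """Longest-prefix match of dest against one router's table."""
    addr = ipaddress.ip_address(dest)
    best = None
    for prefix, hop in TABLES[router]:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    if best is None:
        raise LookupError(f"{router} has no route to {dest}")
    return best[1]


def forward(dest: str, first_router: str, ttl: int = 64) -> list[str]:
    """Follow next hops, decrementing TTL at each router, until delivery or expiry."""
    path, router = [], first_router
    while True:
        path.append(router)
        ttl -= 1
        if ttl <= 0:
            raise TimeoutError(f"TTL expired at {router}")
        hop = next_hop(router, dest)
        if hop == "deliver":
            return path
        router = hop


if __name__ == "__main__":
    print(forward("203.0.113.7", "home-router"))
```

No router "knows" where the packet is going overall; each one consults its own table, which is the mechanical picture the paragraph above contrasts with the language of socializing and self-protection.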

The network is taking care of itself, and its own even as we speak. It is protecting itself. Strangely enough. Protecting itself mostly from us.

Hogwash. The network needs revisions, updates in software, and maintenance, and without human intervention, the internet would be a complete system of outdated software, old protocols that no longer work smoothly, and a less than acceptable system of accessing information. I'll revisit the RFCs later on in this series.

The truth is that our influence may have already created a lifeform, sentient and self aware, but simply beyond our comprehension.

That's pure speculation. A lifeform exhibits characteristics, which are definable, and has an extemporal measurement we can perceive. Without an attempt at perception, my opponent's argument about comprehension is best left to the study of ants and bees.

An intelligence equivalent to, or perhaps even more advanced than, our own would have the means by which to communicate with us, matching our comprehension ability and tailoring the conversation accordingly.

An AI, without that ability, may as well remain within the confines of our own imagination.

The ability to communicate is a defining characteristic of AI.

With that I rest, having run out of characters to continue my post. Over to you, Heff.

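I will begin my ending by addressing two statements that my opponent has made:

AI, if anything, would be an emergent behavior, but again, without understanding "thought", it's not something we can program.

And:

My opponent is anthropomorphizing here...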

Anthropomorphizing. Isn't that what the Turing test really amounts to? It seems to gauge artificial humanity, rather than truly addressing the myriad of potential forms artificial intelligence might take. The very notion limits our definition of intelligence to a mirror: it must act like us, and speak to us, to possess intelligence? To me this is rather pedestrian and vain.

Even as I write these words, as if by serendipity, I just found an article, posted a scant 39 minutes ago, announcing that Ray Kurzweil has accepted a position, as Director of Engineering for Google. The article says:

Where some might see this glass as half empty, I see it as half full. Could it be that the reality here is that where we have much further to go is not in the realm of creating AI, but, rather, in teaching ourselves to see it, or helping it understand how to see us?

My opponent has suggested that the Internet cannot qualify as a life form because it requires (for now) human intervention. I offer that intelligence can exist within a symbiotic relationship. After all, we consider ourselves intelligent, yet without the lowly mitochondrion or DNA, we would not exist. One could think of the Internet as a being, our infrastructure serving as its own mitochondria, and we, ourselves, playing the role of its DNA. Such an intelligence would have no more cause, or ability, to communicate with us than we have to speak to our own DNA. The best we can do with DNA is to analyze it and try to compare it.

Exactly as the Internet currently does to each and every one of us right now.

My opponent offered a phrase, "extemporal measurement". It is an impressive pairing of words, but one which seems to lack inherent meaning. If I am correct, my opponent means that AI would have to surprise us by doing something that we don't expect it to do... that it might behave outside of a predetermined set of parameters. I argue that this is not necessarily a trait that intelligence would possess or that we would be able to measure even if we wished to.

After all, anyone who works with computers, even casually, will quickly tell you that they often seem to have a mind of their own. They often do things we do not expect them to. We attribute these bumps and hiccups to any variety of causes, from bad programming, to system conflicts, to viruses, to other humans using maliciously coded backdoors.

In essense, all of our interpretations, perceptions, and opinions about computers come with a built in bias of anthropomorphizing. Even if there were a ghost, screaming to reach out at us from the machine? Who would notice it? Who, among us, would not simply write it off to yet another Windows error, bad download, or Google spider misbehaving... yet again?

Closing

If one were to take all of the tech talk out of it, and write a simple description of the Internet, from an objective standpoint, it would, I think, read like a description of an organism. A reader might think "cell", or "bacteria", "virus", or even "insect colony" based upon such a description. Networks, biological systems, ecosystems, and even the human mind are all applicable analogs for Cyberspace.

Given these abstract similarities, what are the implications? Can we possibly begin to list or understand them?

Emergent AI is, I think, not just inevitable. I think it currently exists. Obviously it is in its infancy and I doubt it is self aware. But it here. Just like a human infant, we all can see and feel it. Its very presence has changed us and how we behave. We are its parents.

Kurzweil, one of the dreamers who recognizes this potential, himself, says that we've made progress, but have much further to go. He sounds like a parent to me. A parent of an intelligent and recognized life. A life that is currently new and either unable or disinterested in talking to us.

One might argue that an infant is not intelligent. After all, a baby cannot engage us in conversation. Yet they really do. Without words, they tell us exactly what they need and when they need it.

Just like our computers do.

I would like to thank ATS and Druid42 for this fun and provocative debate!

AI, if anything, would be an emergent behavior, but again, without understanding "thought", it's not something we can program.

And:

My opponent is anthropomorphizing here...

Anthropomorphizing. Isn't that what the Turing test really amounts to? It seems designed to gauge artificial humanity, not to address the many forms that artificial intelligence might take. The very notion holds our definition of intelligence up to a mirror: must a machine act like us, and speak to us, before we grant that it possesses intelligence? To me this is rather pedestrian and vain.

Even as I write these words, as if by serendipity, I have found an article, posted a scant 39 minutes ago, announcing that Ray Kurzweil has accepted a position as Director of Engineering at Google. The article says:

Kurzweil is well-known for authoring several books about the future of technology and “the singularity,” a period when he says humans will merge with intelligent machines. He believes we have made discernible progress with artificial intelligence but have much further to go.

Where some might see this glass as half empty, I see it as half full. Could it be that the "much further to go" lies not in creating AI, but rather in teaching ourselves to see it, or in helping it understand how to see us?

My opponent has suggested that the Internet cannot qualify as a life form because it requires (for now) human intervention. I offer that intelligence can exist within a symbiotic relationship. After all, we consider ourselves intelligent, yet without the lowly mitochondrion or DNA, we would not exist. One could think of the Internet as a being, with our infrastructure serving as its mitochondria and we ourselves playing the role of its DNA. Such an intelligence would have no more cause, or ability, to communicate with us than we have to speak to our own DNA. The best we can do with DNA is analyze it and compare it.

That is exactly what the Internet currently does to each and every one of us.

My opponent offered the phrase "extemporal measurement." It is an impressive pairing of words, but one that seems to lack inherent meaning. If I understand correctly, my opponent means that AI would have to surprise us by doing something we don't expect it to do... that it might behave outside a predetermined set of parameters. I argue that this is not necessarily a trait intelligence would possess, nor one we could measure even if we wished to.

After all, anyone who works with computers, even casually, will quickly tell you that computers often seem to have a mind of their own; they frequently do things we do not expect. We attribute these bumps and hiccups to any number of causes: bad programming, system conflicts, viruses, or other humans exploiting maliciously coded backdoors.

In essence, all of our interpretations, perceptions, and opinions about computers come with a built-in anthropomorphic bias. Even if there were a ghost in the machine, screaming to reach out to us, who would notice it? Who among us would not simply write it off as yet another Windows error, bad download, or Google spider misbehaving... yet again?

Closing

If one were to strip out all of the tech talk and write a simple, objective description of the Internet, it would, I think, read like a description of an organism. Based on such a description, a reader might think "cell," "bacterium," "virus," or even "insect colony." Networks, biological systems, ecosystems, and even the human mind are all applicable analogs for Cyberspace.

Given these abstract similarities, what are the implications? Can we possibly begin to list or understand them?

Emergent AI is, I think, not just inevitable; I think it already exists. Obviously it is in its infancy, and I doubt it is self-aware. But it is here. Just as with a human infant, we can all see and feel it. Its very presence has changed us and how we behave. We are its parents.

Kurzweil himself, one of the dreamers who recognize this potential, says that we've made progress but have much further to go. He sounds like a parent to me: the parent of an intelligent and recognized life, one that is still new and either unable to talk to us or uninterested in doing so.

One might argue that an infant is not intelligent. After all, a baby cannot engage us in conversation. Yet they really do. Without words, they tell us exactly what they need and when they need it.

Just like our computers do.

I would like to thank ATS and Druid42 for this fun and provocative debate!

There are difficulties present.

There are many considerations involved in calling an event inevitable. For example, we can say that it will rain again because we understand rain as a natural function of the Earth's biosphere. Within the field of AI, there is no such clear-cut understanding, so predicting that AI will definitely emerge can at best be speculation.

I have previously stated that the ability to communicate with us is a requisite, but I'd like to offer a few other considerations that need to be addressed.

AI would need to self-replicate. It would need a way to propagate, to make copies of itself, unless we consider the possibility that the original AI would be immortal. I'm very uncomfortable with the idea of an immortal sentience created by humans, and I suspect religion would have a problem with it as well. I'll pass on further discussion of AI immortality.

I maintain the position that creating AI would mean creating a new life form, and we'd need to address the "rights" of such a creation. A symbiotic relationship with AI is a nice premise, but nothing guarantees it. Mankind could inadvertently program a machine that sees our civilization as a plague and works to eradicate us from this planet. In that case, AI would be a natural evolutionary step, but not a beneficial one for mankind. We already face enough threats to our existence, from economic collapse to world war to an extraterrestrial impactor, without creating another one to deal with. I would also expect resistance to the idea of granting AI its own set of "rights" within modern society.

AI would need morality: the ability to choose between right and wrong, rather than simply using logical processes as the core of its programming. There's a certain compassion we'd need to program into the system; barring that, the outcome would be rather unpredictable. Setting aside our lack of understanding of consciousness, we also have no clear-cut working model of human morality, which is a matter often left to religion to dictate to us. Does that mean our AI machines would also need a religious "subroutine" in their programming, or could the same be achieved by another method? Regardless, I'd feel much better if our attempts at creating AI had a framework such as Asimov's Three Laws to follow.

AI would need a "failsafe" mechanism in case of unpredictable results. Just as our societies prosecute criminals and mete out punishments, we would need a similar justice system to deter what would seem to be "irregularities." I'm not implying that such events would occur, but such factors must be considered before producing AI, and that requirement is a severe limitation on progress.

Those are just a few of the hurdles that must be overcome, and none has an easy solution. It would take a collaborative effort from experts in many different fields to accomplish this, and my faith in humanity's ability to work together is rather sparse, given our history and our very nature.

Closing

I'd like to thank Hefficide and the readers for allowing me to present my position on this controversial, yet interesting topic.

I think my opponent closed his position nicely, with this point:

One might argue that an infant is not intelligent. After all, a baby cannot engage us in conversation. Yet they really do. Without words, they tell us exactly what they need and when they need it. Just like our computers do.

I'd like to point out that communication and perception are both inherent in the infant's development. From the moment the egg and sperm unite, chemical signals are sent at the molecular level, and while it does seem like a miracle, it proceeds by a well-known and well-understood process. Biological life, with the proper amount of nourishment, is a self-sustaining process. It has adapted to survive. Our computers, in contrast, while needing the nourishment of electricity and software, do not concern themselves with survival. They exist. Period. They have no mechanism for survival or adaptation, both of which are key to developing intelligence, and until that key point is addressed, AI will remain a dream within our minds, a subject of fiction, and a product of our imaginations.

I'll leave you with this rhetorical question:

Do computers dream of Electric Sheep?

Judgments

This debate was a TIE. The tie was broken with...

Both have valid points. I'll give Druid42 a couple of points because he demonstrated that AI still has a long way to go in its development (fundamental questions such as "I think, therefore I am"), and that the limited AI publicly available so far is still in its infancy.

However, Hefficide managed to prove that the idea of AI is limited by our own quantification of self, and he shows that while only humans have human intelligence, there is limited intelligence all around us, from nature to things artificial.

I judge Hefficide as the winner. Hefficide showed that AI is slowly but surely maturing in its myriad forms (natural or artificial). While Druid42 showed that AI is not far enough along for me to build my own Johnny-5, he did not show that AI is not inevitable, just that the technology isn't mature... yet.

Opening statements

Hefficide starts well, defining the position he/she will argue from and explaining to the reader that, given current trends in technology (Moore's Law), the computational power to 'emulate' human-like behavior will certainly exist at some point in the future. He/she also points out that, due to our limited understanding of the workings of life, we may not recognize such AI even if we did create it.

Druid42 opens by trying to pin down exactly what AI means to him: true AI must become self-aware (a point Heff acknowledges, while hinting that we may not recognize it), and no matter how many 0s and 1s we feed into a self-contained system, it would still be a mimic of real behavior and not true behavior itself. Druid's argument relies on the 'Turing test' to gauge his version of AI. I feel that Druid then digresses a little from the topic in the second half of his opening post, speculating whether humanity will even reach that stage given current world affairs (I feel this detracted from the debate itself and was slightly off topic).

Verdict = Hefficide wins round 1

Round 2

Both parties here attempt to define 'AI' in further detail, each using organic life to drive home their respective points. I feel this worked against Hefficide, who used an organic creature to champion a non-organic ideal. Although both debaters are strong in this section, I think Druid42 slightly edged it by directly addressing the points raised by Hefficide and rebutting them.

Verdict = Druid42 wins round 2

Closing statements

Again, both parties attempt to drive home what 'AI' means to them; both make good points and address each other's arguments well.

I am not going to say too much about the closing statements, as that would mean the judge's opinion spilling into the debate, and I guess I am supposed to be impartial.

Hefficide seems to be saying that something can be more than the sum of its parts, no matter where those parts originate. Druid42 says that no matter how good the parts look, and however they interact with each other due to external influences, the whole will NEVER be more than the sum of its parts, because of the nature of self-awareness.

Verdict = Druid42 wins round 3

In my opinion, Druid wins this debate.

I would like to end by saying this was a very difficult debate to judge due to the differing interpretations of 'Is AI inevitable?'

Will a machine eventually be developed that can mimic every aspect of human behavior? I say yes. Will that AI ever be self-aware? That is very questionable.

I have awarded victory to Druid, BUT I think a better title for the debate would have been 'Will AI ever be self-aware?', as AI in some form certainly exists now or will in the future, but its self-awareness is very debatable.

Thank you for an interesting debate!

This debate was a TIE. The tie was broken with...

That is probably one of the best debates I’ve had the pleasure of reading. Both fighters argue their respective points on solid grounds. Hefficide is firm in getting his points across and so is Druid42.

If I had a choice, I would call this debate a tie, but since ties are no longer allowed, I must pick a single winner, by an extremely small margin.

Druid42's rebuttals were consistent and factual throughout the match, and so were Hefficide's. However, while Hefficide tried to convince me that AI is in its infancy and possibly already here, Druid42 really convinced me that it isn't, and that many important aspects are still missing.

A fantastic debate for sure but since only one may walk away with victory, my choice is Druid42.

Big thumbs up to both fighters for a match that achieved something else important: it defined excellence.

EPIC Debate Gentlemen.

Druid emerges as the winner by a small margin.
