
we have been using TCP/IP for some time now, and its protocols allow us to communicate across the internet and the World Wide Web (WWW)


The Internet protocol suite is the set of communications protocols used for the Internet and similar networks, and generally the most popular protocol stack for wide area networks. It is commonly known as TCP/IP, because of its most important protocols: Transmission Control Protocol (TCP) and Internet Protocol (IP), which were the first networking protocols defined in this standard. It is occasionally known as the DoD model due to the foundational influence of the ARPANET in the 1970s (operated by DARPA, an agency of the United States Department of Defense).

TCP/IP provides end-to-end connectivity specifying how data should be formatted, addressed, transmitted, routed and received at the destination. It has four abstraction layers, each with its own protocols.[1][2] From lowest to highest, the layers are:

The link layer (commonly Ethernet) contains communication technologies for a local network.

The internet layer (IP) connects local networks, thus establishing internetworking.

The transport layer (TCP) handles host-to-host communication.

The application layer (for example HTTP) contains all protocols for specific data communications services on a process-to-process level (for example how a web browser communicates with a web server).

en.wikipedia.org...

the Transmission Control Protocol is how bits and bytes are moved from one place to another, and the TCP/IP suite is made up of "layers", each of which has a part in moving information from place to place. this protocol was designed "before" wireless access points were in common use, so TCP/IP was designed to handle "wired" networks.

the three problems with this system:

1/ wireless loss/noise was not accounted for in traditional TCP/IP, so busy or noisy networks (especially wireless ones) have very slow throughput

2/ the transfer rate was not optimized to "account" for lost packets "on the fly" (this required excessive resends)

3/ there was no way of knowing whether lost packet data (lost bits and bytes) was due to congestion or to noise/random loss, so every loss triggered "congestion control" in the TCP sender, which slowed down overall throughput.

the algorithm,

the idea was to "encode" packet number data onto the back of the previously encoded/encrypted data stream.

the "encoded" packet number data takes the form of algebra, which allows for two things: first, each packet can be received "out of order" without signalling congestion to TCP, and second, "lost" packets can be reproduced without the transmitter's end having to resend them.

by measuring network noise and congestion, together with the "time to" and "time from" information (the "round trip time"), and acting proactively on these parameters, we can predict where packet loss is happening "on the fly", and the answers to the algebra equations can be used to "reconstruct" the lost packet without having to signal a resend.

this avoids triggering congestion control and the throughput slowdowns that come with it
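The "time to" and "time from" measurement above is the round trip time (RTT) that standard TCP already tracks to set its retransmission timeout. As a point of reference only (this is the standard estimator from RFC 6298, not the coding scheme the post describes), here is a minimal Python sketch; the class and method names are hypothetical:

```python
from typing import Optional


class RttEstimator:
    """Smoothed RTT and RTT variance as specified in RFC 6298 (values in seconds)."""

    ALPHA = 1 / 8  # gain for the smoothed RTT
    BETA = 1 / 4   # gain for the RTT variance
    K = 4          # variance multiplier in the timeout

    def __init__(self, clock_granularity: float = 0.001, min_rto: float = 1.0):
        self.g = clock_granularity  # assumed clock granularity G
        self.min_rto = min_rto      # RFC 6298 rounds the timeout up to 1 second
        self.srtt: Optional[float] = None
        self.rttvar: Optional[float] = None

    def update(self, sample: float) -> float:
        """Fold in one RTT measurement and return the new retransmission timeout."""
        if self.srtt is None or self.rttvar is None:
            self.srtt = sample
            self.rttvar = sample / 2
        else:
            # RTTVAR is updated first, using the previous SRTT value
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - sample)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * sample
        return max(self.min_rto, self.srtt + max(self.g, self.K * self.rttvar))
```

The timeout tracks both the average and the jitter of the path. The post's idea is to use similar measurements to decide how much redundancy to send, rather than only when to resend.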

this allows for a number of things to occur:

first, packets are not rejected if lost packets are encountered or if packets are not received "in order"

second, out-of-order packets are accepted and lost packets can be "reconstructed" on the fly, without signalling congestion control (which slows down throughput) or asking the sender to "resend" lost packets

and

by knowing the expected "packet loss" before sending, you can encode (algebra) the "lost" information from the packets you expect "won't" reach the decoder onto the back of the packets you expect will reach it.

this is the basis for forward error correction "on the fly", or in real time, so that throughput is not affected by slowdowns (congestion) and resends (lost packets)
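To put a number on "knowing the expected packet loss prior to sending": if losses are independent with probability p, and the code is idealized so that any k of the n packets sent for a block are enough to rebuild it (an assumption; real codes only approach this), the sender can pick the smallest n that meets a target decoding probability. A sketch; the function names and the 99% target are hypothetical:

```python
from math import comb


def decode_probability(k: int, n: int, p: float) -> float:
    """P(at least k of n sent packets arrive) under independent loss rate p.

    Assumes an idealized code in which ANY k received packets rebuild the block.
    """
    q = 1 - p
    return sum(comb(n, i) * q**i * p ** (n - i) for i in range(k, n + 1))


def packets_to_send(k: int, p: float, target: float = 0.99) -> int:
    """Smallest n >= k whose decoding probability reaches the target."""
    if not 0 <= p < 1:
        raise ValueError("loss rate must be in [0, 1)")
    n = k
    while decode_probability(k, n, p) < target:
        n += 1
    return n


for loss in (0.0, 0.01, 0.05, 0.1):
    print(f"loss {loss:.0%}: send {packets_to_send(8, loss)} packets per 8")
```

With 8 source packets and 10% independent loss, `packets_to_send(8, 0.1)` asks for 12 packets, i.e. four repair packets per block to reach 99%. Bursty (correlated) loss, which is what congestion usually produces, breaks the independence assumption.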

why this changes the internet:

over the last few years "streaming content" has become a large part of life for internet users and places a large demand on hardware infrastructure. if the "end user" is on a "lossy" or "busy" wireless network endpoint, TCP/IP uses a lot of resources to ensure "reliable" transport of streamed data to the end user.

so any increase in "throughput" and any decrease in "resends" has a dramatic effect on the quality and bit rate of streamed data. first, the more efficient transport allows for a more stable, loss-tolerant, high quality stream; second, you are not repeating "redundant" or lost information over and over again, which allows for low latency,

and the efficient transport completes downloads faster, so you use the effective bandwidth better, for shorter periods of time.

by altering the congestion mechanism and accounting for lost packets "on the fly", huge gains in throughput and lower latency are achieved.

this transport can be modified to handle:

streaming sound with adjustable bit rate "on the fly"

streaming video with higher definition due to the higher "effective bit rate" with lower "overall bits transmitted"

downloads at very high throughput, even on noisy/lossy wireless networks

secure downloads using TLS

high frame rate games "streamed" or piped to your browser (remote environment)

"on the fly" transport can increase efficiency of,

cell phone networks (more users per tower)

optical backbones (more efficient transport, more users, less power)

wireless networks (higher throughput in noisy/busy conditions)

"end to end" encrypted networks (provides a secure and efficient transport for sensitive information)

the bit most people will notice,

streaming video will be cheaper (cost in bits) and of a higher quality (higher encoding rate)

streaming music that adapts "on the fly" to network conditions to provide the highest sound quality available (at that bit rate)

downloads will be faster,

communications will be more secure,

lower latency, faster connections to game servers.

this is the biggest increase in transport throughput in the last few years and uses existing hardware.

please feel free to ask questions, or ask for technical details

xploder

reply to post by XPLodER

Hi XPlodER,

I love this stuff but I am still sort of in my toddler years when it comes to understanding it all, so please excuse my bone-headed Qs.

Where does the system get the data from to replace the lost data without a resend?

On a funkier note, I am really intrigued by the 'layering' of TCP IP. I am studying histology right now and it sort of reminds me of tissue layers and enzymes in the body that allow for specific types of 'histo-communication' (I made that up).

And also, isn't it interesting that this all seems to have been a DARPA project from day 1? I can't imagine that anything has changed, and in fact, there seems to be plenty of evidence that it has not.

Thanks for a wonderful piece of technical writing; you really have brought the subject down to earth.


It sounds like each packet contains a small amount of data which can be used to reconstruct missing packets (think .par files). So either the size of each packet increases to accommodate the recovery data, or the packets get smaller to fit current standards and we have to transmit more of them to transfer the same data. But this sounds like a dynamically changing system: as the network gets crappier, the amount of data used to provide the necessary error correction increases, and as it gets better, it uses less error correction. To me that sounds like someone's ported 1960s-1970s data transmission over crappy telegraph systems onto wireless.

Originally posted by Xoanon

reply to post by XPLodER

Hi XPlodER,

I love this stuff but I am still sort of in my toddler years when it comes to understanding it all, so please excuse my bone-headed Qs.

i will try to answer as simply as i can

Where does the system get the data from to replace the lost data without a resend?

if there are eight packets of data carrying the "data information" for reconstructing a picture,

and the fourth packet is lost, then there is enough "algebra" encoded in the other seven "packets" to compute what the "value" of the lost packet is. this allows the picture to be "reconstructed" while transmission continues without interruption or slowdowns

this is done "at" the receiver end, removes the need for a resend request, and costs very little overhead (computational power and/or time in ms)
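The eight-packet example can be made concrete with the simplest possible "algebra": one extra parity packet that is the byte-wise XOR of the eight data packets. This is a stand-in chosen for illustration (it repairs only one loss per block, and the parity packet is extra data that must actually be sent); the scheme in the MIT paper quoted later in the thread uses random linear combinations instead. Function names are hypothetical:

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    if len(a) != len(b):
        raise ValueError("packets must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """One repair packet: the XOR of all source packets in the block."""
    return reduce(xor_bytes, packets)


def recover_missing(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one lost packet (marked None) from the parity packet."""
    missing = [i for i, pkt in enumerate(received) if pkt is None]
    if len(missing) > 1:
        raise ValueError("one XOR parity packet can repair only one loss")
    present = [pkt for pkt in received if pkt is not None]
    out = list(present)
    if missing:
        # XOR of the parity with every surviving packet leaves the lost one
        out.insert(missing[0], reduce(xor_bytes, present, parity))
    return out


# eight 4-byte packets; the fourth one (index 3) is lost in transit
source = [bytes([i] * 4) for i in range(8)]
parity = make_parity(source)
received: List[Optional[bytes]] = list(source)
received[3] = None
assert recover_missing(received, parity) == source
```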

On a funkier note, I am really intrigued by the 'layering' of TCP IP. I am studying histology right now and it sort of reminds me of tissue layers and enzymes in the body that allow for specific types of 'histo-communication' (I made that up).

maybe it would be an idea to get someone to study the transport and draw an analogy with TCP/IP,

we may gain insight from nature

And also, isn't it interesting that this all seems to have been a DARPA project from day 1? I can't imagine that anything has changed, and in fact, there seems to be plenty of evidence that it has not.

as they get the best engineers and scientists, it is hardly surprising that DARPA designed most of the really cool toys we as consumers eventually get to use.

Thanks for a wonderful piece of technical writing; you really have brought the subject down to earth.

thank you for your compliment

xploder

Originally posted by Xoanon

reply to post by XPLodER

Hi XPlodER,

Where does the system get the data from to replace the lost data without a resend?

it can recalculate the lost data from the checksums of all the other packets sent, but it can't do it a lot.

Here's what a TCP packet looks like.

Originally posted by Xoanon

reply to post by XPLodER

On a funkier note, I am really intrigued by the 'layering' of TCP IP. I am studying histology right now and it sort of reminds me of tissue layers and enzymes in the body that allow for specific types of 'histo-communication' (I made that up).

A good analogy for the layers and how they work is sending a letter. As the data (the letter) is processed, each layer responsible for sending it adds a bit of information, like the letter being put into an envelope (packet), labeled with a destination address (address) and a source address (return address), and put on the wire (put in the mailbox). Once the packet reaches the destination, the layers are stripped off by the corresponding layers at the other end (checking the mailbox, reading who it's from and who it's to, opening the envelope and reading the letter).

The difference between sending a letter and sending data is that the computer at the receiving end can take instructions from each layer's header that tell it how to handle the packet. An example would be the 3rd layer (network layer), where things like the urgency or priority of a packet can be understood, or the 4th layer (transport layer), which governs how many packets can be sent at a time without acknowledgment (the TCP sliding window).
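The letter-and-envelope analogy maps directly onto encapsulation: each layer wraps the payload from the layer above in its own header, and the receiver strips them in reverse order. A toy sketch; the header fields and values (ports, documentation-range IP addresses, locally administered MAC addresses) are made up for illustration and far from complete:

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Envelope:
    layer: str                          # which layer added this header
    header: dict                        # that layer's "address label"
    payload: Union["Envelope", bytes]   # the next envelope in, or the letter


def encapsulate(data: bytes) -> Envelope:
    """Sender side: transport, then internet, then link header are added."""
    segment = Envelope("transport", {"src_port": 50000, "dst_port": 80, "seq": 1}, data)
    packet = Envelope("internet", {"src": "192.0.2.1", "dst": "198.51.100.7"}, segment)
    return Envelope("link", {"src_mac": "02:00:00:00:00:01", "dst_mac": "02:00:00:00:00:02"}, packet)


def decapsulate(frame: Envelope) -> Tuple[bytes, List[str]]:
    """Receiver side: peel one header per layer, outermost first."""
    layers: List[str] = []
    current: Union[Envelope, bytes] = frame
    while isinstance(current, Envelope):
        layers.append(current.layer)
        current = current.payload
    return current, layers


letter, seen = decapsulate(encapsulate(b"hello"))
```

Real headers also carry the kinds of fields mentioned above, such as priority bits in the IP header and the window size in the TCP header.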

Hope this helps

edit on 23-11-2012 by Mike.Ockizard because: (no reason given)

Originally posted by Maxatoria

It sounds like each packet contains a small amount of data which can be used to reconstruct missing packets (think .par files). So either the size of each packet increases to accommodate the recovery data, or the packets get smaller to fit current standards and we have to transmit more of them to transfer the same data. But this sounds like a dynamically changing system: as the network gets crappier, the amount of data used to provide the necessary error correction increases, and as it gets better, it uses less error correction. To me that sounds like someone's ported 1960s-1970s data transmission over crappy telegraph systems onto wireless.

well, the effect on sending/receiving is very different:

the coding layer "receives" the notification of receipt, which removes the need for every packet to be ACKed individually

the sender keeps sending until the coding buffer can reconstruct missing or out-of-order packets in "chain blocks" and send an ACK for the whole chain. if packet loss is detected, the algebra can be decoded from the collected packets and the lost packet reconstructed, at the receiver's end, without the need for a resend,

you save the round trip time of the resend, and the changes to window size

if you know network conditions ahead of time, you can "adjust on the fly" to predict packet loss and compensate with simpler or more complex algebra to account for smaller or larger "perceived" packet losses

this gives the forward error correction the ability to "scale to losses" (instead of changing the congestion window size)

and to estimate "when" to increase the complexity of the algebra to account for higher numbers of "lost packets", or

"on the fly" forward error correction that responds to throughput, not individual packet ACKs.

xploder

Thanks for bringing me back to the days of newsgroups and IRC.

Thanks for the post OP


edit on 23-11-2012 by TrainDispatcher because: (no reason given)

Originally posted by zroth

Nice.

I imagine this discovery will find its way to the traditional transport layer as well.

yes

there are universities all around the world experimenting with this new transport,

on Wi-Fi (loss avoidance)

on end to end (increased throughput)

on streaming sound (variable bit rate)

on streaming movies (higher encoding for the same bit rate)

on wireless carriers (increased throughput per cell tower per user)

each has a different forward-facing function, but uses a similar algebra/numbering system to stay in contact in real time without packet loss slowing down the exchange.

xploder

There's always going to be a cut-off point where the amount of parity data needed to recover the data stream is more than the system can provide, and then you'll be stuffed. But it sounds good for systems with a reasonable quality of connection, as it'll save both sides some effort. On wired systems I sense there won't be much use unless you are near the physical limits of the media's ability, aka running token ring over 1920s phone wiring.

Originally posted by Maxatoria

There's always going to be a cut-off point where the amount of parity data needed to recover the data stream is more than the system can provide, and then you'll be stuffed. But it sounds good for systems with a reasonable quality of connection, as it'll save both sides some effort. On wired systems I sense there won't be much use unless you are near the physical limits of the media's ability, aka running token ring over 1920s phone wiring.

you're right, there are only so many lost packets that can be accounted for,

and congestion does come into the equation

but more and more users are mobile and/or wireless,

this will have a marked impact on the growing number of mobiles and the increasing use of streaming technology

xploder

reply to post by XPLodER

There is also the bandwidth penalty for sending the data that can be used to reconstruct missing packets.

If there is enough extra data in 8 packets to reconstruct one missing packet, that means there is 12.5% overhead in additional data being sent.

The issue I see with this is, ALWAYS sending the additional 12.5%, even when the connection is good, may be more of an overhead overall to the networks than just resending the lost packet data, which let's say involves a 1% overall overhead. So, this could make network traffic 12 times worse, instead of better.

The best technical solution is to fix the connectivity issues so you don't have lost packets, but where you do have some lost packets, I'm not sure it makes sense to penalize the entire network with an additional 12.5% overhead for those cases, most of which may not be losing any packets at all.
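The arithmetic behind the 12.5% figure, and what plain retransmission costs for comparison, as a quick sketch. Two caveats: with 1 repair packet per 8 data packets the extra traffic is 1/8 = 12.5% of the data, but only 1/9 of everything on the wire; and the retransmission estimate assumes independent losses and ignores the throughput lost while TCP waits and shrinks its window, which is the cost the coding approach targets. Function names are hypothetical:

```python
from typing import Tuple


def repair_overhead(k: int, r: int) -> Tuple[float, float]:
    """r repair packets per k data packets.

    Returns (extra traffic relative to the data, repair share of all packets sent).
    """
    return r / k, r / (k + r)


def retransmission_overhead(p: float) -> float:
    """Expected extra traffic from resending lost packets at independent loss rate p.

    Each packet needs 1 / (1 - p) transmissions on average, so the extra is p / (1 - p).
    """
    return p / (1 - p)


relative, share = repair_overhead(8, 1)
print(f"1 repair per 8 data packets: +{relative:.1%} traffic, {share:.1%} of the wire")
print(f"resending at 1% loss: +{retransmission_overhead(0.01):.2%} traffic")
```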


Originally posted by Arbitrageur

reply to post by XPLodER

There is also the bandwidth penalty for sending the data that can be used to reconstruct missing packets.

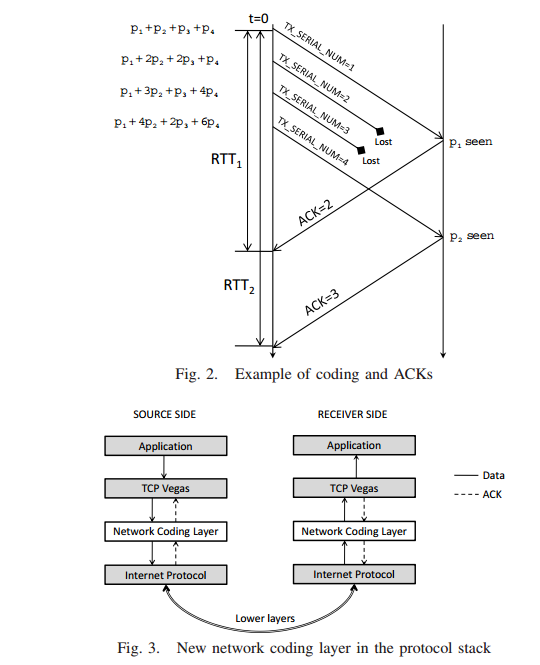

there is a novel way of encoding the "algebra" "onto the back" of the already encoded/encrypted packets.

a transmit buffer and a receive buffer allow "re-encoding" of the already finished packet with the

packet number, time to, time from and an algebraic equation, and "chain packets together for transport"

there is no ACK (from the receive buffer) until the receive buffer can "reconstruct" or compile "the chain",

missing packets can be "computed" with the algebra, and an ACK is sent instead of a resend request
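The "no ACK until the chain can be compiled" behavior can be sketched as a receive buffer that counts coded packets per chain and sends a single ACK once it holds enough of them. This is an idealization with hypothetical names: it assumes any k distinct coded packets of a chain are enough to rebuild its k source packets, which a real decoder has to confirm by actually solving the equations:

```python
from typing import List, Optional, Set


class ChainReceiveBuffer:
    """Hypothetical receive buffer that ACKs a whole chain, not each packet."""

    def __init__(self, chain_id: int, k: int):
        self.chain_id = chain_id
        self.k = k                 # source packets in the chain
        self.received: Set[int] = set()
        self.acked = False

    def on_packet(self, coded_index: int) -> Optional[str]:
        """Store one coded packet; return an ACK message once the chain is decodable."""
        if self.acked:
            return None  # late or surplus packets need no further ACK
        self.received.add(coded_index)
        if len(self.received) >= self.k:
            self.acked = True
            return f"ACK chain {self.chain_id}"
        return None


# a chain of 8 source packets sent as 10 coded packets; two are lost in transit
buf = ChainReceiveBuffer(chain_id=7, k=8)
acks: List[Optional[str]] = [buf.on_packet(i) for i in range(10) if i not in (2, 5)]
assert [a for a in acks if a] == ["ACK chain 7"]  # one ACK, no resend requests
```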

If there is enough extra data in 8 packets to reconstruct one missing packet, that means there is 12.5% overhead in additional data being sent.

the eight packet example was only to illustrate the concept, and not intended as a technical explanation,

the overhead is not 12.5% in this example, as the data (RTT, packet number and algebra) is "re-encoded"

onto the back of the "data to be transported", and the receive buffer decodes this and returns the packet to its pre-coded state for assembly.

example,

you write a letter and then encrypt it, (data to be transported)

you give me the letter (i am the code buffer)

i use your encoded letter and "re-encode" some of the previously encoded (by you) data into an algebraic formula that signifies RTT, packet number and window state. think "cryptographic signature"

then i send the letter (transport layer)

and the receive buffer unpacks the algebra (returns your message to your state)

and holds the packets in the buffer until "all algebra" (packets) is accounted for, or

uses the algebra to "account" for lost packets using the "cryptographic signature"

what this means is that for the length of the "chain" (packets grouped together), the transmitter keeps sending, overriding the per-packet ACK (acknowledge), until the complete chain is accounted for, and then the ACK is sent,

The issue I see with this is, ALWAYS sending the additional 12.5%, even when the connection is good, may be more of an overhead overall to the networks than just resending the lost packet data, which let's say involves a 1% overall overhead. So, this could make network traffic 12 times worse, instead of better.

by proactively measuring "noise" and "loss" information, the strength of the algebra can be altered "on the fly"

to compute larger equations for larger packet losses, or smaller equations for more noise interference

this provides a "forward-facing error correction" function that anticipates packet loss, modifies the packets to account for "expected loss", and does not require a resend (round trip time)
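One way to read "altering the strength of the algebra on the fly" is a sender that keeps a smoothed estimate of the loss rate and sizes its redundancy from it, instead of shrinking the congestion window. A minimal sketch of such a policy; the class name, the 1/8 smoothing gain, the 5% margin and the cap are all hypothetical choices, not values from the post or the MIT paper:

```python
class AdaptiveRedundancy:
    """Hypothetical sender policy: redundancy factor R from a smoothed loss rate.

    If a fraction p of packets is lost, about 1 / (1 - p) packets must be sent
    per source packet for enough to arrive on average; a margin is added on top.
    """

    def __init__(self, gain: float = 0.125, margin: float = 0.05, r_max: float = 2.0):
        self.gain = gain      # weight of the newest loss sample (EWMA gain)
        self.margin = margin  # extra redundancy to absorb short-term variation
        self.r_max = r_max    # never spend more than this factor on coding
        self.loss_estimate = 0.0

    def observe(self, sent: int, lost: int) -> None:
        """Fold one measurement interval into the smoothed loss estimate."""
        if sent > 0:
            sample = lost / sent
            self.loss_estimate += self.gain * (sample - self.loss_estimate)

    def redundancy(self) -> float:
        """Coded packets to send per source packet (R >= 1)."""
        p = min(self.loss_estimate, 0.99)
        return min(self.r_max, 1.0 / (1.0 - p) + self.margin)


policy = AdaptiveRedundancy()
for sent, lost in [(100, 0), (100, 10), (100, 20), (100, 5)]:
    policy.observe(sent, lost)
    print(f"loss estimate {policy.loss_estimate:.3f} -> R = {policy.redundancy():.3f}")
```

When measured loss rises, R grows and more repair packets go out; on a clean link R falls back toward 1 plus the margin. This only helps with random (noise) loss: the MIT paper quoted in this thread notes that redundancy cannot mask correlated losses, which are usually due to congestion.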

The best technical solution is to fix the connectivity issues so you don't have lost packets, but where you do have some lost packets, I'm not sure it makes sense to penalize the entire network with an additional 12.5% overhead for those cases, most of which may not be losing any packets at all.

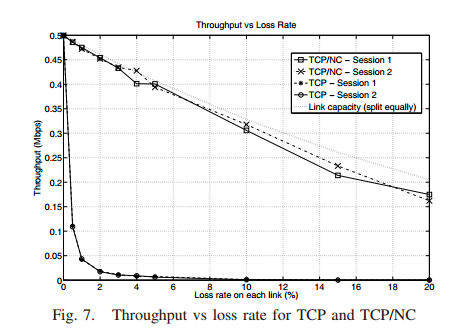

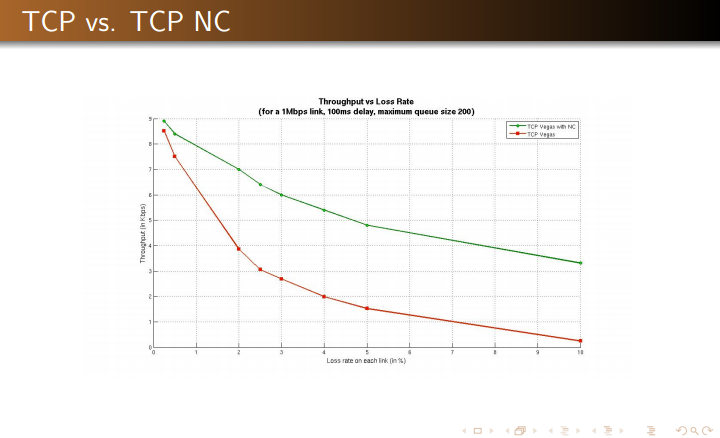

the preliminary results point to an increase in throughput of approx 50%,

and the numbers suggest the backbone overhead could decrease by about the same rate.

this example uses forward facing error correction but does not encode changing algebraic equations based on predicted loss

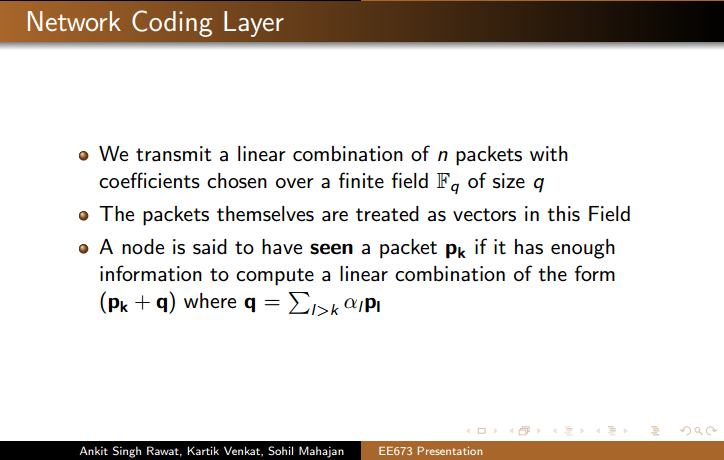

The network coding layer intercepts and modifies TCP’s acknowledgment (ACK) scheme such that random erasures does not affect the transport layer’s performance. To do so, the encoder, the network coding unit under the sender TCP, transmits R random linear combinations of the buffered packets for every transmitted packet from TCP sender. The parameter R is the redundancy factor. Redundancy factor helps TCP/NC to recover from random losses; however, it cannot mask correlated losses, which are usually due to congestion. The decoder, the network coding unit under the receiver TCP, acknowledges degrees of freedom instead of individual packets, as shown in Figure 1. Once enough degrees of freedoms are received at the decoder, the decoder solves the set of linear equations to decode the original data transmitted by the TCP sender, and delivers the data to the TCP receiver.

We briefly note the overhead associated with network coding. The main overhead associated with network coding can be considered in two parts: 1) the coding vector (or coefficients) that has to be included in the header; 2) the encoding/decoding complexity. For receiver to decode a network coded packet, the packet needs to indicate the coding coefficients used to generate the linear combination of the original data packets. The overhead associated with the coefficients depend on the field size used for coding as well as the number of original packets

MIT PDF
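The quoted description (random linear combinations, acknowledging degrees of freedom, solving a set of linear equations) can be sketched in a few dozen lines. For simplicity this uses coefficients in GF(2), so every combination is just an XOR of a random subset of packets; the field size used in the paper may differ, and larger fields make useless (linearly dependent) packets rarer. The names are hypothetical, and the 20% erasure rate in the usage lines is an arbitrary choice:

```python
import random
from typing import List, Optional, Tuple


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(source: List[bytes], rng: random.Random) -> Tuple[List[int], bytes]:
    """One coded packet: a random GF(2) combination of the buffered source packets."""
    coeffs = [0] * len(source)
    while not any(coeffs):  # the all-zero combination carries no information
        coeffs = [rng.randint(0, 1) for _ in source]
    payload = bytes(len(source[0]))
    for c, pkt in zip(coeffs, source):
        if c:
            payload = xor_bytes(payload, pkt)
    return coeffs, payload  # the coefficients travel in the packet header


class Decoder:
    """Incremental Gauss-Jordan elimination over GF(2).

    rank counts the degrees of freedom received so far; this is what gets ACKed.
    """

    def __init__(self, k: int):
        self.k = k
        self.rows: List[Tuple[List[int], bytes]] = []  # kept fully reduced
        self.pivots: List[int] = []

    @property
    def rank(self) -> int:
        return len(self.rows)

    def receive(self, coeffs: List[int], payload: bytes) -> bool:
        """Add one coded packet; return True if it was innovative (raised the rank)."""
        coeffs = list(coeffs)
        for (row_c, row_p), piv in zip(self.rows, self.pivots):
            if coeffs[piv]:  # cancel every existing pivot from the new row
                coeffs = [a ^ b for a, b in zip(coeffs, row_c)]
                payload = xor_bytes(payload, row_p)
        if not any(coeffs):
            return False  # linearly dependent on what we already have
        piv = coeffs.index(1)
        for i, (row_c, row_p) in enumerate(self.rows):
            if row_c[piv]:  # clear the new pivot column from older rows
                self.rows[i] = ([a ^ b for a, b in zip(row_c, coeffs)], xor_bytes(row_p, payload))
        self.rows.append((coeffs, payload))
        self.pivots.append(piv)
        return True

    def decode(self) -> Optional[List[bytes]]:
        """Return the source packets once k degrees of freedom have arrived."""
        if self.rank < self.k:
            return None
        out = [b""] * self.k
        for (_, row_p), piv in zip(self.rows, self.pivots):
            out[piv] = row_p  # a fully reduced row equals one source packet
        return out


def coefficient_header_bits(k: int, field_bits: int = 1) -> int:
    """Header overhead per coded packet: k coefficients of field_bits bits each."""
    return k * field_bits


rng = random.Random(1)
source = [bytes([i]) * 16 for i in range(8)]
decoder = Decoder(k=8)
while decoder.decode() is None:
    coeffs, payload = encode(source, rng)
    if rng.random() < 0.2:  # simulated random erasure on the link
        continue
    decoder.receive(coeffs, payload)
assert decoder.decode() == source
```

The decoder never asks for a specific missing packet: any innovative combination helps, which is why the receiver can acknowledge degrees of freedom rather than packet numbers. The `coefficient_header_bits` helper reflects the overhead point in the quote: with 32 packets in the coding buffer and 8-bit coefficients, the coding vector alone is 256 bits per packet.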

xploder

reply to post by Arbitrageur

same mit pdf

We have presented an analytical study and compared the performance of TCP and E2E-TCP/NC. Our analysis characterizes the throughput of TCP and E2E as a function of erasure rate, round-trip time, maximum window size, and the duration of the connection. We showed that network coding, which is robust against erasures and failures, can prevent TCP’s performance degradation often observed in lossy networks. Our analytical model shows that TCP with network coding has significant throughput gains over TCP. E2E is not only able to increase its window size faster but also to maintain a large window size despite losses within the network; on the other hand, TCP experiences window closing as losses are mistaken to be congestion. Furthermore, NS-2 simulations verify our analysis on TCP’s and E2E’s performance. Our analysis and simulation results both support that E2E is robust against erasures and failures. Thus, E2E is well suited for reliable communication in lossy wireless networks.

same mit pdf
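The window-closing effect described in the quoted conclusion can be seen in a toy model: a window that grows by one segment per loss-free round trip and halves on any detected loss (the additive-increase, multiplicative-decrease pattern of TCP congestion control, heavily simplified). The "masked" case assumes an ideal coding layer that hides every random loss from TCP, which is optimistic; congestion losses cannot be masked this way. All parameters are hypothetical:

```python
import random


def average_window(loss_rate: float, masked: bool, rounds: int = 10_000,
                   max_window: float = 64.0, seed: int = 0) -> float:
    """Average congestion window (in segments) of a toy AIMD sender.

    Each round trip the window grows by one segment, unless a loss is detected,
    in which case it is halved. masked=True models an ideal coding layer that
    hides every random loss from TCP, so only max_window limits growth.
    """
    rng = random.Random(seed)
    window, total = 1.0, 0.0
    for _ in range(rounds):
        # chance that at least one segment in this window's worth is lost
        lost = rng.random() < 1 - (1 - loss_rate) ** window
        if lost and not masked:
            window = max(1.0, window / 2)
        else:
            window = min(max_window, window + 1)
        total += window
    return total / rounds


for masked in (False, True):
    label = "coding masks losses" if masked else "plain TCP-like"
    print(f"{label}: average window {average_window(0.02, masked):.1f} segments")
```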

Originally posted by XPLodER

why this changes the internet,

over the last few years "streaming content" has become a large part of life for internet users, and places a large demand on hardware infrastructure, if the "end user" is on a "lossy" or "busy" wireless network endpoint, the TCP/IP uses large resources to ensure "reliable" transport of streamed data to the end user.

I have several major issues understanding your post or your point:

1) Voice or video transmissions never require 100% accuracy

If a packet (also called a datagram) gets lost, the effect of this loss is something you barely notice in the YouTube video you are watching or on your Skype call.

Streaming videos use buffering - meaning in plain words that the video you are watching is being downloaded in the background to your local computer and you are watching the video from that local buffer.

Unless you deliberately configure your local buffer to zero, you never stream the video you are watching directly from the Internet to your computer screen.

2) UDP protocol

UDP is part of the TCP/IP protocol suite, and it has long been used to transmit video or voice over the Internet.

Unlike TCP, the UDP protocol does not do any error correcting, because as mentioned, there is simply no need for any error correcting when it comes to video or voice.

3) Factors which impact quality of online streaming video

The quality of the video you are watching from the Internet is merely a question of the following:

* Deinterlacing, which impacts the digital video quality. You can get an idea of how it works at this link (Wikipedia).

* how fast your computer can process the video for you to watch (due to compression, some digital formats are more resource-consuming than others)

* how big the buffer on your local computer is, since it stores the video while you are watching it

* how fast your network link can download new content into that local buffer for you to watch.

None of those require developing any new algorithm.

4) How TCP as protocol works

TCP in the TCP/IP protocol suite is, at a high level, responsible for data transfer, and IP does only addressing.

The TCP protocol works so that the data is divided into packets. Those packets are then sent to the network.

When a TCP connection is opened, the source sends a SYN, the destination replies with a SYN-ACK, and the source completes the handshake with an ACK. After that, the destination acknowledges (ACKs) the data it receives, and if anything gets lost during the transmission, the data is resent.

Packets - again at a high level - have a header and a data field; at the link layer, an Ethernet frame also has a footer holding a CRC, and the TCP header carries its own checksum. This error-checking data is what currently determines whether the transfer was successful or whether a TCP retransmit is needed.
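For reference on the error-checking point: TCP's own error check is a 16-bit one's-complement checksum carried in its header (the CRC belongs to the Ethernet frame at the link layer). A minimal sketch of that Internet checksum as described in RFC 1071. It can only detect damage; it carries no information to rebuild a lost packet, which is why a coding scheme has to send extra combinations explicitly:

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum (RFC 1071), as used by IP, TCP and UDP."""
    if len(data) % 2:
        data += b"\x00"  # odd length: pad with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # big-endian 16-bit words
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


segment = bytes.fromhex("0001f203f4f5f6f7")
csum = internet_checksum(segment)
# with the checksum appended, an undamaged segment verifies to zero
assert internet_checksum(segment + csum.to_bytes(2, "big")) == 0
```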

There is a method of handling packets which arrive at the destination out of order, but there is no method to put back together packets which are lost during the transfer - or "on the fly" - as you say. If data is lost, it is lost during the transfer.

If it is missing, it cannot be made up, unless the next and previous packets could in some way determine what the missing packet had in its data field. In practice it would mean that there would be an algorithm that can recover anything just by knowing two parts of the whole, no matter how large or complex that whole might be.

And when saying that, I can bet it would not be first used to stream videos from the Internet.

I have several major issues understanding your post or your point:

thats ok its a complex system

1) Voice or video transmits are never requiring 100% accuracy

the prime thing in this situation is that lost packets hold up throughput, without the round trip time of a resend,

the transport layer can continue passing packets for that amount of time. ie 200ms.

if you stop the exchange everytime a packet is lost the "total throughput" is slowed down.

its no so much about 100% accuracy, its about holding the "congestion window" open inspite of loss without triggering conjestion, allowing for more use of effective bandwidth.

If a packet (also called a datagram) gets lost, the effect of the loss is something you barely notice in the YouTube video you are watching or on your Skype call.

Actually, a higher-quality video (higher encoding rate) can be streamed for the same overall bandwidth cost, with less data corruption and lower latency.

Streaming video uses buffering, meaning in plain words that the video you are watching is downloaded in the background to your local computer, and you are watching it from that local buffer.
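The buffering described here can be illustrated with a toy playout buffer: each second the download adds (throughput / bitrate) seconds of video, playback removes one second, and playback waits whenever less than a second is buffered. The function name, bitrate, start-up threshold and throughput traces are made-up assumptions.

```python
def seconds_not_playing(throughput_kbps, bitrate_kbps=1000, startup_s=2.0):
    """Toy playout buffer with one-second steps; returns seconds spent waiting
    (initial start-up delay plus any rebuffering stalls)."""
    buffered_s = 0.0    # seconds of video held in the local buffer
    playing = False
    waiting = 0
    for rate in throughput_kbps:
        buffered_s += rate / bitrate_kbps         # one second of download at this rate
        if not playing and buffered_s >= startup_s:
            playing = True                        # enough buffered: start or resume
        if playing and buffered_s >= 1.0:
            buffered_s -= 1.0                     # play one second from the buffer
        else:
            playing = False                       # nothing to play: stall
            waiting += 1
    return waiting


print(seconds_not_playing([1500] * 10))                          # 1 (start-up only)
print(seconds_not_playing([1500, 1500, 0, 0, 0, 1500, 1500]))    # 3 (start-up + stall)
```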

But how often do people sit there and wait for buffering?

The coding buffer takes over the job of the transmit/receive buffer.

Unless you deliberately configure your local buffer value to be zero, you never stream the video you are watching directly to your computer screen from the Internet.

WHY NOT?

High throughput and low latency: stable, high-speed transport.

The overhead at the consumer's end is low, and recovering lost data locally is faster than a retransmission round trip.

Higher effective bandwidth usage means less time spent tying up resources.

2) UDP protocol

UDP is part of the TCP/IP protocol suite; it has long been used to transmit video and voice over the Internet.

UDP is part of the TCP/IP protocol suite.

Unlike TCP, the UDP protocol does not do any error correction, because, as mentioned, there is simply no need for error correction when it comes to video or voice.

There is a need, from a stability and quality point of view, for customers, and it means lower overhead for network operators.

The faster each transport transaction can conclude, the more "clients" you can serve over time.

3) Factors which impact the quality of online streaming video

Is your end-point consumer accessing the Internet over a noisy, lossy, or busy wireless network?

Then you can free up existing network resources.

4) How TCP works as a protocol

At a high level, TCP in the TCP/IP protocol suite is responsible for data transfer, while IP only does addressing.

TCP works by dividing the data into packets, which are then sent to the network.

When a packet (datagram) is sent to the destination (SYN), the destination notifies the source that it received the transmission (SYN-ACK), and the source finalizes the transfer by replying to this notification (ACK). If anything gets lost during the transmission, the data is resent.

Packets, again at a high level, have three fields: header, data field and footer. The footer holds the CRC, or error-checking data, which determines whether the transfer was successful or a TCP retransmit is needed.

This system "subverts" TCP for its own transport and can "control its own throughput", holding the congestion window as open as possible while still responding to actual congestion.

Not every lost packet should hold up transport.
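The opening post describes using round-trip time to tell congestion apart from random loss. One simple heuristic along those lines, offered as an illustrative assumption similar in spirit to delay-based schemes such as TCP Vegas and not necessarily what the referenced papers do: when a loss is detected, compare the current RTT with the minimum RTT seen so far. If queues have not built up, the loss is probably random and need not shrink the window. The class name and the 1.2 threshold are hypothetical.

```python
class LossClassifier:
    """Hypothetical RTT-based guess at whether a loss was caused by congestion."""

    def __init__(self, threshold: float = 1.2):
        self.min_rtt = float("inf")   # best-case RTT seen (approximates propagation delay)
        self.threshold = threshold    # RTT inflation tolerated before blaming congestion

    def on_rtt_sample(self, rtt_ms: float) -> None:
        self.min_rtt = min(self.min_rtt, rtt_ms)

    def loss_is_congestion(self, current_rtt_ms: float) -> bool:
        # Filling router queues inflate the RTT; a loss while the RTT is near its
        # minimum is more likely a random (e.g. wireless) loss.
        return current_rtt_ms > self.threshold * self.min_rtt


clf = LossClassifier()
for sample in (50.0, 52.0, 49.0):
    clf.on_rtt_sample(sample)
print(clf.loss_is_congestion(51.0))   # False: close to the 49 ms minimum
print(clf.loss_is_congestion(80.0))   # True: about 63% above the minimum
```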

There is a method of handling packets which arrive out of order at the destination, but there is no method to reconstruct packets which are lost during the transfer, or "on the fly" as you say. If data is lost during the transfer, it is lost.

It is resilient to loss, and loss is what causes throughput slowdowns.
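The algebra behind "resilient to loss" can be sketched with random linear network coding over GF(2): the sender adds redundancy by transmitting coded packets, each the XOR of a random subset of the original packets, with its coefficient vector carried in the header. The receiver can decode once it holds any k linearly independent coded packets, no matter which ones were lost or in what order they arrived, by Gaussian elimination. This is a simplified illustration in the spirit of the network coding papers in the references below; practical schemes typically use larger fields such as GF(2^8), and the function names are hypothetical.

```python
import random


def encode(packets, n_coded, rng):
    """Return n_coded (coefficients, payload) pairs; each payload is the XOR of a
    random non-empty subset of the k original packets (all of equal length)."""
    k, size = len(packets), len(packets[0])
    coded = []
    for _ in range(n_coded):
        coeffs = [rng.randint(0, 1) for _ in range(k)]
        if not any(coeffs):
            coeffs[rng.randrange(k)] = 1             # skip the useless all-zero combination
        payload = bytes(size)
        for c, p in zip(coeffs, packets):
            if c:
                payload = bytes(a ^ b for a, b in zip(payload, p))
        coded.append((coeffs, payload))              # coefficients travel in the header
    return coded


def decode(coded, k):
    """Gauss-Jordan elimination over GF(2). Returns the k originals, or None if the
    received packets do not yet span all k dimensions (rank < k)."""
    rows = [(list(c), p) for c, p in coded]
    for col in range(k):
        pivot = next((r for r in range(col, len(rows)) if rows[r][0][col]), None)
        if pivot is None:
            return None                              # wait for more coded packets
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pc, pp = rows[col]
        for r in range(len(rows)):
            if r != col and rows[r][0][col]:         # clear this column in every other row
                rc, rp = rows[r]
                rows[r] = ([x ^ y for x, y in zip(rc, pc)],
                           bytes(x ^ y for x, y in zip(rp, pp)))
    return [rows[i][1] for i in range(k)]


rng = random.Random(7)
originals = [b"pkt0", b"pkt1", b"pkt2", b"pkt3"]
coded = encode(originals, n_coded=8, rng=rng)   # 8 coded packets carry 4 originals
survivors = coded[2:]                           # pretend the first two were lost...
rng.shuffle(survivors)                          # ...and the rest arrived out of order
result = decode(survivors, k=4)                 # None would mean: rank < 4, keep listening
print(result)
```

Note that the recovery relies on redundancy the sender added up front, not on guessing a packet from its neighbours.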

If it is missing, it cannot be made up, unless the next and previous packets could somehow determine what the missing packet had in its data field. In practice, that would mean an algorithm that can recover anything by knowing just two parts of the whole, no matter how large or complex that whole might be.

And if such an algorithm existed, I bet streaming videos from the Internet would not be its first use.

GAMING lol

Stream games at high FPS into the browser.

references

www.stanford.edu...

wsl.stanford.edu...

hal.inria.fr...

dandelion-patch.mit.edu...

xploder

edit on 25-11-2012 by XPLodER because: (no reason given)


Originally posted by definity

Originally posted by Xoanon

reply to post by XPLodER

Hi XPlodER,

Where does the system get the data from to replace the lost data without a resend?

It can recalculate the lost data from the checksums of all the other packets sent, but it can only do this for a limited number of losses.
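The simplest form of this idea is a single parity packet: the sender transmits k data packets plus one packet that is their byte-wise XOR, and the receiver can rebuild any one missing packet by XOR-ing everything that did arrive. It also shows the limit mentioned above: with one parity packet, two losses in the same group cannot be repaired. This is a minimal illustration with equal-length packets and hypothetical function names, not the specific code used in the referenced papers.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def add_parity(packets):
    """Append one parity packet: the byte-wise XOR of all (equal-length) data packets."""
    return packets + [reduce(xor_bytes, packets)]


def recover(received):
    """received: data packets followed by the parity packet, with at most one None
    marking a lost packet. Returns the data packets."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("single parity can only repair one lost packet per group")
    if missing:
        present = [p for p in received if p is not None]
        received = list(received)
        received[missing[0]] = reduce(xor_bytes, present)   # XOR of the rest = the lost one
    return received[:-1]                                     # drop the parity packet


group = add_parity([b"AAAA", b"BBBB", b"CCCC"])
group[1] = None                     # packet 1 lost in transit
print(recover(group))               # [b'AAAA', b'BBBB', b'CCCC']
```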

Here what a TCP packet looks like.

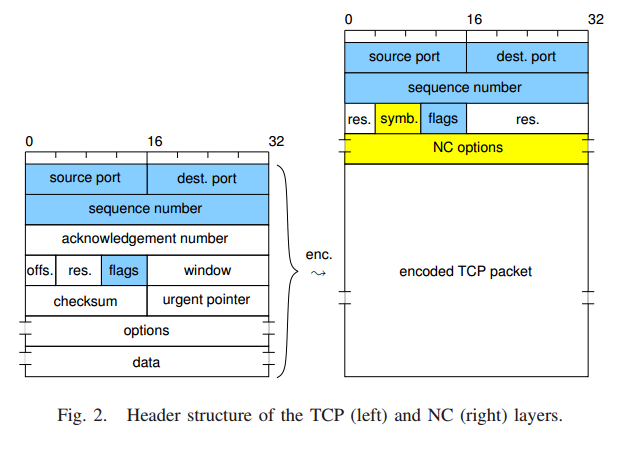

here is the difference, in the MDS version

A. Header Structure

The NC layer protocol reuses parts of the TCP header without any modification. To reduce protocol overhead, these common header parts can be stripped off the TCP header before encoding of the TCP segments. They can be easily reconstructed at the receiver side by simple extraction from the NC header. The common and stripped-off header parts are source port, destination port, all control flags except ACK, and sequence number. See notes about the latter one in Section IIIB. The reused and stripped-off header fields are blue shaded in Figure 2.

The remaining TCP header fields are not required by the NC layer. Thus, they are not part of the NC layer header and become part of the encoded TCP segment, i.e. the NC layer payload data. The TCP header fields which are encoded include acknowledgment number, offset, reserved, window, checksum, urgent pointer and options. They are non-shaded on the left-hand side of Figure 2.
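The split described in the two quoted paragraphs can be restated as a tiny split/rebuild routine. This is a hypothetical sketch, not code from the paper: the field names are made up, the ACK flag is assumed to travel with the encoded part (the quote only says all control flags except ACK are stripped), and the special handling of the sequence number referred to in Section IIIB is ignored.

```python
STRIPPED = ("src_port", "dst_port", "seq")   # reused by the NC header, stripped before encoding


def split_header(header: dict) -> tuple:
    """Split a TCP header (hypothetical field names) into NC-header and encoded parts."""
    nc_part = {k: header[k] for k in STRIPPED}
    nc_part["flags"] = set(header["flags"]) - {"ACK"}   # all control flags except ACK
    encoded = {k: v for k, v in header.items() if k not in STRIPPED and k != "flags"}
    encoded["ack_flag"] = "ACK" in header["flags"]      # assumption: ACK flag is encoded
    return nc_part, encoded


def rebuild_header(nc_part: dict, encoded: dict) -> dict:
    """Receiver side: re-create the original header from the NC header and decoded data."""
    header = {k: v for k, v in encoded.items() if k != "ack_flag"}
    header.update({k: nc_part[k] for k in STRIPPED})
    header["flags"] = set(nc_part["flags"]) | ({"ACK"} if encoded["ack_flag"] else set())
    return header


tcp_header = {"src_port": 5000, "dst_port": 80, "seq": 1, "flags": {"ACK", "PSH"},
              "ack": 42, "offset": 5, "reserved": 0, "window": 65535,
              "checksum": 0, "urgent_ptr": 0, "options": b""}
nc, enc = split_header(tcp_header)
print(nc)    # {'src_port': 5000, 'dst_port': 80, 'seq': 1, 'flags': {'PSH'}}
assert rebuild_header(nc, enc) == tcp_header
```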

Besides the reused TCP header fields, the NC layer adds two additional header fields, i.e. symbol indicator (symb.) and NC options. The new fields are yellow shaded on the right-hand side of Figure 2. The symbol indicator is responsible for determining the position of a segment within an MDS codeword; for details see the following section. The NC options are not used in the current basic version of our protocol. They might be used to signal adaptations of code rate or speculative ACK threshold in later versions.

hal.inria.fr...
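An MDS (maximum distance separable) code is one where any k of the n coded symbols are enough to recover the k original symbols, which is why each segment needs a symbol indicator giving its position in the codeword. Reed-Solomon codes are the classic MDS example. A minimal sketch, assuming a systematic Reed-Solomon code in evaluation form over the prime field GF(257) (the paper's actual construction, field and parameters may differ): the k data symbols are the values of a polynomial at positions 1..k, the redundancy symbols are its values at positions k+1..n, and Lagrange interpolation recovers the data from any k (position, value) pairs. The function names are hypothetical.

```python
P = 257  # prime modulus: every byte value 0..255 is a field element


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P   # divide via Fermat inverse
    return total


def rs_encode(data, n):
    """Systematic encoding: the symbol at position x is the polynomial's value at x."""
    points = list(zip(range(1, len(data) + 1), data))   # data = values at x = 1..k
    return [(x, lagrange_eval(points, x)) for x in range(1, n + 1)]


def rs_decode(received, k):
    """Recover the k data symbols from ANY k received (position, value) pairs."""
    if len(received) < k:
        raise ValueError("need at least k symbols")
    points = received[:k]
    return [lagrange_eval(points, x) for x in range(1, k + 1)]


codeword = rs_encode([72, 105, 33], n=5)              # k = 3 data symbols, 2 redundant
survivors = [codeword[4], codeword[1], codeword[3]]   # positions 5, 2, 4 arrived
print(rs_decode(survivors, k=3))                      # [72, 105, 33]
```

One wrinkle of this shortcut: redundancy symbols can take the value 256, which does not fit in a byte, so real implementations usually work in GF(2^8) instead.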

xploder

