this is cool, ever get really bad connections from wifi?

this could remove some of your stress,

A team of researchers from MIT, Caltech, Harvard, and other universities in Europe, have devised a way of boosting the performance of wireless networks by up to 10 times — without increasing transmission power, adding more base stations, or using more wireless spectrum. This is expected to have huge repercussions on the performance of LTE and WiFi networks.

In essence, the innovation — called coded TCP — makes packet loss completely disappear

www.extremetech.com...

the packet loss is effectively hidden, and a missing packet doesn't hold up everything queued behind it,

its pretty cool: in software, groups of packets are combined and sent as algebraic equations,

so the receiver can solve for any missing packet's data without submitting a request for a resend.

how cool would it be if hot spots were super fast and all it took was a software download

To be honest, these improvements aren’t all that surprising. TCP was designed for wired networks, where lost packets are generally a sign of congestion. Wireless networks are in desperate need for forward error correction (FEC), and that’s exactly what coded TCP provides.
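To make the "coded with algebra" idea concrete, here is a minimal sketch of sending random linear combinations of packets and solving for the originals at the receiver. It is a toy under stated assumptions, not the coded TCP implementation: it works over GF(2) (plain XOR) rather than the larger field the researchers use, the loss pattern is a fixed "every third packet vanishes", and the names `encode_one`, `decode` and `transfer` are made up for illustration.

```python
import random

def xor_bytes(a, b):
    """XOR two equal-length byte strings (this is addition in GF(2))."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode_one(packets, rng):
    """Return (coeffs, payload): a random XOR combination of the packets."""
    k = len(packets)
    coeffs = [rng.randint(0, 1) for _ in range(k)]
    if not any(coeffs):                    # skip the useless all-zero combination
        coeffs[rng.randrange(k)] = 1
    payload = bytes(len(packets[0]))       # all-zero payload to start from
    for c, p in zip(coeffs, packets):
        if c:
            payload = xor_bytes(payload, p)
    return coeffs, payload

def decode(received, k):
    """Gauss-Jordan elimination over GF(2).

    Returns the k original packets, or None if the combinations received
    so far are not yet enough to solve for all of them.
    """
    rows = [(list(c), p) for c, p in received]
    for col in range(k):
        pivot = next((i for i in range(col, len(rows)) if rows[i][0][col]), None)
        if pivot is None:
            return None
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_coeffs, pivot_payload = rows[col]
        for i in range(len(rows)):
            if i != col and rows[i][0][col]:
                c, p = rows[i]
                rows[i] = ([a ^ b for a, b in zip(c, pivot_coeffs)],
                           xor_bytes(p, pivot_payload))
    return [rows[i][1] for i in range(k)]

def transfer(packets, drop_every=3, seed=0):
    """Keep sending coded packets until the receiver can decode.

    Every drop_every-th packet is lost (drop_every must be at least 2).
    No resend of a specific packet is ever requested.
    """
    rng = random.Random(seed)
    received, sent = [], 0
    while True:
        coded = encode_one(packets, rng)
        sent += 1
        if sent % drop_every == 0:         # simulated loss: never arrives
            continue
        received.append(coded)
        result = decode(received, len(packets))
        if result is not None:
            return result, sent

original = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
recovered, sent = transfer(original)
print(recovered == original, "after", sent, "coded packets sent")
```

The point of the sketch is that the receiver never needs to know which packet was lost: any sufficiently large set of independent combinations will do, so the sender just keeps the equations coming.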

anything that speeds up content with no change in gear is all good

xploder

edit on 15-11-2012 by XPLodER because: (no reason given)

reply to post by XPLodER

That will be interesting to see if they implement something like this. It would be nice to see tech increase in speed while still running in the same bandwidth. What will be interesting if they do: will companies charge more for the speed increase even though they are still using the same bandwidth?

Grim

I would imagine faster ADSL/cable/Cat5 networking would also be able to achieve this; this packet coding isn't limited to wireless, I think.

reply to post by amraks

You should take a look at the new fiber-optic network tech developed by Bangor University scientists. They've boosted bandwidth speeds to at least 20Gbps, which is 2000 times faster than the average UK broadband connection.

Bangor University Scientists Boost Fibre Speeds To 20Gbps

Crazy thing is, I know how they're going about it. At least I think. A warrant officer (someone who is ridiculously proficient at their job) taught a few other Marines and myself how to do such a thing. I could be wrong of course, but when I know more, I will be able to specify if my method is the same. But I'm betting it's not the same.

Originally posted by Raelsatu

reply to post by amraks

You should take a look at the new fiber-optic network tech developed by Bangor University scientists. They've boosted bandwidth speeds to at least 20Gbps, which is 2000 times faster than the average UK broadband connection.

Bangor University Scientists Boost Fibre Speeds To 20Gbps

They will do this on the backhauls, I reckon. Same with this wireless software; it could be a good backup/temporary gateway at peak times.

I know where I am in Tasmania we suffer from bandwidth problems even though we have 2 fiber cables.

The cheaper way would be to use wireless over to the mainland.

edit on 15-11-2012 by amraks because: (no reason given)

Originally posted by Grimmley

reply to post by XPLodER

That will be interesting to see if they implement something like this. It would be nice to see tech increase in speed while still running in the same bandwidth. What will be interesting if they do: will companies charge more for the speed increase even though they are still using the same bandwidth?

Grim

the isps in my country charge by data cap, so i guess it would use less "data" and be faster,

ie stream a movie in high quality instead of lower rates using the same amount of data overall,

or sound streamed at higher quality etc.

the "spectrum crunch" will be less of a problem and low latency would be easier to achieve.

so if anything it should reduce the cost as this will lower overall demand (fewer resent packets)

but people would use more data because you can download faster, so.............

would make a great gamer network

xploder

edit on 15-11-2012 by XPLodER because: (no reason given)

Originally posted by amraks

I would imagine faster ADSL/cable/Cat5 networking would also be able to achieve this; this packet coding isn't limited to wireless, I think.

in theory this could benefit "wired" networks and backbones,

it works best "end to end"

it would be cool if this becomes a standard for tcp/ip

xploder

Originally posted by amraks

They will do this on the backhauls, I reckon. Same with this wireless software; it could be a good backup/temporary gateway at peak times.

What do you mean? You think this will only be a backup? As far as I can tell they plan on implementing this into the network infrastructure so that the average user will have these speeds.

Have you seen the other tech that allows for 2.5 Tbps speeds using "twisted light"?

"Twisted Light" Paves the Way for Ultra-Fast Internet

reply to post by Raelsatu

I should have stated that's what our government would use it for instead of running more cables.

Originally posted by amraks

I would imagine faster ADSL/cable/Cat5 networking would also be able to achieve this; this packet coding isn't limited to wireless, I think.

transport layer,

improvements at the "transport layer" yield vast improvements,

prioritising packets "on the fly" would allow for forward error correction,

better quality sound and video, lower latency games, and less "load" on the infrastructure backbone.

streaming movies to your phone "on the move"

high frame rate games from your browser!!!!!!!!!!

xploder

Originally posted by amraks

reply to post by Raelsatu

I should have stated that's what our government would use it for instead of running more cables.

So you're saying that only the government will use the high-speed cables & that people will use the wi-fi upgrade?

OFDM, a method for encoding digital data, has the advantage that it is already widely used in other settings. OOFDM technology could be used to offer faster speeds over currently installed fibre networks, making it more cost-effective than systems which would involve modifying the encoding and decoding hardware or the fibre-optic cables themselves, according to the university.

Apparently no new cabling needs to be run for these 20Gbps speeds, and it costs about the same as current internet pricing. I think the only thing that will need to be distributed is the transceiver/receiver modules. Anyway, broadband speeds will naturally continue to rise as the demand does. Eventually people will have insane bandwidth to accommodate the metaverse, interactive virtual reality, & massive multiplayer platforms, among other facets.

Originally posted by Raelsatu

Originally posted by amraks

They will do this on the backhauls, I reckon. Same with this wireless software; it could be a good backup/temporary gateway at peak times.

What do you mean? You think this will only be a backup? As far as I can tell they plan on implementing this into the network infrastructure so that the average user will have these speeds.

Have you seen the other tech that allows for 2.5 Tbps speeds using "twisted light"?

"Twisted Light" Paves the Way for Ultra-Fast Internet

very interesting ideas, and would be very useful for future space networks etc.

the physics behind it is very cool too,

Orbital angular momentum, when applied to fiber-optic and potentially wi-fi data streams, takes the principle of splitting different streams to different polarizations and alters it so that rather than having different polarizations, the data streams are "twisted" together, packed in more tightly, and can therefore carry more stuff. "The idea is not to create light waves wiggling in different directions but rather with different amounts of twist, like screws with different numbers of threads," says the BBC.

www.escapistmagazine.com...

from your link

this would only apply to "optical" networks and devices,

and sounds like an excellent advancement: better service over the same hardware

xploder

reply to post by Raelsatu

I was implying that with our new NBN in Australia, the link from Tas to the mainland is only via fiber cable.

They will use wireless tech to overcome this when they reach maximum capacity for bandwidth on that fiber.

should save them the cost of the 2 more fiber cables we are currently using.

A fiber link would be nice, probably with my next hardware upgrade. A quick check for current fiber service pricing seems to indicate that the providers are still building infrastructure and not in a competitive pricing war yet. Companies like Comcast and Verizon FIOS will eventually cause an extinction level event for everything except last mile mobile device users but who knows when? This wireless algo doesn't change the peak bandwidth just improves speed in areas that previously had poor reception doesn't it?

Originally posted by XPLodER

improvements at the "transport layer" yield vast improvements,

prioritising packets "on the fly" would allow for forward error correction

xploder

Considering the high percentage of overhead in the OSI, I would think this would be a good area to investigate.

Originally posted by Cauliflower

A fiber link would be nice, probably with my next hardware upgrade. A quick check for current fiber service pricing seems to indicate that the providers are still building infrastructure and not in a competitive pricing war yet.

i would expect that most providers will offer more data and faster speeds at the same price, we may see "unlimited" make a comeback

Companies like Comcast and Verizon FIOS will eventually cause an extinction level event for everything except last mile mobile device users but who knows when?

you still require the physical hardware infrastructure to support the "last mile" providers.

This wireless algo doesn't change the peak bandwidth just improves speed in areas that previously had poor reception doesn't it?

nothing can help when you have "poor reception": no-signal and low-signal conditions cannot be "overcome" by this transport. the efficiency comes when lost packets don't slow down processing,

you still require "most" of the packets to arrive in some semblance of order, and it does take time to "account" for lost packets, but it can be done at the decoder end for very little overhead, and in short spaces of time.

in a hotel or coffee shop or train or other lossy network you would see vast improvements,

whereas at home by yourself it would only yield very small increases in throughput.

this is designed for "end to end" transport, and takes into account the differences between wired and wireless networks by changing the normal behaviour of tcp/ip to be "more tolerant of loss" under wireless network conditions
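A back-of-envelope sketch of why the gain depends so much on how lossy the network is. It uses the well-known Mathis approximation for ordinary loss-based TCP (throughput roughly proportional to MSS / (RTT * sqrt(loss))) and compares it with the best case for a transport that does not back off on loss. The link speed, round-trip time and loss rates are made-up illustrative numbers, not measurements from the research, and the function names are hypothetical.

```python
import math

def tcp_throughput_bps(mss_bytes, rtt_s, loss, link_bps):
    """Approximate steady-state throughput of loss-based TCP
    (Mathis approximation), capped at the raw link rate."""
    estimate = (mss_bytes * 8 / rtt_s) * math.sqrt(1.5) / math.sqrt(loss)
    return min(estimate, link_bps)

def loss_tolerant_ceiling_bps(link_bps, loss):
    """Best case for a transport that does not slow down on loss:
    every packet that survives the link carries useful data."""
    return link_bps * (1.0 - loss)

# Illustrative assumptions: 20 Mbit/s link, 100 ms RTT, 1460-byte segments.
LINK, RTT, MSS = 20e6, 0.100, 1460
for name, loss in [("quiet home network", 0.0001), ("busy coffee shop", 0.02)]:
    plain = tcp_throughput_bps(MSS, RTT, loss, LINK)
    ceiling = loss_tolerant_ceiling_bps(LINK, loss)
    print(f"{name}: plain TCP ~{plain / 1e6:.1f} Mbit/s, "
          f"ceiling ~{ceiling / 1e6:.1f} Mbit/s")
```

With these assumed numbers, plain TCP is already close to the link rate on the quiet network, so there is little left to gain, while at 2% loss it falls to roughly 1 Mbit/s even though the link could still deliver almost all of its capacity.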

it is good we have multiple advances across wire, wireless, fibre and WIFI.

and most of it is cross compatible with existing infrastructure,

this should yield increased quality across the whole network,

even people on dial-up could use a similar forward error correction system (in theory) and see improvements in throughput.

xploder

Originally posted by explorer14

Originally posted by XPLodER

improvements at the "transport layer" yield vast improvements,

prioritising packets "on the fly" would allow for forward error correction

xploder

Considering the high percentage of overhead in the OSI, I would think this would be a good area to investigate.

so far i have read about,

sound/music with bit rate adjusting "on the fly" depending on network conditions

video/streaming with less data costs or higher quality for same bit rate,

now WIFI using a similar method shaped for noisy/busy network conditions

i know on a telecommunications level the local cell sites could use this to increase coverage and reliability, "possibly" using existing hardware with simple software upgrades that provide better service without more towers.

when you decrease redundant traffic across a whole network, you get a lower overhead with less "load", meaning faster speeds, which in turn also increases throughput.

xploder

edit on 15-11-2012 by XPLodER because: (no reason given)

You want to know why they haven't used more of the wireless spectrum? huh? because its interfering with the way we think. Its like mind control, but we're too stupid to understand/figure out what is being done to us. Plus a way to speed up the phones is to stop putting #ty products out year after year. Have you ever noticed that the old bar phones have so much better signal strength than the "most powerful, latest phones" even on the same Goddamn network? See for yourselves. They are just putting hype on # so they can milk the # out of your wallet

edit on 11/12/2011 by sparky8580 because: grammar

reply to post by explorer14

here is the mit pdf, for nc tcp/ip

www.mit.edu...

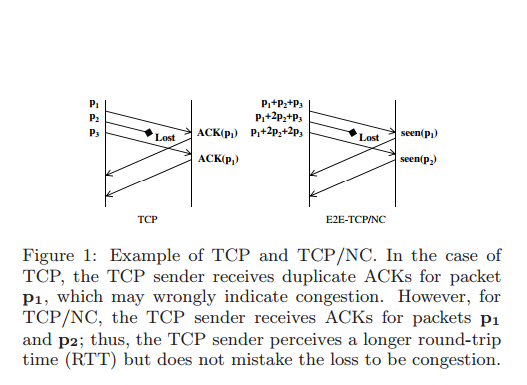

We briefly note the overhead associated with network coding. The main overhead associated with network coding can be considered in two parts: 1) the coding vector (or coefficients) that has to be included in the header; 2) the encoding/decoding complexity. For receiver to decode a network coded packet, the packet needs to indicate the coding coefficients used to generate the linear combination of the original data packets. The overhead associated with the coefficients depend on the field size used for coding as well as the number of original packets combined. It has been shown that even a very small field size of F256 (i.e. 8 bits = 1 byte per coefficient) can provide a good performance [11, 19]. Therefore, even if we combine 50 original packets, the coding coefficients amount to 50 bytes over all. Note that a packet is typically around 1500 bytes. Therefore, the overhead associated with coding vector is not substantial.
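The arithmetic in that excerpt can be checked in a few lines. This is only a worked version of the numbers quoted above (one coefficient per combined packet, 8 bits per coefficient, 1500-byte packets); the function name `coding_vector_overhead` is made up for illustration.

```python
def coding_vector_overhead(num_packets, field_bits=8, packet_bytes=1500):
    """Return (header bytes for the coefficients, fraction of the packet).

    One coefficient is carried per original packet in the combination,
    and each coefficient takes field_bits bits (8 bits for a field of size 256).
    """
    coeff_bytes = num_packets * field_bits / 8
    return coeff_bytes, coeff_bytes / packet_bytes

coeff_bytes, fraction = coding_vector_overhead(50)
print(f"{coeff_bytes:.0f} bytes, {fraction:.1%} of the packet")
# prints "50 bytes, 3.3% of the packet"
```

So even when 50 packets are combined, the coefficients cost about 3% of each packet, which is what the paper means by "not substantial".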

low overheads
