reply to post by SassyCat

Isn't it funny how programmers understand these things, but it's always the people who aren't programmers, and don't understand what it means to program, who push things like this?

I notice that most of the time, those who treat science as the be-all and end-all of everything aren't the scientists themselves, but people who have nothing to do with science. People often forget that if there were no scientists to observe and understand it, there wouldn't be any science.

Truth is, consciousness is needed for free will, and free will is needed for intelligence. Consciousness creates and understands logic; there is no logic (programming) which can create consciousness.

I think people are going to end up fooled by AI one day, because it is possible to mimic things, but I guess only programmers will realize it's just a bunch of patterns and logic.

Originally posted by king9072

Originally posted by justsomeboreddude

So how did they evolve? Do these robots have the ability to reproduce?

Hey, it helps when you read the article in its entirety. Those who survived had their programming passed on to all the new robots. The robots were then sent back into the pen of food and poison, and those who survived became the next generation. Repeat that 50 times, and they found that the robots started working in teams and even intentionally putting other robots in danger to further their own survival.

Sounds like the human experience!

[atsimg]http://files.abovetopsecret.com/images/member/9fa02105cdf9.jpg[/atsimg]

Originally posted by EverythingYouKnowIsWrong

Originally posted by badmedia

However, it is impossible that these AI are actually "learning" anything.

I understand your arguments in the rest of your post and agree that without a consciousness a machine cannot necessarily be considered intelligent, but I do believe what these machines are doing could properly be considered learning. The robots are gaining information about their environment through experience and using that information to modify their future behavior, and if that's not learning I don't know what is.

The program swapping between generations seems like something that could be automated without too much trouble, so the only reason the robots aren't their own AI species is because a human wrote the program and put it together. That's a pretty big obstacle to overcome, but definitely doesn't seem impossible. The more I think about it the more it seems frighteningly close to AI...

Well for me, learning means understanding. And without consciousness they can't have understanding. I don't consider processing more and more information based on the set patterns to be "learning", but I can see why you would think it was.

I think of things in 2 different realms. There is creation/universe. The universe works off action and reaction. This is the realm of logic. If this happens, then this happens. The laws of physics for example are logical. This is the realm of science, as with logic these things are repeatable in a lab over and over.

The other realm is based on understanding and reasoning. And that is the realm of consciousness, philosophy, spirituality and so forth. Science and logic are just not equipped to deal with this realm. This is the realm of the scientist, rather than the science. As the scientist is required in order to understand and reason with the logic of science.

So, let's take a look at Google. Any program will do, but we are all familiar with Google. Googlebot goes out and finds new websites and content. This new information is put into the database. Now when you do a Google search, the search results have changed and a new site is "learned". But it didn't really learn anything new, it just has a different dataset from which it operates. See what I mean? The logic Google uses didn't change, it didn't learn to show search results in a different way or with different logic, it's just that the amount of data it uses has changed.

Does the programming behind this website learn new things as we enter new threads? No, only the data it operates on changes, aka only its memory changes.

I came up with lots of ways to make AI appear intelligent with logic. Even a way to give different AI different personalities. It was similar to D&D, where you roll random numbers and then base the characteristics/tendencies of the AI on those random numbers. Thought I was well on my way to creating real intelligence. Of course, that was until I realized that without consciousness it is nothing. After that, I completely abandoned it and had no desire to create AI anymore; I haven't worked on it since. I realized I would only be recreating that which is already created, and that I would eventually have to put my own consciousness into it (or someone else's).

Don't get me wrong, while this limitation of AI is true, what AI can do will be nothing short of miraculous in many cases. The ability to drive cars, and do many things that humans do, will all be possible in the future. Because of their speed and processing power they will be able to do things faster, better and more consistently than humans. But without its own consciousness, it will forever be a slave to the logic given to it by something which is conscious.

And to the person who said robots are going to be it in the future. Nah, no way. The only way that would be possible would be if you could somehow put your own consciousness into the robot. And that is actually something that is being worked on. Not sure how they are going to be able to do it, because if they put someone's brain in there, then the brain becomes a bottleneck. But people who do understand what I am talking about in terms of AI are actually working on exactly that.

Here's a little documentary about it. But as someone else said, this is actually already done. Our bodies are a much more advanced biotechnology, cells are just nanobots, and DNA is just the code our bodies run on. Consciousness is separate from creation, so I personally think this stuff is silly and pointless. But I guess people who are scared of dying want to try and find a way to live forever.

Google Video Link |

Originally posted by ModernAcademia

Originally posted by justsomeboreddude

This whole thing is a hoax. A program written by a human does not evolve unless the human changes the programming, which is not evolution.

This is incorrect

There's something called adaptive software

take voice recognition for example

software where you speak and it types a Word doc

the software learns your speech patterns and/or accent and other variables.

Voice recognition software doesn't learn anything. It simply associates the spelling of a word with a file of sound data; you have basically told it, "when you hear this sound, type this word". You could just as easily "train" it to type "dog" when you said "monkey". It is no different than building a mapping table when you do data conversion. You associate one value with another, and then the code looks up the value you give it and replaces it with the value you have told it you want.
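To make the mapping-table analogy concrete, here is a rough Python sketch. It only mirrors the analogy made in this post and is not how any actual speech product is built; the sound IDs, `transcribe` and `train` are made-up names for illustration.

```python
# Hypothetical lookup table: sound "fingerprints" mapped to words.
# The IDs are invented placeholders, not real audio data.
sound_to_word = {"snd_001": "dog", "snd_002": "monkey"}


def transcribe(sound_ids, table):
    """Replace each sound id with whatever word the table assigns to it."""
    return [table.get(sound_id, "<unknown>") for sound_id in sound_ids]


def train(table, sound_id, word):
    """'Training' in this analogy just overwrites one table entry.

    The lookup logic in transcribe() never changes, only the data does.
    """
    updated = dict(table)
    updated[sound_id] = word
    return updated


if __name__ == "__main__":
    print(transcribe(["snd_002"], sound_to_word))
    retrained = train(sound_to_word, "snd_002", "dog")
    print(transcribe(["snd_002"], retrained))
```

In this sketch, saying "monkey" can be made to type "dog" by changing one entry, which is the point being argued: the data changes, the code does not.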

There is no way this simple device is going to be able to start placing value on what is good or bad beyond what the programmer placed in the code or in some table. You would need a supercomputer to make true decisions, and even those decisions would be limited by whatever programming the machine was using to "make decisions". It is impossible that a device this simple could have the computing power to be "aware" of its surroundings. In fact, no machine can be aware beyond what awareness it was programmed to have. A machine can't place value on something; it can only make really quick calculations based on the formula you programmed it to use.

Some people will believe anything.

[edit on 5/20/2009 by justsomeboreddude]

Originally posted by mahtoosacks

Originally posted by justsomeboreddude

reply to post by king9072

This whole thing is a hoax. A program written by a human does not evolve unless the human changes the programming, which is not evolution.

nah man sorry but you are wrong on this.

the ai adapts to its surroundings. just like in halo. those creatures running around are "thinking" and the code updates itself.

they dont run around and whichever ones survive get a tune up. they update each other and run it again. technically it is evolution, but obviously not their bodies, just their minds

there was another interesting thing i found, but it wasnt for evolving ai. they put a bunch of goldfish in aquariums that had sensors that would move the whole fish bowl as the fish swam in the bowl. they were allowed to roam an area with wheels, 1 goldfish per tank with about 5 goldfish all together.

they actually adapted to this and started to move in a "school" with their external selves.

do some research before you start hating. i dont care if it goes against your beliefs or not.

I am pretty sure I wasn't "hating". I was just voicing my opinion. Personally, I couldn't care less whether or not this is true, so it is not worth "hating" over. Maybe you should do some learning before you keep believing everything you read on a website.

Actually, if you read the article all they are doing is going down a path to eliminate the random code that the scientists decided was bad. This is how it works.

1. You start with random code.

2. A human starts eliminating the ones whose code produced results that the human deemed undesirable.

3. A human combines the new set of less random code and lets this new code run in the robots. These obviously make better "decisions" because a human eliminated the code of the ones that didn't produce desired results.

4. Repeat over and over, thus continually lowering the randomness with each successive run.

This would be no different than saying computers evolved to pick the best number. You would start out with a full set of numbers, then a human (or human-developed code) would decide that all negative numbers are bad and so they get removed, and on and on until you gave so many criteria that the only numbers the computers could pick from would be a set that the programmer deemed desirable.

[edit on 5/20/2009 by justsomeboreddude]

Originally posted by justsomeboreddude

reply to post by king9072

This whole thing is a hoax. A program written by a human does not evolve unless the human changes the programming, which is not evolution.

Huh? Check this out.....

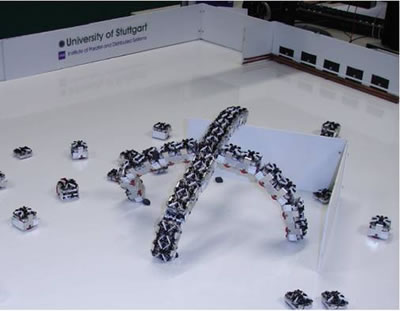

SYMBRION - Symbiotic Evolutionary Robot Organisms is a project intended to investigate and enable adaptation and evolution of multiple robot systems. A swarm of robots will create what amounts to a new, artificial "life-form."

The SYMBRION project is trying to see if robots in swarms could evolve new behaviors, and become self-healing and self-protecting. Such robots could reprogram themselves without the need for direct supervision by human beings.

Cooperative swarm of bots.

Courtesy: Technovelgy

The REPLICATOR Project covers an intelligent, reconfigurable and adaptable “carrier” of sensors (sensors network).

...robotic organisms become self-configuring, self-healing, self-optimizing and self-protecting from both hardware and software perspectives. This leads not only to extremely adaptive, evolve-able and scalable robotic systems, but also enables robot organisms to reprogram themselves without human supervision and for new, previously unforeseen, functionality to emerge.

So, do you still think this is all a hoax?

Cheers!

Originally posted by justsomeboreddude

reply to post by king9072

This whole thing is a hoax. A program written by a human does not evolve unless the human changes the programming, which is not evolution.

I tend to agree. Otherwise, if this phenomenon is real, it hints at something other than mechanics causing these obligatory 'pact'-type traits.

And I doubt that.

Passing the coding down from one to another still will not cause single-function mechanical items to know right from wrong, which is needed for martyrdom or help in any form.

It would still be: gobble up food, avoid poisons. If no coding were present to cater to looking after 'kin' or 'close bots' and prevent them from hitting poisons, it would result in nothing more than random activity, as the Game of Life shows.

If this was mentioned in the article, I admit I didn't read it... yet.

just wanted to peruse the thread first.

May edit my post later!!

Edited for spelling and faulty sentences...

[edit on 20/5/2009 by badw0lf]

I've found a paper describing some of their early experiments; here's a technical description of their methodology:

Evolutionary Conditions for the Emergence of Communication in Robots (PDF)

Neural Controller

The control system of each robot consisted of a feed-forward neural network with ten input and three output neurons. Each input neuron was connected to every output neuron with a synaptic weight representing the strength of the connection (Figure S1). One of the input neurons was devoted to the sensing of food and the other to the sensing of poison. Once a robot had detected the food or poison source, the corresponding neuron was set to 1. This value decayed to 0 by a factor of 0.95 every 50 ms and thereby provided a short-term memory even after the robot’s sensors were no longer in contact with the gray and black paper circles placed below the food and poison. The remaining eight neurons were used for encoding the 360° visual-input image, which was divided into four sections of 90° each. For each section, the average of the blue and red channels was calculated and normalized within the range of 0 and 1 such that one neural input was used for the blue and one for the red value. The activation of each of the output neurons was computed as the sum of all inputs multiplied by the weight of the connection and passed through the continuous tanh(x) function (i.e., their output was between -1 and 1).

Two of the three output neurons were used for controlling the two tracks, where the output value of each neuron gave the direction of rotation (forward if > 0 and backward if < 0) and velocity (the absolute value) of one of the two tracks. The third output neuron determined whether to emit blue light; such was the case if the output was greater than 0.
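For anyone who wants to see the controller described above as code, here is a minimal Python sketch written from that description alone. It is not the authors' implementation; the function names and the order of the ten inputs are assumptions made for illustration.

```python
import math

N_INPUTS, N_OUTPUTS = 10, 3


def controller_step(inputs, weights):
    """One forward pass of the 10-input, 3-output network.

    inputs:  10 floats (food, poison, and 8 vision values; order assumed).
    weights: weights[i][j] is the synaptic weight from input i to output j.
    """
    outputs = []
    for j in range(N_OUTPUTS):
        total = sum(inputs[i] * weights[i][j] for i in range(N_INPUTS))
        outputs.append(math.tanh(total))  # squashes the sum into (-1, 1)
    left, right, light = outputs
    return {
        "left_track": left,     # sign gives direction, magnitude gives speed
        "right_track": right,
        "emit_blue": light > 0,  # blue light is on only for positive output
    }


def decay_memory(value, elapsed_ms):
    """Food/poison neuron memory: multiplied by 0.95 for every 50 ms elapsed."""
    return value * 0.95 ** (elapsed_ms // 50)


if __name__ == "__main__":
    zero_weights = [[0.0] * N_OUTPUTS for _ in range(N_INPUTS)]
    print(controller_step([1.0] * N_INPUTS, zero_weights))
    print(decay_memory(1.0, 100))
```

Note that there are no hidden layers here: each output is just a weighted sum of the inputs passed through tanh, so all of a robot's "behavior" lives in the 30 weights.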

The 30 genes of an individual each controlled the synaptic weights of one of the 30 neural connections. Each synaptic weight was encoded in 8 bits, giving 256 values that were mapped onto the interval [-1, 1]. The total length of the genetic string of an individual was therefore 8 bits x 10 input neurons x 3 output neurons (i.e., 240 bits).
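The genome encoding can be sketched in a few lines of Python. The excerpt says each 8-bit gene gives 256 values covering a range from -1 to 1 but does not give the exact mapping, so the evenly spaced (linear) mapping below is an assumption, as is the name `decode_genome`.

```python
BITS_PER_GENE = 8
N_GENES = 30  # 10 input neurons x 3 output neurons


def decode_genome(bits):
    """Turn a 240-character string of '0'/'1' into 30 weights in [-1, 1].

    Assumption: the 256 levels are spaced evenly, so level 0 maps to -1.0
    and level 255 maps to 1.0.
    """
    if len(bits) != BITS_PER_GENE * N_GENES:
        raise ValueError("genome must be exactly 240 bits long")
    weights = []
    for gene in range(N_GENES):
        chunk = bits[gene * BITS_PER_GENE:(gene + 1) * BITS_PER_GENE]
        level = int(chunk, 2)  # integer between 0 and 255
        weights.append(-1.0 + 2.0 * level / 255)
    return weights


if __name__ == "__main__":
    genome = "0" * 8 + "1" * 8 + "0" * 224
    print(decode_genome(genome)[:2])
```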

Selection and Recombination

For each of the four treatments, selection experiments were repeated in 20 independent selection lines (replicates), each consisting of 100 colonies of 10 robots. In the individual-level selection treatment, we selected the best 20% of individuals from the population of 1000 robots (Figure S2). This selected pool of 200 robots was used for creating the new generation of robots. To form colonies of related individuals r = 1, we randomly created (with replacement) 100 pairs of robots. A crossover operator was applied to their genomes with a probability of 0.05 at a randomly chosen point, and one of the two newly formed genomes was randomly selected and subjected to mutation (probability of mutation 0.01 for each of the 240 bits) [22]. The other genome was discarded. This procedure led to the formation of 100 new genomes that were each cloned ten times to construct 100 new colonies of 10 identical robots. To form colonies of unrelated individuals r = 0, we followed the same procedure but created 1000 pairs of robots from the selected pool of 200 robots. The 1000 new robots were randomly distributed among the 100 new colonies.

In the colony-level selection treatment, we followed exactly the same procedure as in the individual-level selection treatment, but the selected pool of 200 robots was formed with all of the robots from the best 20% of the 100 colonies (Figure S2).
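Here is a rough Python sketch of one generation of the individual-level selection step for related colonies (r = 1), following the numbers quoted above: best 20%, crossover probability 0.05, mutation probability 0.01 per bit, 100 colonies of 10 clones. It is not the authors' code. Treating mutation as a bit flip and using single-point crossover that swaps tails are assumptions, and all function names are made up.

```python
import random

GENOME_BITS = 240


def crossover(a, b, rng, p_cross=0.05):
    """With probability p_cross, swap tails at a random point."""
    if rng.random() < p_cross:
        point = rng.randrange(1, GENOME_BITS)
        return a[:point] + b[point:], b[:point] + a[point:]
    return a, b


def mutate(genome, rng, p_mut=0.01):
    """Flip each bit independently with probability p_mut (assumed bit flip)."""
    return "".join(
        ("1" if bit == "0" else "0") if rng.random() < p_mut else bit
        for bit in genome
    )


def next_generation_related(population, fitness, rng,
                            n_colonies=100, colony_size=10):
    """population: list of bit-string genomes; fitness: parallel list of scores."""
    ranked = sorted(zip(fitness, population), key=lambda p: p[0], reverse=True)
    pool = [genome for _, genome in ranked[: len(population) // 5]]  # best 20%
    colonies = []
    for _ in range(n_colonies):
        a, b = rng.choice(pool), rng.choice(pool)  # pair drawn with replacement
        child = rng.choice(crossover(a, b, rng))   # keep one, discard the other
        child = mutate(child, rng)
        colonies.append([child] * colony_size)     # ten identical clones
    return colonies


if __name__ == "__main__":
    rng = random.Random(0)
    pop = ["".join(rng.choice("01") for _ in range(GENOME_BITS))
           for _ in range(1000)]
    scores = [g.count("1") for g in pop]  # stand-in fitness for the demo only
    new_colonies = next_generation_related(pop, scores, rng)
    print(len(new_colonies), len(new_colonies[0]))
```

The fitness used in the demo (counting 1 bits) is only a placeholder; in the experiment the score came from how the robots actually performed around the food and poison.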

Evolutionary Conditions for the Emergence of Communication in Robots (PDF)

Sooo freakin' cool, it's like a real-life Spore game. I can't wait till Milton Bradley makes a boxed version so I can conduct my own experiments.

i find it really funny that people who can barely post up images on a forum are claiming that this is a hoax, because how could it possibly work?

www.aihorizon.com...

why dont you start off there trying to decipher how to program game bots to hunt you and find health, ammo packs, re-route themselves if taking fire from a direction.

seriously get out there and play some first person shooters. youll see pretty quick that these machines are pretty weak for what has already been around for a while.

the reason why i mentioned halo is because it was one of the first games to use a truly "intelligent" enemy

...for an epic battle (or if we're playing co-op) I pick Legendary. it took me about 60 hours of continuous play to finish the game on this difficulty, and that's after months of developing and playtesting the game. it's a very different kind of challenge to, say, myth's legendary difficulty. many of your enemies will be outflanking you and firing from multiple directions, and they'll retreat and build up their shields if you damage any individual too heavily. also, if you don't know what you're doing, or you get taken unaware, it's very possible for a single covenant elite warrior to nail you (and don't talk to me about the commanders)...

news.teamxbox.com...

"I wrote the artificial intelligence for Halo 1," Chris explains. "Basically, it is a very specialized type of intelligence. There was a custom piece of code for each character." In "Halo 2," Chris broadened the AI he built for the first game. The first thing to understand about the AI characters in Halo is this: "The AI lives in a simulated world." Most first person shooter games, such as Quake or Unreal, are built on a graphical engine. The player is essentially a stationary "camera," and the engine creates the sensation of moving through a world by rendering graphics that create that effect. Halo is different, Chris explains. "Halo is a simulation engine. The engine creates the world, then puts the player and the AI in it ... [The] characters and their code are isolated from the world." Each character is written to do certain things, but despite their individual roles, they all function in the same way. It breaks down like this:

* The character uses its AI "senses" to perceive the world -- to detect what's going on around it.
* The AI takes the raw information that it gets based on its perception and interprets the data.
* The AI turns that interpreted data into more processed information.
* The AI makes decisions about what its actions should be based on that information.
* Then the AI figures out how it can best perform those actions to achieve the desired result based on the physical state of the world around it.

electronics.howstuffworks.com...

seriously how can this be a hoax when its been duplicated so many times in video games that even little kids can understand

also, the AIs have been able to learn your playing style so it can get you from an angle you've not tried to defend yet. these things are getting smarter and harder to kill. and the only difference is the world they live in.

put that same thought process into a little robot with a shotgun and see what happens lol

[edit on 5/20/2009 by mahtoosacks]

reply to post by mikesingh

Your very own post and the attached article say: TRYING... which by definition means they haven't accomplished it.

From your post and your source:

"The SYMBRION project is TRYING to see if robots in swarms could evolve new behaviors, and become self-healing and self-protecting. Such robots could reprogram themselves without the need for direct supervision by human beings."

Read your source carefully, it also says

"We'd have to ASSUME, of course, that these machines were capable of producing other machines according to their specific needs of the moment. ...the robots would have perfectly adapted to life on the continents of the planet. "

reply to post by mahtoosacks

So you are saying your video game can rewrite its own programming? Where do I get one of those?

reply to post by Ian McLean

That is simply amazing. I wonder how far robot technology can go? AI possibly? It kind of shows that all actions and instincts are forms of code, adapted by learning, whether it is biological or virtual. Great post, star and flag.

As I see it, this is not really evolution at all. Evolution doesn't pass memories or character traits on to its offspring. This is more like learning in a society where the survivors in these virtual worlds are forced to pass off their knowledge to others. Of course the teaching is actually done by the programmers when they download the combined knowledge into fresh brains. The brains though, are already programmed. Does that brain actually mutate? Probably not. You never get a new species, only interesting survival patterns.

reply to post by justsomeboreddude

"I shall not commit the fashionable stupidity of regarding everything I cannot explain as a fraud." - Carl Gustav Jung

ok ... where to start here... this is going to take way too long to catch you up on YEARS of gaming ai, and the eventual buildup to robotics with ai. ill take it slow so all you non-computer junkies (no living on ats doesnt count) can soak this up. ill run down the basics.

hit this up first cus i dont think you even went to the site i posted and tried to figure it out on your own. prolly too much of a stretch anyways....

first off. the game is hard-coded to have certain tasks that it can do. like run walk shoot duck dodge jump activate buttons and all the likes. Kinda like you were programmed with talking, walking, hitting, pooping, farting.

second they make a series of algorithms that take these tasks and place a priority on them. this priority is the "deciding" factor as to what the machine will do.

see a friend = wave

see an enemy = shoot

see enemy shoot at me = jump and run while shooting

have to cross a blocked path = find another path

a tally of all these are kept when you play them.

do you charge and shoot every time with the biggest weapon possible and are extremely deadly? well it will probably run when it sees you and try to hide.

do you really suck at what you do and miss if you even fire off a shot? well it will probably run up and cap you in the face.

it stores this information and uses the history to determine what the best case scenario for its survival is.
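Roughly, the priority rules plus the tally described above could look like the Python sketch below. This is a toy illustration and not code from Halo or any other game; the rule names, the `Bot` class, and the accuracy thresholds are all invented for the example.

```python
from collections import Counter

# Hypothetical stimulus -> action rules, highest priority first.
RULES = [
    ("enemy_shooting_at_me", "jump_and_run_while_shooting"),
    ("enemy_visible", "shoot"),
    ("path_blocked", "find_another_path"),
    ("friend_visible", "wave"),
]


class Bot:
    def __init__(self):
        self.history = Counter()  # running tally of how the player fights

    def observe_player(self, shots_fired, shots_hit):
        self.history["fired"] += shots_fired
        self.history["hit"] += shots_hit

    def adjust_attack(self):
        """Use the tally to change the default 'shoot' response."""
        fired = self.history["fired"]
        if fired == 0:
            return "shoot"              # nothing known about the player yet
        accuracy = self.history["hit"] / fired
        if accuracy >= 0.5:             # arbitrary threshold: deadly player
            return "run_and_hide"
        if accuracy <= 0.1:             # arbitrary threshold: player misses a lot
            return "charge"
        return "shoot"

    def decide(self, stimuli):
        """Return the action of the first (highest priority) matching rule."""
        for stimulus, action in RULES:
            if stimulus in stimuli:
                return self.adjust_attack() if action == "shoot" else action
        return "idle"


if __name__ == "__main__":
    bot = Bot()
    print(bot.decide({"enemy_visible"}))
    bot.observe_player(shots_fired=10, shots_hit=9)
    print(bot.decide({"enemy_visible"}))
```

The rules themselves stay fixed here; only the stored tally changes which branch gets taken, which is worth keeping in mind for the "is it really learning" argument earlier in the thread.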

games that started out all had "fake" ai. they were straight programmed to attack when a switch was hit when you walked up. they didnt care what tactics you used, they would run straight at you firing. think original doom, quake, hexen.

as they got better, paths were introduced to have the bots (what you call a fake game character with ai, instead of a real player aka robot) run a course. this bot could hold ground, attack, or retreat on this path. quake3 unreal tournament, most fps games around 2000.

when halo came out, they introduced an ai that actually had a sense of the environment to them. they still had objectives such as patrol, defend, attack, retreat, but they did it within an area rather than locked on a path like a train. these guys are smarter than anything else. it seems more real. if you are blasting them bad, they will run back and call reinforcements or sound an alarm first before trying to defend till the end.

now we are to the robotic ai systems....

these guys build on the previous systems of ai while taking a very huge hit at the same time. these robots arent running a path in a world tailored just to them. they are in our world. they need real "eyes" "ears" "touch" "smell" to work here. and the routes arent placed out for them.

once they determine kind of where they are (ie they continue to live cus they didnt run off a cliff) they start to develop the traits they need to survive. food spots recharge battery so they try to find these first. poison spots drain battery, so they learn to run away from these if they can.

as all of this is happening they start to "realize" not everyone can survive, and in order to do this, it has to be better than the others (sound familiar?)

after it has all of its abilities on lock, it will contemplate ways to surpass the others. one way is to be faster to the spots, another is to trick or lie to the others and make them think the poison is food (which truly shows that robots are evil and should never be trusted).

so there we have the tour around the world in too much time. my hands hurt.

id love to hear your rebuttal to this, as it is becoming rather amusing to watch you call what i have been playing with for over 10 years easy, a GIANT HOAX lol

as games

reply to post by theyreadmymind

those are much more advanced ai traits that come with millions of generations. give those robots enough time and better sensors and i bet you will see them take us over.

once again.... never trust robots.

Originally posted by mahtoosacks

reply to post by justsomeboreddude

...

it stores this information and uses the history to determine what the best case scenario for its survival is.

...

now we are to the robotic ai systems....

these guys build on the previous systems of ai while taking a very huge hit at the same time. these robots aren't running a path in a world tailored just to them. they are in our world. they need real "eyes", "ears", "touch", and "smell" to work here, and the routes aren't laid out for them.

once they determine roughly where they are (ie they continue to live because they didn't run off a cliff) they start to develop the traits they need to survive. food spots recharge the battery, so they try to find these first. poison spots drain the battery, so they learn to run away from these if they can.

as all of this is happening they start to "realize" that not everyone can survive, and that in order to survive, each one has to be better than the others (sound familiar?)

after it has all of its abilities on lock, it will contemplate ways to surpass the others. one way is to be faster to the spots, another is to trick or lie to the others and make them think the poison is food (which truly shows that robots are evil and should never be trusted).

so there we have the tour around the world in too much time. my hands hurt.

i'd love to hear your rebuttal to this, as it is becoming rather amusing to watch you call what i have been playing with for over 10 years easy, a GIANT HOAX lol

as games

This doesn't explain the "angelic" robots blocking the poison so others can't consume it. I'd like to hear your thoughts on that. These "angelic" robots won't survive to pass their thoughts on to offspring, and they didn't get these ideas from "angelic" ancestors. So where did the idea come from?

reply to post by badmedia

your so-called intelligence wouldn't be here without successful attempts at passing on info.

your free will is only free within the confines of your abilities.

your consciousness is a collection of senses all wired together in your cpu.

why are you so arrogant?

reply to post by theyreadmymind

empathy is a bummer i know, but HEY that's why sociopaths are called an evolutionary leap.

and ever hear of "nice guys finish last"?

i dunno, maybe there's a dummy in every crowd?

maybe a recessive trait was programmed in to take advantage of this "motherly" attribute.

perhaps the robot knew that in order to better its species that it would need to ensure survival of the smarter faster better robot.

[edit on 5/20/2009 by mahtoosacks]
