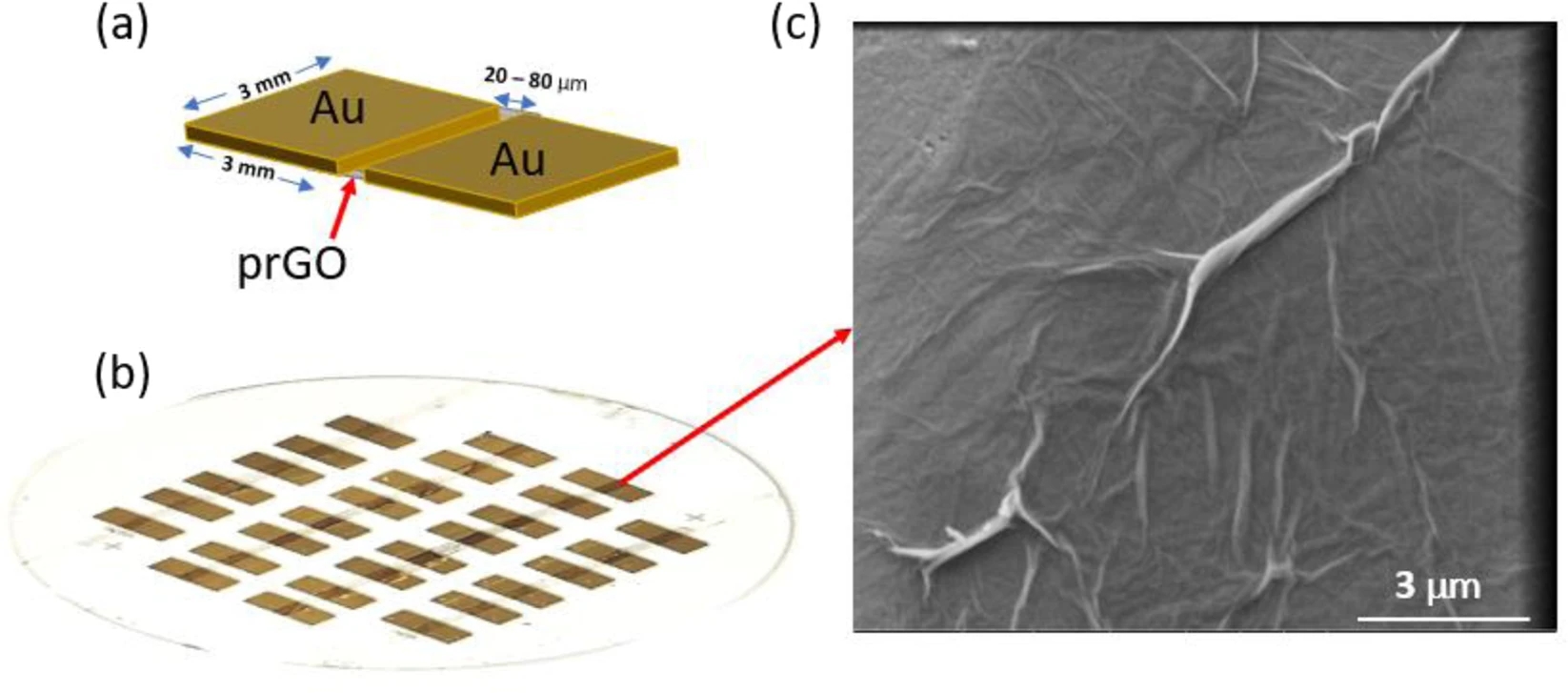

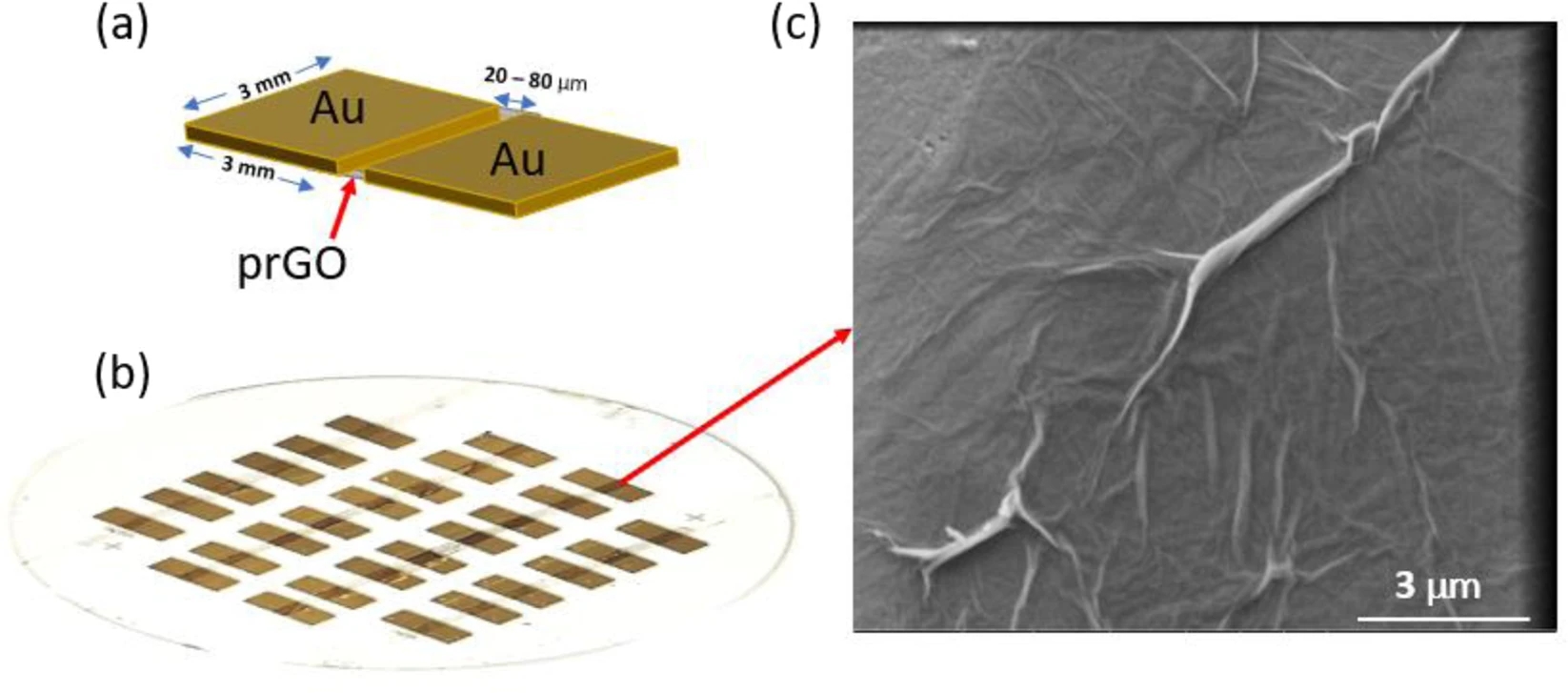

Neuromorphic graphene-based AI chips are at the cutting edge of commercially available computing devices containing bio-mimicking neurons. They operate far faster than the biological neurons they are patterned upon. In human neurons, a signal travels at about 250 miles per hour. In graphene-based bio-mimicking neurons, the signal travels at a sizable fraction of the speed of light. These new graphene neurons have about 4 times the packing density of neurons in the human brain. Neurons in the human brain have a spiking rate of about 2 Hz, while the bio-mimicking neurons have a spiking rate of about 65 MHz. In the image below, (c) shows a graphene neuron under a scanning electron microscope.

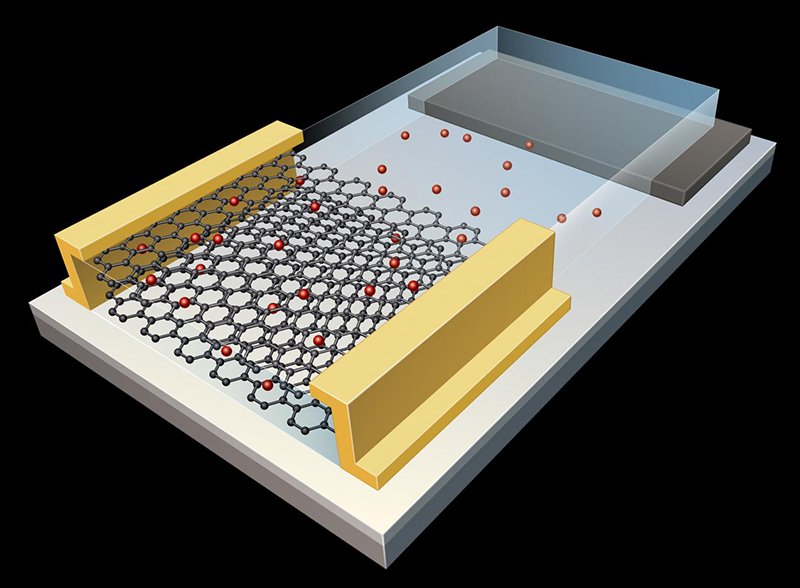

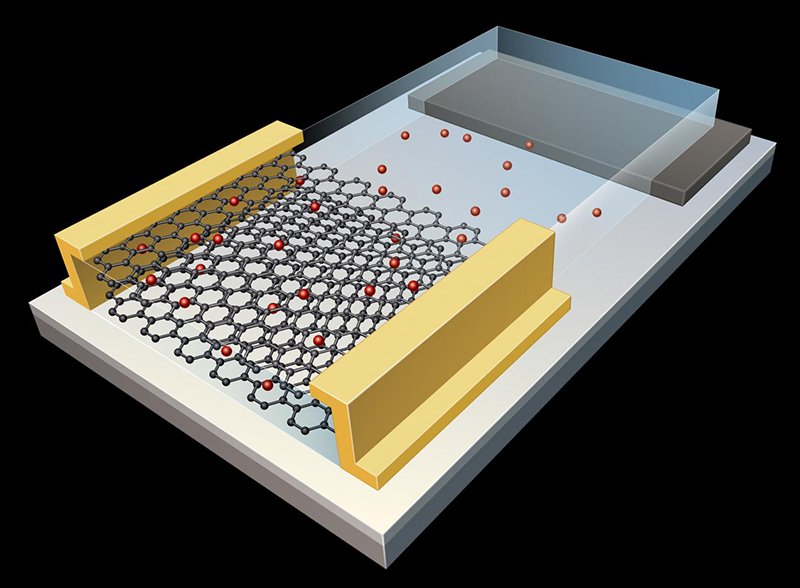

If it looks kind of familiar, that is because it is based on human neurons. On the left side of the image, (a) shows the layout of a single neuron in the cluster (b). The next image shows a closer view of a single layer of the neuromorphic chip.

My company is very small, but the cost of these chips has come down to the point where we are working on applications and devices using them for image and signal processing. They cannot be benchmarked next to CMOS-type neuron chips like those currently produced by Intel, even their SHAVE core devices. For one thing, these graphene-based neuromorphic AI cores do not have the von Neumann bottleneck that CMOS-based architectures do.
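To put the figures quoted in the opening post in perspective, here is a rough back-of-the-envelope sketch. It only takes the post's own numbers (about 2 Hz and 65 MHz spiking, about 250 mph signal speed) at face value; the function and constant names are made up for illustration and nothing here is measured or taken from the chip vendor.

```python
# Figures quoted in the opening post; treated as given, not measured.
BIO_SPIKE_HZ = 2.0      # approximate human neuron spiking rate
CHIP_SPIKE_HZ = 65e6    # approximate bio-mimicking neuron spiking rate
BIO_SIGNAL_MPH = 250.0  # approximate signal speed in human neurons

METERS_PER_MILE = 1609.344          # exact by definition
SPEED_OF_LIGHT_MPS = 299_792_458.0  # exact by definition


def mph_to_mps(mph: float) -> float:
    """Convert miles per hour to meters per second."""
    return mph * METERS_PER_MILE / 3600.0


def spiking_ratio(chip_hz: float, bio_hz: float) -> float:
    """How many times faster the chip neuron spikes than the biological one."""
    return chip_hz / bio_hz


if __name__ == "__main__":
    ratio = spiking_ratio(CHIP_SPIKE_HZ, BIO_SPIKE_HZ)
    bio_mps = mph_to_mps(BIO_SIGNAL_MPH)
    print(f"Spiking rate ratio: {ratio:,.0f}x")
    print(f"Biological signal speed: {bio_mps:.2f} m/s")
    print(f"Fraction of light speed: {bio_mps / SPEED_OF_LIGHT_MPS:.2e}")
```

The post does not say what fraction of light speed the graphene neurons reach, so the sketch only shows how small the biological figure is by comparison.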


Still not AI

And, as long as a program has to exist for anything to function, it never will be.


a reply to: machineintelligence

Damn, I wish I was born a few decades later so I could utilize the cybernetics and advanced prosthetics.


a reply to: Gothmog

Artificial Intelligence is defined as a simulation of intelligence. By that definition, we will have AI. Computers already augment human intelligence. As technology continues to improve that augmentation will also improve. The systems my company is working on, for instance, can do things no human can do. As an augmentation of human learning, memory, image, and signal processing and event or object recognition it becomes a very valuable tool for a great many technology sectors.

For example, your smartphone has more data, signal, and image processing capability than a room full of machines from about 30 years ago. The technology is changing, improving, and expanding now so that CMOS based neurons from 10 years ago that would fill an entire rack-mounted server could barely perform the same tasks as a 4 layer graphene-based neuromorphic device that will fit on a USB stick today.


a reply to: machineintelligence

Are these general-purpose CPUs or more like ASICs, specific-use chips?

And do you have a press release or something? Cuz this is way cool.


a reply to: machineintelligence

Some technologies filter down to the public but others never will.

Back in the '70s the oil industry was raping the world for money. The oil crisis drove countries to the brink and allowed the industry to inflate the price of its product to ridiculous levels. Indeed, it got so bad that in the 1970s the US even considered building a fleet of space planes to construct solar power stations that would beam energy back down to the US, enough energy that it would have solved the crisis immediately and removed the need for oil. Of course it never came to pass, since if you threaten the very powerful elite's source of control and power then you will either disappear or be made out to be a fool.

www.pinterest.co.uk...

It is a similar story with Salter's Duck, a wave generator that, had it been implemented by the mid-1980s, would have completely freed Britain from the need for oil, gas or coal. Once again the vested interests worked to prevent it ever being implemented.

en.wikipedia.org...

But in the consumer market, on a more humble scale, there are also many suppressed technologies. Take the holographic storage medium, a little like a three-dimensional laser disc. This was going to revolutionise personal data storage before hard drives were even a thing. But because it had the potential to hold such huge amounts of data AND be very cheap once in production, and despite millions being poured into its development by the likes of Grundig etc., it simply never saw the light of day. It would have rendered many other storage technologies obsolete before they had even hit the shops, so that is another one that is being sat on somewhere.

And likewise you will never see a TRUE quantum processing home PC. It would have the potential to be too powerful and put an end to the upgrade path that makes people buy a new computer every two or three years or so (or in my case every five to ten).

Vested interests have held back many technologies, or by today our world may have looked a lot more like something from Star Wars than it does (well, maybe not that advanced, but you catch my meaning).


a reply to: machineintelligence

Can they run Crysis Remastered with all the bells and whistles on?

On a more serious note, this type of technology is always of interest.


a reply to: andy06shake

Apparently ENBs are being developed to take advantage of ray tracing. I would not try using it, of course, unless you have one of those fancy shmancy RTX cards, because even though they have now enabled it at driver level on GTX cards it drags their performance right down.

But you should be able to get an ENB (kind of like a ReShade but better, though that really is a matter of taste) for Crysis that will allow you to change the feel of the whole game, at least graphically.

And yes, I too am hoping to see this particular technology eventually reach us humble consumers, though I would not hold my breath for it.

In the meantime, even if you don't have RTX, if your graphics card and rig are still pretty decent then there may be something similar to this early attempt at simulating ray tracing without actually ray tracing anything. This was made for Fallout 4, but look at that lighting, and all thanks to an ENB.


edit on 21-7-2020 by LABTECH767 because: (no reason given)

a reply to: andy06shake

Keep an eye on the ENB page for whatever games you play and sooner or later you will definitely start to see REAL ray tracing ENBs, even for older games in which it was of course not a feature.

enbdev.com...

I am still chugging along with an old i5, 8 GB and an old 1050 Ti, not very good by today's standards, but for me it still just about does it. Still, I am currently looking at buying an RTX rig with at least 16 GB and an RTX card, probably either a 2060 or 2070 Super since they seem to hit the sweet spot. And yes, I know it would be cheaper to build my own, but technology has moved on since I used to self-build years ago, and I cannot really be bothered with all that BIOS tweaking and installing, though you know at one time I used to love doing that kind of stuff.

If I was going to self-build, though, I would probably go down the Ryzen route, since AMD seems to be kicking Intel's butt at the moment and Intel are doing some pretty damned stupid things to their consumers.


edit on 21-7-2020 by LABTECH767 because: (no reason given)

a reply to: grey580

This is a link to the web site of the company in South Korea that invented them.

nepes AI

They have since sold the technology to General Vision Inc. in Petaluma, CA. They have lots of papers on the technology on their respective sites.

It has received very little press or fanfare in the US because very big companies hold contracts with the big tech companies, as well as defense contracts, that shield them from this disruptive technology.

It also bears noting that these chips do not lose efficiency all the way up to 158 °F, while CMOS loses efficiency at 99 °F.
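For readers who think in Celsius, the two temperature thresholds quoted above can be converted with the standard formula. This is only a small sketch; the function name is hypothetical and the thresholds themselves are the post's claims, not independently verified figures.

```python
def fahrenheit_to_celsius(deg_f: float) -> float:
    """Standard conversion: C = (F - 32) * 5 / 9."""
    return (deg_f - 32.0) * 5.0 / 9.0


# Thresholds as quoted in the post (claimed, not verified here).
CHIP_LIMIT_F = 158.0
CMOS_LIMIT_F = 99.0

if __name__ == "__main__":
    for label, deg_f in (("graphene chip", CHIP_LIMIT_F), ("CMOS", CMOS_LIMIT_F)):
        print(f"{label}: {deg_f:.0f} F = {fahrenheit_to_celsius(deg_f):.1f} C")
```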


edit on 7/21/2020 by machineintelligence because: added content

a reply to: LABTECH767

"Control" uses the new ray tracing to decent effect on the RTX cards, and "Battlefield" too if memory serves.

I've got a Ryzen 3600X with the RTX card and 32 GB RAM.

Gave the boy my old GTX 1070 rig with an old i5 2500K (OC 4.8 GHz), still doing the job believe it or not.

Ryzen are great CPUs, bang-for-buck wise, and sound to build with, though 1st gen were a bit iffy depending on board and BIOS.

By the looks of it, the 4000 series is a bit wonky so far, given the lineup and the addition of an onboard GPU to certain CPUs in the series.


edit on 21-7-2020 by andy06shake because: (no reason given)

originally posted by: machineintelligence

a reply to: Gothmog

Artificial Intelligence is defined as a simulation of intelligence. By that definition, we will have AI. Computers already augment human intelligence. As technology continues to improve that augmentation will also improve. The systems my company is working on, for instance, can do things no human can do. As an augmentation of human learning, memory, image, and signal processing and event or object recognition it becomes a very valuable tool for a great many technology sectors.

For example, your smartphone has more data, signal, and image processing capability than a room full of machines from about 30 years ago. The technology is changing, improving, and expanding now so that CMOS based neurons from 10 years ago that would fill an entire rack-mounted server could barely perform the same tasks as a 4 layer graphene-based neuromorphic device that will fit on a USB stick today.

IMO a delineation should be made between AI in the classic sense, and the new scientific discipline labeled ML (machine learning).

Alan Turing had very brilliantly defined the threshold for Artificial Intelligence many years ago, and in my thinking that is still the bar to label a computational system as 'intelligent'.

What you're describing, 'augmentation of human learning', and advancements in processing and storage resources that enable it, to me is better termed 'Machine Learning'.

a reply to: SleeperHasAwakened

I agree with your premise of course thus I have the username here machineintelligence but AI is what the industry calls it so I use that when discussing it.


a reply to: machineintelligence

Thanks for this information. Could you please explain "spiking" as used in your article? Is this a MAX speed?

"Neurons in the human brain have a spiking of rate about 2Hz while the bio-mimicking neurons have a spiking rate of about 65MHz."


a reply to: machineintelligence

Great idea!!

The “what AI means” questions aside, the brain is wide open and will try to make sense of ‘any’ signal presented to it!!

So the audio portion of the brain will take a signal and turn it into an “audio” signal. Even if you were born without being able to hear, that section still makes “audio” signals for the rest of the brain to do with what it wants!! And each major area does the same. Basically, the brain is a signal interpreter! Add a graphene-assisted signal processor and the brain will adjust and use it like any signal it receives now!

From brain damage to hallucinogenic drugs to the absence of input (isolation tank), the brain can cope! Even a speed mismatch (our eyes shift back and forth to triangulate depth. You filter it out and ignore it. Until you try LSD, and then wood grain ‘moves and waves’ because you are paying attention to what you normally ignore! And that's all autonomous to you! Might help to explain when time “slows down” in an accident... hmmmn)

Your brain is a terrible thing not have to want to waste... strategery!!


originally posted by: machineintelligence

a reply to: SleeperHasAwakened

I agree with your premise of course thus I have the username here machineintelligence but AI is what the industry calls it so I use that when discussing it.

So I was thinking, haven't more companies investigated quantum processors? I'm more into software so I don't know what the hardware heavy hitters (Intel, AMD, NVIDIA) are doing, as far as research, or smaller operations for that matter.

This is still a very 'cutting edge', new discipline, but if/when this matures, the amount of bandwidth and processing power will dwarf even that which the neurons in the human brain can provide. The lithography (i.e. how small they can shrink the CPU circuitry) on many CPUs is like 7nm or so right now. That is the width of, say, a few dozen silicon atoms. With a quantum CPU we're talking about using sub-atomic particles such as photons to represent processing state, so that is much smaller than even 1nm.

Seems comparing CPU size and power to a neuron is kind of aiming low....

a reply to: SleeperHasAwakened

I am no research scientist, just an entrepreneur and an engineer, but I think the hard part of scaling quantum computers is that they require a very particular environment in order to operate. Things like Brownian motion of electrons from heat in the materials disable the qubit. Quantum tunneling of electrons in the structure is another issue. They cannot really be manufactured by lithography, I think. This makes them kind of expensive for a commercial application. I think the qubit cores right now are built using high-vacuum epitaxial deposition, with atomic force tweezers steering the materials into just the right place to build the particle trap used in the qubit. I could be way off, as I have not researched that technology for a while now. They might have moved on to other approaches which would allow for a less expensive build.


a reply to: montybd

The bus on this technology at present operates at 700 MHz, but that is to process, in this case, 4 layers of cores. The chip operates at a typical system clock of 35 MHz. If multiple chips are connected in parallel, the typical system clock is 18 MHz according to the company that builds them. If you are like me and have mostly researched CMOS-based neurons like Intel SHAVE cores, note that it is not possible to directly benchmark the two next to each other. I recommend going to their site and downloading all the manuals and papers they publish there to get a handle on how these neuromorphic neuron clusters work.

The NM500 chip we are using has 576 neurons per core and 2,304 neurons in total. The chip produces an inference in about 5 picoseconds. Using live video input, a trained module can resolve a model in about 1/100th of a second. It will resolve every object in one frame of a 1080p video stream, so it is faster than the 60 frames per second we are operating at.
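The timing claim above can be checked with simple arithmetic: at 60 frames per second each frame lasts about 16.7 ms, so a model that resolves in roughly 10 ms fits inside one frame. The sketch below uses only the numbers stated in the post; the names are hypothetical and it says nothing about how the chip itself is programmed.

```python
# Figures quoted in the post; treated as given.
FRAME_RATE_FPS = 60
INFERENCE_SECONDS = 1 / 100  # "about 1/100th of a second" per resolved model
NEURONS_PER_CORE = 576
CORE_LAYERS = 4              # "4 layers of cores"


def frame_budget_seconds(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    return 1.0 / fps


def fits_in_frame(inference_s: float, fps: float) -> bool:
    """True if one inference completes within a single frame period."""
    return inference_s <= frame_budget_seconds(fps)


if __name__ == "__main__":
    budget = frame_budget_seconds(FRAME_RATE_FPS)
    print(f"Frame budget at {FRAME_RATE_FPS} fps: {budget * 1000:.2f} ms")
    print(f"Inference time: {INFERENCE_SECONDS * 1000:.2f} ms")
    print(f"Keeps up with the stream: {fits_in_frame(INFERENCE_SECONDS, FRAME_RATE_FPS)}")
    print(f"Total neurons: {NEURONS_PER_CORE * CORE_LAYERS}")
```

By the same arithmetic, the quoted 10 ms figure would no longer keep up with a 120 fps stream, where the budget per frame drops to about 8.3 ms.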


edit on 7/21/2020 by machineintelligence because: added content
