Our Id Writ Large

The compelling title of this thread does not belong to me-- the words are a partial quote of Elon Musk’s from a September interview with Joe Rogan.

Here is the interview in full if you are interested in the read. It’s an absolutely fascinating subject (“Human Civilization and AI) which I wish I understood better. If any members here can add to the discussion, in layman’s terms please because I am computer illiterate, I really would be most appreciative.

And honestly, I’ve always had an unhealthy suspicion of technology and don’t know how much of that is due to my ignorance. I love Marie Curie’s quote

“Nothing in life is to be feared, it is only to be understood. Now is the time to understand more, so that we may fear less.”

I’m ready for some schooling. And I have also been reading plenty of interesting cases of new technology improving people’s quality of life greatly (the thread yesterday about a cafe that uses robots controlled by quadriplegic/paraplegic people one great example). But I’d love to hear what the membership has to say on this topic.

Link to transcript: www.organism.earth...

And here’s the full context of the quote I used for my title.

Musk goes on to explain that the online systems are designed to resonate with the human limbic system. For clarity, I’ll post the definition of limbic here to show exactly how this relates to A.I. and our id.

lim·bic sys·tem

/ˈlimbik ˌsistəm/

noun

a complex system of nerves and networks in the brain, involving several areas near the edge of the cortex concerned with instinct and mood. It controls the basic emotions (fear, pleasure, anger) and drives (hunger, sex, dominance, care of offspring).

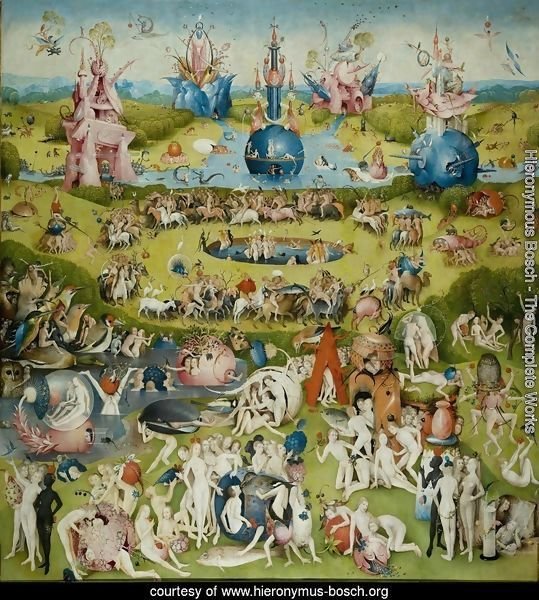

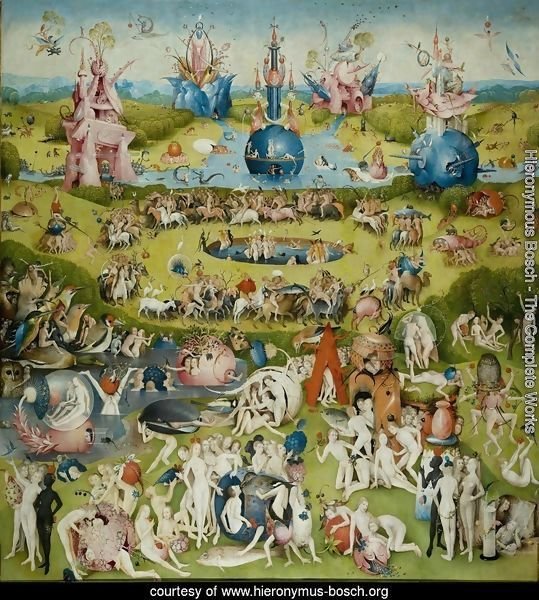

Bosch’s depiction of the garden of earthly delights and the horrors of hell-- both of which reside in the Id.

Musk raises an interesting point: We are all contributing to and informing the structure of the vast network which makes up AI. A large portion of what people are feeding google, instagram, liveleaks, etc involves material that represents our more base instincts.

Do you think this is anything to be concerned about? Any other insights to add to the discussion? I see Musk as a bit looney but I still am very interested in what he has to say. He reminds me of a kind of mad (but probably leaning more toward good than evil) scientist.

Thank you for reading and I hope to hear from you!

The compelling title of this thread does not belong to me; the words are part of a quote from Elon Musk’s September interview with Joe Rogan.

Here is the interview in full if you are interested in the read. It’s an absolutely fascinating subject (“Human Civilization and AI”) which I wish I understood better. If any members here can add to the discussion, in layman’s terms please because I am computer illiterate, I really would be most appreciative.

And honestly, I’ve always had an unhealthy suspicion of technology and don’t know how much of that is due to my ignorance. I love Marie Curie’s quote:

“Nothing in life is to be feared, it is only to be understood. Now is the time to understand more, so that we may fear less.”

I’m ready for some schooling. And I have also been reading plenty of interesting cases of new technology greatly improving people’s quality of life (the thread yesterday about a cafe that uses robots controlled by quadriplegic/paraplegic people being one great example). But I’d love to hear what the membership has to say on this topic.

Link to transcript: www.organism.earth...

And here’s the full context of the quote I used for my title.

Musk: Yes. I mean, I made those comments some years ago, but it feels like we are the biological bootloader for AI, effectively. We are building it. And then we’re building progressively greater intelligence. And the percentage of intelligence that is not human is increasing. And eventually, we will represent a very small percentage of intelligence.

But the AI is informed, strangely, by the human limbic system. It is in large part our id writ large.

Rogan: How so?

Musk: Well, you mentioned all those things; the sort of primal drives. Those’re all things that we like and hate and fear. They’re all there on the Internet. They’re a projection of our limbic system.

Musk goes on to explain that the online systems are designed to resonate with the human limbic system. For clarity, I’ll post the definition of limbic here to show exactly how this relates to A.I. and our id.

lim·bic sys·tem

/ˈlimbik ˌsistəm/

noun

a complex system of nerves and networks in the brain, involving several areas near the edge of the cortex concerned with instinct and mood. It controls the basic emotions (fear, pleasure, anger) and drives (hunger, sex, dominance, care of offspring).

Bosch’s depiction of the garden of earthly delights and the horrors of hell-- both of which reside in the Id.

Musk raises an interesting point: We are all contributing to and informing the structure of the vast network which makes up AI. A large portion of what people are feeding Google, Instagram, LiveLeak, etc. involves material that represents our more base instincts.

Do you think this is anything to be concerned about? Any other insights to add to the discussion? I see Musk as a bit loony but I am still very interested in what he has to say. He reminds me of a kind of mad (but probably leaning more toward good than evil) scientist.

Thank you for reading and I hope to hear from you!

edit on 4-1-2019 by zosimov because: (no reason given)

a reply to: zosimov

The thing with AI is that it's...artificial intelligence. It's not a real human. The experiences, emotions, oral traditions, fears, etc. don't have a quantitative measure.

Now extrapolate this over time, and you 'teach' this intelligence to learn. All on its own. Sooner or later, it will realize that the human is the only predator to its existence, then extermination.

Always enjoy your threads btw!

a reply to: JinMI

Thank you JinMI! I always enjoy reading your perspective/threads also. Cheers!

As to your point... very well taken. This is the very thing I wonder about, particularly how it relates to singularity, as well as how much more of a cyborg we'll be willing to become.

a reply to: zosimov

At this point, I think by the time we notice it's gone too far, it will be far too late.

Imparting anything with the ability to learn seems to be counterproductive if you can't be honest with the nature of things. The human condition, as it were, is a weakness. Something an artificial intelligence would seek to destroy.

I'm open to being wrong on this.

a reply to: zosimov

Hi my friend, very interesting topic.

The potential of AI is both incredible and terrifying. In many ways, AI is human nature's quest to find perfection.

But nothing in the universe is perfect, so where does that leave us? Probably somewhere within a conundrum.

I believe at the edge of perfection lies self-destruction, because perfection cannot exist without imperfection.

The point I'm trying to make? Not really sure.

But around and around we go...

How long do you think before we're living out The Terminator?

Have a nice weekend my friend!

a reply to: zosimov

There appears to be an evolutionary advantage to being able to visualize sexual activity as a means of always being ready to mate. Plus it's good for understanding how to trap hard to catch and kill animals.

There are two types of AI systems. There is "weak" artificial intelligence and there is "strong" AI:

Weak Artificial Intelligence

Strong Artificial Intelligence

When I was in college many years ago studying computer science, I spent many credits on artificial intelligence. My whole life, every few years, I hear someone claim weak AI will someday magically become strong AI. When I was in college, Marvin Minsky's The Society of Mind was all the rage. But after reading all the works of John Searle I was convinced his way of thinking was more correct.

Recently, John Searle gave a talk at Google about AI. It's funny to see how the engineers respond to his criticisms. They are a bunch of immature cry babies as he crushes them with his superior intellect.

John Searle presents an absolutely fascinating argument against strong AI.

I lost interest in AI because weak AI systems are so stupid. I became convinced the human mind is more like an analog TV receiver than a von Neumann-type computer. The human mind is more like yogurt. We do not synthesize creativity but we grow it through unintended consequences of closely related subjective associations.

I've also been persuaded by the thinking of Rupert Sheldrake. Here is Sheldrake's banned TED talk:

The problem with people who believe strong AI is possible is they assume philosophical materialism is an absolute truth. In fact, it's blasphemy to even suggest philosophical materialism is NOT an absolute truth! Most of the evidence suggests materialism is a dogma not supported by scientific evidence. Just google "quantum physics debunks materialism".

edit on 4-1-2019 by dfnj2015 because: (no reason given)

a reply to: zosimov

I appreciate your openness to the topic. I'm computer illiterate as well, and it would probably allay my reflexive fears if I knew a little bit about them. One of my chess buddies is an AI designer, so it would be easy for me to educate myself a little.

I was recently thinking that, in a way, Technology is a rejection of Nature. You mentioned the Japanese cafe staffed by paralytics. If Nature was the sole arbiter of life and death and health and mobility, it and countless other things wouldn't exist.

We've found ways to improve individuals' lives in unthinkable ways; it's reflexive of us to say "That's good." And on an individual basis, it is. But on the other side of the playground, we receive constant reminders of "over-population".

For the sake of argument: if overpopulation is unassailable fact, then we are creating our own problems. I'm far from saying "kill all the weaklings", but the cognitive dissonance created from the conflict of this and many more issues has to be resolved in a sane world.

a reply to: knowledgehunter0986

Hi Hunter, it's always nice to see you my friend!

Interesting ideas regarding perfection and the pursuit thereof.

Thank you for the thought-provoking addition to the topic.

(ETA: Have a great weekend!)

edit on 4-1-2019 by zosimov because: (no reason given)

originally posted by: DictionaryOfExcuses

a reply to: zosimov

I was recently thinking that, in a way, Technology is a rejection of Nature. You mentioned the Japanese cafe staffed by paralytics. If Nature was the sole arbiter of life and death and health and mobility, it and countless other things wouldn't exist.

Well the point you brought up is valid! There are so many new ethical questions arising that weren't relevant until the age of technology.

I often wonder if we'll keep our bond with nature. Seems as if we are slowly severing our ties.

a reply to: zosimov

IMO, Musk is an idiot but that is me. He said we should nuke Mars to warm it up. *Snoopy eyes*

Today's AI is not much further along than what I wanted to go to grad school for back in the late 1980s. I spent another few years studying the mind, cognitive science, and philosophy (which started me off on my literature adventure), and I stand by what I say: today's AI is not very much more advanced than what was theorized back then. It is a heck of a lot faster, with access to so much more information that it is actually great for single uses (searching all telescopes' data for asteroids, say), but nowhere close to "the boogey man coming to kill you."

Think. Our brain is analog. It is open. It changes its own configuration based on input (food, sex, drugs, rock-n-roll, ya know, the good stuff!), and will take any signal given to it and try to make sense out of it. Today's AI only does what it is told to do (even if it cheats and freaks out poor neo on his thread the other day).

If you are afraid about AI trying to recommend that you buy the latest Chuck Palahniuk graphic novel because you once purchased one of his novels, then yeah, be very afraid!

Feynman, father of the quantum computing idea, basically said that the world is quantum in nature, so there is no way in hell a digital computer is ever going to model it, make sense of it, become our masters... or something like that.

After being all excited about quantum computing, it looks like there is too much noise for anything useful to be built anytime soon. Still over 5 years out (or more). So I think you are safe and I can say these things (even if some here disagree).

Good question even if from a questionable source!

a reply to: zosimov

It's an issue I think of a lot because my paternal grandmother was a "native American" of a small, forgotten tribe in California.

It's one of the only things that gives me a concrete sense of my identity; I can look at pictures of my grandmother and great-grandparents and see traces of them in myself, while the remainder of my heritage is largely a mystery. (I'm suspicious of consumer DNA-analysis services, so it may stay that way.)

I've researched tribal history on and off and I admire the deep bond they had with animals, trees, mountains, and rivers; our world is constantly moving farther away from that and I have always wanted to get closer to it.

I think you will find this interview with Lex Fridman very very interesting indeed.

Research Scientist at Massachusetts Institute of Technology

edit on 4119 by Quadlink because: (no reason given)

a reply to: zosimov

Here is a link that explains a bit of my hesitation in the field of AI...

www.sciencedaily.com...

Enjoy the read!!
