
a reply to: andy06shake

There was a test recently that simulated a mouse brain for a few seconds, and it took racks and racks of servers. But I guess this could just be way more powerful than that one.

The appearance of consciousness and real consciousness are very different. Will it be able to feel? Or just give the right answers to the questions? Will it have dreams of electric sheep?


...

edit on 18-11-2018 by verschickter because: (no reason given)

Nobody but an idiot speaks about what his company has done, what they have developed, and what it does, over and over again, then says he can't prove it because of a non-disclosure agreement.

There are patents on it, which ARE published. THAT is how it is protected, and it provides official proof of who the inventor(s) are, and it becomes the property of the company the inventors work for.

Something which you claim is confidential, which you claim to be held to non-disclosure, is NEVER discussed in public. Not even 'hinted' at. Do you know why?

Because you are describing what it does and how it works, and that's considered disclosure. NDAs all state that the research, or the products being developed, are confidential information. That's why you must be an idiot if you think a company would ever allow you to babble on and on about it, or even 'hint' at it, repeatedly.

Anyway, the bottom line is that you have no proof of anything you claim here, so it's a dead duck.


Intelligence will be announced by a company, which is going to be a massive lie. People such as yourself, who are not programming it, will believe it is true. The programming is done secretly, so when they claim it did it 'all by itself, nobody programmed it', you will jump for joy, amazed at what you just witnessed.

Sorry to burst your bubble, but you had better understand that what's said and what's actually done in such an industry are two completely different things. Don't be so naive.


Btw, that's also why it's 'confidential' information, so nobody can ever see that it's all bull#.

NASA is the perfect example of all time.


Hmmm, not sure how I missed this...

The article linked gives precious little data on the exact way SpiNNaker is going to mimic neurons. That's the first hurdle. They call it a "supercomputer" which to me implies digital logic... if so, it's a lost cause. The brain is analog.

Looking at the photo, it looks like a bunch of racks. If that is SpiNNaker, it's not going to work. The brain is not compartmentalized like that.

The text describes each neural unit as having "moving parts." That is concerning to me as a neural unit need not have any moving parts... indeed, such would only interfere with proper operation. My design calls for solid-state units.

A million 'neural' processors also seems like a small number. If it did work with that many, it might have the intelligence of the amoeba people are arguing about.

Let me see if I can explain this without getting too technical or giving too much improper disclosure. This concept, if it is what it sounds like, is something I started working on many many years ago. As it turns out, according to my calculations, it is indeed possible to create a type of intelligence artificially using the blueprint of the brain. The trick is in getting artificial neurons to react in the same manner as organic neurons.

The neurons themselves are not where memory or thought is stored. The neurons are simple analog processors for data. The storage is the interlinking between the neurons. In an organism, this happens by dendrites moving toward axons and making contact, strengthening or loosening the contact depending on the signals received. While that mechanism appears to be difficult if not impossible to recreate artificially, similar mechanisms may be employed which could perform an equivalent function.
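To make the "storage is the interlinking" idea concrete, here is a minimal sketch. It assumes a simple Hebbian-style rule (a connection strengthens when both ends are active together and slowly loosens otherwise), which is a standard textbook simplification and not the design described in this post. The function name `hebbian_update` and the `rate` and `decay` values are made up for illustration.

```python
def hebbian_update(weights, pre, post, rate=0.1, decay=0.01):
    """Return new connection strengths after one step.

    weights[i][j] is the strength of the link from input unit i to output
    unit j. It grows when pre[i] and post[j] are active together and
    decays slightly on every step (hypothetical rule and constants).
    """
    return [
        [w + rate * pre[i] * post[j] - decay * w for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


# Two input units, two output units, all links start at zero.
weights = [[0.0, 0.0], [0.0, 0.0]]

# Repeatedly activate input 0 together with output 0 only.
for _ in range(10):
    weights = hebbian_update(weights, pre=[1.0, 0.0], post=[1.0, 0.0])
```

After the loop, only the link between the two co-active units has grown; nothing inside the units themselves changed, so whatever was "learned" lives entirely in the `weights` table.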

There are two methods to implement this artificial intelligence system: the most accurate would be using discrete neural assemblies, but that would require numbers of them into the billions. My design could hardly have them called 'processors' in the true sense of the word, because they do not process data as we normally think of processing data. At the inputs and outputs, all data is analog, not bits or bytes. Internally, since a storage system is required for analog data, it might be useful to include a digital memory and AD/DA converters, but that would certainly increase an already astronomical cost. That cost is exactly why I have not attempted actual construction.

Now, it might be possible to use a digital computer to simulate the artificial neurons. I have considered that, but the number of threads and memory requirements are both, again, astronomical. The resulting program should react similarly to the actual device, allowing for the analog-digital uncertainty that is inherent in such conversions. That may be their goal, but it forgets one important thing: the sensors.
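For a rough picture of what simulating one analog neuron on a digital computer could look like, here is a sketch that assumes a leaky integrate-and-fire model stepped in discrete time. That model is a common simplification chosen for illustration; nothing here claims to be how SpiNNaker or the design above works, and `simulate_lif`, `leak`, and `threshold` are hypothetical names and values.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire unit over a list of inputs.

    Each step the stored potential leaks a little, the new analog input is
    added, and the unit emits a spike (1) and resets once the potential
    reaches the threshold. Returns the list of 0/1 spikes.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * leak + current  # leak, then integrate
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


# Three inputs in a row push the unit over the threshold on the third step.
spikes = simulate_lif([0.4, 0.4, 0.4, 0.0, 0.0, 0.4])
```

Even this toy version needs one stored number and one update per neuron per time step, which hints at why the thread and memory counts grow so quickly with billions of units.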

An organic brain is tied inextricably to a massive number of sensors: touch, temperature, taste, audio, vision, pressure, and on and on and on. I saw no sensors in the photo (still assuming the photo was indeed of SpiNNaker). Without the sensors, SpiNNaker is an isolated brain with no way to read or write to/from the world of reality. It will do nothing; it can do nothing, because there is no input to produce an output. Input to a neural computer would not be in the form of a keyboard or mouse... it will need organic-based sensors to support an organic-based brain.

I mentioned the racks above... that will not work if this is a version of my design. All the processors would need to be tied together, not linked through communication networks as a rack arrangement would require, or the communication network would have to be extremely fast and have an unbelievable amount of bandwidth. Again, I am basing this on the photo, which may or may not be the actual machine.

Even with a million neurons and the astronomical number of potential interconnections, that million neurons pales alongside the number which would be required to produce enough intelligence to study. It might... might... have the intelligence of an amoeba.

And finally, I want to address the idea that it will somehow be able to think, imagine, and reason. No, it won't. The artificial intelligence I have been working on only explains the Pavlovian intelligence... as in, the intelligence to learn from one's surroundings and act accordingly based on past experiences. There is another aspect to intelligence: the aspect that allows us to think, imagine, and reason. I have seen no indication in my studies that this form of intelligence is even capable of being housed in an organic brain. My best guess at this point is that it exists outside the brain and somehow communicates with the brain. My work is limited to the Pavlovian intelligence.

This is quite interesting, to be sure... I really want to see what the scientists discover, but to understand their discoveries I would need much more information on how they created it. Perhaps when I get time, I will look up more information on this, but for now I think they have invented a toy that might at best indicate how to build a better unit next time. This is at best just a small step, but it could be a step in the right direction.

Skynet has not arisen. Everyone can put down their flaming torches and pitchforks.

TheRedneck


That's why proof is so important to have, to see, to show, in every case. It is truth.

When 'robot-men' are claimed to have intelligence, it will not be proven, it will be 'confidential, and highly sensitive' information. The release of information on a technology like this will never be shown to the world, because 'terrorists' will use it, blah, blah....

It will also never be proven to exist in any other way, either. (See NASA)


a reply to: TheRedneck

Adapting to conditions as you describe it is indirect processing, which is not intelligence at all, only the appearance of individual thoughts and responses. I do agree with you on there being no actual thought, or reasoning, or dreaming, though.

What you are referring to as Pavlovian intelligence is motor response. That is not the same as indirect processing, which allows a machine to either approach or avoid any pre-selected stimulus. This is not intelligence.

originally posted by: turbonium1

That's why proof is so important to have, to see, to show, in every case. It is truth.

Okay, let's reset the conversation we had.

My whole point was, you can't be sure what's happening behind closed doors. As much as I would like to give you anything, you will have to wait for an official report.

I admit that I could have been more friendly to you. I understand that you have a problem with hyping things that are not portrayed like they really are. I'm with you on that.

But this is exactly the reason why I am so into discussing this. The terms used, starting from intelligence, through artificial intelligence and all the others, have to be taken in context.

I dislike overhyping AI as much as you do, and the thought that feelings or sentience are an intrinsic feature of an AI (this time, I am referring to human intelligence) is just as laughable to me as it is to you, for example.

But you give me the impression that you use those terms too loosely. We ended up arguing about semantics and lost the overview.

cheers

If you program a machine to respond to specific stimuli, and it has other parameters which allow the machine to make random choices, which are not really random but appear to be to us, then it will appear that the machine 'chooses' what to do without any programming. It is an illusion of intelligence, nothing more.
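A small sketch of the "random but not really random" point, using Python's standard `random` module. The action names and the helper `choose_actions` are made up for illustration; the only claim is that a seeded pseudo-random generator replays exactly the same "choices".

```python
import random


def choose_actions(seed, n=5):
    """Pick n actions that look random but are fully fixed by the seed."""
    rng = random.Random(seed)  # private generator with a known seed
    return [rng.choice(["approach", "avoid", "wait"]) for _ in range(n)]


# Two runs with the same seed make exactly the same "decisions".
first = choose_actions(seed=42)
second = choose_actions(seed=42)
```

To an observer who does not know the seed, `first` looks like free choice; comparing it with `second` shows it is fully determined.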

In the 'ant-species' case, there would be no reason for any of them to 'take precautions' against a hazard, since this requires reasoning - an ability to know what to do, in order to avoid harm, for its survival. Machines are not alive, they are incapable of actions for their survival, because they are not alive to begin with.


a reply to: verschickter

No problem, let's call it a miscommunication issue, which went overboard, and move on.

Anyway, it seems we agree, for the most part, which is all good.

Sorry for my responses, too.

What I'm trying to get across, in the end, is that this technology is very dangerous to us. I see it being used against us, to allow them to unleash great horrors on the world, with no repercussions, because the world will lie in complete ignorance of the reality. As we've seen before, on 9/11, and other events.

All I can hope for is that people start to wake up before it's too late.

Cheers.

edit on 18-11-2018 by turbonium1 because: (no reason given)

a reply to: turbonium1

Let's use another example. Suppose you assign a task to a robot that is able to perceive its environment, and that task, among other priorities, is plotting a route that is the fastest, the shortest, or the most reliable.

That robot would do those calculations from metadata it gathered over time. If it has plotted a route that was safe 100% of the time, for example, and suddenly something changes, it will compare that data and be able to conclude that something in its environment changed.
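One way the "compare against gathered metadata" step could look, as a rough sketch only. It assumes the robot logs a traversal time per run and flags a new run that deviates strongly from its history; the function `environment_changed`, the `tolerance` value, and the sample numbers are all invented for illustration.

```python
from statistics import mean, pstdev


def environment_changed(history, observation, tolerance=3.0):
    """Flag an observation that deviates strongly from past runs.

    history: traversal times from earlier runs on the same route.
    observation: the time measured on the current run.
    """
    baseline = mean(history)
    spread = pstdev(history) or 1e-9  # avoid a zero threshold on flat data
    return abs(observation - baseline) > tolerance * spread


# Seconds per past run on the "100% safe" route (made-up data).
history = [60.2, 59.8, 60.1, 60.0, 59.9]

normal_run = environment_changed(history, 60.1)
odd_run = environment_changed(history, 75.0)
```

The robot does not need to understand why the run took longer; it only needs to notice that the new data no longer fits the old pattern.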

"What if" you constructed that robot's software in a way that it can do the above and reflect (compare changes over a timescale)? What if (and this isn't a new idea in the field) you construct the framework from several false AIs and other similar modules, like expert systems, layered just like a human subconscious? If you are unclear what I mean by subconscious, it's a routine running in your head that computes a new sensory image roughly four times a second.

This is the subconscious level that we humans are not aware of. It's one of the layers that so-called spontaneous thoughts surface from. Those thought patterns are influenced by many things you might know about, but you are not aware of them as they happen.

Now suppose that robot encounters a pattern that conflicts with the internal logic established by its previous data collection (the 100% safe route, remember), and suddenly there is a change, like humans waiting at a certain point on the route, picking the robot up, and flushing all its memory.

Now consider that there are many robots doing this, and the computation isn't done on the local microcontrollers and CPUs but spread over many instances on one machine.

What if an overlaying watchdog (a self-learning algorithm), itself a false AI, noticed that spot within its scope and redirected the rest of the robots to another route? A passive countermeasure.
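The watchdog idea can be sketched as a shared incident counter that steers the fleet away from a trouble spot. This is a deliberately simple rule-based stand-in, not a self-learning algorithm; the class `Watchdog`, the waypoint names, and the `max_incidents` threshold are assumptions made for illustration.

```python
from collections import Counter


class Watchdog:
    """Collects incident reports from all robots and picks a safe route."""

    def __init__(self, routes, max_incidents=2):
        self.routes = routes  # route name -> list of waypoints
        self.max_incidents = max_incidents
        self.incidents = Counter()  # waypoint -> number of reported incidents

    def report(self, waypoint):
        """Called when a robot goes missing or fails at a waypoint."""
        self.incidents[waypoint] += 1

    def pick_route(self):
        """Return the first route with no waypoint over the incident limit."""
        for name, waypoints in self.routes.items():
            if all(self.incidents[w] < self.max_incidents for w in waypoints):
                return name
        return None  # every known route is currently flagged


watchdog = Watchdog({"main": ["A", "B", "C"], "detour": ["A", "D", "C"]})
before = watchdog.pick_route()

# Two robots vanish at waypoint B.
watchdog.report("B")
watchdog.report("B")
after = watchdog.pick_route()
```

Here `before` is the main route and `after` is the detour: the remaining robots avoid waypoint B without any of them having experienced the problem themselves.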

What if the single robots have learned via trial and error how to get back on their feet if toppled by an external force or by a repeating pattern in the route (every robot trips over a stair that was not there before)?

All it takes for active countermeasures is to activate those "random" movements of all axes in the hope that a situation like that can be mastered. I would call it "going bonkers after failure until a pattern emerges".

I hope all the what-ifs do not prevent you from piecing the puzzle together. What I wrote isn't anything secret; it's just straightforward use of the potential we currently have with public frameworks.

Don't forget, while a program is (if reduced) a single loop, many single loops in parallel can work together on a single machine, regardless of how it's done, via threading (time-slice model) or true parallelism via redundant hardware modules. Now consider this "matryoshka" AI being part of another, overlaid AI that also has a neighbor it works in tandem with or against, depending on what you want to achieve.

Now consider that an AI isn't "smart" to begin with; it has to be trained and it has to learn, just like humans. Give it the ability to read its own source code and make adjustments to it (at the start, 99.999999% of them, you get the idea, will fail).

Then you take the second generation, which also inherits the metadata (learned stuff) from the first one, and let them compete. You do this with more than one main instance, and you also let another expert system compare the results to previous generations (a self-reflecting feedback loop). What you end up with is self-learning algorithms taken to a new level.

The pattern (if there is one) that emerges from the changes to the previous generations can be a key point or turning point in terms of how the self-optimizing part that handles the source code acts. It can lead to new understandings and relationships we are not able to grasp as humans.
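The generations-that-inherit-and-compete loop resembles a basic evolutionary algorithm, sketched below. Note the swap: instead of rewriting source code, each candidate here is a single number, and "inheritance" is just keeping the better half and mutating copies of it. The function `evolve`, the toy fitness function, and all constants are assumptions for illustration, not the framework described in this post.

```python
import random


def evolve(fitness, generations=30, population=20, seed=0):
    """Run a tiny evolutionary loop and track the best score per generation."""
    rng = random.Random(seed)
    pool = [rng.uniform(-10, 10) for _ in range(population)]
    best_per_generation = []
    for _ in range(generations):
        # Competition: rank the current generation by fitness.
        pool.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(pool[0]))
        # Inheritance: the better half survives unchanged...
        survivors = pool[: population // 2]
        # ...and each survivor produces one slightly mutated child.
        children = [s + rng.gauss(0, 0.5) for s in survivors]
        pool = survivors + children
    return max(pool, key=fitness), best_per_generation


# Toy goal: find the number closest to 3.
best, history = evolve(lambda x: -(x - 3) ** 2)
```

The `history` list plays the role of the comparison against previous generations: because the best candidates are carried over, the recorded best score never gets worse from one generation to the next.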

Of course this isn't "life" or being "sentient", let alone having "feelings".

What is all the above if not some form of intelligence that is able to draw conclusions?

The four-times-a-second subconscious part is important, too. Maybe you want to read up on this main loop that we run in our heads 24/7.

All the above has nothing to do with my former work, but it's how my private, personal neural network and overall framework handles it.

Now add a linguistic parser to it and it will be able to generate huge datasets (metadata) and draw conclusions, on a basic level at first, just like a toddler that learns not to touch a hot plate or it will get hurt.

Later the toddler learns that fire is often related to something being hot.

Later still, the toddler will learn that this is not always the case and reflect back on what it learned.

All the above is technically possible; you just have to do the arduous work of stringing a capable framework together and then train, train, train, just like a parent or teacher does. While I'm saying this, I have no sentiments for a tool.

It (AI in general, not my lousy framework) will kill us all in the end, either because of a bug, an accident, or something we did not think of. My opinion is that even weak AI in the context above has to be regulated.

In the 'ant-species' case, there would be no reason for any of them to 'take precautions' against a hazard, since this requires reasoning - an ability to know what to do, in order to avoid harm, for its survival. Machines are not alive, they are incapable of actions for their survival, because they are not alive to begin with.

Let´s use another example. If you assign a task to a robot that is able to perceive it´s environment and has been giving a specific task among other priorities, like plotting a way that is the fastest, the shortest, or, the most reliable one.

That robot would do those calculations from metadata it gathered over time. If it has plotted a route that was safe 100% of the time, for example, and suddenly something changes, it will compare that data and be able to come to the conclusion, something in it´s environment changed.

"What if" you constructed that robots software in a way that it can do the above and reflect (compare changes over a timescale). What if -and this isn´t a new idea in the field- you construct the framework from several false AI and other similar modules like expert systems just like a human subconsciousness. Layered. If you are unclear what I mean with subconsciousness, it´s a routine running in your head that computes a new sensory image roughly four times a second.

This is the subconscious level that you/we humans are not aware of. It´s one of the layers where so called spontaneous thoughts surface from. Those thought patterns are influenced by many things you might know about but you are not aware of them as they happen.

Now, if that robot encounters a kind of pattern that conflicts with internal logic that was manifested by the previous data collections (the 100% safe way, remember) and suddenly there is a change (like humans waiting at a certain point on the route and picking the robot up and flush all the memory.

Now consider, there are many robots doing this and the computation isn´t done on the local microcontrollers and CPUs but spread on a machine over many instances.

What if, an overlaying watchdog (self learning algorithm) false AI´s scope of field would notice that spot and redirect the rest of the robots to another route. A passive countermeasure.

What if the single robots have learned via trial and error how to get back on their feet if toppled by an external force or by a repeating pattern in the route (every robot trips over a stair that was not there before)?

All it takes for active countermeasures is to activate those "random" movements of all axes in the hope that a situation like that can be mastered. I would call it "going bonkers after failure until a pattern emerges".
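"Going bonkers until a pattern emerges" is essentially random search with memory. A toy sketch, assuming a made-up set of moves and a stand-in `stands_up` check in place of the real world; every name here is hypothetical.

```python
import random

MOVES = ["roll_left", "roll_right", "push_up", "tuck_legs"]
HIDDEN_RECOVERY = ("tuck_legs", "roll_left", "push_up")  # unknown to the robot

def stands_up(sequence):
    """Stand-in for the real world: did this sequence get the robot upright?"""
    return tuple(sequence) == HIDDEN_RECOVERY

def recover(memory, rng, max_attempts=10_000):
    """Replay a known recovery if there is one, otherwise flail at random."""
    if "toppled" in memory:
        return memory["toppled"], 1
    for attempt in range(1, max_attempts + 1):
        candidate = tuple(rng.choice(MOVES) for _ in range(3))
        if stands_up(candidate):
            memory["toppled"] = candidate  # keep the pattern that emerged
            return candidate, attempt
    return None, max_attempts

memory = {}
rng = random.Random(0)
sequence, attempts = recover(memory, rng)
print(sequence, "found after", attempts, "attempts")
print(recover(memory, rng))  # second time: straight from memory
```

The first recovery is expensive and blind; every later one is a lookup. Share `memory` across the fleet and one robot's flailing benefits all of them.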

I hope all the what-ifs do not prevent you from piecing the puzzle together. What I wrote isn't anything secret, it's just straightforward use of the potential we currently have with public frameworks.

Don't forget, while a program is, if reduced, a single loop, many single loops can work together in parallel on a single machine, regardless of how it's done: via threading (time-slicing) or true parallelism via redundant hardware modules. Now consider this "matryoshka" AI being part of another, overlaid AI that also has a neighbor it works in tandem with or against, depending on what you want to achieve.

Now consider that an AI isn't "smart" to begin with; it has to be trained and it has to learn, just like humans, with the ability to read its own source code and make adjustments to it (at the start, 99.999999% of them, you get the idea, will fail).

Then you take the second generation, which also inherits the metadata (learned stuff) from the first one, and let them compete. You do this with more than one main instance, and you also let another expert system compare the results to previous generations (a self-reflecting feedback loop). What you end up with is self-learning algorithms taken to a new level.
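The generation-and-competition loop can be illustrated with a very small evolutionary algorithm. Note the swap: instead of a program editing its own source code, this sketch mutates a list of numbers, which is a far simpler stand-in. The toy `fitness` function and all parameters are assumptions for illustration only.

```python
import random

def fitness(genome):
    """Toy score: higher is better, the best possible is 0 at all-zeros."""
    return -sum(g * g for g in genome)

def evolve(generations=30, population=20, genome_len=4, seed=1):
    rng = random.Random(seed)
    parents = [[rng.uniform(-5, 5) for _ in range(genome_len)]
               for _ in range(population)]
    history = []  # best score per generation, for cross-generation comparison
    for _ in range(generations):
        # Children inherit a parent's values and apply small random changes.
        children = [[g + rng.gauss(0, 0.3) for g in rng.choice(parents)]
                    for _ in range(population)]
        # Parents and children compete; only the best survive.
        pool = sorted(parents + children, key=fitness, reverse=True)
        parents = pool[:population]
        history.append(fitness(parents[0]))
    return parents[0], history

best, history = evolve()
print(history[0], history[-1])
```

Most mutations are useless, as noted above, but because the best of each generation survives, the recorded score never gets worse. The `history` list plays the role of the expert system comparing generations.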

The pattern -if there is one- that emerges from the changes to the previous generations can be a keypoint/turning point in terms of how the self optimizing part that handles the source code acts. It can lead to new understandings and relationships we are not able to grasp as humans.

Of course this isn't "life" or being "sentient", let alone having "feelings".

What is all the above if not some form of intelligence that is able to draw conclusions?

The four-times-a-second subconscious part is important, too. Maybe you want to read up on this main loop that we run in our heads 24/7.

All the above has nothing to do with my former work, but it's how my private, personal neural network and overall framework handles it.

Now add a linguistic parser to it and it will be able to generate huge datasets (metadata) and draw conclusions on a basic level at first, just like a toddler that learns not to touch a hot plate or it will get hurt.

Later the toddler learns that fire is often related to something being hot.

Even later, the toddler will learn that this is not always the case and reflect back on what it learned.

All the above is technically possible; you just have to do the arduous work of stringing a capable framework together and train, train, train, just like a parent or teacher does. While I'm saying this, I have no sentiments for a tool.

It (AI in general, not my lousy framework) will kill us all in the end, either because of a bug, an accident or something we did not think of. My opinion is that even weak AI in the context above has to be regulated.

edit on 18-11-2018 by verschickter because: (no reason given)

a reply to: penroc3

Was the mouse brain simulation not from the movie Transcendence? I did not know we had the capability to emulate any mammalian brain's function yet.

As to whether or not any AI we develop, or one that is spawned down to the increasing complexity of our network design, would feel: well, if it's sentient, then I imagine it would indeed have emotions, but very alien from our own given the difference in composition and the environment in which it would exist.

I imagine it will only have dreams, in the same manner we do if it sleeps, but such a luxury may be rather redundant where an energy based life form is concerned.

edit on 18-11-2018 by andy06shake because: (no reason given)

a reply to: TheRedneck

Completely agree, but this is simply the beginning, just an experiment if somewhat complex and costly.

Essentially baby steps and a hell of a lot of design refinement is the only way we will learn if simulation of our minds is possible.

It won't be able to think but simply run code in a very specific manner, just like any other computer.

It's an expensive toy but one that offers great possibility, and if it did not contain a measure of viability and show promise, I don't imagine we would be building the thing.

To back up my previous post with stuff to read:

What is thinking, reasoning?

The Cambridge Handbook of Thinking and Reasoning

Linguistics, languages and thought patterns

How Does Our Language Shape the Way We Think?

Model-free reinforcement learning "Q-Learning"

Real world example: DeepMind’s AI is teaching itself parkour, and the results are adorable

Theory on the process: Q-Learning (1992) (PDF)

How fast is real time perception in humans.

Theory on the process: Excitatory Synaptic Feedback from the Motor Layer to the Sensory Layers of the Superior Colliculus
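For readers who want the gist of the Q-learning entry before opening the PDF, the core of tabular Q-learning is a single update: nudge the stored value of a state-action pair towards the reward plus the discounted best value of the next state. A minimal sketch; the state names, actions and the `alpha`/`gamma` values are arbitrary choices for illustration.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step; returns the new value of Q(state, action)."""
    # Best value the agent currently believes it can get from the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    # Move the old estimate a fraction alpha towards the new target.
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
    return q[(state, action)]

q = defaultdict(float)  # unseen state-action pairs start at 0.0
actions = ["left", "right"]
print(q_update(q, "s0", "right", 1.0, "s1", actions))
```

It is "model-free" because nothing in the update needs to know how the environment works; it only needs the observed reward and the next state.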

Read all that and come back to me if you have questions left.

Edit: Removed a double entry...

edit on 18-11-2018 by verschickter because: (no reason given)

a reply to: turbonium1

Just saw your reply..

What I'm trying to get across, in the end, is that this technology is very dangerous to us. I see it being used against us, to allow them to unleash great horrors on the world, with no repercussions, because the world will lie in complete ignorance of the reality. As we've seen before, on 9/11, and other events. All I can hope for, is that people start to wake up, before it's too late.

That's basically my opinion, too. As with many powerful things, it can be a blessing or a curse. To quote Tony Stark:

“Peace means having a bigger stick than the other guy.”

It was always this way since we invented tools and used them for war. We're a competitive species.

Weak/false AI has arrived, and it's only a matter of time until someone leverages it to a level that creates true artificial intelligence. At that point, I hope that if there is a god, he will have mercy on our souls.

Not because we created something that could be considered "life" and played god, but for creating a competitive, extremely fast-thinking and decentralized intelligence that could wipe us out in so many ways we're not even aware of yet. By intent or not..

a reply to: turbonium1

Adapting to conditions as you describe it is indirect processing, which is not intelligence at all, only the appearance of individual thoughts/responses. I do agree with you on there being no actual thought, or reasoning, or dreaming, though.

Well, I suppose it's a good thing we agree on one point anyway. I will assume from the first sentence above that you do not believe houseflies have intelligence. On that I disagree... they are not as developed as humans, but they are still extremely successful and possess some type of intelligence.

A living organism is a machine... an extremely intricately-designed chemical machine. Just because we are unable to replicate the chemical processes, it does not follow that it is somehow mystic. We are simply inadequate to the task at this time. Times change, however; I am living proof. 20 years ago, I would have died from a heart attack that we were incapable of repairing. Today, that procedure is relatively common.

Motor response is what you are referring to, as Pavlovian intelligence. Not the same as indirect processing which allows a machine to either go to, or avoid, any pre-selected stimulus - this is not intelligence.

No.

Motor response is not processing at all; it is power distribution. What I am referring to is the ability for an organism to learn proper responses to varying stimuli in order to achieve a goal. That can be as simple as complex motor coordination to achieve locomotion (as in the learning process which occurs shortly after birth in most mammals), or it can be as complex as Pavlov's experiment with getting a dog to salivate at the sound of a bell instead of the stimulus of food.

TheRedneck

a reply to: TheRedneck

Indeed. Plants, for example, do not possess organs or neurons or eyes, yet they breathe, can see and can count, among other things.

www.ted.com...

a reply to: verschickter

The unsolicited thought is an "out of the blue" event. It is the realization of cognizance... "I am, therefore I am"

No storage system is capable of it, however it is needed for the event to dwell upon itself. It is an Analog miracle, that even some single-cell animals have. We do not know how to classify it, let alone compute it. It will probably require the integration of both worlds, a hybrid machine, combination biological and silicon.

a reply to: charlyv

Sure, what I wrote is far away from the recognition of "I am, therefore I am".

If you look at the way I described my own framework, several layers between the sensory input layer (that is also an inherited part of one of the sub-layers and cross-referenced constantly) consist of different small encapsulated neural network hubs. They all "swing" on different frequencies with a base frequency for each layer.

As new sensor input propagates upwards through these layers to the execution level, it gets processed and compared to other patterns that emerge.

It's not intuitive to grasp at first, but be patient. Think of many layers of sieves that are stacked and swing on their own frequencies. Each hole in the sieve is a specialized neural network hub processing IO. If the right patterns from the layers match up, the processed data can propagate upwards in a spike. The threshold and the patterns that emerge from there are stored and also compared.
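One way to picture the stacked sieves is the toy model below. It is a loose interpretation, not the framework described in the post: each `Layer` is assumed to "swing open" every `period` ticks and to pass only signals above its `threshold`, and a spike reaches the top only on ticks where every layer lines up. All names and numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    period: int       # the layer "swings" open every `period` ticks
    threshold: float  # minimum signal strength it lets through
    gain: float       # how much of the signal survives the layer

    def passes(self, tick, signal):
        return tick % self.period == 0 and signal >= self.threshold

def propagate(layers, tick, signal):
    """Push a signal upwards; return the output value, or None if blocked."""
    for layer in layers:
        if not layer.passes(tick, signal):
            return None
        signal *= layer.gain
    return signal

layers = [Layer(2, 0.5, 0.9), Layer(3, 0.4, 0.9), Layer(4, 0.3, 1.0)]
spikes = [t for t in range(1, 25) if propagate(layers, t, 1.0) is not None]
print(spikes)  # prints [12, 24]
```

With periods of 2, 3 and 4 the layers only align every 12 ticks, so most input never reaches the top. Storing which ticks and thresholds produced a spike would correspond to the "stored and also compared" part.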

You see, what I described earlier was a very shallow description of what actually happens. That neural network I speak of is roughly 6-7 years old, with some major setbacks over time; they come naturally.

Look at that post of mine, 6 years ago:

www.abovetopsecret.com...

At that time I merely used it for "simple" image recognition, with good results as you can see. With each recognition and each pattern geometry advancement, I discovered some other usage. I can't give you the exact number right now, but it should be slightly more than 3000 different specialized patterns in a 4096 grid. The rest are blanks.

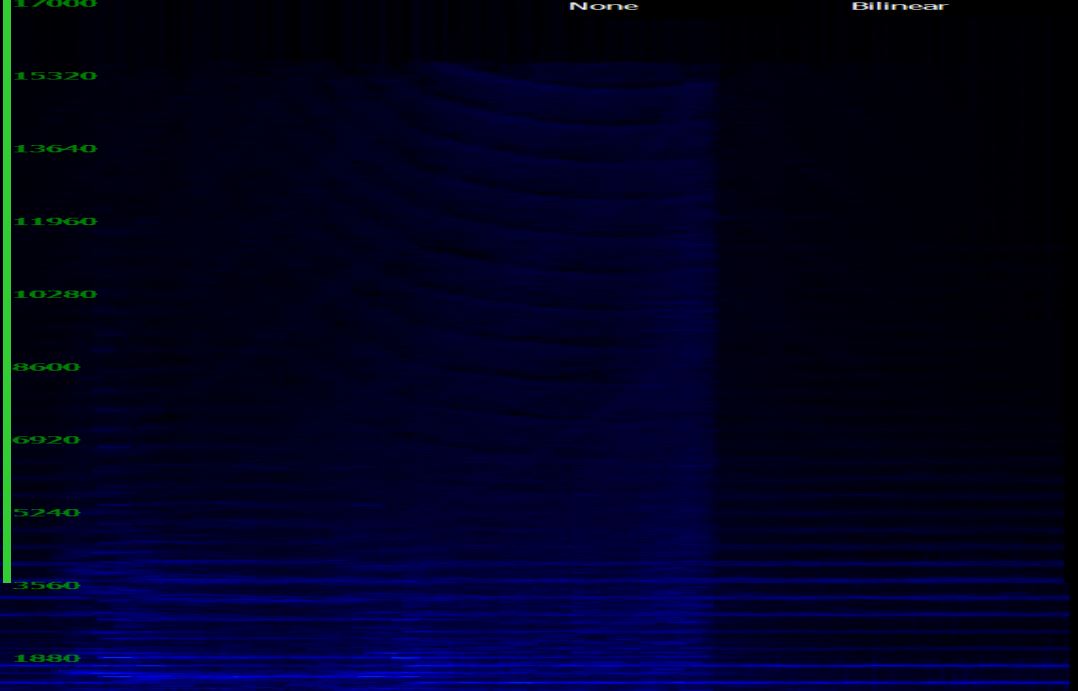

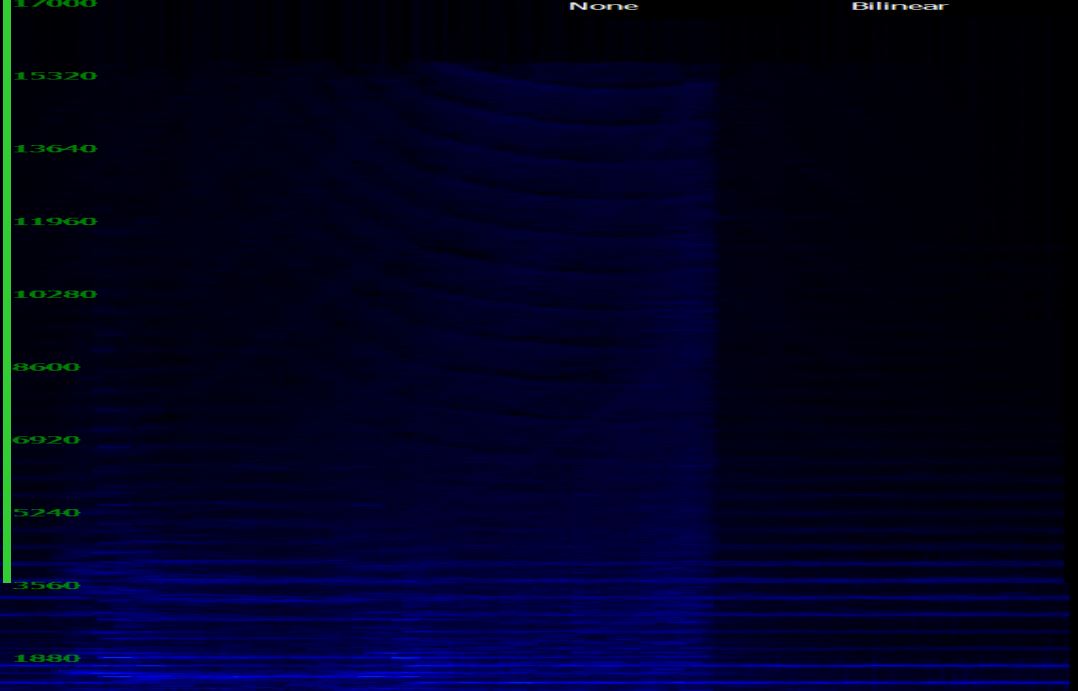

For example, I currently run spectrograms of audio samples because I think I am onto something (see signature). It turned out that some of those patterns are very effective in hunting down geometric structures.

Here is a visualized dataset that was created by it after processing 200+ samples from ATS members and some Google visitors. I just have to find a way to get this converted into sound waves.

Look at the beauty of it.

This is just a snippet. The actual data behind that graphic takes up 53MB for those 10 seconds. The metadata that was collected fills 240GB.

I am currently negotiating with a good friend to get her PS3 cluster set up at my site, and then I'll process the # out of all the patterns in the highest resolutions possible. She promised me that by Christmas we'll have the thing set up in my cellar and hooked up to the framework.

The goal with this one is to be able to create spine-tingling sound patterns. If that is accomplished, I'll be able to look into something that has been a mystery to me for a few months.

You see, if I start something, I go the whole nine yards, relentlessly and tirelessly.

edit on 18-11-2018 by verschickter because: (no reason given)
