
Could not find a section dedicated to the Computer Apocalypse, so off to the Skunk it goes. (YASS: Yet Another Sunday Skunk)

I have been interested in all the talk about the AI singularity for a while, and have come up with a theory on why we may not see it in our lifetime. I am not saying it cannot happen, just that it will be flawed as long as we are in a binary system. This is my theory on it.

Currently the vast majority of computers and devices use binary for their calculations. This means a clear-cut yes or a clear-cut no, at both the hardware level and the software level. This limitation stops most computers from handling the paradoxes and fuzzy logic that the human mind can dig through.

I propose that until we get to a five-state logic system, computers will not become self-aware. The five states would be Yes, Maybe Yes, Maybe, Maybe No and No. Humans can think in the logic loops that give a Maybe, or a degree of Maybe, as an answer; computers cannot.
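For comparison, here is a minimal Python sketch of a five-valued logic running on an ordinary binary computer. It assumes the five states map to the truth degrees 0, 1/4, 1/2, 3/4 and 1, and uses Łukasiewicz-style operators (NOT as 1 - x, AND as min, OR as max, implication as min(1, 1 - a + b)). The mapping, the operator choice and all names are illustrative assumptions, not part of the original post.

```python
from fractions import Fraction

# Hypothetical mapping of the five proposed states onto truth degrees in [0, 1].
STATES = {
    "No": Fraction(0),
    "Maybe No": Fraction(1, 4),
    "Maybe": Fraction(1, 2),
    "Maybe Yes": Fraction(3, 4),
    "Yes": Fraction(1),
}
NAMES = {degree: name for name, degree in STATES.items()}


def neg(a: Fraction) -> Fraction:
    """NOT: Maybe Yes becomes Maybe No, Maybe stays Maybe."""
    return 1 - a


def conj(a: Fraction, b: Fraction) -> Fraction:
    """AND: as true as the weaker of the two inputs."""
    return min(a, b)


def disj(a: Fraction, b: Fraction) -> Fraction:
    """OR: as true as the stronger of the two inputs."""
    return max(a, b)


def implies(a: Fraction, b: Fraction) -> Fraction:
    """Lukasiewicz implication: fully true when b is at least as true as a."""
    return min(Fraction(1), 1 - a + b)


def show(degree: Fraction) -> str:
    return NAMES[degree]


maybe_yes, maybe = STATES["Maybe Yes"], STATES["Maybe"]
print(show(neg(maybe_yes)))             # Maybe No
print(show(conj(maybe_yes, maybe)))     # Maybe
print(show(disj(maybe_yes, maybe)))     # Maybe Yes
print(show(implies(maybe_yes, maybe)))  # Maybe Yes
```

Every operation maps the five values back onto the same five values, so the logic is closed.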

Back at the start of logical arithmetic systems, around the 1840s, three-state (ternary) logic machines made of wood existed; Thomas Fowler invented one. Other systems were built up to around 1973. As far as I can tell, the last major test of such a system was TERNAC, a FORTRAN-based program that emulated three-state logic on a binary system, and it used less memory and less power than the equivalent binary program.
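Emulating three-state logic on binary hardware, as the post describes TERNAC doing, comes down to storing base-3 digits in ordinary binary integers. A minimal Python sketch, assuming balanced ternary (digits -1, 0 and +1), one common ternary variant; it illustrates the idea and is not a reconstruction of TERNAC or of Fowler's machine.

```python
def to_balanced_ternary(n: int) -> list[int]:
    """Balanced-ternary digits (-1, 0, +1) of n, least significant first."""
    if n == 0:
        return [0]
    digits = []
    while n != 0:
        r = n % 3           # Python's % always yields 0, 1 or 2
        if r == 2:
            r = -1          # 2 = 3 - 1: emit digit -1 and carry one upwards
        digits.append(r)
        n = (n - r) // 3
    return digits


def from_balanced_ternary(digits: list[int]) -> int:
    return sum(d * 3**i for i, d in enumerate(digits))


def show(digits: list[int]) -> str:
    symbols = {-1: "-", 0: "0", 1: "+"}
    return "".join(symbols[d] for d in reversed(digits))


print(show(to_balanced_ternary(5)))    # +--  (9 - 3 - 1)
print(show(to_balanced_ternary(-5)))   # -++  (-9 + 3 + 1)
```

One property that made balanced ternary attractive: negative numbers need no separate sign, as the -5 example shows.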

What interests me is that, although ternary systems are superior, they lost out to binary.

This makes me wonder: could the Singularity have already occurred, and been stopped with a simple axe to its power line, after which the safer binary system was implemented full force?

Something to chew on late on a Sunday night while you enjoy a

CoBaZ


This has crossed my mind before. Binary logic does not allow for "maybe."

a reply to: CoBaZ

Well, get ready for some serious changes then, because fuzzy logic computers are here. Started by Professor Lotfi Zadeh way back in 1964, fuzzy logic was relatively obscure until now. After a few notable advances, it's finally getting the recognition it deserves. A lot of research on fuzzy logic computers is being done in Asia. We may see true AI in our lifetime.
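Zadeh's fuzzy logic replaces true/false with a degree of membership between 0 and 1. A minimal Python sketch, assuming triangular membership functions and Zadeh's standard min/max/complement operators; the temperature sets `cold` and `warm` and their breakpoints are hypothetical.

```python
def triangular(x: float, left: float, peak: float, right: float) -> float:
    """Degree (0..1) to which x belongs to a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical fuzzy sets over air temperature in degrees Celsius.
def cold(t: float) -> float:
    return triangular(t, -10, 0, 20)


def warm(t: float) -> float:
    return triangular(t, 10, 20, 30)


# Zadeh's standard fuzzy operators.
def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)


def fuzzy_not(a: float) -> float:
    return 1.0 - a


t = 15
print(cold(t), warm(t))                       # 0.25 0.5
print(fuzzy_and(cold(t), warm(t)))            # 0.25
print(fuzzy_or(cold(t), fuzzy_not(warm(t))))  # 0.5
```

At 15 °C the temperature is partly cold and partly warm at once, which is the graded "maybe" the thread is discussing.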


Well, binary logic does and does not ... it depends on how you think about it.

Take a simple Verilog program, for example. Verilog is a hardware description language (like VHDL) used to describe binary systems, yet it has three states, 1/0/x, and actually a fourth, z.

These four states are: the voltage level equivalent to 1, the voltage level equivalent to 0, an undefined value (x) that could be either zero or one, and the high-impedance state (z). So binary systems do take the 'z' state into account.
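The four values can be modelled in a few lines of software. A minimal Python sketch of the AND operation, following the usual Verilog rule that a z on a gate input is treated as x and that a 0 on either input forces the result to 0; `v_and` is a hypothetical helper name, not part of any Verilog tool.

```python
FOUR_STATE = {"0", "1", "x", "z"}


def v_and(a: str, b: str) -> str:
    """AND over Verilog-style four-state values '0', '1', 'x' and 'z'."""
    for v in (a, b):
        if v not in FOUR_STATE:
            raise ValueError(f"not a four-state value: {v!r}")
    # A floating (z) input to a gate is read as unknown (x).
    a = "x" if a == "z" else a
    b = "x" if b == "z" else b
    if a == "0" or b == "0":
        return "0"      # a 0 decides AND no matter what the other input is
    if a == "1" and b == "1":
        return "1"
    return "x"          # otherwise the result is unknown


values = ["0", "1", "x", "z"]
print("&  " + "  ".join(values))
for a in values:
    print(a + "  " + "  ".join(v_and(a, b) for b in values))
```

The printed table should match the standard Verilog AND table: 0 wins, 1 AND 1 gives 1, and every other combination involving x or z gives x.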

What I think is a much more interesting advancement is a biological computer, where each cell can hold all possible values at the same time.


edit on 7/12/2015 by bjarneorn because: (no reason given)

originally posted by: BlackmoonJester

a reply to: CoBaZ

Well, get ready for some serious changes then, because fuzzy logic computers are here. Started by Professor Lotfi Zadeh way back in 1964, fuzzy logic was relatively obscure until now. After a few notable advances, it's finally getting the recognition it deserves. A lot of research on fuzzy logic computers is being done in Asia. We may see true AI in our lifetime.

Put into use in Japan, I think in the late 90s, for washing machines to balance the load and for vacuum cleaners to adjust for carpet height.

All systems use binary. The 1s and 0s (on and off) actually flow through logic gates (AND, OR, NAND, etc.). The "gate" is what allows "maybe", and modern systems have that built in as part of the chip technology.

edit on 7-12-2015 by Gothmog because: (no reason given)

The thing with "maybe" is that it's only a delay before the yes/no (on/off) state, and it favours the no/off state.

Yes/on/doing is absolute

No/off/not doing is absolute

Maybe is still off/not doing: while you're deciding between yes and no, you are in effect choosing the "not doing" state.

So I do not think that the "maybe" state is an issue when it comes to AI.


a reply to: CoBaZ

I suggest you look up quantum computers, because they don't use binary. AI will be built on quantum computers using qubits.

Here's some information:

A classical computer has a memory made up of bits, where each bit represents either a one or a zero. A quantum computer maintains a sequence of qubits. A single qubit can represent a one, a zero, or any quantum superposition of those two qubit states; a pair of qubits can be in any quantum superposition of 4 states, and three qubits in any superposition of 8 states.
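The "4 states for a pair, 8 for three" point can be seen by simulating qubits classically. A minimal Python sketch, assuming a plain state-vector simulation: n qubits are stored as 2^n complex amplitudes, and a Hadamard gate on every qubit turns |00...0⟩ into an equal superposition of all 2^n basis states. The function names are hypothetical, and a real quantum computer does not expose these amplitudes directly; measuring it yields a single basis state.

```python
import math


def apply_hadamard(state: list[complex], qubit: int) -> list[complex]:
    """Apply a Hadamard gate to one qubit of an n-qubit state vector."""
    h = 1 / math.sqrt(2)
    new_state = state[:]
    step = 1 << qubit
    for i in range(len(state)):
        if i & step == 0:            # visit each (bit = 0, bit = 1) pair once
            a, b = state[i], state[i | step]
            new_state[i] = h * (a + b)
            new_state[i | step] = h * (a - b)
    return new_state


def uniform_superposition(n: int) -> list[complex]:
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j                # start in |00...0>
    for q in range(n):
        state = apply_hadamard(state, q)
    return state


for n in (1, 2, 3):
    state = uniform_superposition(n)
    probs = [abs(amp) ** 2 for amp in state]
    print(n, "qubit(s):", len(state), "amplitudes, each with probability", round(probs[0], 3))
```

The list doubles with every added qubit, which is also why simulating many qubits on binary hardware quickly becomes expensive.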

No need for biological computers; however, human integration with AI may require a biological solution, i.e. humans with AI that artificially enhances human intelligence to superhuman levels.


edit on 7-12-2015 by jobless1 because: (no reason given)


Binary, ternary, ha! That's such 80's thinking, man. Get with the 90's. It's about processes, like the guy who made Mathematica said: how simple rules create complex behavior over iterations.

Take Conway's Game of Life, and the glider configuration.
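A minimal Python sketch of Conway's Game of Life, assuming the standard rules (a live cell survives with 2 or 3 live neighbours, a dead cell comes alive with exactly 3) on an unbounded grid stored as a set of live cells. The five-cell glider reappears every four generations, shifted one cell diagonally.

```python
from collections import Counter


def step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """One generation of Conway's Game of Life on an unbounded grid."""
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# The standard glider, as (x, y) with y growing downwards:
#   . # .
#   . . #
#   # # #
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

print(state == {(x + 1, y + 1) for x, y in glider})  # True
```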

Or look at fractals. Self similarity everywhere.
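A small example of self-similarity emerging from a simple rule, chosen here for illustration: Pascal's triangle taken mod 2. Each row comes from the one above by adding neighbouring entries and keeping only odd or even, and the printed pattern is the Sierpinski triangle. The function name is hypothetical.

```python
def sierpinski(n_rows: int) -> list[str]:
    """Rows of Pascal's triangle mod 2: '#' marks odd entries, ' ' even ones."""
    lines = []
    row = [1]
    for i in range(n_rows):
        cells = " ".join("#" if v else " " for v in row)
        lines.append(" " * (n_rows - 1 - i) + cells)
        # Each entry is the sum of the two above it, kept mod 2 (an XOR).
        row = [1] + [(a + b) % 2 for a, b in zip(row, row[1:])] + [1]
    return lines


for line in sierpinski(16):
    print(line)
```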

Is that binary, or even trinary? No. It is this whole other thing, and it is sort of different from logic as well, being more organic than logic. But even a procedurally generated planet still lacks a sense of self-awareness, and the program that generated it even more so. A lack of self-awareness in software prevents the detection of self-similarity in its environment.

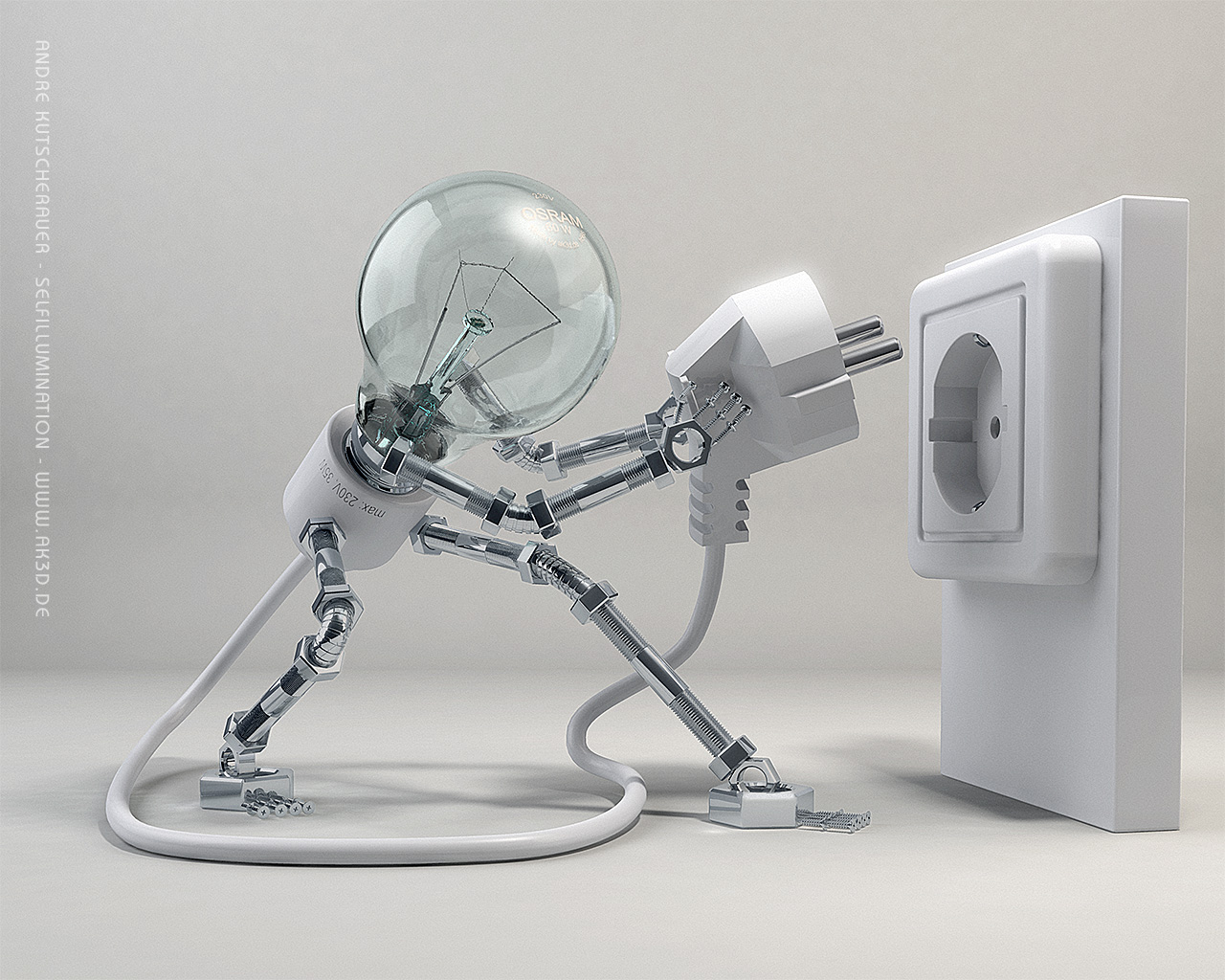

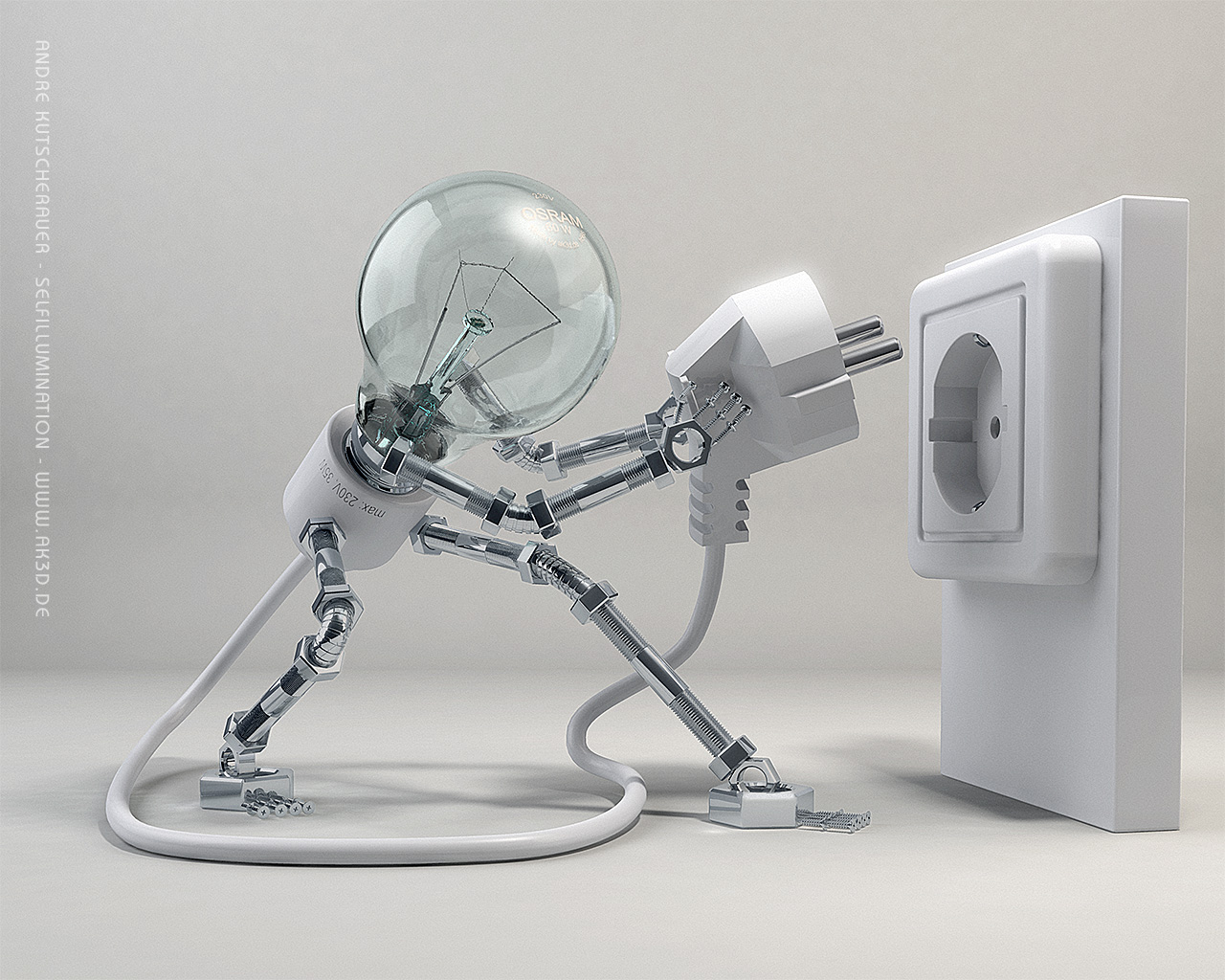

With the current state of robotics, a robot would have to be preprogrammed with the parameters that allow it to detect that the plug will fit: that the negative space of the socket will fit the prongs of the plug, and that the prongs are still straight and haven't been bent. Pretty soon we are using up too much memory doing one specific little thing, let alone being aware of external existence and our place in it.

Mike Grouchy


originally posted by: BlackmoonJester

a reply to: CoBaZ

Well, get ready for some serious changes then, because fuzzy logic computers are here. Started by Professor Lotfi Zadeh way back in 1964, fuzzy logic was relatively obscure until now. After a few notable advances, it's finally getting the recognition it deserves. A lot of research on fuzzy logic computers is being done in Asia. We may see true AI in our lifetime.

Exactly, but those fuzzy logic systems still use binary. It's easy to create an algorithm which can answer "maybe" like the OP suggests. It doesn't matter if you're restricted to binary: if you're clever enough in how you design your algorithm, you can do almost anything. As already pointed out by jobless1, what the OP is really seeking is quantum qubits.
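As a minimal Python sketch of that point, assuming a confidence score in [0, 1] and hypothetical cut-offs at 0.2, 0.4, 0.6 and 0.8, a program can map a graded score onto the OP's five answers. Here the score is simply the fraction of yes/no checks that passed, so purely binary inputs produce a "Maybe Yes".

```python
from bisect import bisect_right

# Hypothetical cut-offs splitting a confidence score in [0, 1] into five answers.
THRESHOLDS = [0.2, 0.4, 0.6, 0.8]
LABELS = ["No", "Maybe No", "Maybe", "Maybe Yes", "Yes"]


def answer(confidence: float) -> str:
    """Turn a graded confidence into one of the OP's five states."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie between 0 and 1")
    return LABELS[bisect_right(THRESHOLDS, confidence)]


def confidence_from_checks(checks: list[bool]) -> float:
    """Fraction of yes/no checks that passed: binary inputs, graded output."""
    if not checks:
        raise ValueError("need at least one check")
    return sum(checks) / len(checks)


checks = [True, False, True, True]
print(answer(confidence_from_checks(checks)))  # Maybe Yes (3 of 4 passed)
print(answer(0.5))                             # Maybe
```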

edit on 7/12/2015 by ChaoticOrder because: (no reason given)

One of the main reasons for binary is signal loss. Normally with computers you send an electrical signal down the line, and if it's over a certain value it's a 1, and if not it's a 0, with a ground value to determine the cut-off point. With a lot of old systems, the parts of the machine were electrically separate and maybe even in another cabinet in the room, which introduced other possibilities of error due to signal delays, slight differences in cable makeup, etc. (ah, the good old days).
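The threshold idea can be sketched in Python to show why fewer levels tolerate more noise. This assumes a hypothetical 3.3 V signal swing, evenly spaced levels and decoding to the nearest level; real logic families specify separate input-high and input-low thresholds, so the numbers are only illustrative.

```python
def decode(voltage: float, levels: int, v_max: float = 3.3) -> int:
    """Map a received voltage to the nearest of `levels` evenly spaced symbols."""
    if levels < 2:
        raise ValueError("need at least two signal levels")
    spacing = v_max / (levels - 1)
    symbol = round(voltage / spacing)
    return min(max(symbol, 0), levels - 1)   # clamp to the valid range


sent_voltage = 0.0         # symbol 0 is transmitted as 0 V
noise = 1.0                # hypothetical 1 V of interference on the line
received = sent_voltage + noise

for levels in (2, 3, 5):
    margin = 3.3 / (levels - 1) / 2
    got = decode(received, levels)
    print(f"{levels} levels: noise margin {margin:.3f} V, decoded {got}")
```

With the same 1 V of interference on a 0 V signal, the two-level receiver still reads 0, while the three- and five-level receivers misread it as 1, because the noise margin shrinks as the levels get closer together.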

There is no real demand for multistate machines: if you want 3 states, you could just use 2 binary bits to cover the range. Also, baking such chips would probably cause a lot of problems, as we'd lose much of the last 50 years' worth of advances and have to work out new solutions.
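Using 2 bits per 3-state digit (trit) leaves one of the four bit patterns unused. A denser option, sketched here in Python as an illustration, packs five trits into one byte, since 3^5 = 243 fits within the 256 values of 8 bits. The function names are hypothetical.

```python
import math


def pack_trits(trits: list[int]) -> int:
    """Pack up to five trits (values 0, 1, 2) into a single 8-bit value."""
    if len(trits) > 5 or any(t not in (0, 1, 2) for t in trits):
        raise ValueError("expected at most five trits, each 0, 1 or 2")
    value = 0
    for t in reversed(trits):
        value = value * 3 + t       # base-3 number, first trit least significant
    return value                    # at most 3**5 - 1 = 242, so it fits in a byte


def unpack_trits(value: int, count: int = 5) -> list[int]:
    trits = []
    for _ in range(count):
        value, t = divmod(value, 3)
        trits.append(t)
    return trits


print(f"naive: 2 bits/trit, packed: {8 / 5} bits/trit, minimum: {math.log2(3):.3f} bits/trit")
```

Packing gets within about 1% of the theoretical minimum of log2(3) ≈ 1.585 bits per trit.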


Maybe (z): is this a parameter letting a machine know that it can't suddenly take control and become aware? Maybe.

originally posted by: CoBaZ


There is a computer that predicted the position of bin Laden, and it was very accurate.

originally posted by: Maxatoria

One of the main reasons for binary is signal loss. Normally with computers you send an electrical signal down the line, and if it's over a certain value it's a 1, and if not it's a 0, with a ground value to determine the cut-off point. With a lot of old systems, the parts of the machine were electrically separate and maybe even in another cabinet in the room, which introduced other possibilities of error due to signal delays, slight differences in cable makeup, etc. (ah, the good old days).

There is no real demand for multistate machines: if you want 3 states, you could just use 2 binary bits to cover the range. Also, baking such chips would probably cause a lot of problems, as we'd lose much of the last 50 years' worth of advances and have to work out new solutions.

Attenuation is accounted for when the data is put into packets. Error checking happens in the encapsulation process, which puts the data into packets and accounts for the bits in the data. In other words, if a packet gets changed in transmission due to signal loss ("attenuation"), the computer knows, because it can see the change when it receives the packet.
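A minimal Python sketch of that kind of check, assuming a CRC-32 appended to each packet and computed with the standard library's `zlib.crc32`. Real protocols differ in where and how they check (Ethernet frames carry a CRC-32 frame check sequence, TCP a 16-bit checksum), and a check like this only detects corruption; it does not repair it. `make_packet` and `check_packet` are hypothetical names.

```python
import zlib


def make_packet(payload: bytes) -> bytes:
    """Append a CRC-32 of the payload as 4 big-endian bytes."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_packet(packet: bytes) -> bool:
    """Recompute the CRC on arrival and compare it with the one sent."""
    payload, received_crc = packet[:-4], packet[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == received_crc


packet = make_packet(b"hello, world")
corrupted = bytearray(packet)
corrupted[0] ^= 0b0000_0100        # simulate one bit flipped by line noise

print(check_packet(packet))            # True
print(check_packet(bytes(corrupted)))  # False
```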

There is a very real demand for multistate machines.

Here is a glimpse of what a quantum computer is capable of:

Integer factorization, which underpins the security of public key cryptographic systems, is believed to be computationally infeasible with an ordinary computer for large integers if they are the product of few prime numbers (e.g., products of two 300-digit primes).[13] By comparison, a quantum computer could efficiently solve this problem using Shor's algorithm to find its factors. This ability would allow a quantum computer to decrypt many of the cryptographic systems in use today, in the sense that there would be a polynomial time (in the number of digits of the integer) algorithm for solving the problem. In particular, most of the popular public key ciphers are based on the difficulty of factoring integers or the discrete logarithm problem, both of which can be solved by Shor's algorithm. In particular the RSA, Diffie-Hellman, and Elliptic curve Diffie-Hellman algorithms could be broken. These are used to protect secure Web pages, encrypted email, and many other types of data. Breaking these would have significant ramifications for electronic privacy and security.
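In Shor's algorithm the quantum computer is only needed to find the order r of a number a modulo N (the smallest r with a^r ≡ 1 mod N); turning r into factors is ordinary arithmetic. A minimal Python sketch of that classical reduction, with the order found by brute force, which takes exponential time and is exactly the step a quantum computer would replace. The function names are hypothetical.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, by brute force.

    This is the step Shor's algorithm hands to the quantum computer; done
    classically like this it takes exponential time in the number of digits.
    """
    if n < 2 or gcd(a, n) != 1:
        raise ValueError("need n >= 2 and a coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_with_order(n: int, a: int):
    """Classical post-processing from Shor's algorithm; None means 'try another a'."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:          # a**(r/2) = -1 (mod n) gives only trivial factors
        return None
    return gcd(half - 1, n), gcd(half + 1, n)


print(factor_with_order(15, 7))   # (3, 5)
print(factor_with_order(21, 2))   # (7, 3)
```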

It would make every computer on the planet vulnerable.

Indeed, the potential processing power of quantum computers truly boggles the mind. Because a quantum computer essentially operates as a massive parallel processing machine, it can work on millions of calculations simultaneously (whereas a traditional computer works on one calculation at a time, in sequence).

edit on 7-12-2015 by jobless1 because: (no reason given)


originally posted by: skunkape23

This has crossed my mind before. Binary logic does not allow for "maybe."

Non-binary computing already exists. Quantum computing is capable of factoring in the "maybe."

Where a bit can store either a zero or a 1, a qubit can store a zero, a one, both zero and one, or an infinite number of values in between—and be in multiple states (store multiple values) at the same time!

www.explainthatstuff.com...

edit on 12/7/2015 by clay2 baraka because: (no reason given)

Here's hoping they never figure it out. Can't believe people are foolish enough to try to go there.

a reply to: jobless1

We're talking about n-state computers based on a non-quantum system, if I read the OP right. He wants a 5-state system, which is doable, but by the gods I'd hate to have to do the working out at the low-level gates to make it work. Decimal-based systems have been developed, but they never really took off.

Quantum systems are another area of study and a rather fun area to read about, but since I don't have the space/resources to slow atoms down to near absolute zero, etc., I'll leave it to the lab rats.


It all depends, I suppose, on whether we want to build a machine that mimics human intelligence or one that has its own kind of intelligence, different from anything generated by a living organism. There may be value in creating a machine in our image: it may be less likely to immediately kill us upon reaching sentience. On the other hand, human intelligence is limited in size and scope, neither of which would be an inherent problem for a machine intelligence.

We're still running into the problem of how to measure (or even recognize) intelligence that is significantly different than our own.


a reply to: Maxatoria

Yeah, but do you really believe AI will be achieved by someone tinkering in their spare time? It will most likely be achieved under lab conditions at a college like MIT, on a computer custom-built for the AI, or at some large corporation.

I agree slowing atoms to near absolute zero isn't achievable now for consumer computers. Who knows what breakthroughs will happen in the next ten years, with companies like Google and Microsoft, the defense industry, and colleges like Harvard, Yale, MIT, Caltech and Stanford all working on different solutions to different problems with quantum computers. Sorry to our European counterparts I haven't mentioned, who are also making progress in quantum computers.

However, at first only a few countries will have quantum computers, and, like the atom bomb, even the research will be classified. But once the bubble is popped by other countries figuring out how to make them, the government will scrap the program and make it available to us all, which will be completely unlike the atom bomb. It will be the second tech industry boom, and the money will be outrageous.

