originally posted by: Steffer

What would be the theoretical computer (processing power) required to render a 1920x1080 HD resolution screen at 1.855e+43 frames per second?

I'm very curious what some of the responses might be, even if there aren't any possible answers.

Thanks.

The universe runs at approximately 1.855e+43 fps, if you take one Planck time (about 5.39e-44 seconds) as one frame. Perhaps a quantum computer in the future could manage it? If a universe were created in the lab, I wonder if it would run at the same fps. Interesting question.

Proof there is no conscious perception of time: This MUST BE TRUE, at least from our consciousness's point of view. Let me explain this... The human brain is connected to the outside world through millions of NEURONS. Each neuron is eventually connected to some kind of sensing device, like an eyeball or an eardrum. But here's the thing... all neural signals are DIGITIZED!!! That's how they operate; by digitally switching on and off. From this, we can conclude that even if the Universe were analog, our consciousness perception only sees digital. What makes it appear to be realistic and to seemingly flow from the past into the future is the resolution our Universe operates on. How many frames per second does our real Universe operate upon? The derivation for this is discussed below. Our Universe operates at about 1.855e+43 frames per second... amazingly fast... so fast that it's impossible for us to tell that time itself is DIGITIZED. I liken our perception of our Universe to the frames in a CGI movie... it doesn't matter how long it takes to compute each successive frame... nor does it matter if there are pauses in between the recording of the frames. Upon playback, although it appears that time is flowing smoothly, in reality, it is the sequence of frames that create this perceived tempo of time. The video can be paused and continued, and the characters within the movie (within the VR of the CGI) are none the wiser... each frame is a separate state, frozen in time, just like our mini-Universe examples.

spikersystems.com...
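For reference, 1.855e+43 is what you get from one frame per Planck time. Here is a minimal Python sketch of that arithmetic. It assumes the CODATA value for the Planck time and, purely for illustration, an uncompressed 1920x1080 frame at 24 bits per pixel; the function and constant names are made up for this example.

```python
# Assumption: CODATA 2018 Planck time, in seconds.
PLANCK_TIME_S = 5.391247e-44

# Assumption: uncompressed 1080p frame at 3 bytes (24 bits) per pixel.
WIDTH, HEIGHT, BYTES_PER_PIXEL = 1920, 1080, 3


def frames_per_second(frame_time_s: float) -> float:
    """Frame rate if every frame lasts frame_time_s seconds."""
    return 1.0 / frame_time_s


def raw_bytes_per_second(fps: float) -> float:
    """Raw pixel data rate for uncompressed frames at the given rate."""
    return WIDTH * HEIGHT * BYTES_PER_PIXEL * fps


universe_fps = frames_per_second(PLANCK_TIME_S)
print(f"frames per second:    {universe_fps:.3e}")
print(f"raw bytes per second: {raw_bytes_per_second(universe_fps):.3e}")
```

This only counts the bytes that would have to be pushed to the screen; it says nothing about the work needed to compute each frame.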

I would say the question doesn't make much sense without further explanation or context.

My thought process is this: the implied purpose of rendering an image on a display is for a human to view it, and humans probably can't perceive frame rates much over 1000 frames per second. Here is some research showing that human flicker perception is better than was once thought (50-90 Hz), but still only about 500 frames per second:

Humans perceive flicker artifacts at 500 Hz

Humans perceive a stable average intensity image without flicker artifacts when a television or monitor updates at a sufficiently fast rate. This rate, known as the critical flicker fusion rate, has been studied for both spatially uniform lights, and spatio-temporal displays. These studies have included both stabilized and unstablized retinal images, and report the maximum observable rate as 50–90 Hz. A separate line of research has reported that fast eye movements known as saccades allow simple modulated LEDs to be observed at very high rates. Here we show that humans perceive visual flicker artifacts at rates over 500 Hz when a display includes high frequency spatial edges. This rate is many times higher than previously reported. As a result, modern display designs which use complex spatio-temporal coding need to update much faster than conventional TVs, which traditionally presented a simple sequence of natural images.

That's just the tip of the iceberg on the problems with that question, but there's probably not much point in going into detail on all the other problems until this important issue is resolved. Let's say 10,000 frames per second (1.0e+4) is serious overkill for what humans can perceive; I don't see the point in asking about 1.855e+43.

edit on 2018120 by Arbitrageur because: clarification

Ques:

Hey what would it take to generate cosmic rays in a lab?

a reply to: Hyperboles

If you did, they wouldn't be "cosmic" per the name. But we make high energy protons, similar to many cosmic rays, with particle accelerators, like those in Fermilab and CERN.

Yeah, I didn't think my question would make all too much sense but I at least wanted to try.

For example, my computer is 3.4 GHz and is capable of 1080 HD video playback at about 30 frames per second.

I'm not sure what the low end specs of a computer are needed for this type of playback though.

Anyway, I was just wondering how much energy (theoretical GHz I guess) would be required to generate that intense framerate on such a small scale.

I guess what I'm asking is, how much power would it require if reality was a written script (computer code) of the highest design but only in a 1080 HD setting.

I know it's a nonsensical and silly question, but thank you for taking the time to read this.

I could really write a book on this subject in general but obviously not for a question that you call a "nonsensical and silly question". I said the human perception problem was the tip of the iceberg in the problems with this question, and you just touched on the main part of the iceberg.

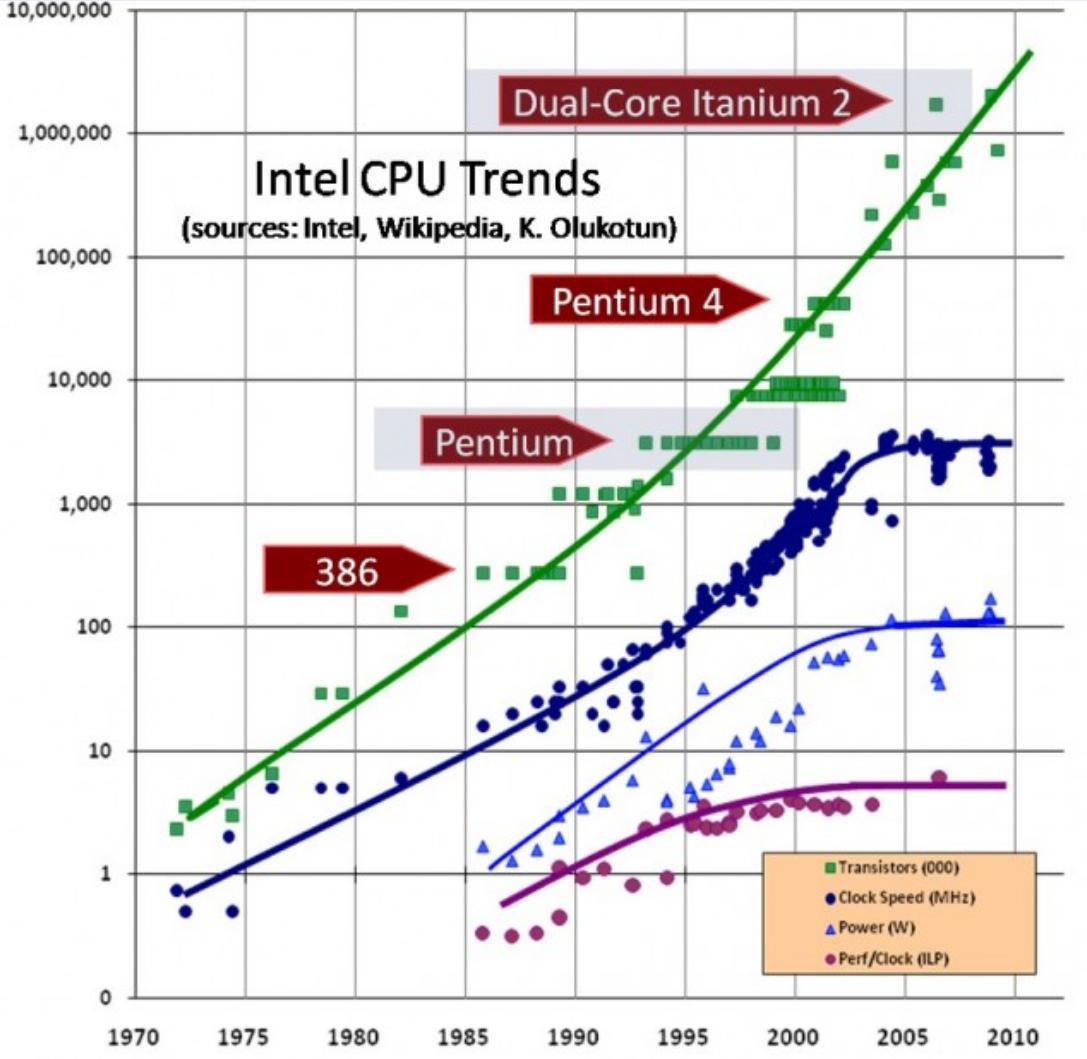

CPU clock speeds hit a technological wall around 2005, at about the speed of your processor, and I expect we will still be at that wall in 2020, so that's 15 years with no significant increase in CPU GHz. See the top blue line underneath the green line on this graph, which shows how GHz flattened out from 2005-2010; it's still flat.

The death of CPU scaling: From one core to many — and why we’re still stuck

As that article explains, once we miniaturized to 90nm transistors, the gates were so thin they could not prevent current leakage into the substrate. So you can't say that if you need 41 powers of 10 more computing power, all you have to do is run at 41 powers of 10 more gigahertz, since we are struggling to run at any more gigahertz with the current technology.

The green line on the graph is still going up, but this is adding transistors and cores, not increasing the frequency in Gigahertz. So if you're stuck at the same frequency and trying to use a simple CPU based model then you might say you need 41 powers of 10 more cores. Got any idea what the wiring on that would be like? It's hard to even imagine 41 powers of 10.
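To put a rough number on "41 powers of 10", here is a small Python sketch. It assumes the workload scales linearly with frame rate and uses the 30 fps playback figure from the question as the baseline, which is a big simplification meant only for illustration.

```python
import math

TARGET_FPS = 1.855e43   # frame rate asked about in the thread
BASELINE_FPS = 30.0     # playback rate quoted for the 3.4 GHz PC


def speedup_needed(target_fps: float, baseline_fps: float) -> float:
    """How many times faster than the baseline the target is."""
    return target_fps / baseline_fps


factor = speedup_needed(TARGET_FPS, BASELINE_FPS)
orders = math.log10(factor)

# Assumption: perfect parallel scaling, one baseline core per 30 fps.
print(f"speedup needed:       {factor:.3e}")
print(f"orders of magnitude:  {orders:.1f}")
```

Under those assumptions the factor is also the number of baseline-speed cores you would need, before even thinking about how to wire them together.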

Lastly, your specs don't even include the graphics card, which in modern computers is an additional computer on a card, sometimes even more powerful than the CPU at rendering certain types of graphics, so it depends on what type of graphics you're rendering. Some modern supercomputers are built from massively parallel GPUs (graphics processors) alongside CPUs, like this computer, which was the most powerful supercomputer on the planet in 2010:

www.theregister.co.uk...

So it uses 7168 GPUs, which deliver the same performance as 50,000 CPUs. I'm not going to do exact calculations for your silly question, but as a ballpark, if you want a trillion trillion trillion times more performance than these 7168 GPUs, then you'd need a trillion trillion trillion of these monsters, each of which uses roughly the same amount of power as 4,000 homes. That's presuming you could even hook all that processing power up to a display, which might not be possible with present technology. Plus, we don't have display technology that can effectively handle such high frame rates. So again, the problems with this whole concept are so numerous that it's way more complex than just increasing the gigahertz of the CPU.

7,168 NVIDIA Tesla M2050 GPUs coupled with Xeon and SPARC processors. According to center officials, it would have taken 50,000 CPUs and twice as much floor space to deliver the same performance using CPUs alone. In addition to its floating point performance, the Tianhe-1A machine has continued to deliver excellent performance per watt, consuming 4.04 megawatts.
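As a ballpark only, the power bill for a trillion trillion trillion of those machines can be sketched in Python. The 4.04 megawatt figure comes from the quoted article; the comparison against the Sun's output is my own addition and uses the nominal solar luminosity as an assumed reference value.

```python
MACHINES = 1e36                 # "a trillion trillion trillion" (1e12 cubed)
POWER_PER_MACHINE_W = 4.04e6    # 4.04 megawatts, from the quoted article
SUN_LUMINOSITY_W = 3.828e26     # assumption: nominal solar luminosity in watts


def total_power_w(machines: float, watts_each: float) -> float:
    """Total electrical power for the given number of identical machines."""
    return machines * watts_each


total_w = total_power_w(MACHINES, POWER_PER_MACHINE_W)
suns = total_w / SUN_LUMINOSITY_W

print(f"total power:        {total_w:.3e} W")
print(f"equivalent in Suns: {suns:.3e}")
```

So even before the wiring and display problems, the power budget alone would be many times the total output of the Sun.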

What we might be able to do with entirely different technology in the distant future, that we're not even aware of yet, is an entirely different matter, but it's difficult to predict that since it's unknown.

edit on 2018121 by Arbitrageur because: clarification

originally posted by: Hyperboles

Ques:

Hey what would it take to generate cosmic rays in a lab?

It depends. If you want to make primary cosmic rays, you will have to make a beam of particles mixed roughly 95% protons and 4% helium, with the rest made up of random atomic nuclei.

You then need to accelerate those to energies between 1 GeV and 10^9 TeV. The top of that range is about 8 orders of magnitude higher in energy than current accelerators reach (the LHC runs at roughly 6.5 TeV per proton).

If you are talking about the cosmic rays that we tend to observe at the Earth's surface, you are mostly talking about muons. You create these by colliding protons to produce pions, focusing the pions and letting them decay, and then focusing the resulting muons. Again, you need to accelerate these to rather high energies.
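To get a feel for that energy range, here is a short Python sketch. The 1 GeV and 10^9 TeV bounds are the ones quoted above; the 6.5 TeV per proton figure for the LHC is an assumption added for comparison, and the names are just for this example.

```python
import math

EV_TO_JOULE = 1.602176634e-19   # exact by the SI definition of the electronvolt

LOW_EV = 1e9           # 1 GeV, bottom of the quoted range
HIGH_EV = 1e21         # 10^9 TeV, top of the quoted range
LHC_PROTON_EV = 6.5e12 # assumption: about 6.5 TeV per proton at the LHC


def orders_of_magnitude(a: float, b: float) -> float:
    """How many powers of ten a is above b."""
    return math.log10(a / b)


span = orders_of_magnitude(HIGH_EV, LOW_EV)
gap_to_lhc = orders_of_magnitude(HIGH_EV, LHC_PROTON_EV)
top_energy_j = HIGH_EV * EV_TO_JOULE

print(f"range spans {span:.0f} orders of magnitude")
print(f"top of range is {gap_to_lhc:.1f} orders above the assumed LHC energy")
print(f"top of range in joules: {top_energy_j:.1f} J per particle")
```

The last line is the striking one: a single particle at the top of that range carries a macroscopic amount of energy.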

I have a question about entanglement. Can particles which exist in superposition ever entangle by themselves naturally? I understand that entanglement was predicted and that experiments show it to be true. But does entanglement occur in nature or is it an artifact of observation and measurement?

One more question: If 2 particles are entangled and a group of unentangled particles is nearby, will the unentangled particles become entangled? In other words, can an entangled pair influence other particles and become a larger entangled set of particles?

Thanks

edit on 21-1-2018 by Phantom423 because: (no reason given)

a reply to: Arbitrageur

The logic we are using here is similar to the reasoning behind the security of antique rotor cipher machines. The Enigma cipher machines were sold to the German military on the strength of sequential processing calculations.

In 1964 when the movie Mary Poppins came out they were giving 6 to 10 year olds little polarized strips to play with.

One of my favorite examples of how a quantum computer might be so much faster than a serial processing computer is the game of chess.

Initially there are only 20 possible first moves that can be made by white.

20 times 20 possible board configurations after the black response.

All those first moves result in pieces ending up within just 4 rows of the chess board.

If you were to view the chess board edge on in 2 dimensions and observe the motions of the 8 pawns..

At 40 moves per side (80 plies) there would be more possible move sequences (roughly 10^120 by Shannon's estimate) than there are atoms in the universe, so you need a quantum computer to represent that much Hilbert space.
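The chess numbers can be checked with a few lines of Python. This is only a sketch: it uses Shannon's rough estimate of about 10^3 continuations per pair of moves (one white move plus the black reply) and a commonly quoted 10^80 atoms in the observable universe, both of which are assumptions. It counts move sequences, not distinct board positions.

```python
FIRST_MOVES = 20              # 16 pawn moves + 4 knight moves
ATOMS_IN_UNIVERSE = 10 ** 80  # assumption: common rough estimate


def sequences_after_reply(first_moves: int = FIRST_MOVES) -> int:
    """Move sequences after white's first move and black's reply."""
    return first_moves * first_moves


def shannon_game_tree(full_moves: int, per_move_pair: int = 10 ** 3) -> int:
    """Shannon-style estimate: about 10^3 continuations per pair of moves."""
    return per_move_pair ** full_moves


after_reply = sequences_after_reply()
tree_20_moves = shannon_game_tree(20)  # 20 moves per side = 40 plies
tree_40_moves = shannon_game_tree(40)  # 40 moves per side = 80 plies

print(f"after black's first reply: {after_reply}")
print(f"40 plies exceeds atom count: {tree_20_moves > ATOMS_IN_UNIVERSE}")
print(f"80 plies exceeds atom count: {tree_40_moves > ATOMS_IN_UNIVERSE}")
```

With these assumptions the estimate passes the atom count somewhere between 40 and 80 plies, so whether "40" means plies or full moves matters a lot.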

I could really write a book on this subject in general but obviously not for a question that you call a "nonsensical and silly question".

edit on 21-1-2018 by Cauliflower because: (no reason given)

a reply to: Phantom423

You get entanglement whenever two particles interact. The problem is keeping them entangled. Interaction with the environment messes it up rather quickly.

a reply to: Phantom423

I've read about experiments where 8 particles have been entangled.

a reply to: moebius

Good answer. In fact, some claims that entanglement is involved in consciousness (I hate to even call them fringe; let's say pseudoscientific) were addressed by Max Tegmark in this paper. He finds that decoherence occurs in less than a tenth of a trillionth of a second, whereas the relevant timescales of neurons are over a billion times longer, such as a thousandth of a second or more:

The importance of quantum decoherence in brain processes

Based on a calculation of neural decoherence rates, we argue that the degrees of freedom of the human brain that relate to cognitive processes should be thought of as a classical rather than quantum system, i.e., that there is nothing fundamentally wrong with the current classical approach to neural network simulations. We find that the decoherence timescales (~10^-13 to 10^-20 seconds) are typically much shorter than the relevant dynamical timescales (~0.001-0.1 seconds), both for regular neuron firing and for kink-like polarization excitations in microtubules. This conclusion disagrees with suggestions by Penrose and others that the brain acts as a quantum computer, and that quantum coherence is related to consciousness in a fundamental way.

On a related note, this is why nearly all entanglement experiments on particles other than photons are done at very low temperatures, often approaching absolute zero. There was an experiment in 2015 that observed entanglement of electron and nuclear spins at room temperature, which was presented as somewhat of a breakthrough.

Macroscopic quantum entanglement achieved at room temperature

So this is an accomplishment but still it was done on a semiconductor chip which doesn't occur naturally.

In quantum physics, the creation of a state of entanglement in particles any larger and more complex than photons usually requires temperatures close to absolute zero and the application of enormously powerful magnetic fields to achieve. Now scientists working at the University of Chicago (UChicago) and the Argonne National Laboratory claim to have created this entangled state at room temperature on a semiconductor chip, using atomic nuclei and the application of relatively small magnetic fields.

edit on 2018121 by Arbitrageur because: clarification

a reply to: Arbitrageur

I'm just beginning to research this; it's a long way from the intuitive childhood parallel processing experiments to processing within a super cooled plasma. Chess, unlike simple factoring or code cracking as per the CIA examples, would seem to require some kind of wave decoherence and remodulation after every move that changes the chess decision tree?

Most chess players visualize opening games differently from middle games and the end game trunk certainly seems a good allegory for quantum computing applications.

There is some information that suggests "functional quantum repeater nodes" have been implemented in hardware.

It's buried in what appears to be a lot of disinfo, so I guess it's still unobtainium.

edit on 21-1-2018 by Cauliflower because: (no reason given)

originally posted by: moebius

a reply to: Phantom423

You get entanglement whenever two particles interact. The problem is keeping them entangled. Interaction with the environment messes it up rather quickly.

If entanglement occurs every time two particles interact, then are most particles in the state of entanglement? Do the particles transition between superposition and entanglement?

I don't understand what you mean by "interaction with the environment". If a pair of photons is entangled and separate in opposite directions, they could be anywhere in the universe. What type of environment would cause them to untangle (or detangle - not sure of the terminology).

Do you have a few references where I could get some detail about this? Thanks for the reply.

One term is "decoherence" which can degrade or terminate entanglement and it can result from interaction with the environment. Here are a couple of links with excerpts:

phys.org...

Since entanglement is critical factor in quantum information, and decoherence can degrade or terminate entanglement (the latter referred to as entanglement sudden death), preserving coherence is vital to the development of quantum computing, quantum cryptography, quantum teleportation, quantum metrology and other quantum information applications.

stahlke.org/dan/publications/qm652-project.pdf

Decoherence attempts to explain the transition from quantum to classical by analyzing the interaction of a system with a measuring device or with the environment. It is convenient to imagine a quantum mechanical particle or system of particles as an isolated system floating in empty space. This simplification may be fine in some cases but in the real world there is no such thing as an isolated system. Typically a particle in flight will collide with air molecules or will emit thermal radiation that gets absorbed by the environment. Any interaction with the environment leads to an entanglement between the particle's state and the environment's state. As the entanglement diffuses throughout the environment the total state can no longer be separated into the direct product of a particle state and an environment state. What was once a superposition of particle states becomes a superposition of particle X environment states. At this point the particle ceases to act as if it were in a quantum superposition of states, instead acting as a statistical ensemble of states.

The end result of the decoherence process is that the particle will appear to have collapsed in a manner described by the Born probability law...

Decoherence tends to happen on an extremely fast timescale in most situations. The decoherence rate depends on several factors including temperature, uncertainty in position, and number of particles surrounding the system. Temperature affects the rate of blackbody radiation each radiated photon will interact with the environment. Uncertainty in position tends to create a wide range of interaction energies and thus a rapid spread in vector components. The number of particles in the surroundings affects the rate at which interactions can happen. The rule of thumb is that decoherence occurs when the environment gains enough information to learn something about an observable. In any case it takes only a few interactions before a system has become completely decoherent. A single collision with an air molecule is enough to cause a chain reaction of decoherence as the collision molecule in turn collides with its neighbors.

edit on 2018122 by Arbitrageur because: clarification

All this talk of particles. Particle farticle.

Where do particles come from?

Do particles exist in dreams?

originally posted by: mbkennel

a reply to: joelr

Hey, was I on or off target on my description of quantum fields? It's not really my specialty at all---I'm pretty sure you know more.

You take classical field theory and move to perturbative quantum field theory through lagrangians, symmetries, observables, phase space, propagators, gauge symmetries, quantization, interacting quantum fields, renormalization (among other things) and then get to something like quantum electrodynamics.

So it's a whole bunch of mathematical steps that eventually makes a predictive model that works really well.

a reply to: Arbitrageur

I am wondering about the transfer of energy through a system.

I know I can raise a block of material which weighs 500 pounds by using a block and tackle with 5 pulleys, giving me a 5:1 mechanical advantage insofar as lifting. My question is: if I raise the block to a height of 10 feet and suddenly release it, what amount of energy is then imparted to the rope as it falls? Does the 5:1 ratio still apply to the long end of the rope? Would a 100 pound resistance stop the fall, or would a much larger force be needed to stop the block from reaching the ground?

In classroom examples, the mass and energy of the rope are often neglected. Like all simplifications, that doesn't mean the mass and energy of the rope are zero, but if the rope weighs, say, 5 pounds, that is fairly small compared to 500 pounds. Since kinetic energy is proportional to mass times velocity squared, if the mass of the rope is low compared to the block, so is its energy. So most of the kinetic energy is in the falling block and not the rope.

originally posted by: tinymind

a reply to: Arbitrageur

I am wondering about the transfer of energy through a system.

I know I can raise a block of material which weighs 500 pounds by using a block and tackle with 5 pulleys, giving me a 5:1 mechanical advantage insofar as lifting. My question is: if I raise the block to a height of 10 feet and suddenly release it, what amount of energy is then imparted to the rope as it falls?

The rope is a means of transferring momentum and energy but it doesn't have much itself.

Obviously if you attach the 100 pound weight before the fall while the 500 pound block is still stationary, that would prevent the fall.

Does the 5:1 ratio still apply to the long end of the rope; would a 100 pound resistance stop the fall, or would a much larger force be needed to stop the block from reaching the ground ?

If you attach the 100 pound block immediately after the fall starts, like after the 500 pound block falls an inch, then it's not going very fast and the 100 pound block plus the friction and other losses in the rope/pulley system might be enough to slow down and stop the fall, especially if you use the not very good block and tackle of mine which has a fair amount of friction.

If, however, you attach the 100 pound weight when the 500 pound block has already fallen 8 feet, the block has reached a velocity of about 23 feet per second, and the 100 pound weight is not going to stop it from falling. It will stop the acceleration of the block, and if the 100 pound weight was stationary when you attached it to the rope, some momentum and energy will be transferred from the 500 pound block to the 100 pound weight. So the 500 pound block will be going slower than 23 feet per second for the remaining 2 feet, but it won't stop; it will still travel the remaining 2 feet at a slower velocity and hit the ground.
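Here is a rough Python sketch of those numbers. The free-fall speed is just v = sqrt(2gh). The speed after the weight is attached comes from a heavily idealized model that I am assuming for illustration: a massless, frictionless, non-stretching 5:1 tackle, and a stationary weight that is picked up instantly, so the rope end moves at 5 times the block speed and the block feels 5 times the rope's impulse. A real rope stretches and a real tackle has friction, so treat the second number as a sketch, not a prediction.

```python
import math

G_FT_S2 = 32.174  # standard gravity in feet per second squared


def free_fall_speed(drop_ft: float) -> float:
    """Speed after falling drop_ft from rest, ignoring friction."""
    return math.sqrt(2.0 * G_FT_S2 * drop_ft)


def block_speed_after_coupling(v_block: float, block_lb: float,
                               weight_lb: float, ratio: float) -> float:
    """Block speed just after a stationary weight is snatched up by the rope.

    Idealized impulse balance: the weight ends up moving at ratio * v_after,
    and the block receives ratio times the impulse the weight receives, so
    block_lb * (v_block - v_after) = ratio**2 * weight_lb * v_after.
    """
    return block_lb * v_block / (block_lb + ratio ** 2 * weight_lb)


v_before = free_fall_speed(8.0)
v_after = block_speed_after_coupling(v_before, 500.0, 100.0, 5.0)

print(f"block speed after falling 8 ft: {v_before:.1f} ft/s")
print(f"block speed after coupling:     {v_after:.1f} ft/s")
print(f"time to cover the last 2 ft:    {2.0 / v_after:.2f} s")
```

In this idealized model the 100 pound weight exactly balances the 500 pound block through the 5:1 tackle, so after the jolt the block keeps moving at the reduced speed instead of stopping.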
