originally posted by: Arbitrageur

I just demonstrated nearly 200 sigma with a not so great measurement system giving us plus or minus 3 sigma of plus or minus 3%. Do you see this now?

Yes. I see what the 23 sigma claim is now. Thank you.

I did what so many do to me; it is all too easy to do! 1) I saw a claim I believed to be fantasy (faster than light motion). 2) I saw a further claim (in this case a claim of extreme accuracy, 23 sigma) to support the first claim. 3) I rushed to judge that the second claim was incorrect as well (in this case believing it was an assertion that a measurement was made to 23 sigma). I appreciate the clarity of your explanation of my misstep.

edit on 29-6-2017 by delbertlarson because: Better phrasing

There are several issues with this. Your first comment about infinite numbers between 0 and 1 was so abstract as to be almost meaningless, which you have somewhat addressed here and maybe you should have used this phrasing to begin with. You have removed some of the abstraction by talking about "a indestructible machine could start counting from 0 and never reaches 1". It's still somewhat abstract since it's likely impossible to build and the numbers don't seem to correlate with anything in the real world, but it's a valid thought experiment like Maxwell's demon which is also unlikely to be built.

originally posted by: zundier

The fact that density can vary within random values from the exact number of molecules that you pointed, doesn't prove that a indestructible machine could start counting from 0 and never reaches 1.

If you set aside the impossibility of building the indestructible machine, I don't think anybody familiar with math would object to the rest of your thought experiment; however, I don't see the point. If the numbers don't relate to anything in the real world it's still somewhat abstract. You didn't seem to understand "abstract" so I tried to explain this by providing an example that wasn't abstract, namely measurements of the density of water. Once you get rid of the abstraction, at least in that example, there's no longer an infinity of values between a density of 0 and 1 for a cc of water: you can only add or subtract whole water molecules, of which there are a finite number, so only a finite number of densities are possible in that case.
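To put rough numbers on the water example, here is a minimal sketch. It assumes pure water with an approximate molar mass of 18.015 g/mol; the function names are made up for illustration only.

```python
# Rough count of water molecules in one gram of water, and the smallest
# density change you can make by adding or removing a single molecule.
AVOGADRO = 6.02214076e23      # molecules per mole (exact SI value)
MOLAR_MASS_WATER = 18.015     # grams per mole (approximate, assumed here)


def molecules_in_water(mass_grams: float) -> float:
    """Number of water molecules in the given mass of water."""
    return mass_grams / MOLAR_MASS_WATER * AVOGADRO


def density_step(volume_cc: float = 1.0) -> float:
    """Change in density (g/cc) from adding or removing one molecule."""
    mass_of_one_molecule = MOLAR_MASS_WATER / AVOGADRO
    return mass_of_one_molecule / volume_cc


n = molecules_in_water(1.0)
step = density_step(1.0)
print(f"molecules in 1 g of water: {n:.3e}")
print(f"density step per molecule in 1 cc: {step:.3e} g/cc")
```

The count is enormous but finite, so the number of possible densities between 0 and 1 g/cc in that one cc is finite too.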

Quantum mechanics provides another smaller barrier to infinite variability because of packets of energy called "quanta". They are the smallest steps you can have between energy levels and they do not permit infinite variability in energy so again the idea that there are infinite numbers between 1 and 0 is an abstraction not really consistent with what we know about "stepped" energy levels resulting from quanta of energy.

My analysis is that you are using a mathematical abstraction to suggest math is somehow a problem dealing with the real world. If you just want to play with math and see what it can do, abstractions are fine, but if you want to say it's a problem with how science describes the real world then you have to abandon your abstractions and discuss some examples where you think the application of math is a problem in the real world.

Therefore, the suggestion of this dilemma is that math - the fundamental tool to physics - it's explicitly contrary to its very essential objective - that is logic. Since science is mostly based on it, and math itself has this irrationality embeded within it, I wonder if we're missing an additional tool beyond mathematics?

So I think that this whole idea about how many numbers are between 0 and 1 is a complete red herring which you should never have discussed at all as it's completely irrelevant to the real problems with science, and there are real problems.

George Box said something like "All models are wrong, some are useful." That sums up the problem with science for me. We have nature on the one hand, and on the other hand we want to make predictions about how it works, so we make models. The problem with models is they will always be imperfect representations of nature itself. As Box astutely observed, this problem can be disregarded if you can make the model accurate enough to be useful. The most famous example of a wrong but useful model still in use today is Newton's model. It still does a good job predicting most motions of the planets, but it won't fully predict the precession of Mercury's perihelion; you need Einstein's improved relativity model to do that.

I think Einstein himself felt that his model was still an imperfect representation of nature and wanted to find a better one, and I think most scientists probably agree there are problems with our models in trying to predict things like the structure or density of the center of a black hole.

So our models are wrong, we know that or at least I do and I take it as a given, but they are extremely useful within their respective range of applicability. We just need to be aware of what that range of applicability is and not try to use the model outside it. So you can probably use Newton's model to plan a mission to Mars but not to build a particle accelerator, because it works pretty well in the first example but not at all in the second.

So to me there's no question all models are wrong, imperfect representations of nature. The question isn't whether they are wrong; the question is how you make the model less wrong, so it is useful over a wider range of applications, along the lines of Einstein's model being an improvement over Newton's model. The better model is still going to be very dependent on the math, and there's no getting around that that I can see.

So how do we find these better models? The same way Einstein did: challenge existing assumptions. I don't know if he'll come up with a better model or not, but Nima Arkani-Hamed is certainly doing this. He, like me, is well aware that our current models are wrong. He's also aware that this is not a very helpful thing to say, and that what is really needed from theoretical physicists isn't just to say it's wrong, but to then suggest what is right instead. As he accurately describes, that is very, very hard to do, so this in a nutshell is one of the barriers to advancement in science, at least in his field of theoretical physics. We need to keep coming up with better wrong models that are less wrong than the last one, but this is getting harder and harder to do, as Hamed explains here:

In conversation with Nima Arkani-Hamed

41:50 How does your job work?

(Nima explains how it might seem like it would be easy to just dream up new stuff like the Higgs then wait around 50 years for the experiment to be conducted which proves 99% of the ideas wrong)

44:30 "things don't work that way...we don't know the answers to all the questions, in fact we have very profound mysteries. But what we already know about the way the world works is so constraining that it's almost impossible (since we have to change something...), it's almost impossible to have a new idea which doesn't destroy everything that came before it. Even without a single new experiment, just agreement with all the old experiments, is enough to kill almost every idea that you might have....

It's almost impossible to solve these problems, precisely because we know so much already that anything you do is bound to screw everything up. So if you manage to find one idea that's not obviously wrong, it's a big accomplishment. Now that's not to say that it's right. But not obviously being wrong is already a huge accomplishment in this field. That's the job of a theoretical physicist."

a reply to: delbertlarson

Thanks for the reply. I'm glad my explanation helped.

edit on 2017629 by Arbitrageur because: clarification

a reply to: pfishy

I understand the logic of what you're trying to do (sort of, maybe), however your approach is somewhat flawed. For example if you think the motion of the Milky Way and Andromeda galaxies are offsetting expansion, then what's offsetting the expansion of a meter long measuring stick? If the expansion was occurring everywhere, the meter stick would also be getting longer, but the expansion is not occurring everywhere and the meter stick is not getting longer due to other forces keeping the stick the same length.

math.ucr.edu...

(ditto the galaxy, the local group)? Probably. You have to go outside the local group to measure cosmological expansion. I think using the 10 megaparsec distance as a starting point would be a good idea, which is way farther than Andromeda at only 0.45 megaparsecs away:

In newtonian terms, one says that the Solar System is "gravitationally bound" (ditto the galaxy, the local group). So the Solar System is not expanding.

Hubble's Law

So you can apply Hubble's law to not less than 10 megaparsecs and not more than a few hundred megaparsecs. Within that range my guess would be somewhere around 70 km/s per megaparsec as a value for "Hubble's constant" which is the relationship between recessional velocity versus distance, but you can see a whole list of computed values at that link. 70 is about what WMAP was suggesting, but Planck Mission came up with a little less and Hubble Telescope came up with a little more. Any of those could be right or they could all be wrong but I don't think 70 is too far off if it's wrong.

Hubble's law is the name for the observation in physical cosmology that:

Objects observed in deep space (extragalactic space, 10 megaparsecs (Mpc) or more) are found to have a Doppler shift interpretable as relative velocity away from Earth;

This Doppler-shift-measured velocity, of various galaxies receding from the Earth, is approximately proportional to their distance from the Earth for galaxies up to a few hundred megaparsecs away.
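As a quick illustration of the proportionality described above, here is a minimal sketch of Hubble's law, v = H0 × d. It assumes the roughly 70 km/s per megaparsec guess mentioned earlier; the function name and the hard 10 Mpc cutoff are illustrative choices, not anything taken from the linked pages.

```python
# Hubble's law sketch: recession velocity is proportional to distance.
H0_KM_S_PER_MPC = 70.0   # assumed value of the Hubble constant
MIN_DISTANCE_MPC = 10.0  # below this, local gravity dominates


def recession_velocity(distance_mpc: float, h0: float = H0_KM_S_PER_MPC) -> float:
    """Recession velocity in km/s for a galaxy at the given distance.

    Only meaningful from about 10 Mpc up to a few hundred Mpc; past that
    a full cosmological model is needed, which this sketch does not attempt.
    """
    if distance_mpc < MIN_DISTANCE_MPC:
        raise ValueError("Hubble's law should not be applied below about 10 Mpc")
    return h0 * distance_mpc


for d in (10.0, 50.0, 100.0):
    print(f"{d:6.1f} Mpc -> {recession_velocity(d):8.1f} km/s")
```

Swapping in a slightly lower or higher value for `h0` shows how much the Planck-like and Hubble-Telescope-like estimates would shift the velocities.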

To figure out what happens after a few hundred megaparsecs, you need to assume a model like ΛCDM which is used in the reference I posted a few posts before your question:

Expanding Confusion: common misconceptions of cosmological horizons and the superluminal expansion of the Universe

See Figures 1 and 2 which show ΛCDM model projections beyond the few hundred megaparsec limit of Hubble's Law applicability.

edit on 2017629 by Arbitrageur because: clarification

a reply to: Arbitrageur

Thank you again for the response. As a quick side note, this thread is the only one I have continuously monitored and have interacted with consistently since joining 2 years ago. I absolutely love this discussion. Thank you.

The references I read listed Andromeda as .78 mpc

Which seems to equate nicely with the 2.5 mly distance it is normally measured as. But that is not the important point here.
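For anyone who wants to check the conversion, a minimal sketch follows. It assumes about 3.2616 light years per parsec; the function name is just for illustration.

```python
# Convert megaparsecs to millions of light years.
LIGHT_YEARS_PER_PARSEC = 3.2616  # approximate conversion factor


def mpc_to_mly(distance_mpc: float) -> float:
    """Megaparsecs to millions of light years (the 'mega' prefix cancels)."""
    return distance_mpc * LIGHT_YEARS_PER_PARSEC


andromeda_mly = mpc_to_mly(0.78)
print(f"0.78 Mpc is about {andromeda_mly:.2f} million light years")
```

That comes out close to the 2.5 million light year figure.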

From what I thought I knew, gravity will hold galaxies and structures together, but the fabric of spacetime expands regardless. Basically, that is to say that although likely impossible to discern at such scales, the length between your fingertip and wrist is expanding at the same rate as an equal length of empty space, glue, Axlotl tanks or fingers anywhere else in the Cosmos.

edit on 30-6-2017 by pfishy because: (no reason given)

a reply to: Arbitrageur

Thanks for sharing the video from Nima Arkani-Hamed. It continually helps to get more information on how the status quo is thinking, and you post some very good videos with such information. Ever since grad school I have believed that the turn away from classical thinking was a wrong turn, and the video brought up a few things that trigger the following comments.

1. Nima commented how the old thinking concerning the classical radius of the electron was wrong. I think he used terminology similar to "wrong", but in any event I was left with that impression. Not "experiment suggests that it is wrong", but rather, "wrong". The video is long, and I don't want to be a lawyer here, so others can correct me if I am a bit off base on that. He also said the classical theory was that the electron was a shell of charge. My thoughts have always been that experiment has simply indicated that the charge was more centralized than the classical radius of the electron. That would indicate that the electron might itself be made of something much smaller, and that something might have much more mass, than what the original classical model stated. Yes, it could be the B preon, but this isn't a plug for my model, the point is that it might be something else, perhaps even smaller and more massive than the B preon. I also believe it would not be a shell of charge, but more likely a solid sphere, or even more likely some spherically symmetric distribution, perhaps a Gaussian. Or the size, shape, and density itself might be determined by the forces present and any binding it is a part of. But the critical elements of my thinking on this are two: I believe nature not to consist of point-like particles; and my thinking is classical.

1.a. In my view it is the nature of point-like particles that is getting us into all the problems we have with infinities. Yet, special relativity is a point-like theory in four space. If entities have any finite extent, then things could happen at one extreme end of the entity "before" they happen at the other extreme end, but with relativity we don't know what "before" is, since it is relative. Hence, relativity is a point-like theory. However, we don't have that problem with Lorentz's derivation of the Lorentz equations. Additionally, I have shown that even the length contraction is not needed to arrive at the Lorentz equations.

1.b. My discussion here is classical. It uses terms such as shell of charge, solid sphere, spherically symmetric distribution, point-like, finite extent, extreme end, and before. These terms are all classical descriptions of things. Modern physics uses such terms on occasion, but it is more to dumb things down for the public than to describe the thinking. Rather, modern physics starts with principles, develops math, and compares the mathematical predictions against experimental results. Modern physics abandons the underlying conceptual models of physical entities and instead uses the underlying principles as a basis for the math. This leads to a rather confusing description when trying to explain what is going on. The clearest explanation Nima gave was his definition of the electron as being a spin one half solution of some specific group. I, being a classical physicist, have forgotten the name of the group, but I am quite certain he was exactly correct in his description - and he was describing math, not the physical bodies I describe here.

2. Nima made mention of how the "big microscope" of the LHC was looking at what the vacuum was made of. I have never looked at things that way at all. My view is that we are accelerating existing entities and smashing them off of one another. When we do so we create a lot of energy in very small volumes during collisions. We then see what that energy makes. It is not required that the vacuum is constantly making these things (even virtually) when the beams are not present. At least that is my view.

3. Space-time must not exist. One of the "wow" moments was the discussion involving an assertion that we now know that space-time does not exist. (Again, my recollection of the exact words might be a bit off, but I recall that being the gist of it.) From the discussion, what I believe was meant was that at some level, relativity must be wrong. But such statements are two entirely different things. To say a theory (relativity) must at some level be wrong is completely different from saying that space-time doesn't exist. In my view, this use of language is what a magician does, which is to distract so as to induce awe. I am not a fan of such things in science.

I also follow this thread here and only occasionally check in on others. Thanks for maintaining it, and I look forward to any comments you or others may have on the above thoughts.

I'm afraid your comprehension of what Hamed said is way off. He said theoreticians of a century or so ago realized that there was a problem if the electron was a point, which was that the energy density of the point would tend toward infinity, so how could the electron move around if it's dragging this infinite energy around with it? He also said they were right to be concerned about that because with the model they were using, it was a problem. They tried to solve the problem by considering the electron to not be a point-like particle, which would require the size to be on the order of 10^-13 cm to avoid the point-like problem, but they could never make that work, and further we now know that Penning trap experiments have constrained the electron size to less than 10^-22 m.

originally posted by: delbertlarson

1. Nima commented how the old thinking concerning the classical radius of the electron was wrong. I think he used terminology similar to "wrong", but in any event I was left with that impression. Not "experiment suggests that it is wrong", but rather, "wrong". The video is long, and I don't want to be a lawyer here, so others can correct me if I am a bit off base on that. He also said the classical theory was that the electron was a shell of charge. My thoughts have always been that experiment has simply indicated that the charge was more centralized than the classical radius of the electron. That would indicate that the electron might itself be made of something much smaller, and that something might have much more mass, than what the original classical model stated. Yes, it could be the B preon, but this isn't a plug for my model, the point is that it might be something else, perhaps even smaller and more massive than the B preon.

The ultimate solution according to Hamed was to come up with a different model using quantum mechanics which showed that at a scale of 10^-11 cm, 100x larger than the 10^-13 cm needed to solve the energy density problem, the roiling cloud of quantum mechanical fluctuations removes the point-like picture of the electron.
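The two length scales can be checked with a short calculation. This is a sketch under assumptions: I'm taking the 10^-13 cm figure to be the classical electron radius, e²/(4πε₀mc²), and the 10^-11 cm figure to be the reduced Compton wavelength, ħ/(mc). The video doesn't give formulas, so that identification is mine. Constants are CODATA values typed in by hand.

```python
import math

# Physical constants in SI units (CODATA 2018 values).
ELEMENTARY_CHARGE = 1.602176634e-19   # coulombs
VACUUM_PERMITTIVITY = 8.8541878128e-12  # farads per meter
ELECTRON_MASS = 9.1093837015e-31      # kilograms
SPEED_OF_LIGHT = 299792458.0          # meters per second
HBAR = 1.054571817e-34                # joule seconds

# Classical electron radius: size at which the electrostatic self-energy
# is comparable to the rest energy m c^2.
classical_radius_m = ELEMENTARY_CHARGE**2 / (
    4 * math.pi * VACUUM_PERMITTIVITY * ELECTRON_MASS * SPEED_OF_LIGHT**2
)

# Reduced Compton wavelength: scale where quantum fluctuations matter.
compton_reduced_m = HBAR / (ELECTRON_MASS * SPEED_OF_LIGHT)

classical_radius_cm = classical_radius_m * 100.0
compton_reduced_cm = compton_reduced_m * 100.0
ratio = compton_reduced_cm / classical_radius_cm

print(f"classical electron radius:  {classical_radius_cm:.3e} cm")
print(f"reduced Compton wavelength: {compton_reduced_cm:.3e} cm")
print(f"ratio: {ratio:.1f}")
```

Under those assumptions the ratio comes out near 137, the inverse of the fine structure constant, which is in line with the "100x larger" figure quoted from the video.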

Your view is correct, if somewhat limited. The more comprehensive model which your view doesn't seem to include (correct me if I'm wrong) is that the Higgs boson is an excitation of the Higgs field, which theoretically has a non-zero vacuum expectation value of 246 GeV that underlies the Higgs mechanism of the standard model. So I suppose you could say Hamed's statement about "looking at the vacuum" through the "lens" of the LHC was not correct if you had a different model which predicts the Higgs boson observed at the LHC, doesn't include any such vacuum expectation value, and is consistent with other results. His statement was somewhat of a metaphor for how the experimental result can be viewed through the lens of the standard model which predicted the Higgs.

2. Nima made mention of how the "big microscope" of the LHC was looking at what the vacuum was made of. I have never looked at things that way at all. My view is that we are accelerating existing entities and smashing them off of one another. When we do so we create a lot of energy in very small volumes during collisions. We then see what that energy makes. It is not required that the vacuum is constantly making these things (even virtually) when the beams are not present. At least that is my view.

Going back to my earlier explanation of Newton's model, whether you consider that right or wrong depends on how you look at it so I still prefer George Box's context that all models are wrong and in that view we have no doubt that space-time and relativity in addition to Newton's laws are all wrong. Clearly Hamed thinks space-time is wrong, and to the extent they are a part of both relativity and quantum mechanics, both relativity and quantum mechanics are wrong or you could say they are using them as a crutch.

3. Space-time must not exist. One of the "wow" moments was the discussion involving an assertion that we now know that space-time does not exist. (Again, my recollection of the exact words might be a bit off, but I recall that being the gist of it.) From the discussion, what I believe was meant was that at some level, relativity must be wrong. But such statements are two entirely different things. To say a theory (relativity) must at some level be wrong is completely different from saying that space-time doesn't exist. In my view, this use of language is what a magician does, which is to distract so as to induce awe.

You have to also put "wrong" in the context of Einstein's model showing Newton's model wrong, because as Hamed points out we no longer have any real revolutions in physics which show the old models completely wrong, all the new models have to reduce to the old models in some way which is at the foundation of Einstein's theory and how it reduces to Newton's model.

Maybe this will help, in another lecture he uses the analogy of the view of determinism in something like 1780, that physicists at that time had no way to doubt determinism yet we now know that determinism is "wrong" in the sense that it has been shown to not exist in our quantum mechanics experiments. What we can also show is how apparent determinism "emerges" from the QM model on large scales that physicists of 1780 would have been looking at, so in this sense determinism does exist but since it "emerges" it's referred to as "emergent" and is not fundamental, in fact fundamentally it doesn't exist.

It is in this context of the determinism example and analogy that Hamed and his colleagues feel that time might also be "emergent". So as with determinism, you can't say the "old models" of relativity and QM give wrong predictions using space-time; they obviously don't give wrong predictions, and neither did the determinism of the 1780s for the experiments of that time. Somewhere around 29 minutes Hamed is asked "You're fairly certain space-time has got to go?". His reply was "I think all of us are almost certain that space-time has got to go" and I was wondering who he meant by "all of us". I don't know if he meant all theoretical physicists, or maybe just his colleagues working at the Institute for Advanced Study. Either way I found that somewhat intriguing, and it makes it sound like this is not an isolated renegade idea.

In these discussions as with many physics discussions, there is much detailed information to be considered which is simply beyond simple characterizations like "right" or "wrong" and the only way to understand the real issues is to dig past those simple characterizations into the details of the real issues. This is also a big part of the reply I'm about to write to pfishy on his question about the metric expansion of space.

Yes, we often do give glib characterizations to the general public, because most people in the general public would prefer to have it dumbed down for them and either don't have the aptitude or the time to dig into the details to understand the complexity of issues beyond that. But it is in that field of rich details where physicists do their work, and I'm afraid most of the general population won't understand that. In fact physics is so specialized now that it can become difficult for physics specialists to understand the work of other specialists working in different areas of physics.

Thanks for the feedback, glad you find it interesting.

originally posted by: pfishy

a reply to: Arbitrageur

Thank you again for the response. As a quick side note, this thread is the only one I have continuously monitored and have interacted with consistently since joining 2 years ago. I absolutely love this discussion. Thank you.

I'd like to say I was just testing you to see if you were on your toes, but that was just pure laziness on my part because I had already spent enough time on that reply and I was too lazy to click another link. My off the top and incorrect idea of the distance was about 2 mly, which I knew should have been well above .45 mpc, so I probably should have investigated further, but I just copied the .45 from the search result without clicking the link. Now that you pointed out the discrepancy, I can inform you the search box wasn't completely wrong; rather, the result was obsolete. .45 mpc was actually the first distance estimate, published in 1922, but of course I concur with your corrected, more recent estimates. Anyway the point I was trying to make was that anything under 10 Mpc should probably be avoided, and that point stands whether using the 1922 estimate or the more recent one.

The references I read listed Andromeda as .78 mpc

Which seems to equate nicely with the 2.5 mly distance it is normally measured as. But that is not the important point here.

Yes this view was apparent in the context of what you wrote which is why I provided the link refuting it, so I can consider one of three things might have happened regarding the link I included in my reply to try to help you correct that view:

From what I thought I knew, gravity will hold galaxies and structures together, but the fabric of spacetime expands regardless. Basically, that is to say that although likely impossible to discern at such scales, the length between your fingertip and wrist is expanding at the same rate as an equal length of empty space, glue, Axlotl tanks or fingers anywhere else in the Cosmos.

1. You either didn't read the link at all, or

2. You read the link and didn't understand it, or

3. You read the link and understood that it was telling you your understanding was wrong, but you rejected the explanation.

So, which was it?

Anyway, in the context of my earlier reply to DelbertLarson, the link's explanation, while correct to some extent, was somewhat dumbed-down or simplified and so maybe slightly incorrect, as dumbed-down explanations usually are. So I'll try to present a more accurate view here, but first a simpler example to illustrate the concept.

True or false: If you push a paper clip off your desk and it falls to the floor, the earth also moves toward the paper clip.

Now if I say "true" can be a correct answer and "false" can also be a correct answer, this sounds totally illogical because simple logic would dictate that the true and false answers are mutually exclusive and if one is right the other must be wrong. The answer to that question can't be understood using a dumbed-down "true" or "false", and while it's an extremely simple question, understanding all the issues involved in answering it can get complex.

So how can true be a correct answer? If you consider a two body gravitational analysis, the earth is one body and the paper clip is another body, and they each have mass and are therefore attracted to each other. Let's start with bigger bodies like Earth and Venus, and let's say you're omnipotent so you can temporarily move Earth and Venus to somewhere out in the boonies where they are nearly free from other gravitational influences so you can see what happens. So you put them "motionless" relative to each other about the Earth-moon distance apart and let go and what happens? Both bodies start "falling" toward each other, and since Venus only has about 81% of the mass of the Earth it falls a little faster.

So you could make the analogy that the paper clip is analogous to Venus in a two body falling problem, but the paper clip is much less massive than Earth so most of the motion will be in the paper clip and only a tiny bit in earth. You can use the same math you used to calculate Earth's motion toward Venus to calculate the Earth's motion toward the paper clip and you'll get an answer about how far the earth moves, which is a very small amount, but mathematically you would be right in saying it's calculated from the same model.
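To put a rough number on "a very small amount", here is a minimal sketch of the two body calculation. It assumes the pair is isolated, so their common center of mass stays put and each body covers a share of the closing distance in inverse proportion to its mass. The paper clip mass (about 1 gram) and the desk height (0.75 m) are assumed values for illustration, not figures from this thread.

```python
M_EARTH = 5.972e24   # kg, approximate mass of the Earth
M_CLIP = 1.0e-3      # kg, assumed mass of a paper clip (about 1 gram)
DESK_HEIGHT = 0.75   # m, assumed height of the desk


def shift_of_larger_body(m_small: float, m_large: float, closing_distance: float) -> float:
    """Distance the larger body moves while the two bodies close a given gap.

    With no outside forces the center of mass is fixed, so the larger body
    covers the fraction m_small / (m_small + m_large) of the gap.
    """
    return closing_distance * m_small / (m_small + m_large)


earth_shift = shift_of_larger_body(M_CLIP, M_EARTH, DESK_HEIGHT)
print(f"Earth moves about {earth_shift:.2e} m toward the paper clip")
```

With these assumed inputs the result is on the order of 1e-28 m, which is more than ten orders of magnitude smaller than a proton.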

That sounds reasonable at first glance, so how can a "false" answer be true?

Well, for one thing, you've got elevators all over the world, with masses far greater than a paper clip, moving "up and down" in various orientations which probably don't cancel each other out completely, so you've probably got some small "wiggling" motions of the center of mass of the earth from all these perturbations. There are even more from the many 1.0 magnitude earthquakes occurring all the time, which again probably dwarf any effect of the earth moving toward a falling paper clip, and I'm sure you can think of many other perturbations, like waves crashing at beaches with masses thousands of times greater than a paper clip. So you have these tiny motions, many of which cancel each other out but not all, causing small "wiggling" motions of the Earth. Whether they can be measured or not is not as important as the fact that they are orders of magnitude more significant than the paper clip. So we could say any effect of the falling paper clip would be impossible to distinguish in any real experiment, because the "signal" of the motion toward the paper clip is completely drowned out by the "noise" from moving elevators, earthquakes, waves crashing, etc. In a very real sense, then, it is false to say the Earth moves toward the paper clip when it falls.

The accurate answer would be to say the Earth is affected by many influences, most of which are so much larger than any possible motion toward the paper clip that any such motion of the Earth toward the paper clip can be disregarded as immeasurable and irrelevant. I believe that this "false" answer is probably more correct than the "true" answer and it is in this type of context that the link I provided was written.

I think the claim that intramolecular forces prohibit expansion in things like meter sticks is likely to be completely true. When you get into things like the Solar system, you find that the moon is moving away from the Earth and the Earth is moving away from the sun so this confirms expansion right? Wrong, those motions are a result of Newtonian mechanics and the tidal transfer of energy to the orbiting bodies.

So let's say you want to do the math like in the paper clip example, to see how much the solar system is expanding. Here is a paper which has done that:

On the influence of the global cosmological expansion on the local dynamics in the Solar System

You can read the paper yourself, but my interpretation is that the answer is much the same as the answer to whether the Earth falls toward the paper clip, which is this: You can calculate a value, but it is likely to be completely impossible to measure and any "signal" drowned by the "noise" of other effects, so the link saying you can assume the value is zero is essentially correct, but feel free to do the math now that you have it.

edit on 2017630 by Arbitrageur because: clarification

originally posted by: Arbitrageur

... but they could never make that work and further we now know that penning trap experiments have constrained the electron size to less than 10^-22m.

From my understanding the 10^-22m number is an extrapolation, derived from composite particles like the proton:

|g - 2| = radius / compton wavelength

The question would be whether it applies to the electron at all.

That size limit I referred to was derived from an experiment using an electron in a Penning trap. Maybe you can show me exactly where they use a proton to extract the size limit but I see no mention of it here:

originally posted by: moebius

From my understanding the 10^-22m number is an extrapolation, derived from composite particles like the proton:

|g - 2| = radius / compton wavelength

A Single Atomic Particle Forever Floating at Rest in Free Space: New Value for Electron Radius

Note they say this result is 10^4 x smaller, so even if you question this result, the 10^4 larger result which preceded this is still a problem for the old model, since that's a limit of less than 10^-16 cm and it was determined that 10^-13 cm was required to solve the energy density problem of the old model according to Hamed.

Received 28 August 1987

The quantum numbers of the geonium "atom", an electron in a Penning trap, have been continuously monitored in a non-destructive way by the new "continuous" Stern-Gerlach effect. In this way the g-factors of electron and positron have been determined to unprecedented precision,

½g ≡ vs/vc ≡ 1.001 159 652 188(4),

providing the most severe tests of QED and of the CPT symmetry theorem, for charged elementary particles. From the close agreement of experimental and theoretical g-values a new, 10^4 × smaller, value for the electron radius, Rg < 10^-20 cm, may be extracted.

edit on 201771 by Arbitrageur because: clarification

a reply to: Arbitrageur

I'm afraid your comprehension of what Hamed said is way off.....

I don't believe the Bohr radius has anything to do with the question of whether the electron itself is pointlike. This would only help if we viewed the wavefunction as being the square root of the density of the electron, rather than the square root of the probability of where we find it. For decades I thought the former might be true, since the proton is quite small in the hydrogen atom, so if the force center (from the proton, as it too has a wavefunction) is smeared a little bit it likely wouldn't affect the spectral data much. However I realized that if the density of the electron was smeared out over the wavefunction this would have a significant effect in the positronium spectral data. I did the calculation and came to the conclusion that no, the electron charge does not have a size corresponding to the wavefunction radius. The quantum calculation (to get the spectrum right) requires that the potential function assumes the full charge at each point. But then the question becomes, as our region of analysis gets smaller can there be a smearing that indicates a small finite size? One much smaller than the Bohr radius or the classical radius? And I don't believe that question is answered yet.

As a further note on this - I did not prepare my positronium work for publication, and so it may have an error. I have prepared for publication my high velocity quantum mechanics work, it has been reviewed and accepted for publication, and I believe it is sound. You can have a pointlike electron within quantum mechanics as far as Coulomb is concerned - but not Ampere. The magnetic dipole-dipole potential (from the spins) goes as the inverse of r cubed, and that leads to infinities within quantum mechanics. This has bothered me since my undergrad days (when we have clarity of thought). So both from a standpoint of infinite self energy and from the standpoint of the dipole-dipole potential a point-like electron is really untenable.

The more comprehensive model which your view doesn't seem to include (correct me if I'm wrong) is that the Higgs boson is an excitation of the Higgs field.....

On the Higgs - my preon model predicts decay channels and mass for the observed events known as the Higgs (I show how it is really evidence of free preons being produced). However, my model predicts a mass consistent with only two of the four measurements made. We (you, I and ErosA433) discussed the state of that measurement some time ago, and I still think the standard deviation claimed is not correct. (There was never a further rebuttal of my last post on that. I tried to make my point clear, and don't know if it was accepted or not.)

The exposition of my thoughts on the Higgs is in one of my eighteen threads on the preon model. Unfortunately I don't know if anyone read that far. It was kind of a nasty problem. There was a lot to cover, and so things got too long to hold anyone's attention. But if I didn't cover it all, then the assumption would be (and should be) that the whole thing is likely just wrong. A bit of a catch-22. But yes, what is thought to be the Higgs was covered in my preon model.

But for the present purpose, even if my preon model is wrong (as it could be) there can of course be other explanations for what is presently known as the Higgs. It doesn't necessarily mean that the vacuum is creating and destroying whole universes on a continual basis and that sort of thing.

Hamed and his colleagues feel that time might also be "emergent"....

For me, time is the parameter that orders events. That's it. Purely classical. Space is Euclidean and three dimensional. Purely classical. It then becomes our job to explain things on that theatre. I get what you are saying, and brilliant people have been working on other concepts ever since Einstein. I simply believe we can still do it the old way. Just because light bends past a star doesn't mean space itself is warped - it could simply mean that the gravitational attraction law involves energy as well as mass. Stuff like that. We don't need to overthrow everything that is obvious to us from the time of our birth. We can, of course, do so, and it can even help to explore such things. But I think we can also make great progress from a classical footing. It's just not "in" at the moment.

Maybe not. Hamed's solution relies on the same model that, according to him, seems to suggest that you and I and everyone else should collapse into tiny black holes, and we don't understand exactly why we don't (do you remember that from his discussion?). Until that question is answered, I think it's fair to say more research is needed.

originally posted by: delbertlarson

I don't believe that question is answered yet.

We (you, I and ErosA433) discussed the state of that measurement some time ago, and I still think the standard deviation claimed is not correct. (There was never a further rebuttal of my last post on that. I tried to make my point clear, and don't know if it was accepted or not.)

I vaguely remember that discussion, and I haven't gone back and re-read it, which I might do at some point. My vague recollection is that Eros and I didn't seem to convince you that our views about standard deviation interpretation (which happened to agree with the research team that wrote the paper) were correct. I didn't think I was going to be able to convince you, so I dropped it, and I was a little surprised when you accepted my explanation about the 23 sigma, which you initially objected to in a more recent discussion.

I'm not saying Hamed is right, just that I'm open-minded to the line of research investigating whether time and/or space-time might be emergent. He explained his rationale for why he thinks space-time has to be emergent and he's more convinced by it than I am. I'm unconvinced, just open-minded.

For me, time is the parameter that orders events. That's it. Purely classical. Space is Euclidean and three dimensional. Purely classical. It then becomes our job to explain things on that theatre.

However, some researchers have claimed to have found some evidence of time being emergent. Even if true, this can only be said to apply to those specific experimental conditions and doesn't necessarily apply to the wide range of theories and phenomena that Hamed and his colleagues are investigating with respect to their ideas about "the doom of space-time".

Quantum Experiment Shows How Time ‘Emerges’ from Entanglement

Time is an emergent phenomenon that is a side effect of quantum entanglement, say physicists. And they have the first experimental results to prove it

Time from quantum entanglement: an experimental illustration

edit on 201771 by Arbitrageur because: clarification

originally posted by: Arbitrageur

Feel free now to ask any physics questions, and hopefully some of the people on ATS who know physics can help answer them.

Many things in physics make no sense.

Here's a question I asked my Physics Professor years ago. Maybe someone can answer this today.

Einstein's special relativity claims the mass of an object increases with speed, as seen by an observer that sees it moving.

Now that "mass" increases with the formula: m = m0/sqrt(1 - (v/c)^2), so becomes "infinite" when the object moves at the speed of light, v=c. So, we say nothing can travel at the speed of light that has mass, only massless things like the photon can travel at lightspeed.

However, although nothing can travel "at" the speed of light, that has mass, there's nothing in physics that prevents it from traveling very fast, near the speed of light.

Then, Einstein's General Relativity has equated "inertial mass", such as appears to the observer attempting to make the object go faster, with "gravitational mass", that causes two bodies to attract each other. These two types of mass are equivalent, in the theory of general relativity. Einstein says nobody can distinguish between them, because they have the same "effects".

Now, people working on Einstein's General Relativity discovered that when any object's "gravitational mass" increases beyond a certain point, that object "collapses" into a "black hole."

But, an observer watching a speeding object go faster and faster, will also see the mass of that object increase, until at some point that object will reach the "critical mass" for it to "collapse" into a "black hole", as seen by the observer.

Now Quantum Mechanics has introduced another twist, in that the vacuum is teeming with "particle + anti-particle" pairs popping in and out of existence all the time. Normally, these two annihilate each other almost as soon as they are created, so we don't notice this phenomenon, except in very careful experiments on the very small scale that can "see" these "vacuum fluctuations."

However, when this "vacuum fluctuation" occurs near a black hole, in many cases the "anti-particle" is sucked into the black hole, and the "particle" is expelled, causing the black hole to appear to "radiate a stream of particles" from its surface, or "event horizon" as it's called. This phenomenon is referred to in the literature: "that black holes have hair."

Now, here's the problem, and the question.

Einstein's relativity says that the "laws of physics remain the same, in any inertial reference frame."

An inertial reference frame is one that is moving with constant velocity relative to any other inertial reference frame.

So, here's a "paradox". In one reference frame, observer "A" sees object with mass just less than the critical mass to become a black hole, so he sees just "one" particle in the universe, when looking in that direction, around the moving object.

But, a second observer "B", moving with constant speed relative to "A", sees the same object moving slightly faster, just enough to observe that object exceed the "critical mass" and become a "black hole" in his reference frame. Thus, he sees the whole soup of particles being radiated away from this black hole that now has "hair."

Here's the question: How can two observers, A and B, both in inertial reference frames, disagree on the number of particles in the universe, when the laws of physics must remain the same in each reference frame?

How could B claim to see a "black hole", while A says there is none?

This question has puzzled me for decades, with no clear answer.

Maybe some smart person here can answer it.

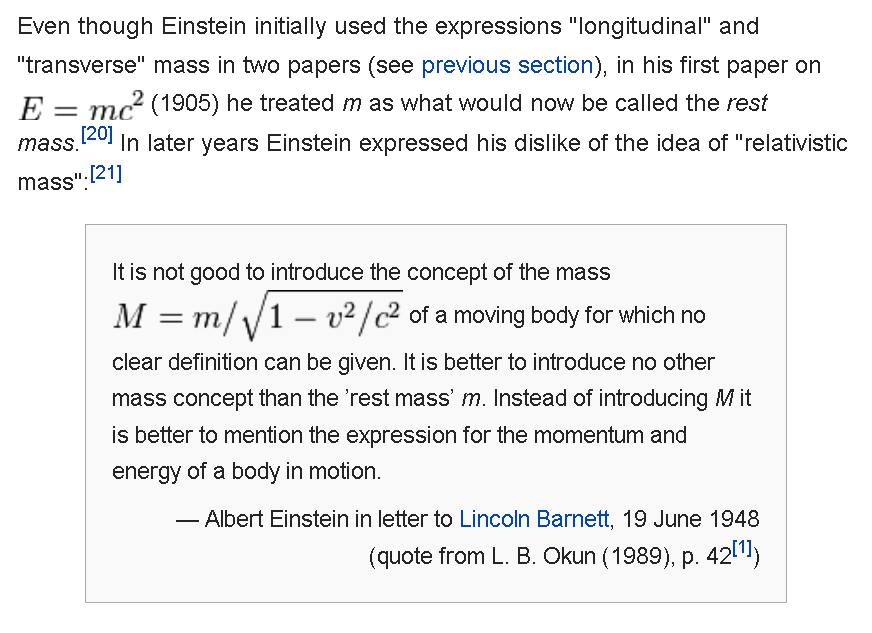

No it doesn't and Einstein specifically said he thought that idea which was being promoted by some others was not the correct way to look at it.

originally posted by: AMPTAH

Einstein's special relativity claims the mass of an object increases with speed, as seen by an observer that sees it moving.

So the problem was some professors who incorrectly in my opinion and in Einstein's opinion taught that idea about mass increasing, but most recent textbooks do not teach this idea anymore and some specifically say it's wrong. Mass does not increase, and people who say it does are contradicting Einstein. That's the answer.

www.abovetopsecret.com...

originally posted by: Arbitrageur

Here is the actual quote from Einstein:

Mass in special relativity

What increases at relativistic velocities are energy and momentum, not mass.
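A minimal sketch of that statement, using only the standard special relativity relations E = γ·m·c², p = γ·m·v and m²c⁴ = E² − (pc)². The 1 kg test mass and the chosen speeds are arbitrary assumptions for illustration, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def energy_momentum(rest_mass: float, v: float) -> tuple[float, float]:
    """Total energy (J) and momentum (kg m/s) of a body moving at speed v."""
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return gamma * rest_mass * C**2, gamma * rest_mass * v


def invariant_mass(energy: float, momentum: float) -> float:
    """Mass recovered from E^2 - (pc)^2 = (m c^2)^2; the same for every observer."""
    return math.sqrt(energy**2 - (momentum * C) ** 2) / C**2


m0 = 1.0  # kg, arbitrary test mass
for fraction in (0.0, 0.5, 0.9, 0.99):
    e, p = energy_momentum(m0, fraction * C)
    print(f"v = {fraction:.2f}c  E = {e:.3e} J  p = {p:.3e} kg m/s  "
          f"m = {invariant_mass(e, p):.6f} kg")
```

Energy and momentum climb as v approaches c, while the mass computed from them stays at the value it has in the rest frame.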

edit on 201771 by Arbitrageur because: clarification

originally posted by: Arbitrageur

No it doesn't and Einstein specifically said he thought that idea which was being promoted by some others was not the correct way to look at it.

...

What increases at relativistic velocities are energy and momentum, not mass.

Ok. But Einstein's relativity says MASS == ENERGY.

E = m.c^2

A body that increases its energy, will increase its mass, by the equivalence of mass and energy.

A hot star will "weigh" more than a cold star with the same number of particles.

The kinetic energy of those particles "contribute" to the total gravitational mass.

So, the problem still exists, that the observer B will see a heavier object because it has more energy.

The math is the same, even if we "re-label" the terms used.

a reply to: AMPTAH

The equivalence relationship does not mean mass and energy are the same thing, it means one can be converted to the other.

To see the difference look at a nuclear bomb video on youtube before and after it detonates. Before the detonation you see mass, after you see some of that mass has been converted to energy. It doesn't look the same before and after, because it's not the same.

However you have a point that should an object attain enough relativistic energy and momentum it might attain the appearance of a black hole to an observer, but it doesn't actually become a black hole. All that is explained here:

Can a Really, Really Fast Spacecraft Turn Into A Black Hole?

A real black hole appears to be a black hole from any reference frame; a fast moving object does not, so there's a huge difference. If you travel alongside the fast moving object (in the same reference frame) you can see it's not really a black hole, and it will never turn into a real black hole just because of its relativistic energy, because in its rest frame it has none. What appears in the other frames are observer effects.

edit on 201771 by Arbitrageur because: clarification

a reply to: Arbitrageur

Your exposition on the 23 sigma matter was clear, and I was clearly incorrect. I regret you were surprised that I would admit error in an area where I was clearly incorrect, as that has nothing but negative implications for my online presence, but OK, we move on.

On the standard deviation issue, I tried to be clear several times but I could not convince you nor Eros of what I view as a problem, nor could either of you show me where I was wrong. So let me try again, following your earlier lead and using a very simple numerical example to illustrate my point. Let's look at just two data sets A: (1.1, 1) and B: (1.3, 1.2). Now the mean of A is 1.05 and the mean of B is 1.25.

For A, the standard deviation is: sd_A = sqrt[[(1.1-1.05)^2+(1-1.05)^2]/2] = sqrt[[.05^2+.05^2]/2] = .05. For B, since I chose a simple case, sd_B also is .05.

Now the overall mean is 1.15, whether you take the mean of the means, or the mean of the data directly. And I assert that the overall standard deviation is sd_Total = sqrt[[(1.1-1.15)^2 + (1-1.15)^2 + (1.3-1.15)^2 + (1.2-1.15)^2]/4]

= sqrt[[.05^2 + .15^2 + .15^2 + .05^2]/4] = sqrt[[.0025 + .0225 + .0225 + .0025]/4] = sqrt[.05/4] = 0.1118.

But what I believe the two collaborations are doing is to first adjust (data correct) the means of the individual data sets so that each data set is centered on the overall weighted mean, and only then calculating the standard deviation after that data correction. So they adjust A by adding .1 to each point, A': (1.2, 1.1) so that the mean of that data is now the overall mean, and they adjust B by subtracting .1 from each point: B': (1.2, 1.1), again to make the mean of that data match the overall mean.

And then they calculate the standard deviation:

sd_Total' = sqrt[[(1.2-1.15)^2 + (1.1-1.15)^2 + (1.2-1.15)^2 + (1.1-1.15)^2]/4]

= sqrt[[.05^2 + .05^2 + .05^2 + .05^2]/4] = sqrt[[4 x .05^2]/4] = .05
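The arithmetic above can be reproduced with a short sketch. It uses the population standard deviation (dividing by N, as in the hand calculation) from Python's `statistics` module; the variable names are only illustrative, and the "shifted" sets follow the adjustment described above rather than any confirmed procedure of the collaborations.

```python
from statistics import mean, pstdev

A = [1.1, 1.0]
B = [1.3, 1.2]

# Population standard deviation of each set on its own.
sd_a = pstdev(A)
sd_b = pstdev(B)

# Standard deviation of all four points taken together, unadjusted.
combined = A + B
overall_mean = mean(combined)
sd_total = pstdev(combined)

# Shift each set so that its mean lands on the overall mean, then recompute.
a_shifted = [x + (overall_mean - mean(A)) for x in A]
b_shifted = [x + (overall_mean - mean(B)) for x in B]
sd_total_shifted = pstdev(a_shifted + b_shifted)

print(f"sd_A = {sd_a:.4f}, sd_B = {sd_b:.4f}")
print(f"overall mean = {overall_mean:.4f}")
print(f"sd_Total = {sd_total:.4f}")
print(f"sd_Total' = {sd_total_shifted:.4f}")
```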

Now in the collaboration case there are four data sets, and vastly more data points in each set than I have here. The additional data results in a situation where the overall standard deviation they state is less than the standard deviations of any of the individual sets. But the point is the same. If you have data sets that don't have aligned means, but rather, the individual means are separated by more than their own individual standard deviations, I believe the overall standard deviation should be larger than that of the individual sets, not smaller.

Do you see my point? Do you see a flaw? This seems pretty straight-forward to me.

I do admit that each of the data sets must have some systematic error that will need to be corrected. Once corrected, all data sets will indeed lie upon the same mean, and the standard deviation will be improved due to the additional statistics. But I don't believe it is honest to assume that the eventual systematic error will be that one that results in all individual sets lying on top of the weighted mean. One of the experiments might be more correct than the others, for instance.

If you know absolutely nothing about your data, this is probably the calculation method you would need to use and the calculation is correct. However as I already explained by painstakingly providing a detailed example where we know more about the data, which I think was a good analogy for the data on the Higgs, the problem with this method is that it ignores the additional information we have about the Higgs data and it arrives at the wrong result.

originally posted by: delbertlarson

For A, the standard deviation is: sd_A = sqrt[[(1.1-1.05)^2+(1-1.05)^2]/2] = sqrt[[.05^2+.05^2]/2] = .05. For B, since I chose a simple case, sd_B also is .05.

Now the overall mean is 1.15, whether you take the mean of the means, or the mean of the data directly. And I assert that the overall standard deviation is sd_Total = sqrt[[(1.1-1.15)^2 + (1-1.15)^2 + (1.3-1.15)^2 + (1.2-1.15)^2]/4]

= sqrt[[.05^2 + .15^2 + .15^2 + .05^2]/4] = sqrt[[.0025 + .0225 + .0225 + .0025]/4] = sqrt[.05/4] = 0.1118.
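The numbers in the quoted calculation can be checked with a short Python sketch. It assumes the same toy data, A = (1.1, 1) and B = (1.3, 1.2), and uses the population standard deviation (dividing by N) to match the formulas as written.

```python
from statistics import mean, pstdev

# Toy data sets from the quoted example (assumed values).
A = [1.1, 1.0]
B = [1.3, 1.2]

# Standard deviation of each set about its own mean.
sd_A = pstdev(A)
sd_B = pstdev(B)

# Standard deviation of all four points about the overall mean,
# with no adjustment of the individual means.
combined = A + B
overall_mean = mean(combined)
sd_total = pstdev(combined)

print(round(sd_A, 4), round(sd_B, 4), round(overall_mean, 4), round(sd_total, 4))
```

This gives about .05 for each set and about 0.1118 for the unadjusted combination, in agreement with the figures above.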

If you know something about the data, there are more ways of combining it than you can shake a stick at. Your guess at how the authors combined the Higgs data is probably a little off. I don't know exactly how they did it either, but my guess is they used something like pooled variance.

Yes, of course I see a huge flaw: the assumption that we don't know more about the data, when in fact we do. The variances of the data sets are similar, which supports treating them as having the same variance, and that makes them candidates for pooled variance or some similar technique. Unlike your 2nd example, where the combined standard deviation ends up the same as the individual deviations, the pooled variance method results in a standard deviation that is lower, which is exactly how you described the published results in the paper: "The additional data results in a situation where the overall standard deviation they state is less than the standard deviations of any of the individual sets." That is one of the features of the pooled variance method.

And then they calculate the standard deviation:

sd_Total' = sqrt[[(1.2-1.15)^2 + (1.1-1.15)^2 + (1.2-1.15)^2 + (1.1-1.15)^2]/4]

= sqrt[[.05^2 + .05^2 + .05^2 + .05^2]/4] = sqrt[[4 x .05^2]/4] = .05

Now in the collaboration case there are four data sets, and vastly more data points in each set than I have here. The additional data results in a situation where the overall standard deviation they state is less than the standard deviations of any of the individual sets.

Do you see my point? Do you see a flaw? This seems pretty straight-forward to me.

Using the pooled variance method, here's the calculation I come up with for standard deviation using your numbers:

Standard deviation using pooled variance method = sqrt [(.0025 + .0025)/(2+2)] = 0.035

So the pooled standard deviation of 0.035 is indeed less than the .05 and .05 standard deviations of the individual data sets, which is one of the features of this method. Again, I don't know if this is the exact technique used, but if not, it was probably something similar.
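For anyone who wants to reproduce that figure, here is a minimal Python sketch that follows the formula exactly as written in this post: the two variances are summed and divided by the total number of points. It assumes the toy data A = (1.1, 1) and B = (1.3, 1.2) and population variances of .0025 each. The variable name `combined_sd` is only illustrative. The usual textbook pooled-variance estimator weights each variance by its degrees of freedom, so this sketch should not be read as that estimator or as the method used in the paper.

```python
from math import sqrt
from statistics import pvariance

# Toy data sets from the example (assumed values).
A = [1.1, 1.0]
B = [1.3, 1.2]

# Population variance of each set (about .0025 each).
var_A = pvariance(A)
var_B = pvariance(B)

# Arithmetic as written in the post: sqrt[(var_A + var_B) / (n_A + n_B)].
combined_sd = sqrt((var_A + var_B) / (len(A) + len(B)))
print(round(combined_sd, 3))
```

The result rounds to 0.035, matching the number quoted above.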

The means are another story. They are different, and in the example I already gave you in the other thread I explained exactly how the means of two data sets might differ while the variances stay the same. In that case the data should NOT be combined as in your first calculation, because the resulting variance is unrealistically large. I'm much more familiar with the example I gave you than I am with the Higgs data, but from my review of the data plots I suspect the Higgs situation is statistically somewhat similar.

I hope this helps.

edit on 201771 by Arbitrageur because: clarification

a reply to: delbertlarson

Try to answer this question: Fundamentally, why is 'higgs concept' necessary? Why can't mass just be mass on its own?

Keep this in mind always: Space must be full of substance/mass for gravity to be possible. What keeps the Earth continuously near the Sun? Spooky action at a distance magic? Or necessarily substance/mass/stuff?

Space-time was just a conceptual tool like x-y axis is a conceptual tool that data can be plotted on. "Look at how convenient it is to plot data on this x-y (and z) axis, that must mean x-y-z (and w) axis really exists as a real thing in/as nature"... well yea, there are your 4 "dimensions".

Consider this: Governments/top research and developers don't want the public/competing private industry interests/competing governments to know the absolute true understanding of fundamental nature, so there is some nonsense thrown in, so that maybe Russia, China, and you will chase nonexistent rabbit holes.

If the Universe was God's Simulation (we do not know if this is the case, but if it was): some effects claimed to be present as 'quantum mechanics' might be expected. In other words, irrational, nonsensical, non classical, non 'physical', 'video game mechanics', 'lack of cause and effect', may be possible, in a Universe/realm created by a God. There could be ulterior motives for people in power to want the public to believe they have proof/evidence that the universe is a simulation, and that this type of quantum mechanics stuff is proof. They could be lying and faking this because they believe the ends justify the means.

If in this universe it is possible to avoid cause and effect, the universe is a high tech intelligent invention.

I have never encountered evidence or proof that the 'physically impossible' (as in, if the universe was real/natural: quantum mechanics claims x, y, z would be a priori eternally impossible) claims of quantum mechanics spouters were actually witnessed, experienced, experimented, understood, and I have done plenty of looking into the literature and arguments (double slit, Bell's theorem, delay experiment etc.).

To summarize: There are certain a priori eternal limits (having to do with the possible nature of possible object/geometry, 'type of substance') on possible physical scenarios that can possibly occur in a real natural physical universe.

Unmediated spooky action at a distance/action without reason/'breaking/escaping' of cause and effect is eternally impossible to occur in a purely real physical natural universe.

Here's the big important kicker!! Which makes the 3rd side of the coin:

It is possible for there to be a purely real natural physical universe: And! For scientists in that universe to observe phenomena which Appear!!!! to break causality! Which appear to be spooky action at a distance. It is possible in a real natural universe, for a scientist to do experiments, to be unsure if they live in a purely real, or God Created "Fake" reality, to make math equations, and maps of physical information and data, and watch reactions and results, and do everything perfectly, and witness time and time again, Particle A affecting particle B at a distance without touching: and go in his notebook, and write: Classical physics is over, it is possible for an object to affect another object at a distance with no substance in between the objects, with no mediation, this is evidence the universe is not purely natural (though the ones here are too bashful to make such steps, because they can't for the life of them imagine why in a purely physical natural reality it would be eternally a priori impossible for absolutely nothing to exist in between two objects, and for one object without anything transferring between the space to the other, to affect it, though I suspect they, I hope they, are playing dumb).

And, it is possible for them to be wrong!!

It is possible for a scientist to say: Absolutely Nothing exists between the Earth and the Sun, on average, maybe a few tiny particles, whatever, certainly not enough to push the earth. Oh yeah, particle pairs always out of nothing, yeah vacuum, but this isn't enough to push the earth..

Well yeah, Space time pushes the Earth and Sun! But I am not going to think about what spacetime might be or be made of, just the word space time, it's just words, words push the sun and earth, the words space time push the sun and earth. No, what do the words represent in physical reality, how much material is in the vacuum, how much substance/mass/material/stuff/energy (besides radiation from sun) is in-between and immediately surrounding the sun and earth?

It must be an amount, a quantity, of physical material enough to keep the Earth near the Sun... to keep all the planets near the sun (near, as in, continuously in a system)

So in conclusion: Either the universe is purely/naturally/physically real (which means in certain parameters it is limited in the possible types of behavior of the substance within it/of it/as it)

(ultimately reality ultimately must be real... but it may be possible in the real reality to create fake realities, which is what is being questioned)

Or the universe is a technological creation (I suppose it also could be fully natural, physical, but still organized by God somehow...dunno), which still ultimately would have limitations, but if you are familiar with video games, you can make 'unrealistic physical occurrences, by simulating a real reality',

And it is possible: in a simulated universe, for scientists in it, to be unable to determine if they are in a fake universe or not.

And it is possible: in a real universe, for scientists to perform experiments which appear to suggest that there might be evidence that the universe is a simulation: but these scientists being wrong, because not having the full present data of reality: i.e. optical illusions: errors of model/math/experiment concept

Please quote every line I have written in this post and respond to each one. It is possible that any errors in understanding or conceptualizing are errors about the most absolutely fundamental and foundational things.
