Damn, my mileage has tanked in the last two days. The weather suddenly got hot and humid.

I have been going to a bargain gas station with low prices. But the fuel seemed ok from there a week ago. Took my car into the shop. Nothing wrong with the engine. Got plenty of oil and fluids.

Trying to figure out why my car's gas mileage has suddenly gone kaput. Driving disposition has been the same as before this started happening, so I'm not jamming on the gas pedal or anything.

edit on 27-6-2017 by BASSPLYR because: (no reason given)

a reply to: BASSPLYR

You didn't mention tire pressure. Low tire pressure can cause bad gas mileage. It doesn't have to be a complete flat; maybe you picked up a nail and have a slow leak.

Even without a pressure gauge you can put your hand on your tires after driving at highway speeds and see if any are hotter than the others. Those might have lower pressure, and generating all that heat is where your extra fuel is going. It's happened to me.

a reply to: DanteGaland

Headlights don't use anywhere near the power of air conditioning, but yes theoretically anything that uses power including headlights can affect gas mileage. I never felt like I had that much discretion using headlights, maybe just for a few minutes where they are of marginal benefit at dusk or dawn but generally you either need them, or you don't, so they are more a safety consideration.

A car stereo doesn't use much power if it's stock, but if you have a custom install with a 1000W amplifier and subwoofers that will shatter glass as you drive through the neighborhood, that could use more power than headlights.

Go easy on the custom stereo BASSPLYR.

a reply to: Arbitrageur

To the prior poster: nope, not using headlights. This is during the daytime.

Arbitrageur, good looking out with the tire thing. One of my left side tires has seemingly normal pressure, yet I still hear the sound of something going clack clack clack as I drive. I've searched the tires and can't find anything. Perplexing.

originally posted by: zundier

Have you ever measured the Speed of Light? If so, did you measure again?

Righto, a question we can get started on. For information, the question was not ignored. I don't check ATS every day, and I certainly don't sit hitting refresh over and over. I don't go checking the log-on list and have no idea when a reply will come.

From here you can see a few measurements that have been made.

math.ucr.edu...

That's the first page that popped up in Google; the information is from a reference book.

Never measuring the same value twice... well, two identical results would be quite rare thanks to experimental uncertainties. Typically a measurement made by a lab will do well to get within a few % of the accepted value, going for specialist equipment/methods you might get a few 10ths maybe 100ths of % but two measurements are unlikely to be identical, like, ever. This is the experimental method. You will always get statistical and systematic uncertainties.

Maybe you need to elaborate more?

Difference between 0 and 1

It depends on your counting system. In decimals there are indeed an infinite number of numbers between 0 and 1, but like I said, it depends on the counting system. If you add it all together via integration you should get... 1.

If you are talking about a Boolean true / false, then by definition the system cannot be in a state other than 0 or 1

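If you are talking about computing, then the smallest non-zero positive value in single-precision floating point is 2**(-149), or about 1.4e-45 (if I am not mistaken). Floats aren't evenly spaced, though, so you can't just divide 1 by that step: there are about 1.07 billion (1,065,353,215) distinct single-precision values strictly between 0 and 1.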
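
The single-precision case can be checked directly. Here is a minimal sketch in Python, assuming IEEE 754 32-bit floats (the helper names `float32_bits` and `bits_to_float32` are made up for this illustration). Positive floats sort in the same order as their bit patterns, so counting the values between 0 and 1 comes down to subtracting bit patterns.

```python
import struct

def float32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision bit pattern of x as an unsigned int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_to_float32(bits: int) -> float:
    """Return the single-precision value whose bit pattern is `bits`."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]

# Bit pattern 1 is the smallest positive (subnormal) float32, i.e. 2**-149.
smallest_positive = bits_to_float32(1)

# Every bit pattern strictly between those of 0.0 and 1.0 is a distinct
# float32 value strictly between 0 and 1.
count_between_0_and_1 = float32_bits(1.0) - float32_bits(0.0) - 1

print("smallest positive float32:", smallest_positive)
print("float32 values strictly between 0 and 1:", count_between_0_and_1)
```

The count comes out at roughly 1.07 billion rather than 1/1.4e-45, because floats are packed more densely the closer you get to zero.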

Again it is about how you define the question

Forgive me for my bad English. Of course we're certain about lots of things in life. I'm just curious about your opinion, as a professional scientist, regarding the possibility of an ultimate truth. Surely we're zillions away from it, from Science. But is it achievable?

I'd just like to add: I'm no one, not a scientist, not even close. Just curious, and I love science. I don't mean to be aggressive, sorry if my English writing makes it look that way.

Nothing to apologize for; language barriers can be frustrating and do unfortunately lead to misunderstandings. Your question is one of a philosophical nature. Can we know everything? My answer is that I really don't know. It is the case that at an empirical and theoretical level we do know quite a lot of phenomenology; exactly how it all works at a fundamental level is a little bit less filled in.

Ultimately there are lots of open questions. Some of them are quite obvious ones, but understanding them at a fundamental level requires a hell of a lot of work. So for a non-expert, it is sometimes quite funny to say "Well obviously the universe exists, so why are you even bothering to make sure?" And what I am referring to here is the matter/anti-matter asymmetry of the observed universe.

We look at the universe and only see matter... yet... if the big bang was true, we would expect (roughly) equal anti-matter. It would all cancel and we would be living (or not living) in a universe of energy and no mass.

My answer: I am not sure we will get to a point where we know everything. This is mostly because the mix of known-knowns, known-unknowns and unknown-unknowns could change such that we are left with only known-knowns and unknown-unknowns, and so we interpret it as knowing it all. But within our lifetimes, I doubt we would touch it. In terms of a fundamental theory of physics, we are trying to get there, but it is still a bit of a ways off at the moment.

a reply to: Arbitrageur

These are really two quite different questions. Traveling in a fourth space dimension is one thing, and going to another universe is another. Let's do the easy one first. If you think of the universe as everything currently visible, out to the edge of the Hubble expansion, and assume there is another one somewhere, several times farther than the farthest point in our universe, but similarly expanding, getting to it would be about as difficult as getting to the edge of the expansion. The edge of the universe is expanding at near c, so you would need inordinate amounts of energy to get there, but once there you could sail on out to Universe-2 just by waiting, assuming you aimed correctly.

If there is another space dimension, traveling any distance in it would break the physics laws of our three-dimensional slice of the total package. That would explain why we don't know of any such dimension, and there is nothing in known physics which would indicate how to do it. If someone finds a new force which does relate to a fourth space dimension, it might be easy to do, with little energy, or it might be the opposite. There is no clue available as to where to find such a force, as physicists have managed to explain everything using the existing ones. Strong and weak forces were discovered only recently, however, so perhaps you should wait a couple of decades and ask your question again. Even electricity was recognized only a century or two ago. You might be doing the analog of asking a caveman about neutrinos.

a reply to: BASSPLYR

The energy in the gas is the same, so the question is, is your car getting the energy out of the gas or is it wasting it and not using it for moving. The first would be happening if the compression is failing in one of your cylinders, or if the timing is off. I would hope the garage mechanic would check both of these. The second would be happening if you are wasting energy in the transmission or by your brakes dragging. These wouldn't be found by an engine check. Have your mechanic make sure these four things are checked well.

Conspiracy-wise, maybe the gas station has jimmied their pumps to indicate more is coming out than actually is. Very illegal and very unlikely.

Your whole answer is good. Regarding that list of speed of light measurements, there's an unsolved mystery in an experiment not listed.

originally posted by: ErosA433

From here you can see a few measurements that have been made.

math.ucr.edu...

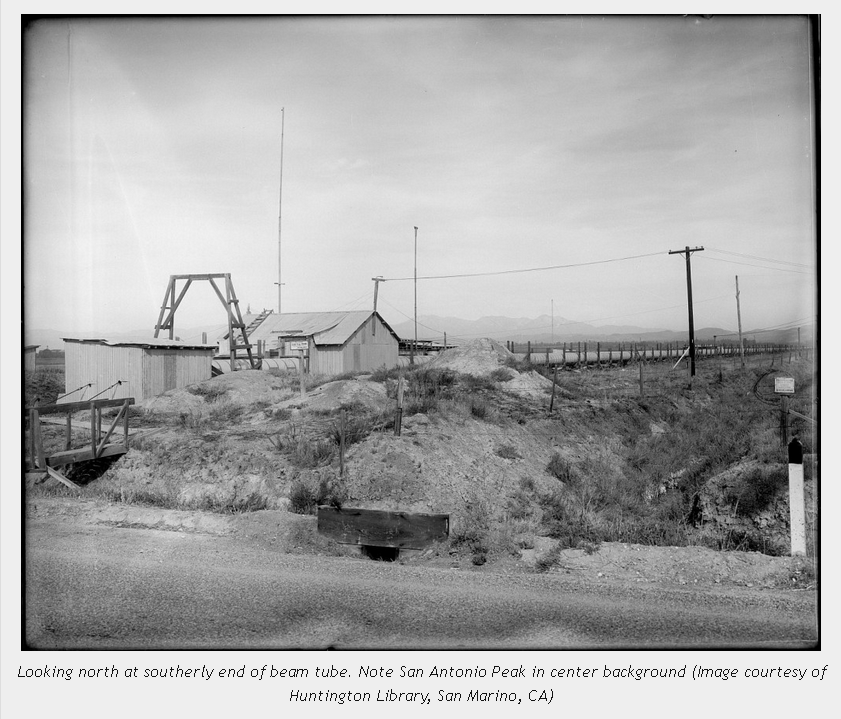

See the 1926 measurement by Albert Michelson on that list? A few years later he attempted to make a more accurate measurement. He had grant money available totaling what would be in today's money about a million dollars, and built a mile long pipeline in which he attempted to evacuate most of the air to make a better measurement than the 1926 measurement between mountaintops, but for reasons which are still unknown the experiment failed to provide a better measurement. Some believe that had Michelson not had a stroke and died before he got all the "bugs" out that he would have eventually done so and the experiment would have been a success. His colleagues attempted to do that but they didn't have Michelson's gift as an experimental genius. Here's a picture of the pipeline that was built for the later experiment, not a project that was easy in any way:

Irvine Ranch measurements (1929 – 1933)

One thing that really helped in more recent times with speed of light measurements was the development of lasers in the late 1950s, which weren't available in Michelson's time.

We don't know if the universe has an edge or not, it may not. Even if it does have an edge it is almost certainly not expanding at near c. The edge of the observable universe is expanding at about 3 times c, and if there's an edge to the universe it's got to be beyond the observable universe and therefore likely to be expanding at not less than 3c. Think about how the edge of the observable universe could be 46.5 billion light years away. If it was only receding at c and the universe is only 13.8 billion years old, how could it be more than 13.8 billion light years away?
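
The "about 3 times c" figure can be roughly checked with Hubble's law, v = H0 × d. This is a minimal sketch, assuming a Hubble constant of about 68 km/s/Mpc (a value not given in this thread) and using the 46.5 billion light year distance mentioned above. The function name `recession_velocity_in_c` is just an illustrative label.

```python
# Rough check of the recession velocity at the edge of the observable
# universe using Hubble's law, v = H0 * d (today's distance, today's H0).

C_KM_S = 299_792.458      # speed of light in km/s
LY_PER_MPC = 3.2616e6     # light years per megaparsec (approximate)
H0_KM_S_MPC = 68.0        # ASSUMED Hubble constant, not from the thread

def recession_velocity_in_c(distance_gly: float, h0: float = H0_KM_S_MPC) -> float:
    """Recession velocity, in units of c, at a distance given in billions of light years."""
    distance_mpc = distance_gly * 1e9 / LY_PER_MPC
    return h0 * distance_mpc / C_KM_S

# Distance at which the recession velocity equals c (the Hubble sphere),
# in billions of light years.
hubble_radius_gly = (C_KM_S / H0_KM_S_MPC) * LY_PER_MPC / 1e9

edge_velocity = recession_velocity_in_c(46.5)

print(f"Hubble radius: about {hubble_radius_gly:.1f} billion light years")
print(f"Recession velocity at 46.5 billion light years: about {edge_velocity:.1f} c")
```

With these assumed inputs the result lands a little above 3c, and anything farther away than the Hubble radius is receding faster than c.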

originally posted by: StanFL

a reply to: Arbitrageur

The edge of the universe is expanding at near c, so you would need inordinate amounts of energy to get there, but once there you could sail on out to Universe-2 just by waiting, assuming you aimed correctly.

The wormhole idea I mentioned would likely require exotic matter, and I'd have to see that to believe it. With that caveat, while I'm far less optimistic about traversable wormholes than the author of this paper, I don't completely reject his ideas if he's right that exotic matter is not an impossibility. Exotic matter would also be required for a "warp drive", also discussed in that paper.

He can discuss exotic matter (and its applications), but making it is another story. I'll have to see it to believe it.

The methods of producing effective exotic matter (EM) for a traversable wormhole (TW) are discussed. Also, the approaches of less necessity of TWs to EM are considered. The result is, TW and similar structures; i.e., warp drive (WD) and Krasnikov tube are not just theoretical subjects for teaching general relativity (GR) or objects only an advanced civilization would be able to manufacture anymore, but a quite reachable challenge for current technology

edit on 2017628 by Arbitrageur because: clarification

originally posted by: Arbitrageur

The edge of the observable universe is expanding at about 3 times c, and if there's an edge to the universe it's got to be beyond the observable universe and therefore likely to be expanding at not less than 3c. Think about how the edge of the observable universe could be 46.5 billion light years away. If it was only receding at c and the universe is only 13.8 billion years old, how could it be more than 13.8 billion light years away?

By the special theory nothing can go faster than c. We work very hard with accelerators to speed up particles, and the limit is always c, which we can approach but never exceed. So the claims made above must rely on some theorizing that we can't actually test in our terrestrial labs, which for me puts this more in the realm of speculation than science. Am I missing something?

It sounds like you meant to reply to me instead of Stan. Putting the best vacuum in a pipe he could was the best Michelson could do in 1930, because there was no "vacuum of space" available then. The space age didn't even begin until 1957.

originally posted by: Cauliflower

a reply to: StanFL

Why didn't Michelson choose a metric pipe length for the interferometer, or better than that the vacuum of space?

They had been working on the meter standard pre US civil war.

Don't get me started on the metric system, it's a huge embarrassment that the US still hasn't converted even though it should have happened a long time ago, in the 1970s anyway. Even more embarrassing was the crash of the Mars climate orbiter which resulted from confusion between the English and Metric systems.

All hope is not lost though. I recently looked at a bottle of Pepsi in the US, and it's labeled "2L(2.1Qt.)", so people are buying soda in liters now. It's a start.

I think you are missing something. Nothing travels faster than c locally, but what we actually measure in terrestrial labs is cosmological redshift and we now know to a confidence level of 23 sigma that it's not Doppler shift as was thought when the redshifts were first discovered.

originally posted by: delbertlarson

So the claims made above must rely on some theorizing that we can't actually test in our terrestrial labs, which for me puts this more in the realm of speculation than science. Am I missing something?

Expanding Confusion: common misconceptions of cosmological horizons and the superluminal expansion of the Universe

We use standard general relativity to illustrate and clarify several common misconceptions about the expansion of the Universe. To show the abundance of these misconceptions we cite numerous misleading, or easily misinterpreted, statements in the literature. In the context of the new standard Lambda-CDM cosmology we point out confusions regarding the particle horizon, the event horizon, the "observable universe" and the Hubble sphere (distance at which recession velocity = c). We show that we can observe galaxies that have, and always have had, recession velocities greater than the speed of light. We explain why this does not violate special relativity and we link these concepts to observational tests. Attempts to restrict recession velocities to less than the speed of light require a special relativistic interpretation of cosmological redshifts. We analyze apparent magnitudes of supernovae and observationally rule out the special relativistic Doppler interpretation of cosmological redshifts at a confidence level of 23 sigma.

edit on 2017628 by Arbitrageur because: clarification

Thanks for pointing me to the Michelson experiment, Arbitrageur. I was unaware of it. I will do some reading.

Exactly as you say though, vacuum back in his time wouldn't have been that good at all compared to the vacuum of space. He would likely have been limited to drag pumps, or maybe something more exotic but still not awesome.

a reply to: Arbitrageur

This I believe:

originally posted by: ErosA433

Typically a measurement made by a lab will do well to get within a few % of the accepted value, going for specialist equipment/methods you might get a few 10ths maybe 100ths of % but two measurements are unlikely to be identical, like, ever. This is the experimental method. You will always get statistical and systematic uncertainties.

But 23 sigma? C'mon man!

I know the contexts are different. But the point is still - how can you measure anything to 23 sigma?

Beyond that, here is my problem with astrophysics - we can't vary parameters and do controlled tests and then see if the results of our controlled changes agree experimentally with our theories. We do have a lot of astrophysical data, but we really can't change anything and see the effects of our changes, and I've always believed this leaves us able to tell nice stories, but how can we tell if they are true? We can't measure the distance to the stars, don't know inclinations or elliptical natures of their orbits (for binaries), can't measure their masses, and on and on. I know that a lot of smart people have models they work on, and I am sure the math holds against various assumptions they make. But you can't really say that since star A is similar to B, but at a different (unknown, really) distance from us that it is the same as actually moving that same star A to position B. We just get what nature has. And then we have the issue that the light itself travels billions of light years through space, and over billions of years in time, to get to us. What do we assume about how it got here? It just seems like there's a lot of assuming going on to claim 23 sigma.

a reply to: ErosA433

Had he been able to seal the pipe I suppose the pump could indeed have been the limiting factor. He requested bids from pipe suppliers to seal the pipe but nobody would touch it. The contractor who supplied the pipe said he was on his own for sealing it, and apparently he learned that it needed to be sealed better than he thought. There were other problems besides that, including one I might not have guessed: the moisture content of the soil possibly causing the soil to expand and contract, affecting the position of the supports which rested on it. I don't know if that was ever confirmed, though, and it doesn't really explain the bias problem as much as some extra variability:

www.otherhand.org...

The results were not as satisfactory as the San Antonio experiments. Much of it was due to the vacuum, or lack thereof. Michelson originally thought a vacuum of 2″ (50 mm of Mercury) would be adequate for good seeing. However it was found that to get sharp images in the tube, vacuum levels had to be brought down to 1 to 2 mm of Mercury. Given the many pipe joints, method of sealing and power of the pumps, this was almost impossible to achieve.

Beyond the vacuum, there were other problems. Heating of the residual air in the beam by the Sun caused the image to vanish, thus most measurements had to be taken after dark. Best measuring seemed to be when the tube was enveloped in the seasonal thick fog that occurs in the area. And there were strange, unexplained cyclic drifts in the measurements. It was thought for a while it could be a tidal influence, but correlation was weak. Some of it was put down to the clay like soil the tube was anchored to, which shifted due to changes in moisture content. The tube was also placed parallel to a drainage ditch, in which varying flows of water occurred.

Anyway the point was that it was darn hard to accurately measure the speed of light in a vacuum in 1930.

Again I think you're missing something, because that quote from Eros doesn't imply that 23 sigma can't be achieved with a not so good measurement system if your measurements are far enough off from the hypothesized values.

originally posted by: delbertlarson

But 23 sigma? C'mon man!

I know the contexts are different. But the point is still - how can you measure anything to 23 sigma?

Take for example a hypothesis that you're testing which, if true, will result in measurements of 9.0 on average. This is just to illustrate the statistics, so I'm focusing on that and omitting any units etc. for this example. Let's say your measurements' actual average is 3.0 with a standard deviation of 0.03, so plus or minus 3 sigma will be plus or minus 0.09, and 99.73% of the data will fall between 2.91 and 3.09. This is a range of plus or minus 3%, so not such a great measurement system per Eros's explanation where specialist methods "might get a few 10ths maybe 100ths of %".

So, how many standard deviations are between 3.0 and 9.0 when the standard deviation is 0.03?

That's 6/0.03=200 standard deviations. Now before you say the difference between 3 and 9 is ridiculous, no it's actually a relevant difference since 9 is 300% as great as 3, and while it doesn't translate exactly in the math, if the expansion rate of the edge of the observable universe is ~3c and since the limit under special relativity is 1c then again we have 3c being 300% as large as 1c, so large sigmas might be attained even with measurements which aren't all that precise. I just demonstrated nearly 200 sigma with a not so great measurement system giving us plus or minus 3 sigma of plus or minus 3%. Do you see this now?

Granted there are assumptions, and sometimes they turn out to be wrong or not quite right. The "standard candles" astronomers use turned out to not be quite as standard as they thought, so the assumption they were standard was somewhat flawed (though not by a lot). However, with further research astronomers determined that by watching how quickly the Type Ia light curve decays in the 15 days after maximum light in the B band, they could account for small differences in luminosity due to mass difference that were previously not considered. It gets quite detailed and complicated, so generic hand-waving arguments are not very persuasive. If you have genuine concerns about specifics in the approaches used by astronomers and can point out something they've overlooked, that might be helpful, but it seems to me they are looking at things quite carefully, which is how they discovered their "standard candles" weren't completely standard.

And then we have the issue that the light itself travels billions of light years through space, and over billions of years in time, to get to us. What do we assume about how it got here? It just seems like there's a lot of assuming going on to claim 23 sigma.

Type Ia Supernova

Originally thought to be standard candles where every SNIa had the same peak brightness, it has been shown that this is close to the truth, but not quite. SNIa exhibit brightnesses at maximum that range from about +1.5 to -1.5 magnitudes around a typical SNIa. It has also been shown that the over or under luminosity of these objects is correlated to how quickly the Type Ia light curve decays in the 15 days after maximum light in the B band. This is known as the luminosity – decline rate relation and is the underlying concept which turns SNIa into one of the best distance indicators available to astronomers.

edit on 2017628 by Arbitrageur because: clarification

a reply to: Arbitrageur

Thank you for your response.

I had the assumption that you would answer in that manner, so you could perceive the "dilemma" that I mean. I can't find a better word in English, so I apologize if my explanation was lacking.

What I meant was that there's an increasing uncertainty about the scientific method among scientists, which somehow makes them fear reaching a dead-end road. What I mean by a dead end doesn't imply that current science will stop being effective and become obsolete.

In my opinion, also shared by some fellow scientists and a couple of astrophysicists in my family, it starts from the feeling that:

Seeking answers with the current method will give you answers eternally, in an unsatisfying loop. This paradoxical path appears to have brought some kind of frustration to scientists, from the possibility of "locked" uncertain explanations that would bring boredom and disbelief in the long run.

So the dilemma, or however you would translate it, rests on the possibility that you would (or could) find everlasting answers for everlasting new puzzles. But since there's no other way to do it besides using the brain or machinery to apply logical / rational results, this brings up one of my questions that was ignored and goes into your preference of Pi Loop from "0 to 1" Arrow.

I disagree with your comparison of a finite water molecule to the 0 and 1, used to say that something isn't infinite only because it's possible. The fact that density can vary within random values from the exact number of molecules that you pointed out doesn't prove that an indestructible machine could start counting from 0 and never reach 1.

Therefore, the suggestion of this dilemma is that math - the fundamental tool of physics - is explicitly contrary to its very essential objective - that is, logic. Since science is mostly based on it, and math itself has this irrationality embedded within it, I wonder if we're missing an additional tool beyond mathematics?

edit on 29-6-2017 by zundier because: Gramatical fix

So, my back-of-the-napkin calculations tell me that at a distance of 780 kpc, the space between the Milky Way and Andromeda galaxies is expanding at roughly 52.38 km/s. The relative velocity of the two galaxies moving toward each other is 110 km/s. So they are overcoming expansion at a 2.1 to 1 rate, which is constantly increasing as the distance between them decreases.
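For anyone who wants to check those napkin numbers, here is a minimal Python sketch of the same arithmetic. It takes the post's approach at face value (plain Hubble law, v = H0 * d). The Hubble constant of 67.15 km/s/Mpc is an assumption, back-solved from the 52.38 km/s figure because the post doesn't state which value was used, and the function names are made up for illustration.

```python
# Assumption: H0 is not given in the post; 67.15 km/s/Mpc reproduces
# the quoted 52.38 km/s at 780 kpc.
H0_KM_S_PER_MPC = 67.15


def hubble_recession_km_s(distance_kpc, h0=H0_KM_S_PER_MPC):
    """Hubble-law expansion speed v = H0 * d, with d converted from kpc to Mpc."""
    return h0 * (distance_kpc / 1000.0)


def approach_to_expansion_ratio(approach_km_s, distance_kpc, h0=H0_KM_S_PER_MPC):
    """How many times faster the galaxies approach than the space between them expands."""
    return approach_km_s / hubble_recession_km_s(distance_kpc, h0)


if __name__ == "__main__":
    v_exp = hubble_recession_km_s(780.0)
    ratio = approach_to_expansion_ratio(110.0, 780.0)
    print(f"expansion: {v_exp:.2f} km/s, approach/expansion: {ratio:.1f} to 1")
```

Because the expansion term scales with distance, feeding in a smaller distance with the same approach speed gives a larger ratio, which matches the point about the ratio increasing as the galaxies close in.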

Where I get hung up is the acceleration of expansion, and trying to pin down an accurate rate for it. I'm working on a personal project involving an Excel spreadsheet calculator function for estimating cosmic and local distances corrected for expansion vs time. I'm also considering a function for approximating expected blueshift or redshift, and strength of gravitational attraction vs distance and time.

I'm not asking for programming tips or cell equations. Just good references to find the values I need to plug into my calculations, or the values themselves, if you know them offhand. Such as, rate of increase of expansion/time, determination of Doppler shift/velocity and vs/expansion, and a standardized format for expressing gravitational force that can scale from planetary to cosmic values.

I'm not a terribly good researcher, or I wouldn't bother asking this in here.
